An Automatic Building Extraction Method Fused with Geometry Perception and Image Understanding

An image understanding and automatic extraction technology, applied in fields such as biological neural network models, instruments, and computing. It addresses three problems: classification results that lack detailed information, growth in network computation and parameter count, and inadequate mining of the spatial geometric information in LiDAR data. The effect is higher extraction accuracy and efficient automatic building extraction.

Embodiment Construction

[0037] To help those of ordinary skill in the art understand and implement the present invention, it is further described below with reference to the accompanying drawings and specific embodiments.

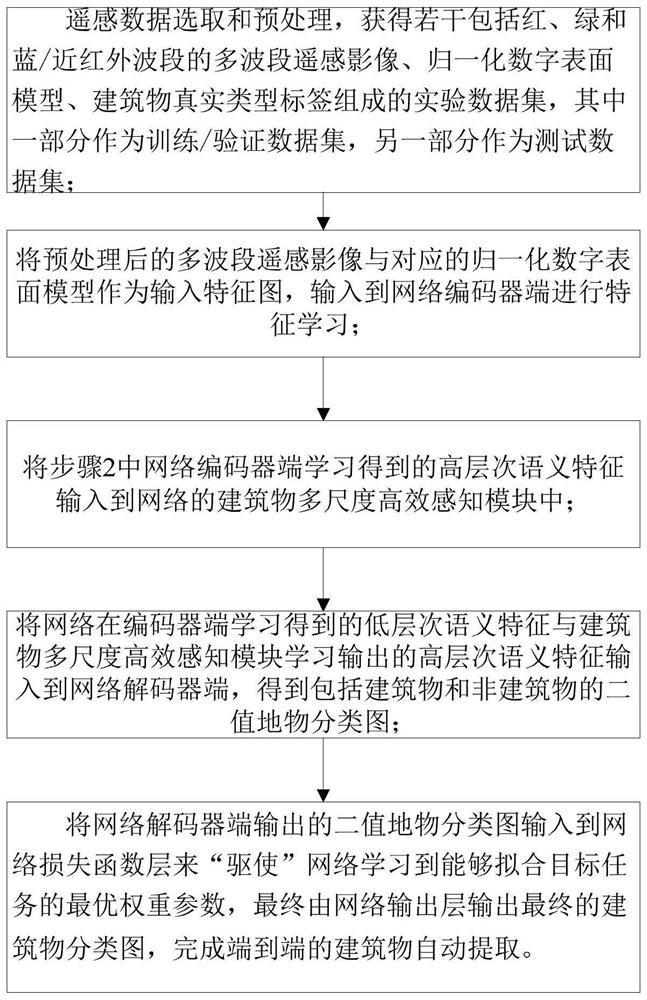

[0038] Referring to Figure 1, the present invention provides an automatic building extraction method that fuses geometric perception and image understanding, comprising the following steps:

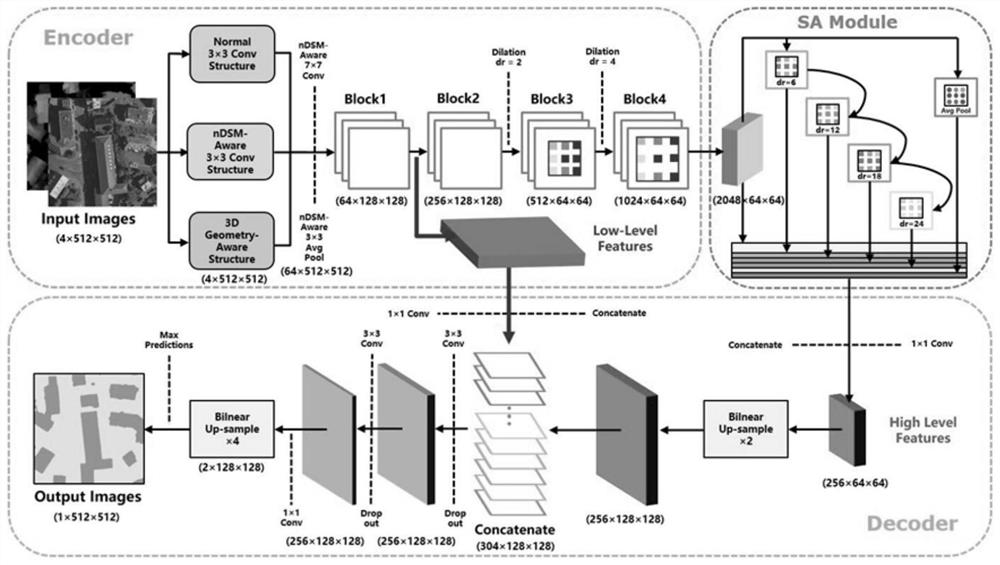

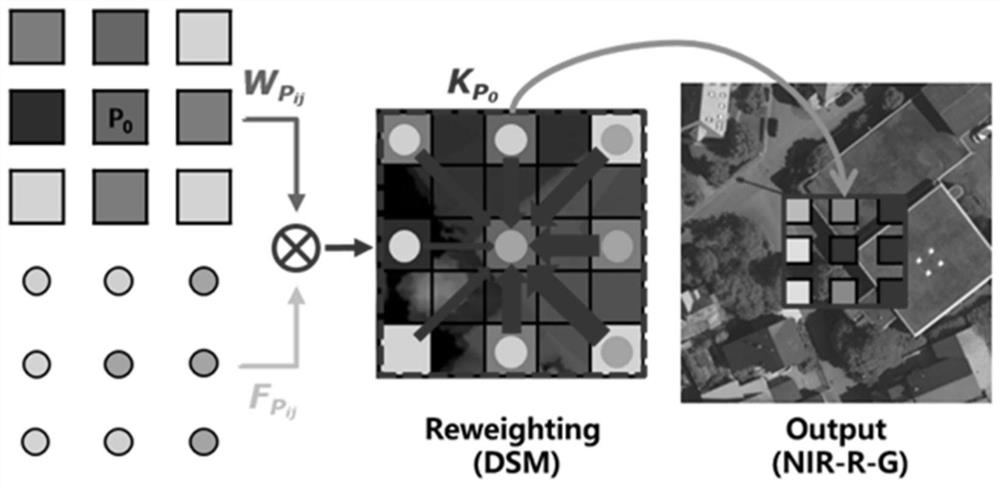

[0039] Step 1: Select and preprocess remote sensing data to obtain an experimental data set consisting of a first data set, a second data set, a normalized digital surface model, and ground-truth building class labels. The first data set includes several multi-band remote sensing images with red, green, and blue bands; the second data set includes several multi-band remote sensing images with red, green, and near-infrared bands. Part of the first data set is used as the training and validation data sets...
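The data set composition in Step 1 can be illustrated with a minimal Python sketch. It is not the patented implementation: the `Sample` container, the band-name tuples and their order, the `split_train_val` helper, and the 20% validation fraction are all hypothetical choices made for illustration, since the text does not specify a storage layout or a split ratio.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

# Band names follow Step 1; the order within each tuple is an assumption.
RGB = ("red", "green", "blue")
RG_NIR = ("red", "green", "near_infrared")


@dataclass
class Sample:
    """One tile: a multi-band image, its nDSM, and its building label (hypothetical layout)."""
    image: List[List[List[float]]]  # rows x cols x bands
    bands: Tuple[str, ...]          # RGB for the first data set, RG_NIR for the second
    ndsm: List[List[float]]         # rows x cols, normalized digital surface model
    label: List[List[int]]          # rows x cols, 1 = building, 0 = background

    def __post_init__(self):
        # All rasters of one sample must cover the same pixel grid.
        rows, cols = len(self.label), len(self.label[0])
        if (len(self.ndsm), len(self.ndsm[0])) != (rows, cols):
            raise ValueError("nDSM and label sizes differ")
        if (len(self.image), len(self.image[0])) != (rows, cols):
            raise ValueError("image and label sizes differ")
        if any(len(px) != len(self.bands) for row in self.image for px in row):
            raise ValueError("pixel band count does not match band names")


def split_train_val(samples, val_fraction=0.2, seed=0):
    """Shuffle samples and split them; val_fraction is an assumed value."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def make_dummy_sample(bands, rows=2, cols=2):
    """Build an all-zero placeholder sample for demonstration only."""
    image = [[[0.0] * len(bands) for _ in range(cols)] for _ in range(rows)]
    ndsm = [[0.0] * cols for _ in range(rows)]
    label = [[0] * cols for _ in range(rows)]
    return Sample(image, bands, ndsm, label)


if __name__ == "__main__":
    first_data_set = [make_dummy_sample(RGB) for _ in range(10)]
    train, val = split_train_val(first_data_set)
    print(len(train), len(val))
```

Keeping the band names on each sample makes it possible to tell images from the first and second data sets apart without relying on file naming.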
