Multi-modal image fusion method based on convolution analysis operator

A multi-modal image fusion technology, applied in the field of image fusion, which addresses the problem that an image of a single modality cannot fully express the information of a scene, and which avoids artifacts and over-fusion while improving reconstruction quality.



Problems solved by technology

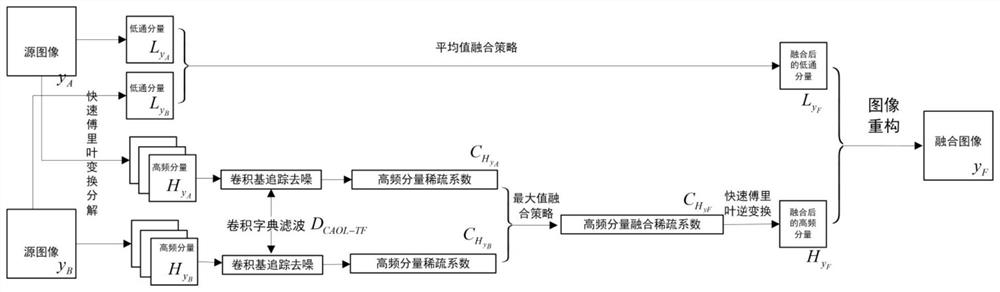

Method used


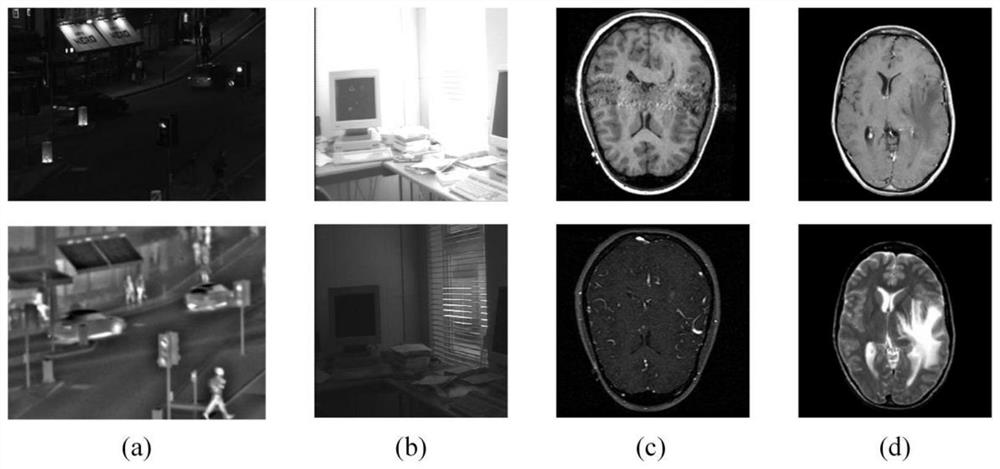

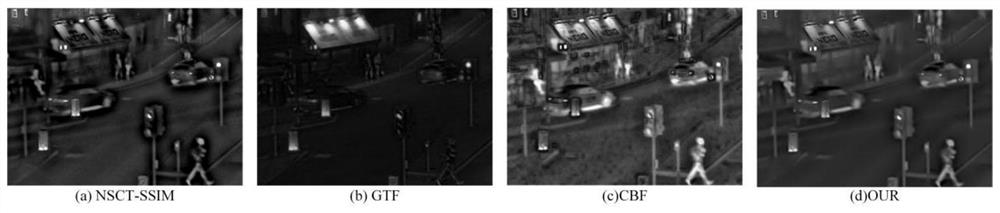

Examples

Embodiment Construction

[0032] To make the objectives, technical solutions and advantages of the present invention clearer, the present invention is described in further detail below with reference to the accompanying drawings and embodiments.

[0033] The present invention uses the Convolutional Analysis Operator Learning (CAOL) framework proposed by I. Y. Chun, with an orthogonality constraint that enforces tight-frame (TF) filters from a convolutional perspective (abbreviated as CAOL-TF). The USC-SIPI image dataset (50 standard images of 512×512 pixels) is used to train this dictionary learning framework and obtain a compact and diverse set of dictionary filters; the filter bank obtained in the present invention has size 11×11×100. The dictionary learning problem is expressed as follows.

[0034]

[0035]

[0036] $D := [d_1, \ldots, d_K]$ (3)

[0037] where represents the convolution operator; represents a set of convolution kernels; α is a threshold parameter that controls feature sparsity; Repres...
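The formulation above can be illustrated with a toy alternating-minimization sketch. The exact equations are not shown in this section, so the sketch assumes the objective form used in Chun's CAOL work: the sum over training images x_l and filters d_k of ½‖d_k ⊛ x_l − z_{l,k}‖² + α‖z_{l,k}‖₀, subject to D Dᵀ = I/R (R = number of filter taps), with circular boundary conditions. All helper names (`circ_conv2`, `filters_to_matrix`, `project_tight_frame`, `sparse_code_update`, `filter_update`, `objective`) are hypothetical. Under this assumption, the sparse codes are hard thresholds of the filter responses at √(2α), and the filter update is an orthogonal Procrustes problem solved with one SVD. The constraint D Dᵀ = I/R is only feasible when K ≥ R, so the toy uses 3×3 filters with K = 9 rather than the 11×11×100 bank described above.

```python
import numpy as np


def circ_conv2(d, x):
    """Circular 2-D convolution: (d ⊛ x)[n] = sum_r d[r] * x[n - r]."""
    out = np.zeros(x.shape, dtype=float)
    h, w = d.shape
    for a in range(h):
        for b in range(w):
            # np.roll(x, (a, b))[n] equals x[n - (a, b)] with periodic wrap-around
            out += d[a, b] * np.roll(x, shift=(a, b), axis=(0, 1))
    return out


def filters_to_matrix(filters):
    """Stack a (K, h, w) filter bank as the columns of D = [d_1, ..., d_K] (R x K, R = h*w)."""
    K, h, w = filters.shape
    return filters.reshape(K, h * w).T


def project_tight_frame(B):
    """Maximize trace(D^T B) (equivalently minimize ||D - B||_F) s.t. D D^T = I / R."""
    R, K = B.shape
    assert K >= R, "D D^T = I/R is only feasible with at least R filters"
    U, _, Vt = np.linalg.svd(B, full_matrices=False)
    return (U @ Vt) / np.sqrt(R)


def sparse_code_update(D, images, shape, alpha):
    """Exact z-step (assumed l0 objective): hard-threshold d_k ⊛ x_l at sqrt(2*alpha)."""
    thr = np.sqrt(2.0 * alpha)
    Z = []
    for x in images:
        responses = [circ_conv2(D[:, k].reshape(shape), x) for k in range(D.shape[1])]
        Z.append([np.where(np.abs(y) > thr, y, 0.0) for y in responses])
    return Z


def filter_update(images, Z, shape):
    """Exact D-step: under D D^T = I/R, sum_k ||d_k ⊛ x||^2 = ||x||^2 is constant, so the
    problem reduces to maximizing trace(D^T B) with B[r, k] = sum_l <x_l[. - r], z_{l,k}>."""
    h, w = shape
    K = len(Z[0])
    B = np.zeros((h * w, K))
    for x, zs in zip(images, Z):
        for a in range(h):
            for b in range(w):
                shifted = np.roll(x, shift=(a, b), axis=(0, 1))
                for k in range(K):
                    B[a * w + b, k] += np.sum(shifted * zs[k])
    return project_tight_frame(B)


def objective(D, images, Z, shape, alpha):
    """sum_{l,k} 0.5 * ||d_k ⊛ x_l - z_{l,k}||^2 + alpha * ||z_{l,k}||_0"""
    total = 0.0
    for x, zs in zip(images, Z):
        for k in range(D.shape[1]):
            res = circ_conv2(D[:, k].reshape(shape), x) - zs[k]
            total += 0.5 * np.sum(res ** 2) + alpha * np.count_nonzero(zs[k])
    return total


# Toy run with hypothetical sizes: 3x3 filters and K = R = 9 so the constraint is feasible.
rng = np.random.default_rng(0)
shape, alpha = (3, 3), 0.05
images = [rng.standard_normal((16, 16)) for _ in range(2)]
D = project_tight_frame(filters_to_matrix(rng.standard_normal((9, 3, 3))))
history = []
for it in range(5):
    Z = sparse_code_update(D, images, shape, alpha)
    D = filter_update(images, Z, shape)
    history.append(objective(D, images, Z, shape, alpha))
    print(it, history[-1])
```

Because each step exactly minimizes the assumed objective over one block of variables, the printed objective should not increase across iterations. The constraint D Dᵀ = I/R makes the filter bank a tight frame: with circular boundaries, Σ_k ‖d_k ⊛ x‖² = ‖x‖² for any image x, which is why the filter update reduces to a linear objective with a closed-form SVD solution.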
