Action recognition model training method, and action recognition method and device

A technology for action recognition and model training, applied in character and pattern recognition, acquisition/recognition of facial features, instruments, etc. It addresses the problems of being unable to obtain recognition results and of limited expression-capture accuracy and node selection, and it achieves good recognition accuracy, an increased amount of effective data, and reduced dependence.

Embodiment Construction

[0079] In order to make the above objects, features and advantages of the present invention more comprehensible, specific embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings.

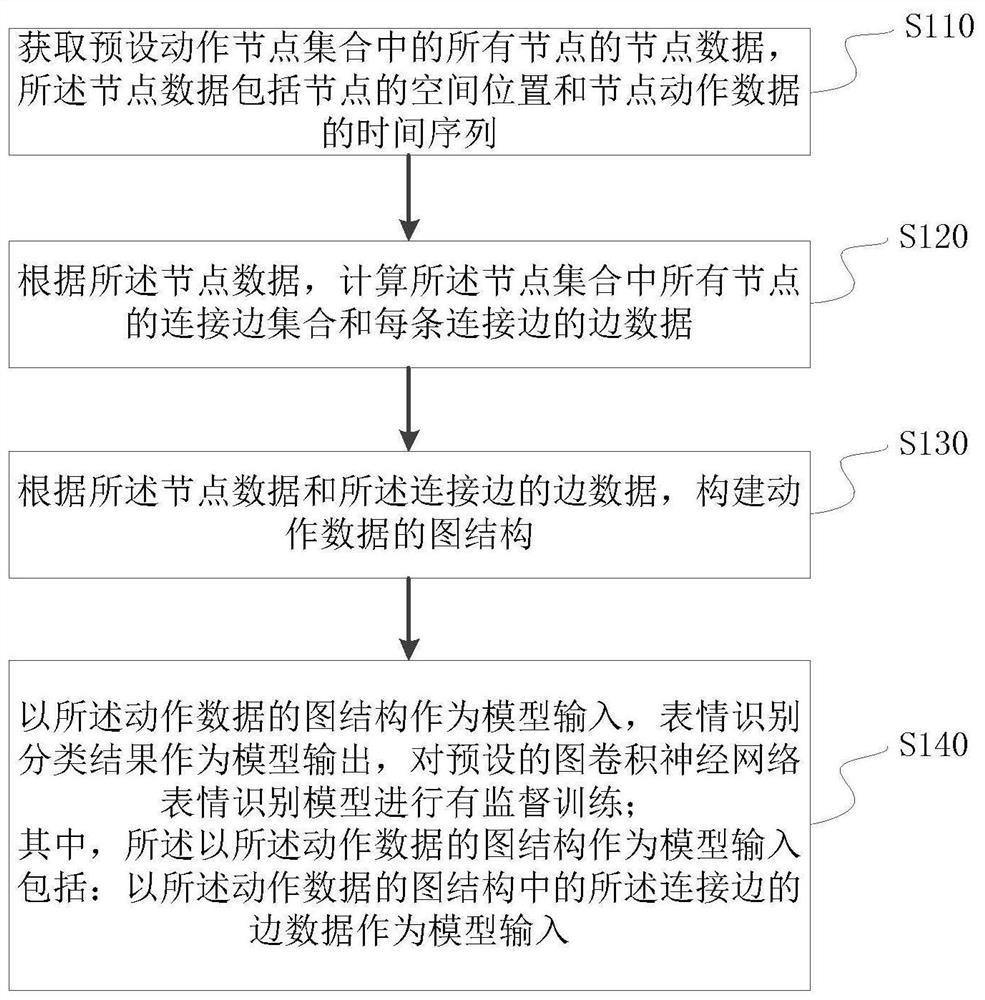

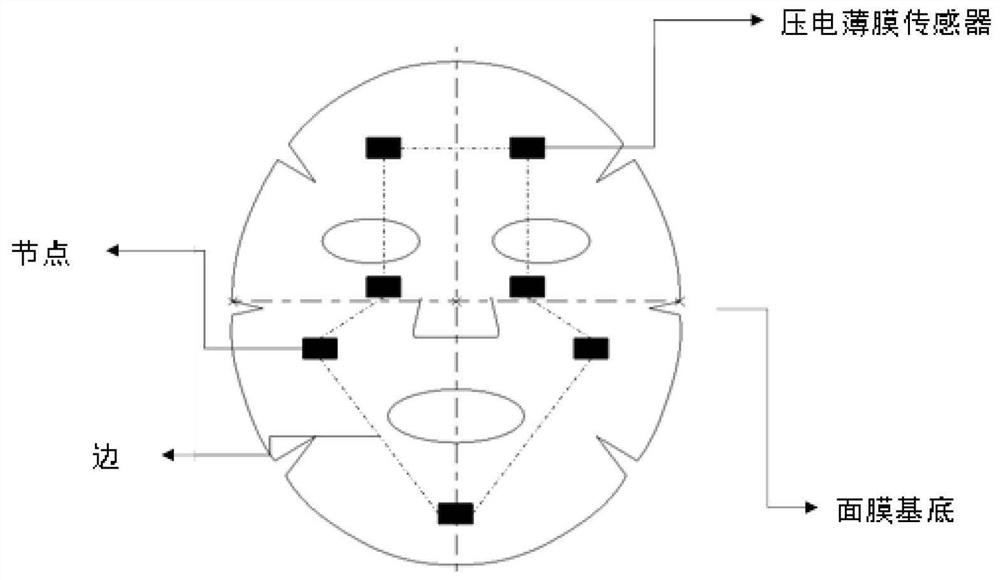

[0080] In related technologies, when a graph convolutional neural network is used for image and video processing to perform action recognition tasks such as human posture recognition, face recognition, and expression recognition, the node data is updated by convolution calculation. The adjacency matrix representing the connection relationships between nodes is usually a 0/1 matrix, in which 1 marks the existence of a connecting edge; some directed graphs also introduce -1 to indicate direction. Overall, however, the adjacency matrix is static during the convolution calculation. Moreover, in the update process of the node data, the adjacency matrix participates in the convolution calculation as a part ...
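The static-adjacency convolution described in the related technologies can be illustrated with a minimal sketch. It is not taken from the patent: the four-node chain graph, the feature values, the identity weight matrix, and the helper names `build_adjacency`, `matmul`, and `graph_conv` are all hypothetical, and the update rule `A · X · W` (without normalization or self-loops) is a common simplified form assumed here only for illustration.

```python
# Sketch of one graph-convolution step with a static 0/1 adjacency matrix.
# Graph, features, and weights are made-up illustrative data (assumptions).

def build_adjacency(num_nodes, edges):
    """Return a symmetric 0/1 adjacency matrix; 1 marks an existing edge."""
    adj = [[0] * num_nodes for _ in range(num_nodes)]
    for i, j in edges:
        adj[i][j] = 1
        adj[j][i] = 1
    return adj

def matmul(a, b):
    """Plain-Python matrix product of two list-of-lists matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def graph_conv(adj, features, weights):
    """Update node features as A @ X @ W; adj is read but never modified."""
    return matmul(matmul(adj, features), weights)

# Hypothetical 4-node chain: 0 - 1 - 2 - 3
EDGES = [(0, 1), (1, 2), (2, 3)]
A = build_adjacency(4, EDGES)

# Hypothetical 2-dimensional feature vector per node.
X = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [0.0, 3.0]]

# Identity weights, so the output shows pure neighbor aggregation.
W = [[1.0, 0.0], [0.0, 1.0]]

H = graph_conv(A, X, W)
for node, row in enumerate(H):
    print(node, row)
```

Each output row is the sum of the features of that node's neighbors, and `A` is the same before and after the call. This is the sense in which the adjacency matrix is static: it takes part in the computation but is not itself updated.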
