Model training method and device, electronic equipment and storage medium

A model training method, applied in the fields of artificial intelligence and deep learning, that addresses problems such as a model failing to go online, a model failing to meet performance requirements, and labeled data being unobtainable, with the effect of reducing capital cost and manpower consumption.


Examples

Embodiment 1

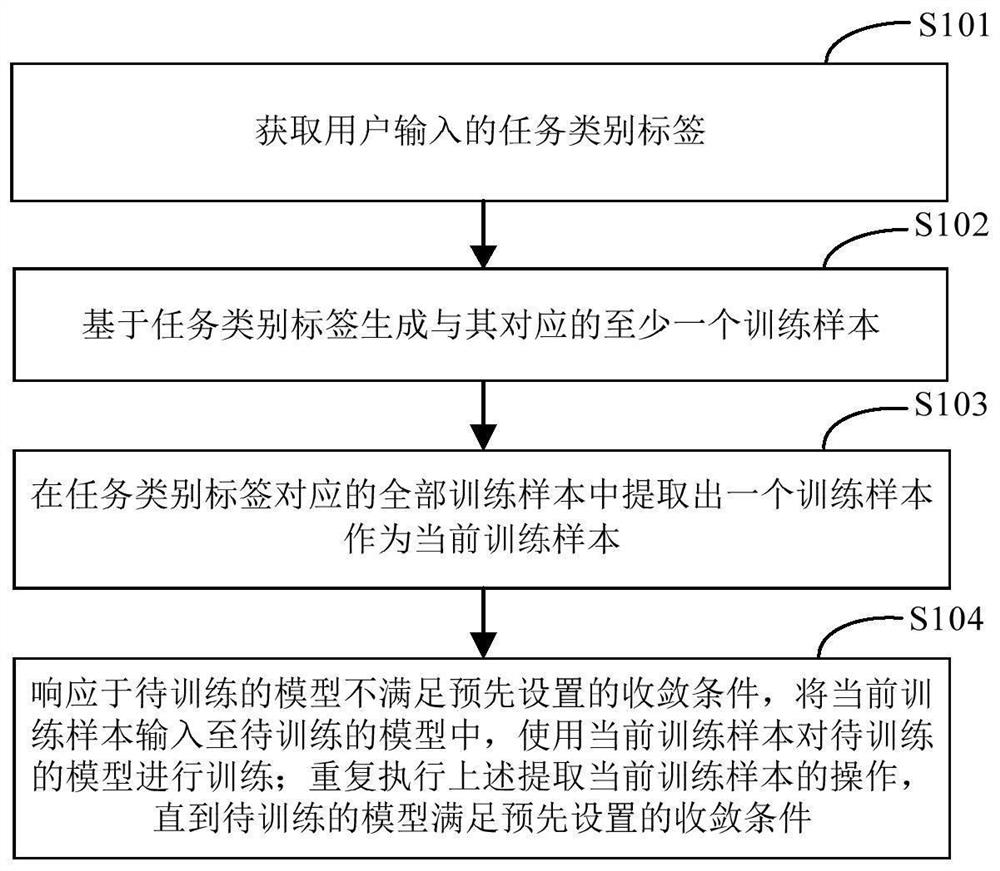

[0031] Figure 1 is a first schematic flowchart of the model training method provided by an embodiment of the present application. The method can be executed by a model training device or an electronic device, either of which can be implemented in software and/or hardware and can be integrated into any smart device with a network communication function. As shown in Figure 1, the model training method may include the following steps:

[0032] S101. Obtain a task category label input by a user.

[0033] In this step, the electronic device may obtain the task category label input by the user. Specifically, the task category label is in text format. For example, the task category label entered by the user is "dog".

[0034] S102. Generate at least one training sample corresponding to the task category label based on the task category label.

[0035] In this step, the electronic device may generate at least one t...
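Steps S101 and S102 can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation described in the application: `TrainingSample`, `normalize_label`, and `generate_samples` are hypothetical names, and the source of image references is left to a caller-supplied function because the text above does not say how the samples are produced.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class TrainingSample:
    """One training sample: an image reference paired with its label (assumed shape)."""
    image_ref: str
    label: str


def normalize_label(raw: str) -> str:
    """S101: accept the user's task category label as text, e.g. "dog"."""
    label = raw.strip()
    if not label:
        raise ValueError("task category label must be non-empty text")
    return label


def generate_samples(
    label: str, find_images: Callable[[str], Iterable[str]]
) -> List[TrainingSample]:
    """S102: build at least one training sample for the label.

    `find_images` is an assumed, caller-supplied lookup that returns
    image references for a label; it is not part of the source text.
    """
    samples = [TrainingSample(image_ref=ref, label=label) for ref in find_images(label)]
    if not samples:
        # The step requires at least one sample, so an empty result is an error here.
        raise ValueError(f"no training sample could be generated for {label!r}")
    return samples
```

Passing the lookup in as a parameter keeps the sketch independent of any particular image source.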

Embodiment 2

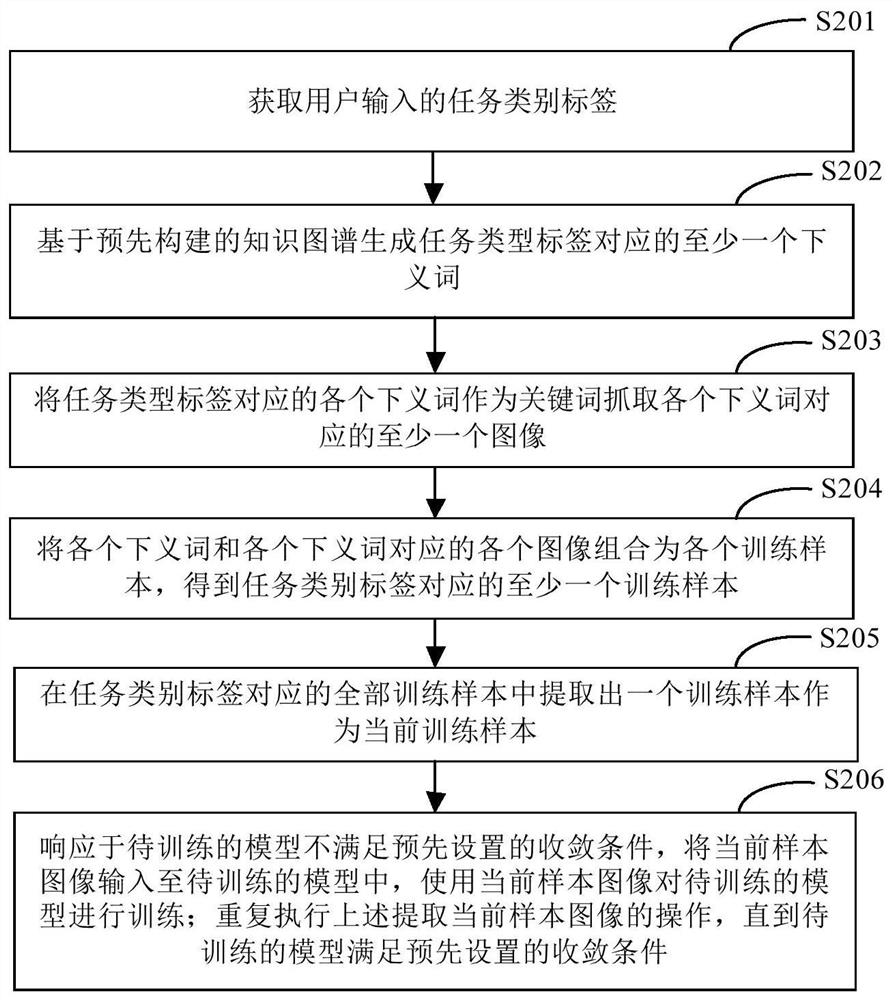

[0042] Figure 2 is a second schematic flowchart of the model training method provided by an embodiment of the present application. This embodiment further optimizes and expands on the above technical solution and may be combined with each of the above optional implementations. As shown in Figure 2, the model training method may include the following steps:

[0043] S201. Obtain a task category label input by a user.

[0044] S202. Generate at least one hyponym corresponding to the task category label based on a pre-built knowledge graph.

[0045] In this step, the electronic device may generate at least one hyponym corresponding to the task category label based on the pre-built knowledge graph. Specifically, the electronic device may input the task category label into the knowledge graph and obtain from it at least one hyponym corresponding to the task category label. For example, the electronic device can input the task category label "dog" input by the user ...
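The hyponym lookup in S202 can be illustrated with a toy knowledge graph. This is only a sketch: the dictionary below stands in for the pre-built knowledge graph, its entries are made-up examples rather than data from the application, and `get_hyponyms` is a hypothetical helper name.

```python
from typing import Dict, List

# Toy stand-in for the pre-built knowledge graph: each term maps to its
# direct hyponyms (more specific terms). The entries are illustrative only.
KNOWLEDGE_GRAPH: Dict[str, List[str]] = {
    "dog": ["husky", "poodle", "corgi"],
    "cat": ["siamese", "persian"],
}


def get_hyponyms(label: str, graph: Dict[str, List[str]]) -> List[str]:
    """S202: return at least one hyponym for the task category label."""
    hyponyms = graph.get(label, [])
    if not hyponyms:
        # The step expects at least one hyponym, so a miss is reported as an error.
        raise KeyError(f"no hyponym found for label {label!r}")
    return list(hyponyms)  # copy, so callers cannot mutate the graph


print(get_hyponyms("dog", KNOWLEDGE_GRAPH))
```

A real knowledge graph would likely be queried through a graph store or a lexical database rather than an in-memory dictionary; the dictionary is used here only to keep the example self-contained.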

Embodiment 3

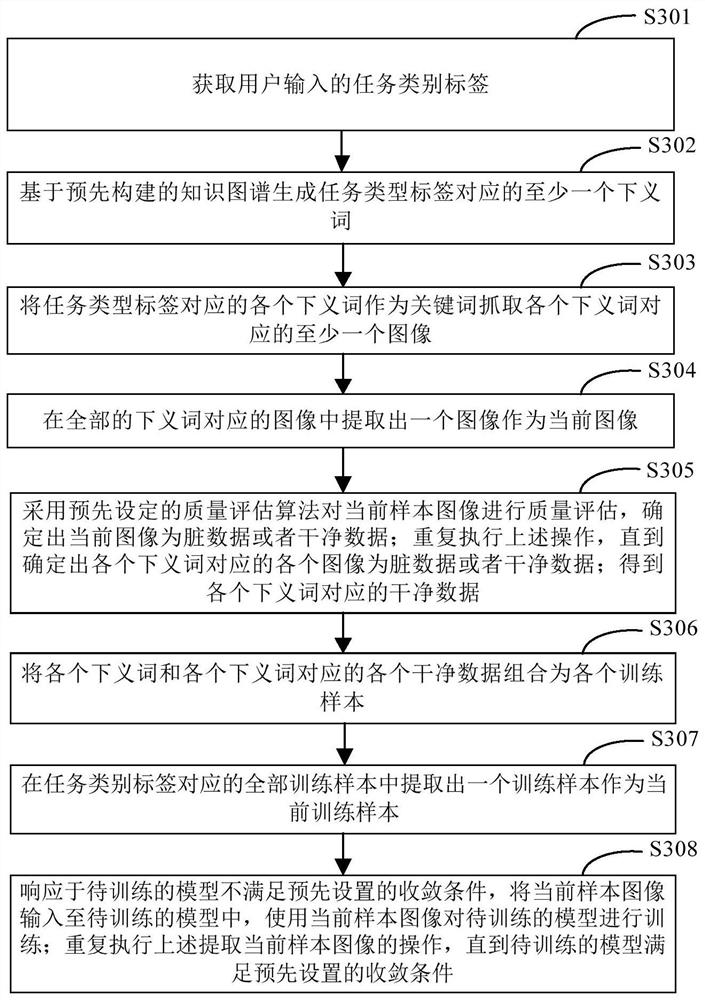

[0054] Figure 3 is a third schematic flowchart of the model training method provided by an embodiment of the present application. This embodiment further optimizes and expands on the above technical solution and may be combined with each of the above optional implementations. As shown in Figure 3, the model training method may include the following steps:

[0055] S301. Obtain a task category label input by a user.

[0056] S302. Generate at least one hyponym corresponding to the task category label based on a pre-built knowledge graph.

[0057] S303. Capture at least one image corresponding to each hyponym, using each hyponym corresponding to the task category label as a keyword.

[0058] S304. Extract an image from all the images corresponding to the hyponym as the current image.

[0059] In this step, the electronic device may extract one sample image from the at least one sample image corresponding to the task category label as the current sample image. Specifically, it i...
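Steps S303 and S304 can be sketched as a capture step followed by an iteration over the captured images. The names `capture_images`, `iter_current_images`, and the `search_images` callback are hypothetical; the actual image source and what is done with each current image are not given in the text above, so both are left to the caller.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Tuple


def capture_images(
    hyponyms: Iterable[str], search_images: Callable[[str], List[str]]
) -> Dict[str, List[str]]:
    """S303: use each hyponym as a keyword and keep the images found for it.

    `search_images` is an assumed, caller-supplied function (for example a
    wrapper around an image search service) returning image references.
    """
    captured: Dict[str, List[str]] = {}
    for hyponym in hyponyms:
        images = search_images(hyponym)
        if images:
            # Assumption: hyponyms that yield no image are skipped.
            captured[hyponym] = list(images)
    return captured


def iter_current_images(captured: Dict[str, List[str]]) -> Iterator[Tuple[str, str]]:
    """S304: extract one image at a time as the "current image"."""
    for hyponym, images in captured.items():
        for image in images:
            yield hyponym, image
```

Using a generator for S304 lets the caller process each current image in turn without loading or copying the whole collection.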
