Intra-frame prediction method based on generative adversarial network

An intra-frame prediction technology, applied in the video field, that addresses the inability of existing methods to handle complex textures and curved edges effectively, and achieves bit-rate savings.

Examples

Embodiment 1

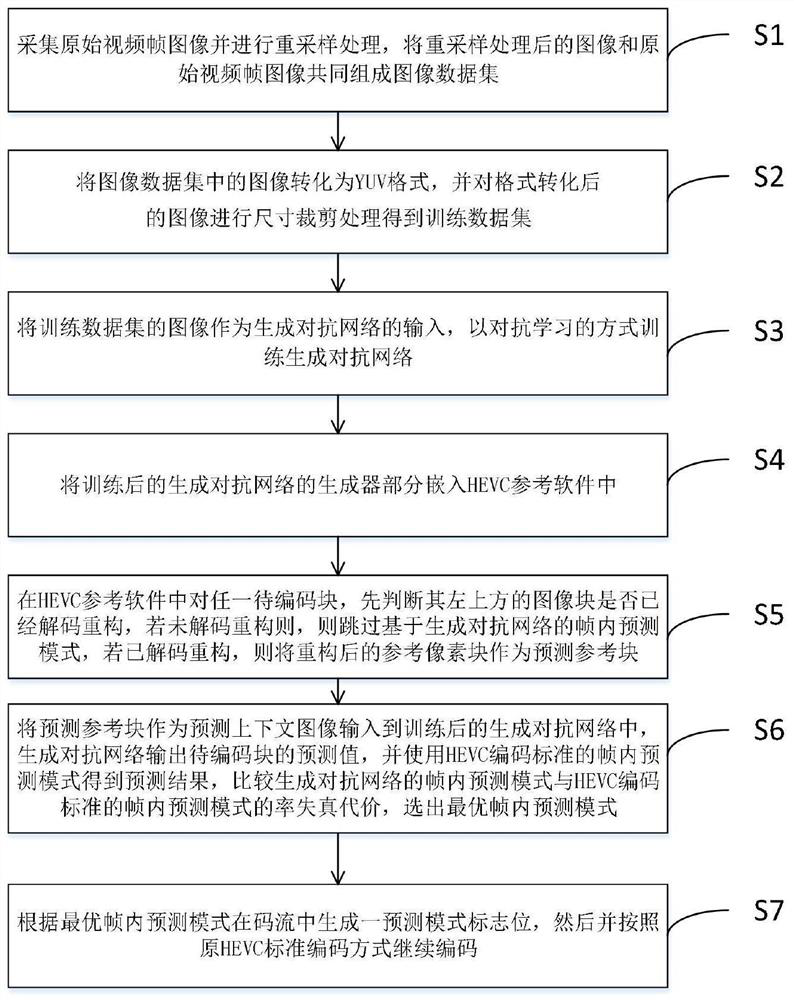

[0040] As shown in Figure 1, an intra-frame prediction method based on a generative adversarial network includes the following steps:

[0041] S1: Collect original video frame images, resample them, and combine the resampled images with the original video frame images to form an image dataset;

[0042] In a specific embodiment, a sufficient number of video frame images are first collected. High-resolution video frame images, such as images with a resolution of 1000 × 1000, are downsampled to 1/2 of their original pixel size using a resampling method based on the pixel area relation, and the resampled images are then combined with the original video frame images to form the image dataset.
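A minimal sketch of step S1, assuming that "1/2 of the original pixel size" means halving both width and height and that frames are single-channel images stored as nested lists. For an integer factor of 2, area-based resampling reduces to averaging each 2 × 2 block. In practice, OpenCV's `cv2.resize` with `interpolation=cv2.INTER_AREA` ("resampling using pixel area relation") is a common way to do this, although the excerpt does not name a library. The helper names and the `min_side` threshold are hypothetical.

```python
from typing import List

Image = List[List[float]]  # single-channel image: rows of pixel values


def area_downsample_half(img: Image) -> Image:
    """Halve width and height by averaging each 2x2 block (area resampling).

    For a factor of exactly 2 this is what pixel-area-relation resampling
    computes; an odd trailing row/column is dropped for simplicity.
    """
    h, w = len(img) // 2 * 2, len(img[0]) // 2 * 2
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


def build_image_dataset(frames: List[Image], min_side: int = 1000) -> List[Image]:
    """S1 sketch: keep every original frame and append a half-size copy of
    each high-resolution frame. `min_side` is a hypothetical threshold."""
    dataset = list(frames)
    for frame in frames:
        if min(len(frame), len(frame[0])) >= min_side:
            dataset.append(area_downsample_half(frame))
    return dataset
```

Keeping the originals alongside the downsampled copies gives the dataset the same content at two scales, which is the combination step S1 describes.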

[0043] S2: Convert the images in the image dataset to YUV format, and crop the converted images to size to obtain the training dataset;
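The conversion and cropping in S2 can be sketched as follows. The excerpt does not specify the RGB-to-YUV matrix or the crop size, so this sketch assumes full-range BT.601 (JFIF-style) YCbCr coefficients and a caller-chosen square block size. `rgb_to_yuv`, `image_to_yuv`, and `crop_blocks` are illustrative names, not taken from the source.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def rgb_to_yuv(pixel: Pixel) -> Pixel:
    """Convert one RGB pixel (0-255) to full-range YCbCr.

    The BT.601/JFIF coefficients are an assumption; the patent excerpt
    does not state which YUV variant is used.
    """
    r, g, b = pixel
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    v = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return (y, u, v)


def image_to_yuv(rgb: List[List[Pixel]]) -> List[List[Pixel]]:
    """Apply the per-pixel conversion to a whole image (rows of pixels)."""
    return [[rgb_to_yuv(p) for p in row] for row in rgb]


def crop_blocks(plane: List[List[float]], size: int) -> List[List[List[float]]]:
    """Cut one plane (e.g. Y) into non-overlapping size x size blocks,
    discarding incomplete blocks at the right and bottom edges."""
    blocks = []
    for top in range(0, len(plane) - size + 1, size):
        for left in range(0, len(plane[0]) - size + 1, size):
            blocks.append([row[left:left + size] for row in plane[top:top + size]])
    return blocks
```

Cropping fixed-size blocks, rather than keeping whole frames, yields training samples of uniform shape; the actual block size used in the embodiment is not given in this excerpt.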

[0044] It should be noted that converting the images in the image data set into the YUV format real...
