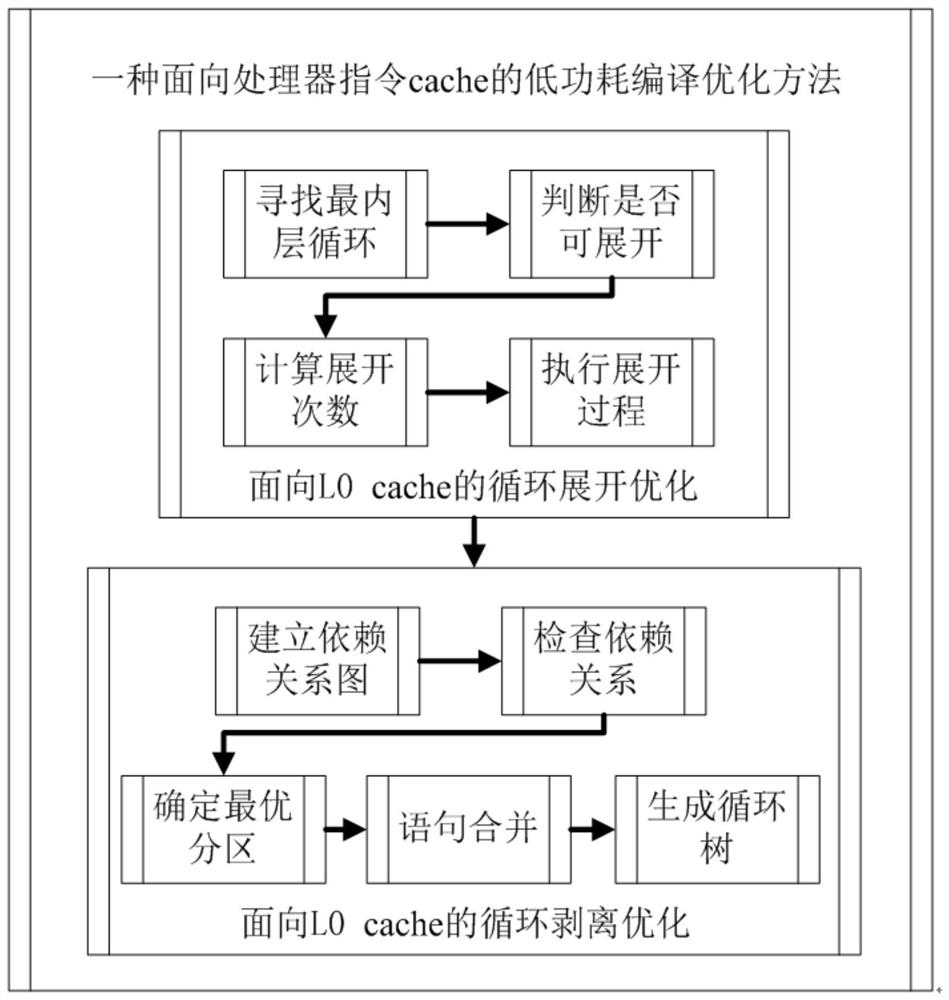

A low-power compilation method oriented to processor instruction caches

This technology relates to processor instructions and compilation methods and is applied in the field of low-power compilation. It addresses several problems: the lack of low-power compilation optimization techniques that target the instruction cache, the inability to use instruction cache components efficiently, and the inability to reliably ensure a high L0 cache hit rate.


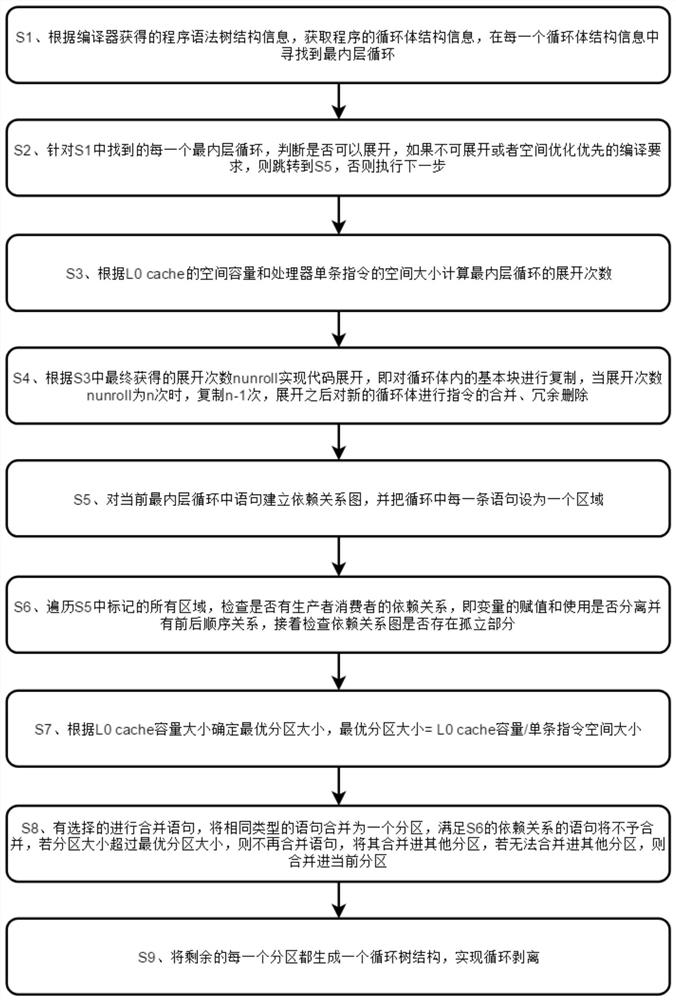

[0026] Example: A low-power compilation method for a processor instruction cache, comprising the following steps:

[0027] S1: Using the program tree structure information obtained by the compiler, acquire the loop structure information of the program and find the innermost loop in each loop structure;
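Step S1 amounts to collecting the leaves of the loop nesting tree. The sketch below assumes a hypothetical `Loop` node whose `children` list holds the directly nested loops; a real compiler exposes this through its own loop-analysis structures, which the source does not specify.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Loop:
    """Hypothetical loop-tree node; `children` holds the directly nested loops."""
    name: str
    children: List["Loop"] = field(default_factory=list)


def find_innermost_loops(roots: List[Loop]) -> List[Loop]:
    """Return every loop with no nested loop, in depth-first (source) order."""
    innermost: List[Loop] = []
    stack = list(reversed(roots))  # reversed so the first root is visited first
    while stack:
        loop = stack.pop()
        if not loop.children:
            innermost.append(loop)  # a leaf of the loop tree is an innermost loop
        else:
            stack.extend(reversed(loop.children))
    return innermost


# outer { a; b { c } }  d
tree = [Loop("outer", [Loop("a"), Loop("b", [Loop("c")])]), Loop("d")]
print([l.name for l in find_innermost_loops(tree)])  # ['a', 'c', 'd']
```

An explicit stack is used instead of recursion so that deeply nested loop trees cannot hit Python's recursion limit.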

[0028] S2: For each innermost loop found in S1, determine whether it can be unrolled. If it cannot be unrolled, or if the compilation requirements give priority to space (code size) optimization, jump to S5; otherwise, proceed to the next step;
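The branch in S2 can be sketched as a small decision function. `LoopInfo.unrollable` and `optimize_for_size` are hypothetical stand-ins: the source does not say how unrollability is checked, and `optimize_for_size` plays the role of a space-first compilation requirement (comparable in spirit to GCC's `-Os`).

```python
from dataclasses import dataclass


@dataclass
class LoopInfo:
    name: str
    unrollable: bool  # hypothetical: outcome of the compiler's own legality check


def s2_next_step(loop: LoopInfo, optimize_for_size: bool) -> str:
    """Return the step that S2 branches to for one innermost loop."""
    if not loop.unrollable or optimize_for_size:
        return "S5"  # skip unrolling for this loop
    return "S3"      # compute unrolling parameters next


print(s2_next_step(LoopInfo("c", unrollable=True), optimize_for_size=False))  # S3
```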

[0029] S3: Based on the capacity of the L0 cache and the size of a single processor instruction, calculate the unrolling parameters of the innermost loop, that is, the maximum number of pseudo-instructions of the innermost loop, the maximum average number of pseudo-instructions of the innermost loop, Max_average_unrolled_insns, and the maximum number of unroll times of the innermost loop, Max_unrol...
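The exact formula in S3 is cut off in this summary, so the sketch below is only an illustration under a stated assumption: the unrolled loop body must fit entirely in the L0 cache, and one pseudo-instruction is treated as one machine instruction of fixed size. The field names are hypothetical; `max_average_unrolled_insns` stands in for Max_average_unrolled_insns and `max_unroll_times` for the truncated Max_unrol... parameter. The helper renders the values as GCC `--param` options, since GCC has parameters with analogous names (`max-unrolled-insns`, `max-average-unrolled-insns`, `max-unroll-times`); the source does not say the method is implemented in GCC.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UnrollParams:
    max_unrolled_insns: int          # hypothetical cap on insns in the unrolled loop
    max_average_unrolled_insns: int  # stands in for Max_average_unrolled_insns
    max_unroll_times: int            # stands in for the truncated Max_unrol... parameter


def compute_unroll_params(l0_capacity_bytes: int, insn_size_bytes: int,
                          body_insns: int) -> UnrollParams:
    """Assumed sizing rule: the unrolled loop body must fit entirely in the L0 cache."""
    if min(l0_capacity_bytes, insn_size_bytes, body_insns) <= 0:
        raise ValueError("all sizes must be positive")
    # How many instructions the L0 cache can hold at once
    # (assumes one pseudo-instruction maps to one machine instruction).
    capacity_insns = l0_capacity_bytes // insn_size_bytes
    # Largest number of body copies that still fits; 1 means "do not unroll".
    unroll_times = max(1, capacity_insns // body_insns)
    return UnrollParams(capacity_insns, capacity_insns, unroll_times)


def gcc_param_flags(p: UnrollParams) -> List[str]:
    """Render the parameters as GCC --param options with the analogous names."""
    return [
        "--param", f"max-unrolled-insns={p.max_unrolled_insns}",
        "--param", f"max-average-unrolled-insns={p.max_average_unrolled_insns}",
        "--param", f"max-unroll-times={p.max_unroll_times}",
    ]


params = compute_unroll_params(l0_capacity_bytes=256, insn_size_bytes=4, body_insns=12)
print(params.max_unroll_times)  # 5
```

With a 256-byte L0 cache and 4-byte instructions, 64 instructions fit, so a 12-instruction loop body is unrolled at most 5 times. In GCC, loop unrolling itself is enabled with `-funroll-loops`; whether these particular values reproduce the patented method is not established by the source.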


© 2025 PatSnap. All rights reserved.