Black-box attack defense system and method based on neural network middle layer regularization

A neural network defense technology, applicable to biological neural network models, neural learning methods, neural architectures, and related fields. It addresses the difficulty of knowing the target model's training data set, which reduces the efficiency of black-box attacks and prevents further progress. It achieves robust adversarial training and mitigates the poor transferability of adversarial samples.

Embodiment Construction

[0032] The present invention will be further described below in conjunction with the accompanying drawings. Embodiments of the present invention include, but are not limited to, the following examples.

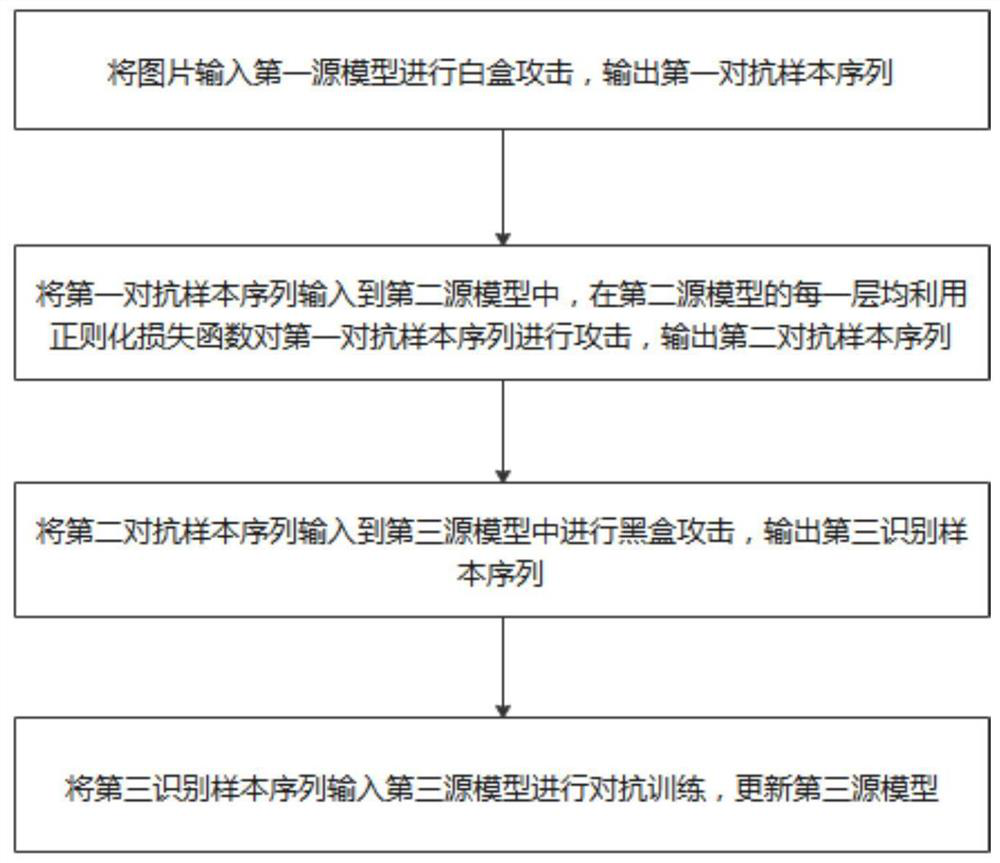

[0033] A black-box attack defense system based on the regularization of the middle layer of the neural network, including:

[0034] The first source model adopts a ResNet network built from residual modules. In this embodiment, the first source model is attacked with a white-box attack method and finally outputs the first adversarial sample sequence. Taking original pictures as the input: a set of original pictures is input, the white-box attack method adds an appropriate adversarial perturbation to attack the first source model, and the first adversarial sample sequence is generated. The first adversarial sample sequence also has a certain degree of transferability, but for the second source model, because the decision-making direction of the first adversarial...
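
The step of generating the first adversarial sample sequence by a white-box attack can be illustrated with a minimal sketch. The text does not name a specific white-box attack, so this sketch assumes the fast gradient sign method (FGSM) as one common example. It also substitutes a toy logistic-regression classifier for the ResNet source model so that the code stays self-contained. All function names, weights, and the `epsilon` value are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability of class 1 under a toy logistic 'source model' (stand-in for ResNet)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def loss_gradient_wrt_input(weights, bias, x, label):
    """Gradient of the binary cross-entropy loss with respect to the input x.

    White-box access is assumed: the attacker can read the model parameters.
    For logistic regression this gradient is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    return [(p - label) * w for w in weights]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(weights, bias, x, label, epsilon):
    """One FGSM step: move each pixel by epsilon in the direction that raises the loss."""
    grad = loss_gradient_wrt_input(weights, bias, x, label)
    perturbed = [xi + epsilon * sign(g) for xi, g in zip(x, grad)]
    # Clip to the valid pixel range [0, 1] (an assumption about input scaling).
    return [min(1.0, max(0.0, p)) for p in perturbed]


def make_adversarial_sequence(weights, bias, images, labels, epsilon):
    """Attack every original picture and return the adversarial sample sequence."""
    return [fgsm(weights, bias, x, y, epsilon) for x, y in zip(images, labels)]


# Hypothetical source-model parameters and a small set of 'original pictures'.
weights = [2.0, -1.0, 0.5]
bias = -0.5
images = [[0.8, 0.2, 0.5], [0.1, 0.9, 0.3]]
labels = [1, 0]
epsilon = 0.3

adversarial_sequence = make_adversarial_sequence(weights, bias, images, labels, epsilon)
for original, adversarial, label in zip(images, adversarial_sequence, labels):
    print(label, predict(weights, bias, original), predict(weights, bias, adversarial))
```

In this sketch each perturbed input stays within `epsilon` of the original in every coordinate, and the model's confidence in the true label drops after the attack. A real ResNet would need an automatic-differentiation framework to obtain the input gradient, and the embodiment may use a different or iterative white-box attack.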
