Data processing method, model optimization device and model execution device

A data processing and model technology, applied in fields such as electrical digital data processing, multi-programming devices, and biological neural network models, that addresses problems such as long model loading time and long pre-computation time, with the effect of reducing memory-reuse calculations and reducing loading time.

Embodiment Construction

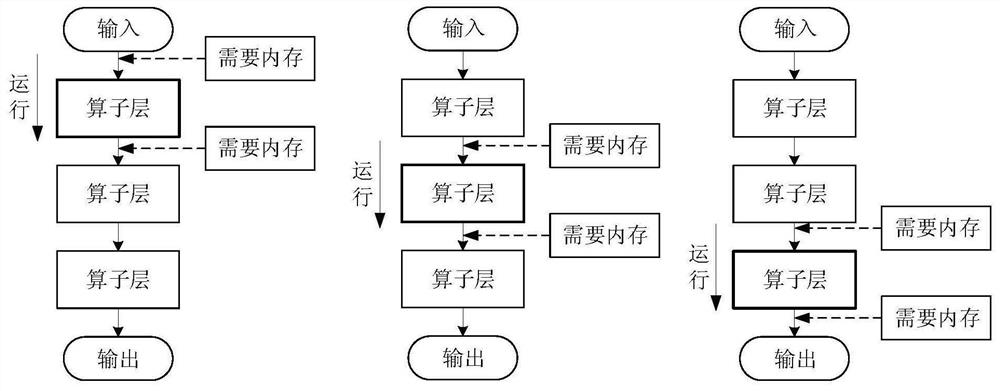

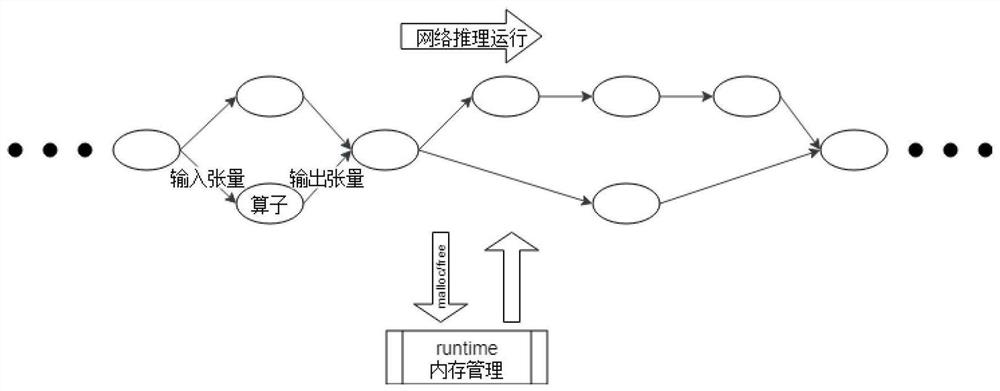

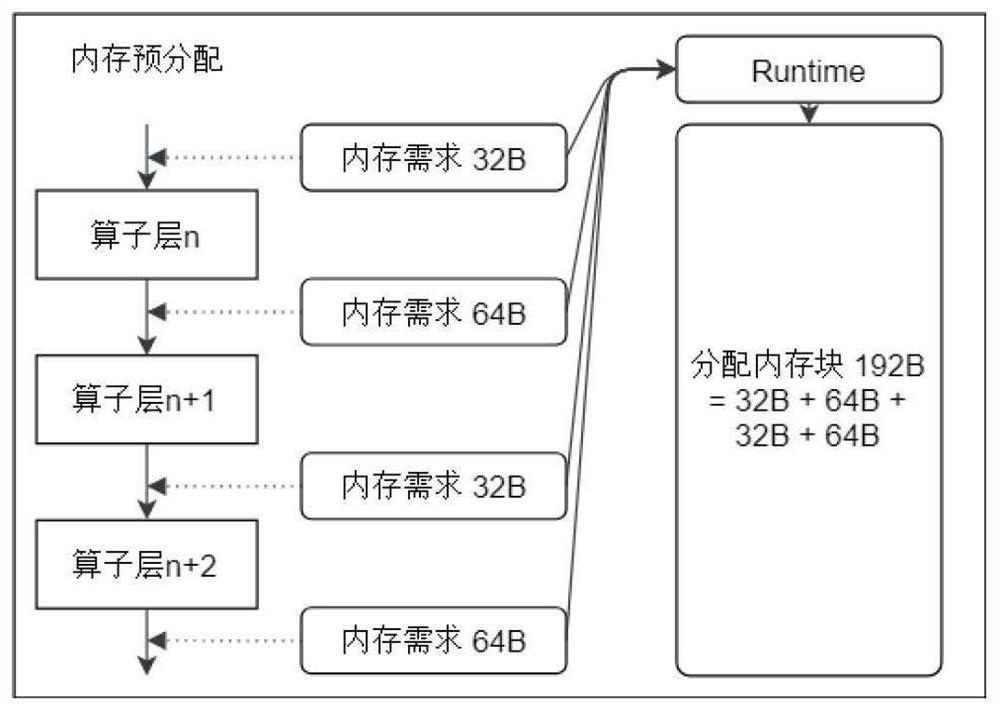

[0053] An embodiment of the present application provides a data processing method that reduces memory waste while a neural network model is running, reduces the time spent allocating and releasing memory, and can also reduce the loading time of the neural network model.

[0054] A neural network model refers to the programs and data, obtained by training on a large amount of labeled data, that are used to perform cognitive computing. The neural network model includes a neural network architecture component and a neural network parameter component. The neural network architecture component refers to the network and its hierarchical structure related to the neural network algorithm in the model, that is, the program used to perform cognitive computing in the above-mentioned neural network model. The neural network model can be used to perform inference operations. Inference converts input data into outputs through multi-layer opera...
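The split between an architecture component and a parameter component can be illustrated with a minimal sketch. This is not the method of the application; the names `Layer`, `Model`, `scale`, `relu`, and `infer` are hypothetical, and the two toy operations are assumptions chosen only to show input data passing through several layers in order.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vector = List[float]


@dataclass
class Layer:
    """One step of the architecture: a name plus the operation it performs."""
    name: str
    op: Callable[[Vector, Vector], Vector]  # (input, parameters) -> output


def scale(x: Vector, w: Vector) -> Vector:
    # Hypothetical layer: element-wise multiply by the layer's weights.
    return [xi * wi for xi, wi in zip(x, w)]


def relu(x: Vector, _w: Vector) -> Vector:
    # Hypothetical parameter-free layer: clamp negatives to zero.
    return [max(0.0, xi) for xi in x]


@dataclass
class Model:
    architecture: List[Layer]        # the program: which operations, in which order
    parameters: Dict[str, Vector]    # the trained data, keyed by layer name

    def infer(self, x: Vector) -> Vector:
        # Inference: feed the input through each layer in turn.
        for layer in self.architecture:
            x = layer.op(x, self.parameters.get(layer.name, []))
        return x


model = Model(
    architecture=[Layer("scale1", scale), Layer("relu1", relu), Layer("scale2", scale)],
    parameters={"scale1": [2.0, -1.0], "scale2": [0.5, 3.0]},
)
result = model.infer([1.0, 4.0])
print(result)  # [1.0, 0.0]
```

Keeping the parameters in a separate mapping, rather than inside each layer, mirrors the distinction the paragraph draws: the architecture can be inspected without touching the trained values.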
