Vision-based mutual positioning method in unknown indoor environment

A vision-based positioning method for indoor environments, applied in the field of image processing, that improves retrieval speed, achieves high positioning accuracy, and has broad development prospects.


Examples

Embodiment 1

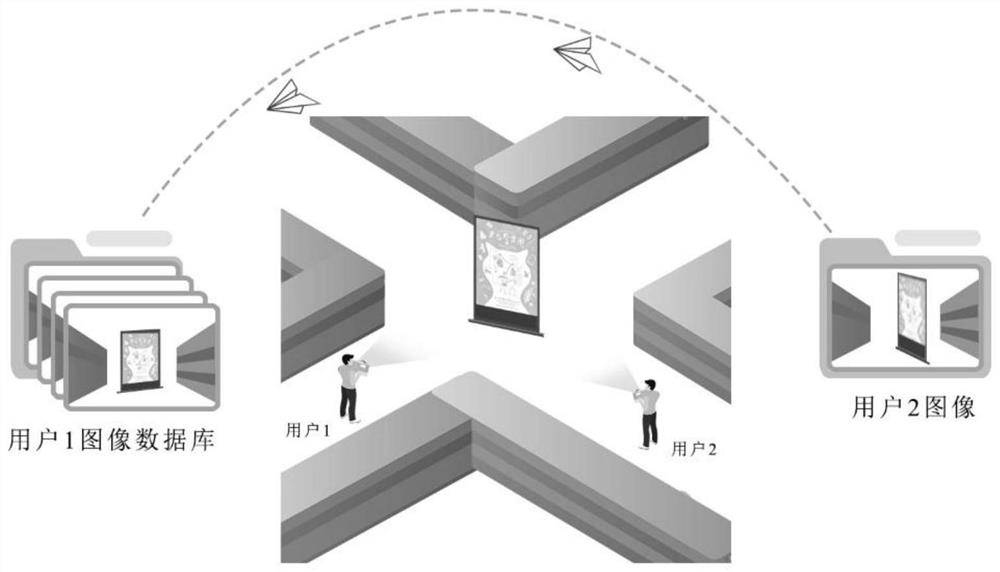

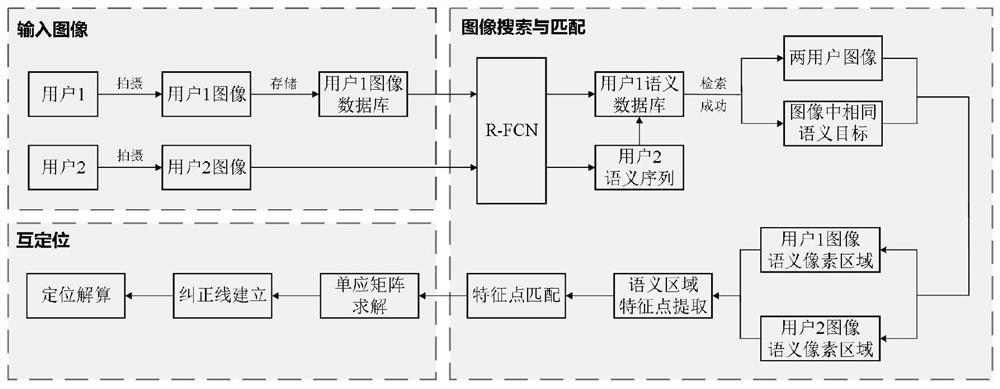

[0063] Mutual positioning is the process by which two users determine each other's position, so the users must share information, and each must determine the other's position relative to itself from the information the other provides. Because images carry rich visual information, sharing images conveys more content than sharing speech or text. Each user examines the pictures sent by the other party to judge whether both can see the same semantic scene. If no common target is found, the two users are far apart and must keep walking to look for other representative landmarks. When a user finds a target that the other party can also observe, both users have a relative position relationship with that target, so a coordinate system can be established centered on the target. After the coordinate system is established, the two users can obtain their own position coordinates in the coordinate...
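The idea of a coordinate system centered on the shared target can be illustrated with a minimal sketch. It is not the method of the invention: it assumes each user already knows their distance to the target and their bearing as seen from the target, measured in a frame fixed to the target. The function names `position_in_target_frame` and `relative_position` and the numeric values are hypothetical and chosen only for illustration.

```python
import math


def position_in_target_frame(distance, bearing_deg):
    """Return a user's (x, y) in a frame whose origin is the shared target.

    Assumptions (not from the source): `distance` is the user's range to
    the target in metres, and `bearing_deg` is the user's direction as seen
    from the target, counter-clockwise from the frame's x-axis.
    """
    theta = math.radians(bearing_deg)
    return (distance * math.cos(theta), distance * math.sin(theta))


def relative_position(own, other):
    """Return the vector from `own` to `other`, both in the target frame."""
    return (other[0] - own[0], other[1] - own[1])


# Hypothetical example: two users on either side of a corner,
# both observing the same target.
user_a = position_in_target_frame(3.0, 0.0)    # 3 m from the target along x
user_b = position_in_target_frame(4.0, 90.0)   # 4 m from the target along y

dx, dy = relative_position(user_a, user_b)
separation = math.hypot(dx, dy)  # straight-line distance between the users
```

Once both positions are expressed in the same target-centered frame, each user's position relative to the other reduces to a vector subtraction, which is why a commonly observed target is sufficient even when the users cannot see each other.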

Embodiment 2

[0139] To verify the feasibility of the method proposed in the present invention, an experimental scene must be selected for testing. The experimental environment is the corridor on the 12th floor of Building 2A, Harbin Institute of Technology Science Park, and the plan view of the experimental scene is shown in Figure 6. The schematic diagram shows that the experimental scene contains multiple corners. When two users stand on either side of a corner, they cannot see each other because of the obstruction, but they can observe the same scene at the same time. This satisfies the background conditions of the proposed method, so the scene is suitable for verifying its feasibility.

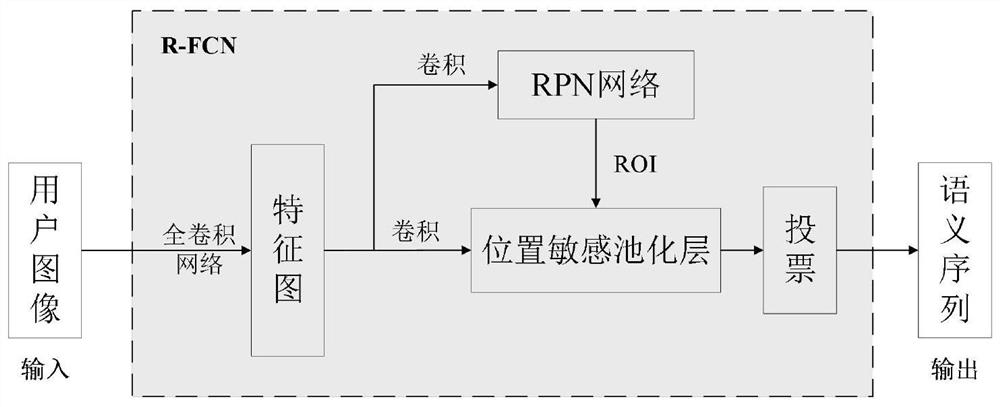

[0140] Before positioning, it is necessary to accurately identify the semantic information contained in the user's image, so as to judge whether two users can observe the same scene t...
