Visual SLAM method for target detection based on deep learning

A deep learning target detection technique, applied in the field of computer vision sensing, that addresses the inability of traditional visual SLAM to deal effectively with dynamic scenes and improves the accuracy of pose calculation.

Pending Publication Date: 2021-06-01

ARMY ENG UNIV OF PLA

7 Cites, 1 Cited by


AI Technical Summary

Problems solved by technology

[0005] The invention improves the accuracy of visual SLAM pose calculation and trajectory evaluation in dynamic scenes beyond that of existing methods, and addresses the inability of traditional visual SLAM to deal effectively with dynamic scenes.


Examples


Embodiment 1

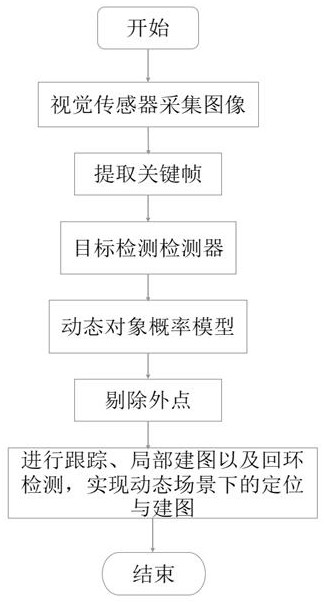

[0051] A visual SLAM method for target detection based on deep learning:


Abstract

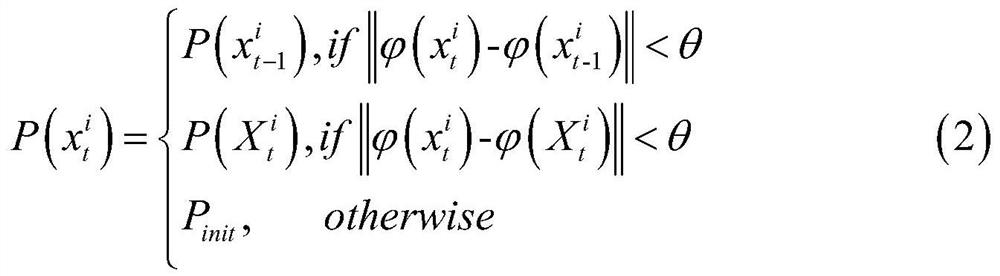

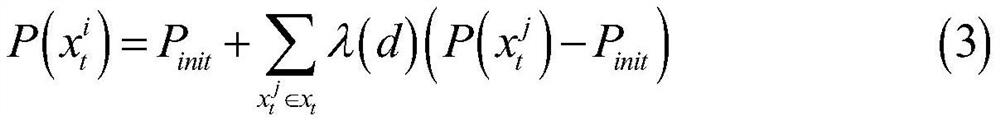

The invention discloses a visual SLAM method for target detection based on deep learning and relates to the technical field of computer vision sensing. The method comprises the following steps. First, an image is acquired through a visual sensor, and feature extraction and target detection are performed on it to obtain the extracted feature points and the bounding boxes produced by target detection. Second, a dynamic object probability model is established from the feature points and the bounding boxes, dynamic feature points are identified and eliminated, and an initialized map is created. Third, tracking, local mapping and loop closure detection are performed in sequence on the initialized map, so that an accurate three-dimensional map is constructed in a dynamic scene. The method improves the precision of pose calculation and trajectory evaluation of visual SLAM in dynamic scenes beyond that of existing methods, and addresses the inability of traditional visual SLAM to deal effectively with dynamic scenes.
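The dynamic-point elimination step can be illustrated with a minimal sketch. The listing does not give the patent's actual dynamic object probability model, so everything below is an assumption made for illustration: the per-class motion priors in `MOTION_PRIOR`, the rule that a point's probability is the class prior multiplied by the detector score, the 0.5 threshold, and the function names `dynamic_probabilities` and `remove_dynamic_points` are all hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]                   # (x, y) pixel coordinates of a feature point
Box = Tuple[float, float, float, float]       # (x_min, y_min, x_max, y_max)
Detection = Tuple[str, float, Box]            # (class label, detector score, bounding box)

# Hypothetical priors: how likely an object of each class is to be moving.
MOTION_PRIOR: Dict[str, float] = {"person": 0.9, "car": 0.8, "chair": 0.2}


def dynamic_probabilities(
    keypoints: Sequence[Point],
    detections: Sequence[Detection],
    priors: Dict[str, float] = MOTION_PRIOR,
) -> List[float]:
    """Assign each feature point a probability of lying on a dynamic object.

    Assumed rule (not taken from the patent): a point inside a bounding box
    gets prior(class) * detector score; overlapping boxes take the maximum;
    points outside every box get 0.
    """
    probs: List[float] = []
    for x, y in keypoints:
        p = 0.0
        for label, score, (x0, y0, x1, y1) in detections:
            if x0 <= x <= x1 and y0 <= y <= y1:
                p = max(p, priors.get(label, 0.0) * score)
        probs.append(p)
    return probs


def remove_dynamic_points(
    keypoints: Sequence[Point],
    detections: Sequence[Detection],
    threshold: float = 0.5,
) -> List[Point]:
    """Keep only the feature points whose dynamic probability is below the threshold."""
    probs = dynamic_probabilities(keypoints, detections)
    return [kp for kp, p in zip(keypoints, probs) if p < threshold]


if __name__ == "__main__":
    keypoints = [(10.0, 10.0), (50.0, 50.0), (200.0, 200.0)]
    detections = [
        ("person", 0.9, (40.0, 40.0, 100.0, 100.0)),
        ("chair", 0.9, (0.0, 0.0, 20.0, 20.0)),
    ]
    print(remove_dynamic_points(keypoints, detections))
```

In this toy input the point inside the `person` box is discarded, while the point inside the `chair` box and the point outside every box are kept as static candidates for map initialization and tracking. A real system would take the keypoints from its feature extractor and the boxes from its detector.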

Description

Technical field

[0001] The invention relates to the technical field of computer vision sensing, and in particular to a visual SLAM method that combines a deep learning target detection algorithm with a dynamic object probability model.

Background

[0002] SLAM stands for Simultaneous Localization and Mapping: a robot whose own position is uncertain builds a map of a completely unknown environment and uses that map for autonomous positioning and navigation. A SLAM system based on visual sensors is called visual SLAM. Because of its low hardware cost, high positioning accuracy, and fully autonomous positioning and navigation, this technology has attracted wide attention in the fields of artificial intelligence and virtual reality, and has given rise to many excellent visual SLAM systems such as PTAM, DSO, ORB-SLAM2 and OpenVSLAM.

[0003] Traditiona...


Application Information


Patent Type & Authority: Applications (China)

IPC(8): G06T7/73, G06T17/05

CPC: G06T7/73, G06T17/05

Inventor: 艾勇保, 芮挺, 赵晓萌, 方虎生, 符磊, 何家林, 陆明, 刘帅, 赵璇

Owner: ARMY ENG UNIV OF PLA
