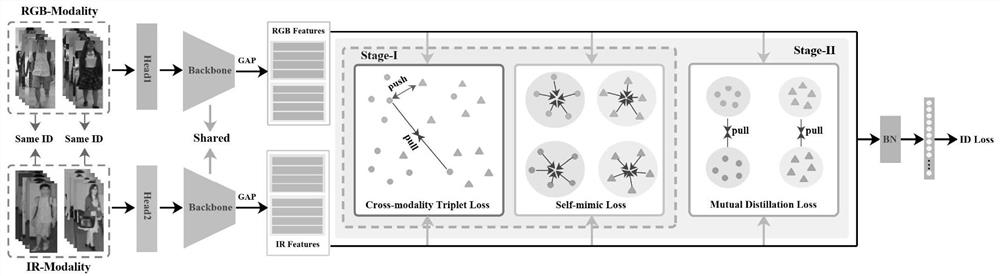

Cross-modal pedestrian re-identification method based on self-imitation mutual distillation

A cross-modal pedestrian re-identification technique, applied in the field of image processing, that addresses problems of existing approaches such as limited performance improvement and increased model complexity.

Embodiment Construction

[0041] The following embodiments will further illustrate the present invention in conjunction with the accompanying drawings.

[0042] Embodiments of the present invention include the following steps:

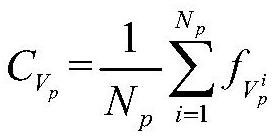

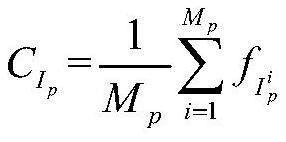

[0043] (1) The cross-modal data set contains the visible light image set and the infrared image set, where p represents the identity label (ID) of the pedestrian, and $N_p$ and $M_p$ denote the total number of visible light image samples and the total number of infrared image samples, respectively. Sample the data set: in each batch, select eight pedestrians with different IDs for each modality, and for each ID select four visible light images and four infrared images as the network input of the current batch;

[0044] (2) Normalize the input images, randomly crop them to the specified size (288×144), and apply random flipping for data augmentation;

[0045] (3) Input the visible light image to a convolution module (Head1) whose parameters are not shared, and the obtained feature ...
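The batch sampling in step (1) can be illustrated with a minimal sketch. It reads the step as drawing eight identities per batch and, for each, four visible light and four infrared images, which gives 32 images per modality. The names `sample_batch` and `pick`, the dict-of-paths layout, the toy file paths, and the fallback to sampling with replacement when an identity has fewer than four images are all assumptions made for illustration; the source does not specify them.

```python
import random


def pick(items, k, rng):
    """Draw k items: without replacement if enough exist, else with replacement."""
    if len(items) >= k:
        return rng.sample(items, k)
    return [rng.choice(items) for _ in range(k)]


def sample_batch(visible, infrared, rng, num_ids=8, per_modality=4):
    """Build one batch from two dicts mapping pedestrian ID -> list of image paths.

    Returns (visible_paths, infrared_paths, labels), where labels[i] is the ID
    of both visible_paths[i] and infrared_paths[i].
    """
    # Only IDs that appear in both modalities can supply images of both kinds.
    shared_ids = sorted(set(visible) & set(infrared))
    ids = rng.sample(shared_ids, num_ids)

    vis_batch, ir_batch, labels = [], [], []
    for pid in ids:
        vis_batch += pick(visible[pid], per_modality, rng)
        ir_batch += pick(infrared[pid], per_modality, rng)
        labels += [pid] * per_modality
    return vis_batch, ir_batch, labels


# Toy data: 10 identities, 6 visible and 3 infrared images each (made-up paths).
visible = {p: [f"vis/{p}_{i}.jpg" for i in range(6)] for p in range(10)}
infrared = {p: [f"ir/{p}_{i}.jpg" for i in range(3)] for p in range(10)}

vis_batch, ir_batch, labels = sample_batch(visible, infrared, random.Random(0))
print(len(vis_batch), len(ir_batch), len(set(labels)))  # 32 32 8
```

Keeping the two modality lists aligned with a single label list makes it straightforward to feed each modality to its own input branch while sharing identity labels.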
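The preprocessing in step (2) can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the patent's implementation: images are single-channel nested lists of 8-bit values, 288×144 is read as height by width, the normalization constants `mean=0.5` and `std=0.5` are placeholders, the flip is horizontal with probability 0.5, and the function names are hypothetical. The source gives only the crop size and the three operations.

```python
import random

# "288*144" comes from the text; reading it as height x width is an assumption.
CROP_H, CROP_W = 288, 144


def normalize(img, mean=0.5, std=0.5):
    """Scale 8-bit pixels to [0, 1], then standardize (mean/std are assumed values)."""
    return [[(px / 255.0 - mean) / std for px in row] for row in img]


def random_crop(img, rng, out_h=CROP_H, out_w=CROP_W):
    """Cut an out_h x out_w window at a uniformly random position."""
    h, w = len(img), len(img[0])
    if h < out_h or w < out_w:
        raise ValueError("image is smaller than the crop size")
    top = rng.randint(0, h - out_h)    # randint is inclusive on both ends
    left = rng.randint(0, w - out_w)
    return [row[left:left + out_w] for row in img[top:top + out_h]]


def random_flip(img, rng, p=0.5):
    """Mirror the image left-to-right with probability p (assumed horizontal flip)."""
    if rng.random() < p:
        return [row[::-1] for row in img]
    return img


def augment(img, rng):
    """Apply the three operations in the order the step lists them."""
    return random_flip(random_crop(normalize(img), rng), rng)


# Toy 300x160 gradient image standing in for a pedestrian photo.
image = [[(r + c) % 256 for c in range(160)] for r in range(300)]
out = augment(image, random.Random(0))
print(len(out), len(out[0]))  # 288 144
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible, which is convenient when comparing training runs.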
