Position embedding interpretation method and device, computer equipment and storage medium

A technology based on given locations and location categories, applied in the field of machine learning. It addresses problems in existing approaches, which ignore the interpretability of location embeddings and of the models themselves and therefore fail to meet the needs of business scenarios. Its stated effects are to overcome the sparseness of learned vectors and their inability to cover rich semantic information, to speed up model convergence, and to reduce the effect of learning scale.


Examples

Embodiment 1

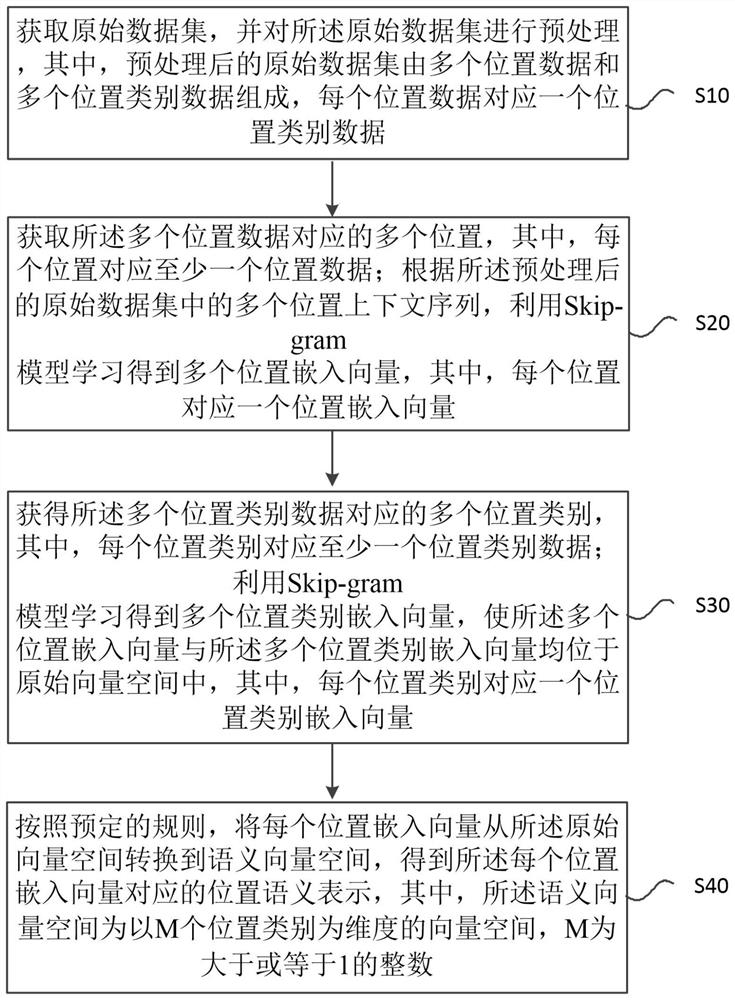

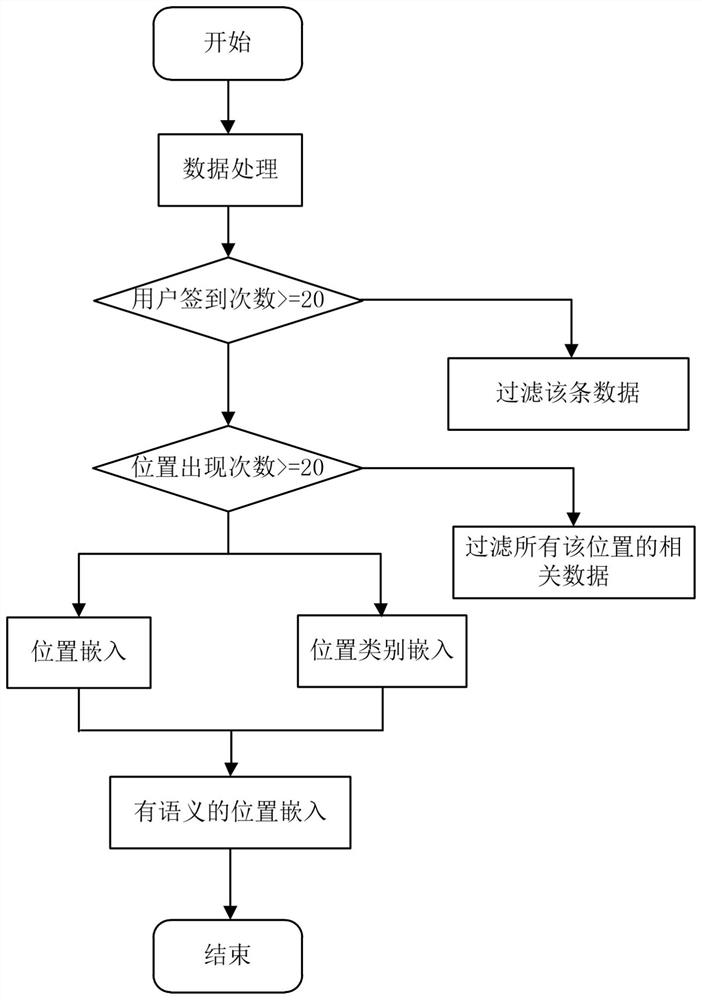

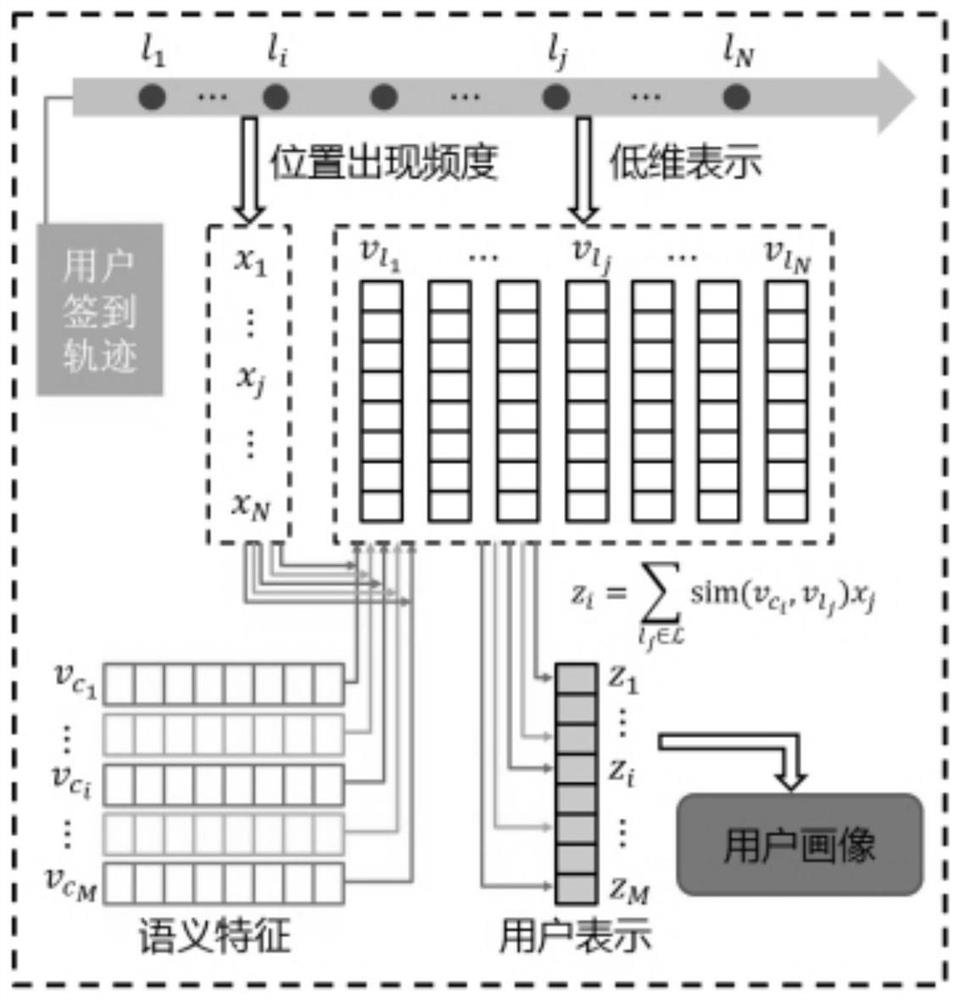

[0055] Figure 1 is a flow chart of a location embedding interpretation method provided by an embodiment of the present invention. The method addresses the lack of interpretability of position representations in the trajectory representation field. Through representation learning, the model gives each dimension of the position representation a clear, easily understood meaning. The method includes steps S10 to S40.
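The excerpt does not show how S20 to S40 assign a meaning to each dimension. The sketch below is a hypothetical illustration and is not taken from the patent. It only shows one way a location vector could be re-expressed so that every dimension is named: each entry is the cosine similarity between a dense location embedding and one location-category embedding. The function names and the cosine-similarity scheme are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return dot / (nu * nv)


def interpretable_vector(location_vec, category_vecs):
    """Hypothetical scheme: map a dense location embedding to a category-named vector.

    Each output entry is keyed by a category name, so every "dimension" carries an
    explicit meaning: how similar the location is to that category.
    """
    return {name: cosine(location_vec, category_vecs[name]) for name in sorted(category_vecs)}


# Usage with toy 2-d embeddings (illustrative values only).
print(interpretable_vector([1.0, 0.0], {"cafe": [1.0, 0.0], "park": [0.0, 1.0]}))
```

Keying the result by category name, not by integer index, makes the meaning of each dimension explicit to a reader of the output.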

[0056] S10: Obtain an original data set and preprocess it. The preprocessed data set consists of multiple location data and multiple location category data, and each location data corresponds to one location category data.

[0057] In one embodiment, the original data set is a user check-in data set, and preprocessing it includes filtering out the check-in data of users with fewer than 20 check-ins in the original data, and simultaneously filtering out th...
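The user-level filter in [0057] can be sketched as follows. This is a minimal sketch. The `CheckIn` record layout and its field names are hypothetical, and only the 20-check-in threshold comes from the text. The second filtering step is truncated in the source and is not reproduced here.

```python
from collections import Counter
from typing import NamedTuple


class CheckIn(NamedTuple):
    """Hypothetical check-in record: one location data paired with its category data."""
    user_id: str
    location_id: str
    category: str
    timestamp: int


MIN_USER_CHECKINS = 20  # threshold stated in [0057]


def filter_sparse_users(checkins, min_checkins=MIN_USER_CHECKINS):
    """Drop every check-in belonging to a user with fewer than `min_checkins` check-ins."""
    counts = Counter(c.user_id for c in checkins)
    return [c for c in checkins if counts[c.user_id] >= min_checkins]


# Usage: u1 has 20 check-ins and is kept; u2 has 19 and is removed.
data = [CheckIn("u1", f"L{i}", "cafe", i) for i in range(20)]
data += [CheckIn("u2", f"L{i}", "park", i) for i in range(19)]
print(len(filter_sparse_users(data)))
```

Counting first and filtering second keeps the step to two linear passes over the data set.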

Embodiment 2

[0138] Figure 4 is a schematic structural diagram of a location embedding interpretation device provided by an embodiment of the present invention. The device implements the location embedding interpretation method of Embodiment 1 and includes a data acquisition module 410, a location embedding module 420, a category embedding module 430, and a semantic representation module 440.

[0139] The data acquisition module 410 is used to acquire an original data set and preprocess it. The preprocessed data set consists of multiple location data and multiple location category data, and each location data corresponds to one location category data.

[0140] The location embedding module 420 is used to obtain multiple locations corresponding to the multiple location data, wherein each location corresponds to at least one location data; according to the multiple location context sequences in the preprocesse...
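The excerpt of [0140] breaks off before explaining how the location context sequences are used. The sketch below rests on an assumption: it builds per-user, time-ordered location sequences and extracts windowed (target, context) pairs in the skip-gram style common to embedding methods. The record layout, function names, and `window` parameter are hypothetical and are not taken from the patent.

```python
from collections import defaultdict


def location_sequences(records):
    """Group (user_id, location_id, timestamp) records into per-user, time-ordered sequences."""
    by_user = defaultdict(list)
    for user_id, location_id, ts in records:
        by_user[user_id].append((ts, location_id))
    # Sort each user's visits by timestamp and keep only the location IDs.
    return {u: [loc for _, loc in sorted(visits)] for u, visits in by_user.items()}


def context_pairs(sequence, window=2):
    """Assumed skip-gram-style pairing: each location with its neighbours within `window` steps."""
    pairs = []
    for i, target in enumerate(sequence):
        lo = max(0, i - window)
        hi = min(len(sequence), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, sequence[j]))
    return pairs


seqs = location_sequences([("u1", "B", 2), ("u1", "A", 1), ("u2", "C", 5)])
print(seqs)
print(context_pairs(["A", "B", "C"], window=1))
```

A location that appears in several records (and so corresponds to more than one location data) simply appears at several positions across the sequences and collects context pairs from each occurrence.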

Embodiment 3

[0169] Figure 5 is a schematic structural diagram of a computer device provided by an embodiment of the present invention. As shown in Figure 5, the device includes a processor 510 and a memory 520. There may be one or more processors 510; Figure 5 takes one processor 510 as an example.

[0170] The memory 520, as a computer-readable storage medium, can be used to store software programs, computer-executable programs, and modules, such as the program instructions/modules corresponding to the location embedding interpretation method in the embodiments of the present invention. The processor 510 executes the software programs, instructions, and modules stored in the memory 520 to implement the location embedding interpretation method described above.

[0171] The memory 520 may mainly include a program storage area and a data storage area, wherein the program storage area may store an operating system and an application program required by at least one function; the data storage ar...
