Adaptive distributed parallel training method for neural network based on reinforcement learning

A reinforcement learning and neural network technology in the field of model-parallel training schemes. It addresses two problems: existing approaches parallelize along a single dimension, and they cannot guarantee other performance aspects of the resulting strategy. The method extends offline learning capability, speeds up training, and improves comprehensive performance.


Embodiment Construction

[0024] The present invention is further described below with reference to the accompanying drawings and specific implementation steps:

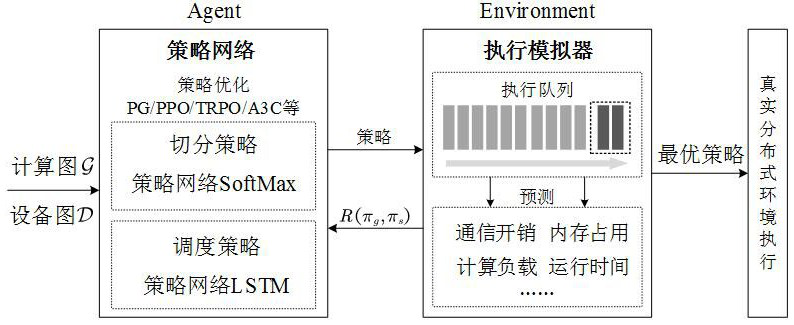

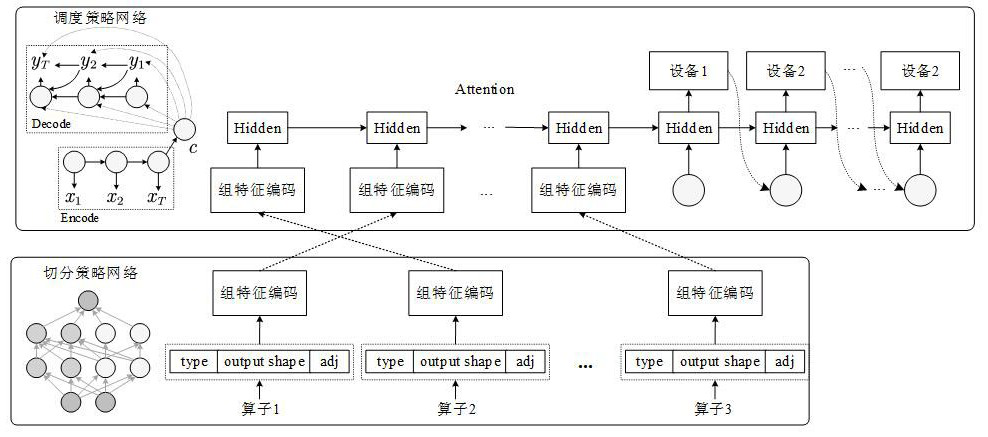

[0025] As shown in Figure 1, the reinforcement-learning-based adaptive distributed parallel training method for neural networks includes the following steps:

[0026] Step 1: Build a multidimensional performance evaluation model $R(\pi_g, \pi_s)$ to measure the comprehensive performance of a strategy. First, analyze the factors that affect the execution performance of the neural network, including the structure of the neural network model, its computational properties, and the cluster topology. Then extract performance factors such as the computation cost $E_i$, the communication cost $C_i$, and the memory usage $M_i$, which are defined as follows:

[0027] The computation cost is expressed by dividing the precision of the tensors involved in the operation by the calculation densi...
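The excerpt does not give the formula that combines $E_i$, $C_i$ and $M_i$ into $R(\pi_g, \pi_s)$. As a minimal sketch only, assuming the per-device factors have already been computed for a candidate strategy, one plausible way to fold them into a single scalar that a reinforcement learning agent can maximize is to take the slowest device's compute-plus-communication time and add a penalty for memory overflow. The names `DeviceCost`, `evaluate_strategy`, `memory_limit` and `penalty`, the "no compute/communication overlap" assumption, and the linear penalty are all hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DeviceCost:
    """Hypothetical per-device performance factors for one candidate strategy."""
    compute: float        # E_i: computation cost on device i
    communication: float  # C_i: communication cost on device i
    memory: float         # M_i: memory usage on device i


def evaluate_strategy(costs: Sequence[DeviceCost], memory_limit: float,
                      penalty: float = 10.0) -> float:
    """Hypothetical stand-in for R(pi_g, pi_s); higher is better.

    Assumes compute and communication do not overlap, so each device's time is
    E_i + C_i, and one training step is bounded by the slowest device.
    """
    if not costs:
        raise ValueError("at least one device is required")
    step_time = max(c.compute + c.communication for c in costs)
    # Total memory usage above the (assumed) per-device limit, summed over devices.
    overflow = sum(max(0.0, c.memory - memory_limit) for c in costs)
    # Negate so that faster, memory-feasible strategies receive a larger reward.
    return -(step_time + penalty * overflow)
```

For example, with two devices `DeviceCost(3.0, 1.0, 6.0)` and `DeviceCost(2.0, 2.5, 9.0)` and `memory_limit=8.0`, the step time is 4.5, the overflow is 1.0, and the score is -14.5. Raising the limit to 10.0 removes the penalty and gives -4.5. A weighted sum or a learned combination would be equally valid choices; the max-over-devices form is used here only because it reflects that the slowest device gates a synchronous step.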

