Complex component point cloud splicing method and system based on feature fusion

A point cloud splicing technology based on feature fusion, applied in the field of computer vision. It addresses problems such as the inability to accurately estimate the relative pose transformation of local point clouds, the inability to meet the application requirements of high-precision three-dimensional measurement, and the inability to fit non-rigid transformations. It achieves improved accuracy, good feature expression ability, and good noise robustness.


Examples

Embodiment 1

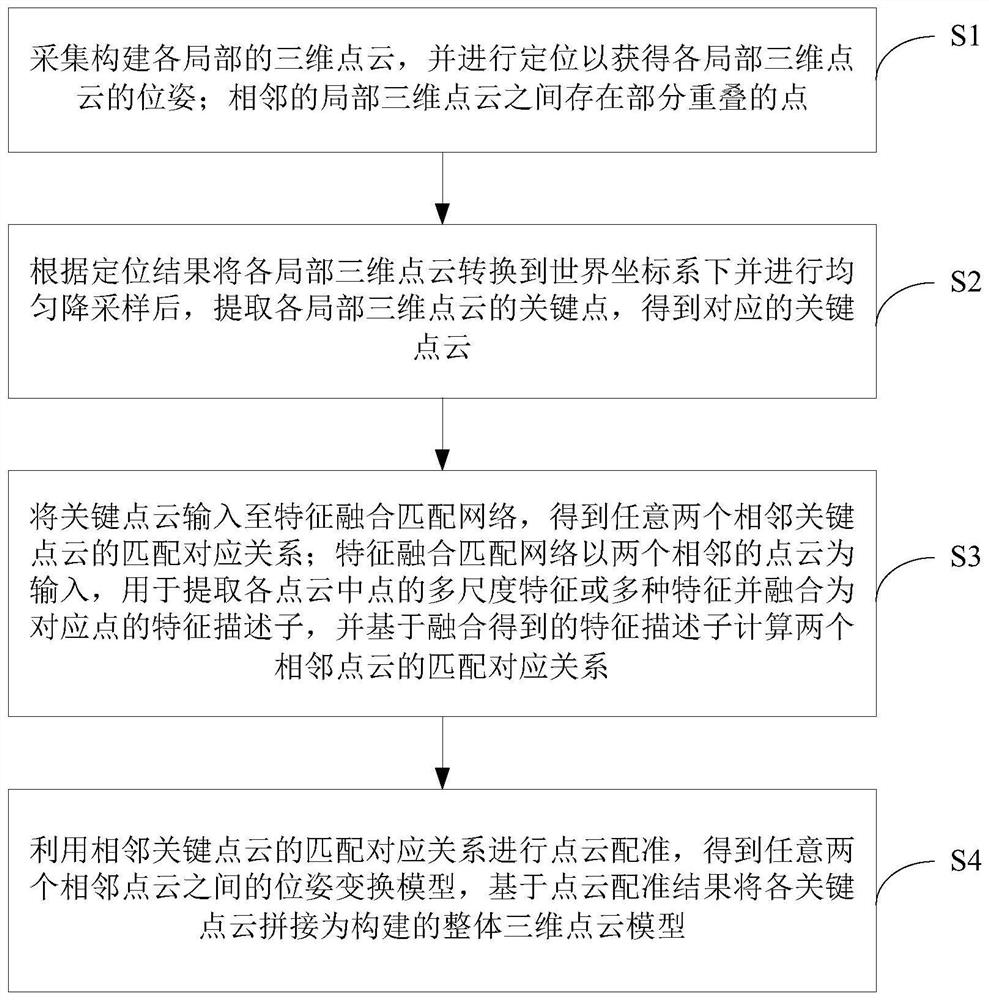

[0070] A complex component point cloud stitching method based on feature fusion, as shown in Figure 1, includes the following steps:

[0071] (S1) Collect a 3D point cloud of each part of the component and perform positioning to obtain the pose of each local 3D point cloud; adjacent local 3D point clouds partially overlap;

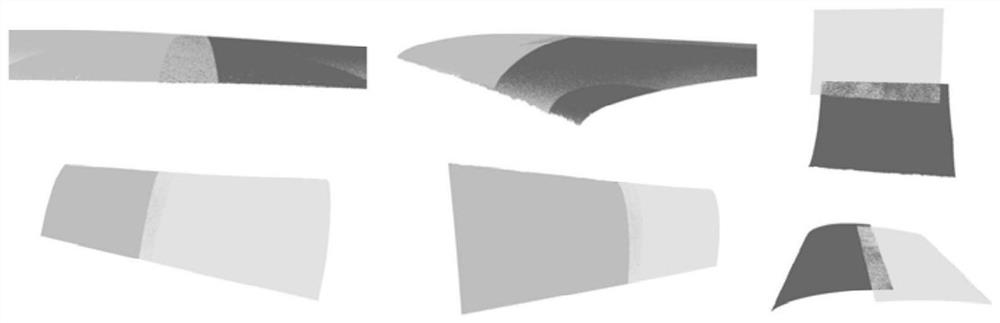

[0072] Figure 2 shows 6 pairs of adjacent local 3D point clouds; the two point clouds in each pair overlap;

[0073] In practical applications, each local 3D point cloud can be acquired with a 3D scanner, and the center of each point cloud can be located with a multi-sensor target positioning method, which yields the pose of each local 3D point cloud in the world coordinate system;

[0074] During positioning, local 3D point clouds that are spatially adjacent are also adjacent in the positioning order. ...

Embodiment 2

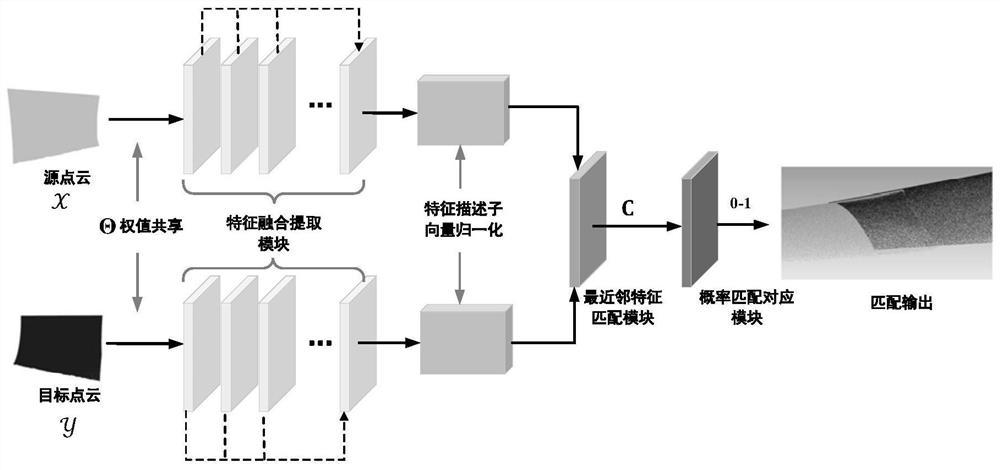

[0149] A method for splicing point clouds of complex components based on feature fusion. This embodiment is similar to Embodiment 1 above; the difference is that in step (S3) of this embodiment, the feature fusion matching network takes two adjacent point clouds as input, extracts multiple kinds of features for the points in each point cloud, fuses them into a feature descriptor for each point, and computes the matching correspondence between the two adjacent point clouds from the fused feature descriptors. The features extracted by the feature fusion matching network can be FPFH, SHOT, Super4PCS, etc.;
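The fuse-then-match idea in this paragraph can be illustrated with a much simpler stand-in than the patent's learned network. The sketch below assumes the per-point descriptors (for example FPFH and SHOT arrays) have already been computed elsewhere; it fuses them by L2-normalising and concatenating, then matches points by mutual nearest neighbour in the fused space. The function names `fuse_descriptors` and `mutual_nearest_matches` are hypothetical, and concatenation is an assumed fusion rule, not the one the patent describes.

```python
import numpy as np

def fuse_descriptors(descriptor_sets):
    """L2-normalise each (N, d_i) descriptor array and concatenate them per point.

    Assumption: simple concatenation stands in for the learned fusion network.
    """
    normalised = []
    for desc in descriptor_sets:
        desc = np.asarray(desc, dtype=float)
        norms = np.linalg.norm(desc, axis=1, keepdims=True)
        normalised.append(desc / np.maximum(norms, 1e-12))
    return np.concatenate(normalised, axis=1)

def mutual_nearest_matches(fused_a, fused_b):
    """Return (i, j) index pairs where a[i] and b[j] are each other's nearest neighbour."""
    # Pairwise Euclidean distances, shape (Na, Nb).
    dists = np.linalg.norm(fused_a[:, None, :] - fused_b[None, :, :], axis=2)
    nearest_b = dists.argmin(axis=1)  # best match in B for each point of A
    nearest_a = dists.argmin(axis=0)  # best match in A for each point of B
    idx_a = np.arange(fused_a.shape[0])
    keep = nearest_a[nearest_b] == idx_a  # keep only mutually consistent pairs
    return np.stack([idx_a[keep], nearest_b[keep]], axis=1)
```

The mutual check discards one-sided matches, which is a common cheap filter before any pose estimation; a learned network would normally replace both the fusion and the matching step.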

[0150] Structurally, the feature fusion matching network in this embodiment is also similar to that of Embodiment 1 above. The difference is that in this embodiment, the first feature fusion extraction module in the feature fusion matching network is used...

Embodiment 3

[0157] A complex component point cloud stitching system based on feature fusion, including: a data acquisition module, a preprocessing module, a feature fusion matching module, and a registration stitching module;

[0158] The data acquisition module is used to collect a 3D point cloud of each part of the component and perform positioning to obtain the pose of each local 3D point cloud; adjacent local 3D point clouds partially overlap;

[0159] The preprocessing module is used to convert each local 3D point cloud to the world coordinate system according to the positioning result and perform uniform downsampling, extract the key points of each local 3D point cloud, and obtain the corresponding key point cloud;
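As a rough illustration of the first two preprocessing operations, the sketch below moves a local point cloud into the world frame with a rigid pose and then thins it on a voxel grid. It assumes the positioning result is available as a 3x3 rotation matrix and a translation vector, and it assumes voxel-grid averaging as one common reading of "uniform downsampling"; the excerpt does not specify either. The names `to_world` and `voxel_downsample` are hypothetical, and key point extraction is not shown.

```python
import numpy as np

def to_world(points, rotation, translation):
    """Apply a rigid pose to an (N, 3) array: p_world = R @ p + t for each point."""
    return points @ rotation.T + translation

def voxel_downsample(points, voxel_size):
    """Replace all points in each occupied voxel by their mean.

    Assumption: voxel-grid averaging stands in for the patent's uniform downsampling.
    """
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(
        keys, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)  # flatten for consistency across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)  # accumulate point coordinates per voxel
    return sums / counts[:, None]
```

Transforming before downsampling means every local cloud is thinned on the same world-aligned grid, so overlapping regions end up with comparable point density.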

[0160] The feature fusion matching module is used to input the key point cloud into the feature fusion matching network to obtain the matching correspondence between any two adjacent key point clouds; the feature fusion matching network us...
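The description of the registration stitching module is cut off in this excerpt, so how the patent turns matching correspondences into a relative pose is not shown here. For orientation only, the sketch below uses the standard SVD-based (Kabsch) least-squares solution for a rigid transform between matched point pairs; this is a swapped-in textbook technique, not the patent's stated method, and `estimate_rigid_transform` is a hypothetical name.

```python
import numpy as np

def estimate_rigid_transform(source, target):
    """Least-squares rotation R and translation t with target ≈ source @ R.T + t.

    source, target: (N, 3) arrays of matched points, row i of one paired with
    row i of the other. Standard Kabsch solution, assumed here for illustration.
    """
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # Cross-covariance of the centred correspondences, shape (3, 3).
    covariance = (source - src_centroid).T @ (target - tgt_centroid)
    u, _, vt = np.linalg.svd(covariance)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = 1.0 if np.linalg.det(vt.T @ u.T) >= 0 else -1.0
    rotation = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    translation = tgt_centroid - rotation @ src_centroid
    return rotation, translation
```

In practice the correspondences would contain outliers, so a robust wrapper (for example RANSAC over subsets of matches) is usually placed around a solver like this.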
