Hand-eye calibration method based on deep learning

A hand-eye calibration method based on deep learning, applied in the field of hand-eye calibration. It addresses three problems of corner-point-based approaches: corner points cannot provide the position information of the calibration object, the process takes a long time, and it requires a large amount of computation. The method is intended to give a more robust result.

Embodiment Construction

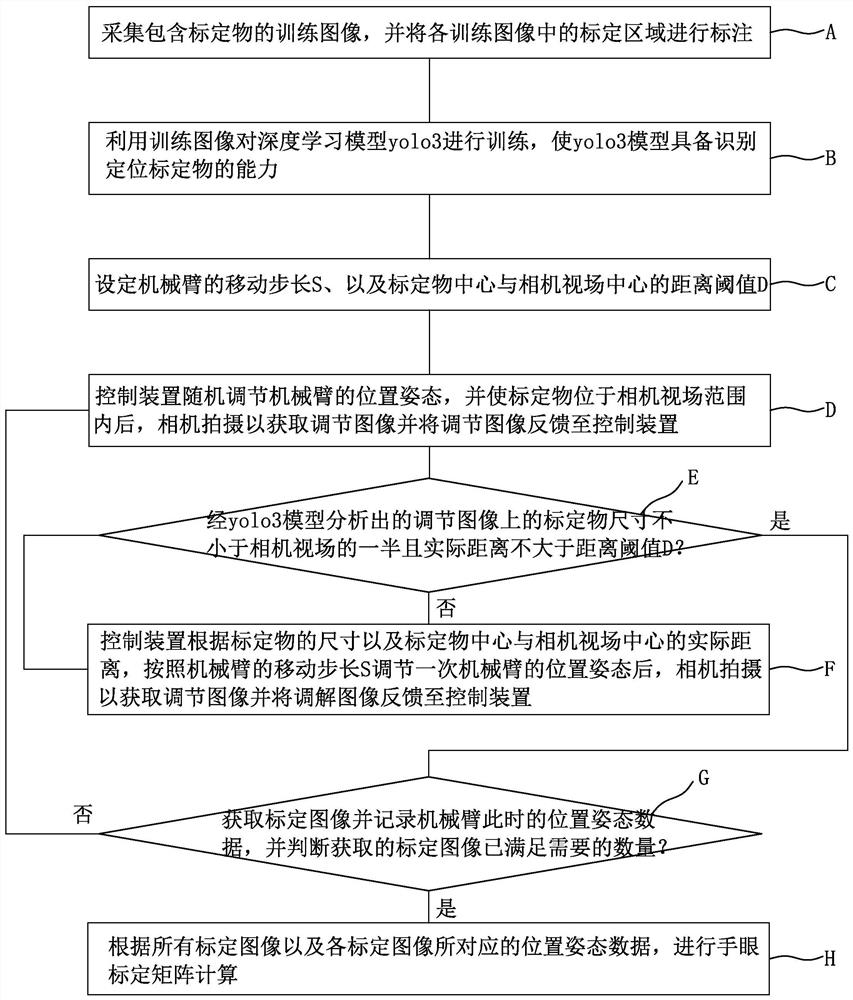

[0034] As shown in Figure 1, the hand-eye calibration method based on deep learning includes the following steps:

[0035] A. Collect training images containing the calibration object, and mark the calibration object region in each training image. Specifically: place the calibration object in different scenes and adjust its position so that it is captured from different viewing angles (for example, with the calibration object in the middle of the training image, or with only part of the calibration object inside the training image); change the camera model to capture the calibration object in different fields of view, so that a sufficient number of training images is obtained; and use an image labeling tool (such as the labelImg software) to mark the calibration object region in each training image. The calibration object can be any object bearing a special marker, which is prior art.

[0036] B. Use the training images to train the deep learning mode...
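
The labeling in step A produces one annotation file per training image. As a minimal sketch, assuming the labels are saved in PASCAL VOC XML (the default output format of labelImg), the Python below reads one annotation, clips each marked box to the image bounds, flags boxes that touch the image border as partly visible calibration objects (the "part of the calibration object is located in the training image" case), and reports the box center as a rough pixel position. The label name `calib_marker`, the `Box` class, the `parse_voc_annotation` helper, and the one-pixel border margin are hypothetical choices for illustration, not part of the patent.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class Box:
    """One marked calibration-object region (hypothetical structure)."""
    label: str
    xmin: int
    ymin: int
    xmax: int
    ymax: int
    partial: bool  # True if the box touches the image border (object only partly visible)

    @property
    def center(self):
        """Pixel center of the marked region, a rough position of the calibration object."""
        return ((self.xmin + self.xmax) / 2.0, (self.ymin + self.ymax) / 2.0)


def parse_voc_annotation(xml_text, margin=1):
    """Parse a PASCAL VOC XML string into (width, height, list of Box).

    `margin` is an assumed tolerance, in pixels, for deciding that a box
    touches the image border.
    """
    root = ET.fromstring(xml_text.strip())
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        # Clip to the image so boxes of partly visible objects stay inside it.
        xmin = max(0, int(float(bb.findtext("xmin"))))
        ymin = max(0, int(float(bb.findtext("ymin"))))
        xmax = min(width, int(float(bb.findtext("xmax"))))
        ymax = min(height, int(float(bb.findtext("ymax"))))
        partial = (xmin <= margin or ymin <= margin
                   or xmax >= width - margin or ymax >= height - margin)
        boxes.append(Box(obj.findtext("name"), xmin, ymin, xmax, ymax, partial))
    return width, height, boxes


# Hypothetical annotation: one fully visible marker, one cut off at the right edge.
SAMPLE_XML = """
<annotation>
  <filename>img_0001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object><name>calib_marker</name>
    <bndbox><xmin>200</xmin><ymin>150</ymin><xmax>300</xmax><ymax>250</ymax></bndbox>
  </object>
  <object><name>calib_marker</name>
    <bndbox><xmin>600</xmin><ymin>100</ymin><xmax>700</xmax><ymax>180</ymax></bndbox>
  </object>
</annotation>
"""

if __name__ == "__main__":
    w, h, boxes = parse_voc_annotation(SAMPLE_XML)
    for b in boxes:
        print(b.label, b.center, "partial" if b.partial else "full")
```

Flagging partial boxes lets a training set be checked for the mix of fully and partly visible calibration objects that step A asks for; whether the patented method uses such a check is not stated in the source.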
