Real-time video recognition accelerator architecture based on key object splicing

An accelerator and object technology applied in the field of neural networks. It addresses three problems: recognition accuracy is hard to guarantee, recognition speed is hard to improve, and processing takes a long time. Its effects are less redundant computation, faster processing, higher recognition accuracy, and a lower computational workload.
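One way to see why stitching key objects can save computation is to compare pixel counts. For a convolutional network, the cost of most layers scales roughly with input area. The Python sketch below is a minimal illustration and is not the patent's method. It assumes that "stitching" means cropping the key-object regions out of a frame and packing them onto one smaller canvas. The `Box` type, the `shelf_pack` function, and the shelf-packing heuristic (tallest boxes first, new row on overflow) are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Box:
    """A key-object region in frame pixels (hypothetical format)."""
    x: int
    y: int
    w: int
    h: int


def shelf_pack(boxes: List[Box], canvas_width: int) -> Tuple[List[Tuple[int, int]], int]:
    """Pack boxes onto horizontal shelves of a fixed-width canvas.

    Returns (placements, canvas_height); placements[i] is the top-left
    (cx, cy) of boxes[i] on the stitched canvas. Tallest boxes go first,
    and a new shelf starts whenever the next box would overflow the row.
    """
    order = sorted(range(len(boxes)), key=lambda i: boxes[i].h, reverse=True)
    placements: List[Optional[Tuple[int, int]]] = [None] * len(boxes)
    shelf_y = shelf_h = cursor_x = 0
    for i in order:
        b = boxes[i]
        if b.w > canvas_width:
            raise ValueError(f"box {i} is wider than the canvas")
        if cursor_x + b.w > canvas_width:
            shelf_y += shelf_h          # move below the current shelf
            shelf_h = cursor_x = 0
        placements[i] = (cursor_x, shelf_y)
        cursor_x += b.w
        shelf_h = max(shelf_h, b.h)
    return placements, shelf_y + shelf_h


if __name__ == "__main__":
    frame_w, frame_h = 1920, 1080
    boxes = [Box(100, 200, 320, 240), Box(800, 100, 256, 256), Box(1500, 600, 200, 300)]
    placements, canvas_h = shelf_pack(boxes, canvas_width=640)
    ratio = 640 * canvas_h / (frame_w * frame_h)
    print(placements, canvas_h, round(ratio, 3))
    # prints: [(0, 300), (200, 0), (0, 0)] 540 0.167
```

In this made-up example, three key objects in a 1920×1080 frame fit on a 640×540 canvas. That canvas holds one sixth of the full frame's pixels. The real saving would depend on how many key objects a frame contains and on how the actual architecture packs them.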



Examples

Embodiment 1

[0062] In order to solve the technical problems existing in the prior art, an embodiment of the present invention provides a real-time video recognition accelerator architecture based on key object stitching.

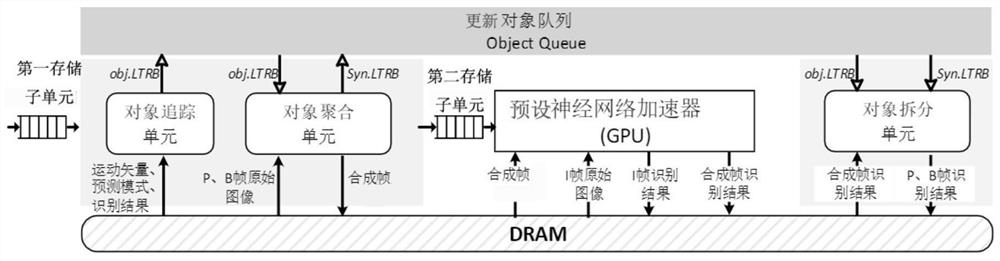

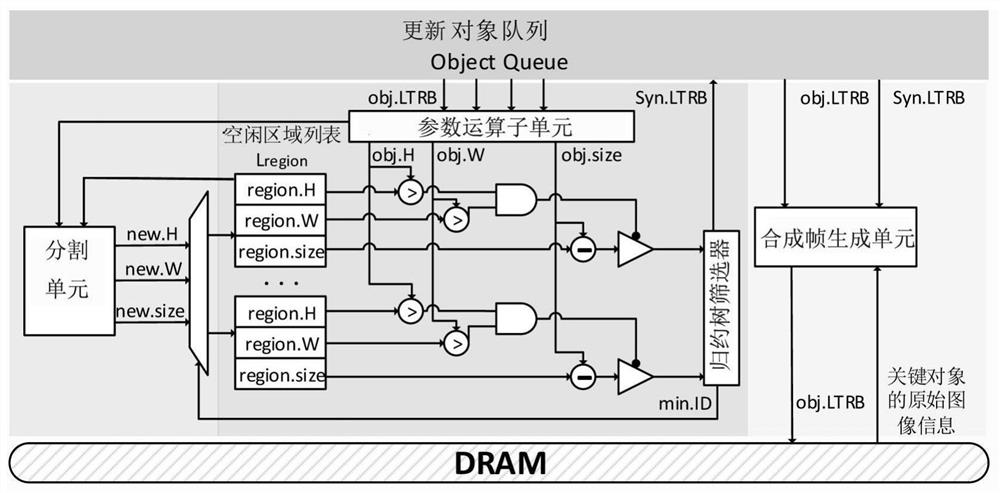

[0063] Figure 1 shows a schematic structural diagram of a real-time video recognition accelerator architecture based on key object stitching according to an embodiment of the present invention. Referring to Figure 1, the architecture includes an object tracking module, an object aggregation module, an object splitting module, a preset neural network accelerator, an update object queue module, and a main memory module. The object tracking module, the object aggregation module, the object splitting module, and the preset neural network accelerator are each connected to the update object queue module and to the main memory module, and the main memory...
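The paragraph names the modules and how they connect, but it is cut off before it describes what each module does. The Python sketch below wires together hypothetical stand-ins for those modules in a loosely similar dataflow. It is an illustration under stated assumptions, not the patent's design. Every element of it is our own assumption: the names `track`, `aggregate`, `split`, `run_frame`, and `MainMemory`; the greedy IoU tracker with a 0.5 threshold; the side-by-side stitching; the point-style detections; and the `accelerator` callable standing in for the preset neural network accelerator. A `deque` plays the role of the update object queue, and a dict plays the role of main memory.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List, Tuple

Box = Tuple[int, int, int, int]      # (x, y, w, h) in frame pixels
Det = Tuple[int, int, str]           # (x, y, label) point detection
Update = Tuple[int, int, int, str]   # (obj_id, frame_x, frame_y, label)


@dataclass
class KeyObject:
    obj_id: int
    box: Box


@dataclass
class Slot:
    obj: KeyObject
    offset_x: int  # left edge of this object's crop on the stitched canvas


@dataclass
class MainMemory:
    """Hypothetical stand-in for the main memory module."""
    results: Dict[int, List[Det]] = field(default_factory=dict)


def iou(a: Box, b: Box) -> float:
    iw = max(0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union else 0.0


def track(prev: List[KeyObject], boxes: List[Box], next_id: int,
          thr: float = 0.5) -> Tuple[List[KeyObject], int]:
    """Tracking stand-in: keep an id when IoU with a previous object > thr."""
    objs: List[KeyObject] = []
    used = set()
    for b in boxes:
        best = max((p for p in prev if p.obj_id not in used),
                   key=lambda p: iou(p.box, b), default=None)
        if best is not None and iou(best.box, b) > thr:
            objs.append(KeyObject(best.obj_id, b))
            used.add(best.obj_id)
        else:
            objs.append(KeyObject(next_id, b))
            next_id += 1
    return objs, next_id


def aggregate(objs: List[KeyObject]) -> Tuple[List[Slot], int, int]:
    """Aggregation stand-in: place crops side by side on one canvas."""
    slots, x = [], 0
    for o in objs:
        slots.append(Slot(o, x))
        x += o.box[2]
    return slots, x, max((o.box[3] for o in objs), default=0)


def split(slots: List[Slot], dets: List[Det]) -> List[Update]:
    """Splitting stand-in: map canvas-space detections back to frame space."""
    out = []
    for cx, cy, label in dets:
        for s in slots:
            x, y, w, h = s.obj.box
            if s.offset_x <= cx < s.offset_x + w and 0 <= cy < h:
                out.append((s.obj.obj_id, x + cx - s.offset_x, y + cy, label))
                break
    return out


def run_frame(objs: List[KeyObject], accelerator: Callable[[int, int], List[Det]],
              queue: Deque[Update], memory: MainMemory) -> None:
    slots, w, h = aggregate(objs)
    queue.extend(split(slots, accelerator(w, h)))  # one pass over the canvas
    while queue:  # drain the update queue into main memory
        obj_id, fx, fy, label = queue.popleft()
        memory.results.setdefault(obj_id, []).append((fx, fy, label))


if __name__ == "__main__":
    objs, next_id = track([], [(100, 200, 100, 50), (500, 50, 40, 80)], 0)
    fake_accel = lambda w, h: [(10, 5, "car"), (110, 20, "person")]  # hypothetical
    mem = MainMemory()
    run_frame(objs, fake_accel, deque(), mem)
    print(mem.results)
```

With the fake accelerator, the demo prints `{0: [(110, 205, 'car')], 1: [(510, 70, 'person')]}`: each canvas-space detection is shifted back to its object's position in the frame. The sketch drains the queue right after each frame for simplicity. A hardware design would more likely let the queue decouple the accelerator from memory writes.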
