Indoor navigation method based on augmented reality

An augmented reality indoor navigation technology, applied in the field of augmented-reality-based indoor navigation. It addresses problems such as the poor accuracy of signal-strength-based indoor positioning and navigation and the negative impact on aesthetics, and achieves high accuracy, strong fault tolerance, and fast speed.


Embodiment Construction

[0019] The present invention will be described in further detail below in conjunction with the accompanying drawings.

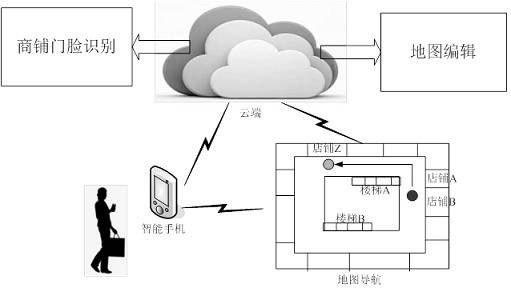

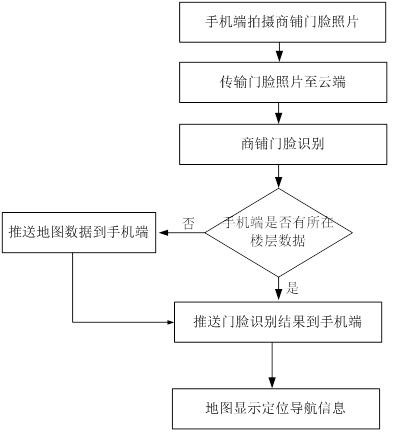

[0020] As shown in Figure 1, the present invention defines and edits the shopping mall map through a cloud-side map editing function, and provides users with indoor positioning and navigation through a cloud-side storefront (shop face) recognition function. The present invention has the following characteristics:

[0021] (1) The cloud map editing function is the basis of the positioning and navigation functions and is responsible for defining and editing shopping mall maps. A fully enclosed area in the map is a no-passing area; shops, stairs, toilets, and the like are all fully enclosed areas. Each fully enclosed area can be assigned its own identifier for positioning and navigation. The fully connected area is the area users can pass through, and positioning and navigation can only be performed in the fully connected area outside the ...
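The map model in (1) can be illustrated with a minimal sketch. It assumes that one floor is represented as a grid, treats every cell covered by a fully enclosed area as impassable, and plans routes with breadth-first search over the remaining cells. The grid, the entrance-cell anchors, the use of BFS, and the names `MallMap`, `add_enclosed_area`, `locate`, and `route` are all hypothetical illustrations, not the data structures or algorithm of the patent.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]  # (x, y) grid coordinates


class MallMap:
    """Hypothetical grid model of one mall floor.

    Cells covered by a fully enclosed area (shop, stairs, toilet, ...) are
    no-passing cells; every other in-bounds cell belongs to the passable area.
    """

    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        self.blocked: Set[Cell] = set()
        # Identifier of each enclosed area -> passable cell in front of its
        # entrance (an assumption; the source only says each area has an identifier).
        self.anchors: Dict[str, Cell] = {}

    def add_enclosed_area(self, area_id: str, cells: List[Cell], entrance: Cell) -> None:
        self.blocked.update(cells)
        self.anchors[area_id] = entrance

    def passable(self, cell: Cell) -> bool:
        x, y = cell
        return 0 <= x < self.width and 0 <= y < self.height and cell not in self.blocked

    def locate(self, recognized_id: str) -> Cell:
        # Positioning sketch (assumption): once a storefront is recognized,
        # place the user at that shop's entrance cell.
        return self.anchors[recognized_id]

    def route(self, start: Cell, goal: Cell) -> Optional[List[Cell]]:
        """Shortest 4-connected path through passable cells, found by BFS."""
        if not (self.passable(start) and self.passable(goal)):
            return None
        prev: Dict[Cell, Optional[Cell]] = {start: None}
        queue = deque([start])
        while queue:
            cur = queue.popleft()
            if cur == goal:
                # Walk the predecessor links back to the start.
                path: List[Cell] = []
                node: Optional[Cell] = cur
                while node is not None:
                    path.append(node)
                    node = prev[node]
                return path[::-1]
            x, y = cur
            for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if self.passable(nxt) and nxt not in prev:
                    prev[nxt] = cur
                    queue.append(nxt)
        return None  # goal is not reachable within the passable area


# Usage: a 5 x 3 floor with a shop, stairs, and a toilet (hypothetical layout).
mall = MallMap(5, 3)
mall.add_enclosed_area("shop_A", [(0, 0), (1, 0)], entrance=(0, 1))
mall.add_enclosed_area("stairs", [(2, 0), (2, 1)], entrance=(2, 2))
mall.add_enclosed_area("toilet", [(3, 0), (4, 0)], entrance=(4, 1))
path = mall.route(mall.locate("shop_A"), mall.anchors["toilet"])
```

Because each enclosed area carries its own identifier, a recognized storefront can be turned into a start position with a simple lookup, and routes never enter enclosed areas. In this layout the only passable cell in column 2 is (2, 2), so every route from shop_A to the toilet must detour through it. A production system would more likely use polygon outlines and a weighted navigation graph than a uniform grid.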

