Method for projecting notes in real time based on book position

Book and projection technology, applied in the field of projection display, which can solve problems such as the difficulty of note-taking and projection.


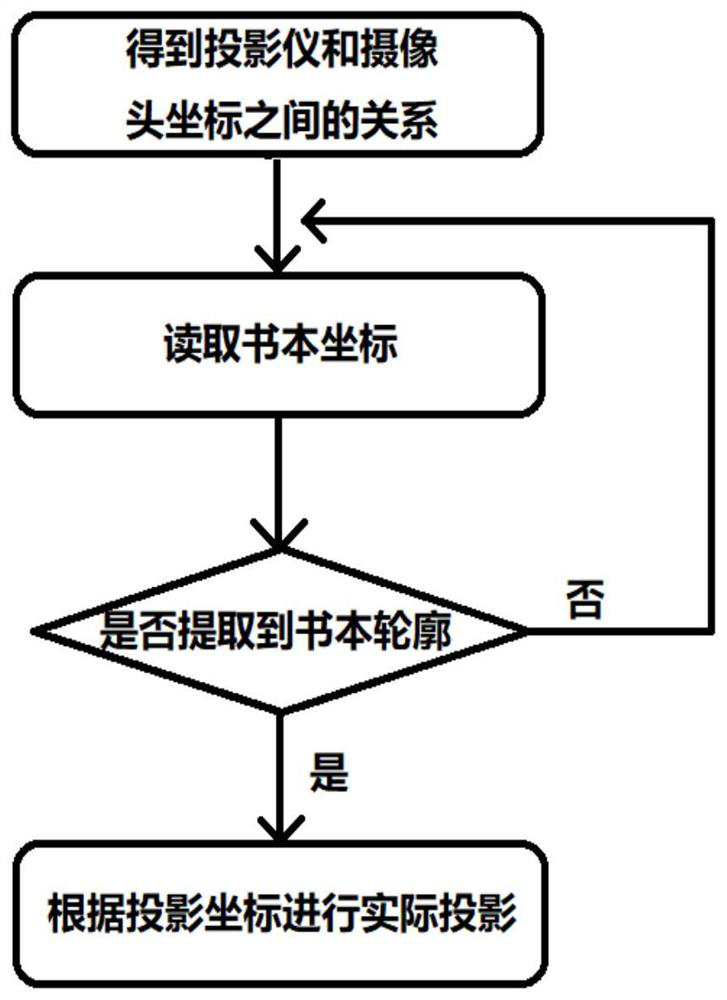


Examples

Embodiment 2

[0125] For reading the book coordinates in step 2, the outline of the book can alternatively be extracted as follows:

[0126] 1) Use the camera to take a picture containing a complete book page.

[0127] 2) Use Photoshop to sample 5 to 7 points of representative color within the book page in the picture, read their RGB values, and determine the RGB range of the page color from these values.

[0128] 3) Using the RGB range from the previous step, apply the inRange function of the CV2 (OpenCV) library to the image captured by the camera to extract the pixels within that range, producing a binary image.

[0129] 4) Apply a Gaussian blur to the binary image.

[0130] 5) Then continue from step (26); the subsequent steps are unchanged.
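Steps 2) and 3) can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the patent's implementation. The sampled colors are taken as (R, G, B) tuples. The range is assumed to be their per-channel minimum and maximum widened by a hypothetical `margin`, since the patent does not say how the range is derived. `in_range` is a hypothetical helper that reproduces the inclusive per-channel test performed by `cv2.inRange`.

```python
# Sketch of Embodiment 2, steps 2) and 3), using only the standard library.
# Assumptions (not from the patent): colours are (R, G, B) tuples, images are
# nested lists of such tuples, and the hypothetical `margin` pads the sampled
# min/max so that slightly different page pixels are still accepted.

from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def rgb_range(samples: Sequence[Pixel], margin: int = 10) -> Tuple[Pixel, Pixel]:
    """Per-channel (lower, upper) bounds covering all samples, padded and clamped to 0..255."""
    if not samples:
        raise ValueError("need at least one sampled colour")
    lower = tuple(max(0, min(p[c] for p in samples) - margin) for c in range(3))
    upper = tuple(min(255, max(p[c] for p in samples) + margin) for c in range(3))
    return lower, upper


def in_range(image: List[List[Pixel]], lower: Pixel, upper: Pixel) -> List[List[int]]:
    """Binary mask like cv2.inRange: 255 where every channel is within [lower, upper], else 0."""
    return [
        [255 if all(lower[c] <= px[c] <= upper[c] for c in range(3)) else 0 for px in row]
        for row in image
    ]
```

With OpenCV itself, the equivalent calls would be `cv2.inRange(frame, np.array(lower), np.array(upper))` followed by `cv2.GaussianBlur(mask, (5, 5), 0)` for step 4). The 5 x 5 kernel is an assumption. Note that OpenCV loads images in BGR order, so the bounds would have to be given as (B, G, R).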

Embodiment 3

[0132] For reading the book coordinates in step 2, the coordinates of the book's four corner points can alternatively be calculated as follows:

[0133] 1) Examine the polygon obtained in step (27). If it has exactly 4 corner points (that is, a quadrilateral has been extracted), the outline of the book is considered to have been extracted, and the coordinates of the polygon's four corner points are recorded as (x1, y1), (x2, y2), (x3, y3), (x4, y4).

[0134] 2) Substitute the (x1, y1), (x2, y2), (x3, y3), (x4, y4) obtained in the previous step into step (32); the subsequent steps are unchanged.
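The quadrilateral check can be sketched as below. This is a minimal illustration, not the patent's code. One assumption goes beyond the text: the patent does not say in which order the corners are passed to step (32), so the sketch sorts them into a fixed order (top-left, top-right, bottom-right, bottom-left) using a common sum/difference heuristic. The function name `book_corners` is hypothetical.

```python
# Sketch of Embodiment 3: accept the polygon from step (27) only if it is a
# quadrilateral, then return its corners as (x1, y1) ... (x4, y4).
# Assumption (not from the patent): corners are re-ordered TL, TR, BR, BL in
# image coordinates (y grows downward) so step (32) receives a fixed order.

from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def book_corners(polygon: Sequence[Point]) -> Optional[List[Point]]:
    """Return the four corners in TL, TR, BR, BL order, or None if not a quadrilateral."""
    if len(polygon) != 4:
        return None  # book outline not extracted from this frame
    # Top-left has the smallest x + y; bottom-right has the largest.
    by_sum = sorted(polygon, key=lambda p: p[0] + p[1])
    # Top-right has the smallest y - x; bottom-left has the largest.
    by_diff = sorted(polygon, key=lambda p: p[1] - p[0])
    return [by_sum[0], by_diff[0], by_sum[-1], by_diff[-1]]
```

The heuristic assumes the book is not rotated close to 45 degrees in the camera image. If step (27) uses `cv2.approxPolyDP` (also an assumption), its output has shape (N, 1, 2) and can be flattened with `reshape(-1, 2)` before being passed in.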

Embodiment 4

[0136] To obtain the relationship between the projector and camera coordinates in step 1, the following changes are made:

[0137] (1) Place a square piece of cardboard with a side length of 10 cm on the base (hereinafter referred to as the paper sheet). The paper sheet provides an accurate reference for the subsequent calibration.

[0138] (2) Use the camera to capture an image containing the square paper sheet.

[0139] (3) Based on the projection size of the projector, generate a black picture that exactly fills the projector's screen and contains a red square at an arbitrary position (hereinafter referred to as the red square). The center of the red square is located at (α0, β0). Because camera distortion is taken into account, the width and height of the red square are not necessarily equal; they are denoted W0 and H0. This picture, named src, is projected by the projector.

[0140] (4) Mark the center point of the paper in the image acquired by the camera and obtain the pixel c...
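Generating the src picture of step (3) can be sketched as follows. This is an illustration only. It assumes the picture is a nested list of (R, G, B) tuples. It also assumes the box is placed so that (α0, β0) is its center, using integer halving for odd sizes, and that any part falling outside the frame is clipped. The patent specifies none of these details. `square_bounds` and `make_src` are hypothetical names.

```python
# Sketch of Embodiment 4, step (3): build the calibration picture `src`, a
# black frame the size of the projector screen with one red box of width W0
# and height H0 centred at (alpha0, beta0).
# Assumptions (not from the patent): (R, G, B) pixels in a nested list,
# integer halving for odd sizes, and clipping of any part outside the frame.

from typing import List, Tuple

Pixel = Tuple[int, int, int]
BLACK: Pixel = (0, 0, 0)
RED: Pixel = (255, 0, 0)


def square_bounds(alpha0: int, beta0: int, w0: int, h0: int) -> Tuple[int, int, int, int]:
    """Left, top, right (exclusive), bottom (exclusive) of a w0 x h0 box centred at (alpha0, beta0)."""
    left = alpha0 - w0 // 2
    top = beta0 - h0 // 2
    return left, top, left + w0, top + h0


def make_src(width: int, height: int, alpha0: int, beta0: int, w0: int, h0: int) -> List[List[Pixel]]:
    """Black width x height picture with the red box drawn in; pixels outside the frame are dropped."""
    left, top, right, bottom = square_bounds(alpha0, beta0, w0, h0)
    return [
        [RED if left <= x < right and top <= y < bottom else BLACK for x in range(width)]
        for y in range(height)
    ]
```

In OpenCV, the same frame could be drawn with `cv2.rectangle` (thickness `-1` to fill) on a zero-filled NumPy array. Remember that OpenCV colors are BGR, so red is `(0, 0, 255)`.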
