An intelligent human-computer interaction method and device based on graphic-text matching

An image-text matching technique for human-computer interaction, applied in the field of computer vision. It addresses the limitations and low efficiency of existing human-computer interaction, and aims for simple and efficient operation, efficient extraction, and an accurate and reliable correlation matching algorithm.

Embodiment 1

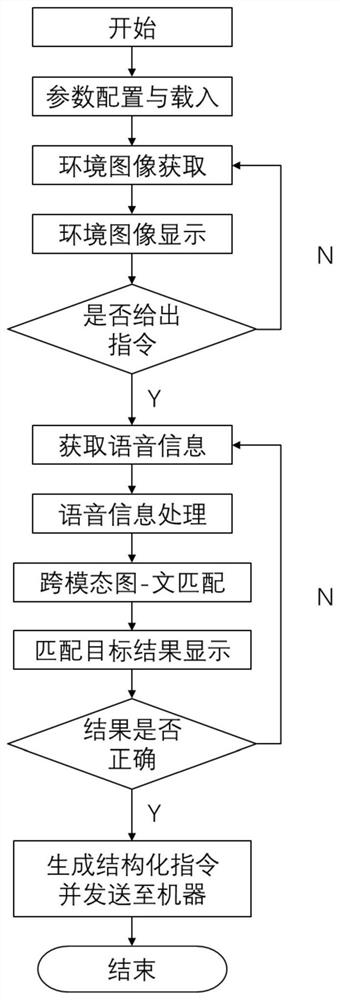

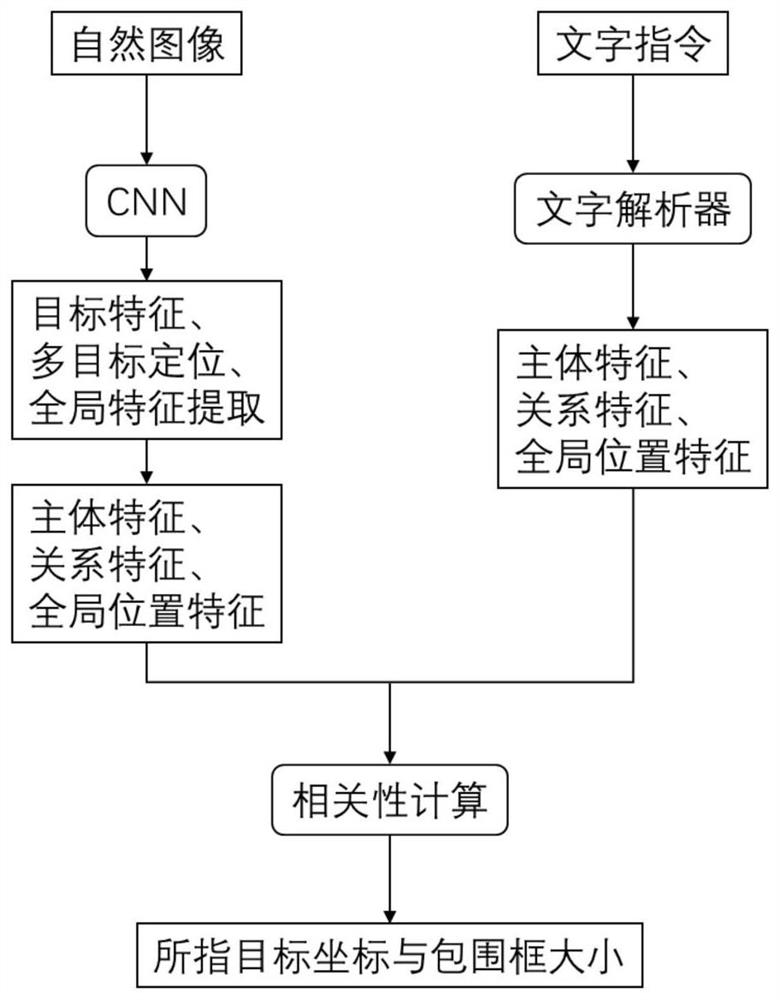

[0035] Referring to Figure 1, a block diagram is shown of an intelligent human-computer interaction method based on image-text matching provided by an embodiment of the present invention.

[0036] As shown in Figure 1, the method of this embodiment mainly includes the following steps:

[0037] S1, speech recognition: collect the user's speech information and convert it into a text sequence using a template-matching speech recognition algorithm. The template matching uses dynamic time warping for feature training and recognition, a hidden Markov model to build a statistical model of the time-series structure of the speech signal, and vector quantization for signal compression;
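The dynamic time warping part of the template matching in step S1 can be illustrated with a minimal sketch. This is not the patent's implementation: the function names `dtw_distance` and `recognize`, the Euclidean frame distance, and the toy one-dimensional feature vectors are all assumptions chosen for illustration, and the hidden Markov model and vector quantization stages are omitted.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping cost between two sequences of feature vectors.

    Each sequence is a list of equal-length lists of floats (hypothetical
    per-frame features). Euclidean distance between frames is an assumption.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Allowed moves: repeat a template frame, repeat an input frame, or advance both
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def recognize(features, templates):
    """Return the label of the stored template with the lowest DTW cost."""
    return min(templates, key=lambda label: dtw_distance(features, templates[label]))


# Toy templates (hypothetical): one feature sequence per word
templates = {
    "yes": [[0.0], [1.0], [2.0]],
    "no": [[2.0], [1.0], [0.0]],
}
# A slower utterance of the first template: same shape, stretched in time
query = [[0.0], [0.0], [1.0], [2.0], [2.0]]
best = recognize(query, templates)
```

Because the warping path may repeat frames, the stretched query aligns with the first template at zero cost even though the two sequences differ in length, which is the property that makes this kind of matching tolerant of speaking-rate variation.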

[0038] After the sound signal is collected with a microphone, it is converted into a digital signal. The signal is preprocessed by denoising, and then us...

Embodiment 2

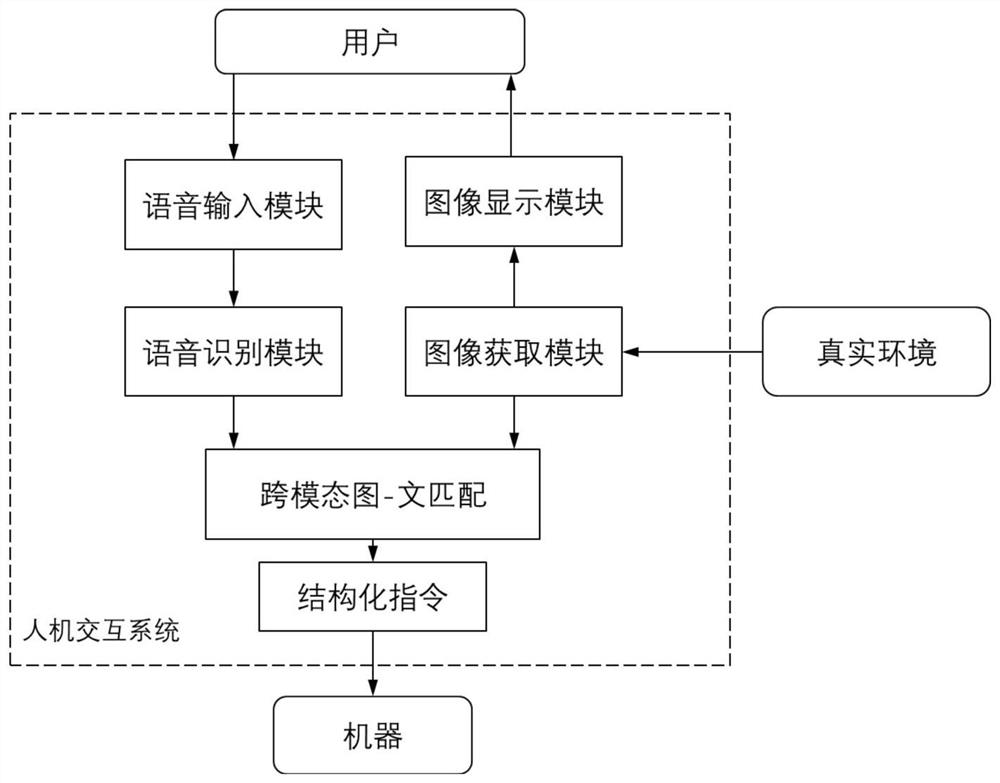

[0050] Furthermore, another embodiment of the present invention provides an intelligent human-computer interaction device based on image-text matching, as an implementation of the methods shown in the above embodiments. This device embodiment corresponds to the foregoing method embodiment. For ease of reading, it does not repeat the details of the method embodiment one by one, but it should be clear that the device in this embodiment can implement everything in the foregoing method embodiment. Figure 3 shows a block diagram of the composition of an intelligent human-computer interaction device based on image-text matching provided by an embodiment of the present invention. As shown in Figure 3, the device of this embodiment includes the following modules:

[0051] 1. Voice input module: used to collect voice information of users;

[0052] It includes a sound acquisition unit and a signal denoising unit; the ...
