A Method for 3D Object Point Cloud Recognition Based on Submanifold Sparse Convolution

A 3D object point cloud recognition method based on submanifold sparse convolution, applied in the fields of deep learning and 3D object detection and recognition. It addresses the problem of limited computing resources, makes the training process more stable, reduces training difficulty, and improves both time efficiency and recognition accuracy.


Embodiment Construction

[0041] The present invention is described in further detail below with reference to an embodiment:

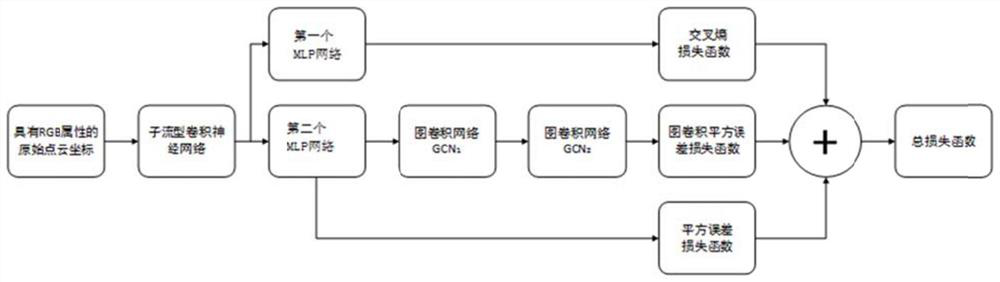

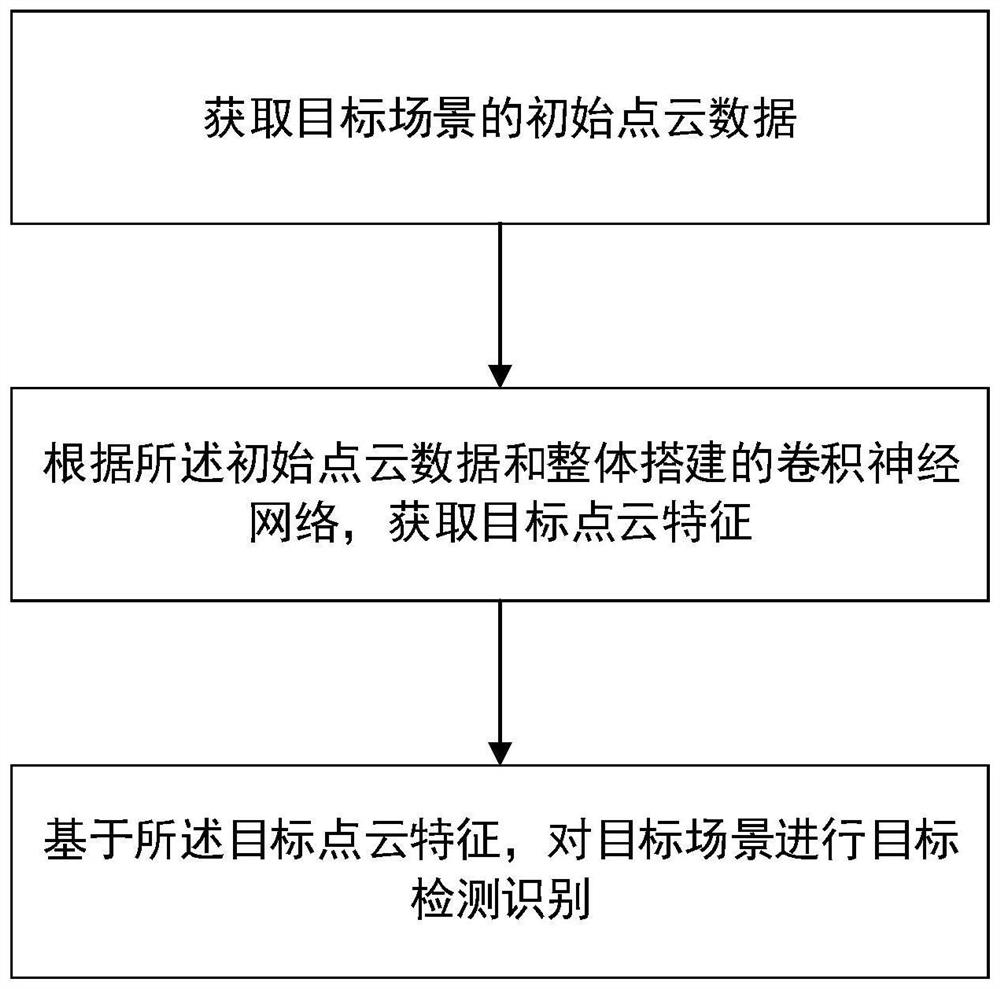

[0042] As shown in Figures 1 and 2, a method for 3D object point cloud recognition based on submanifold sparse convolution includes the following steps:

[0043] Step 1: Acquire the initial point cloud data of the target scene:

[0044] The target scene can be an outdoor scene or an indoor scene. The initial point cloud data of the target scene can be acquired with a depth camera or with other monocular or binocular imaging systems. Common depth cameras include Kinect and time-of-flight (ToF) cameras.
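The patent text does not say how a depth camera's output becomes a point cloud. One common approach, shown here only as a minimal sketch, is to back-project each depth pixel through a pinhole camera model. The function name `depth_to_points` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions, not taken from the patent.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (rows of depth values) into (x, y, z) points.

    Assumes a pinhole camera with focal lengths fx, fy and principal
    point (cx, cy); these parameters are hypothetical, not from the patent.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # zero or negative depth marks a missing measurement
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with two valid pixels.
depth = [[0.0, 2.0],
         [1.0, 0.0]]
points = depth_to_points(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(points)
```

Pixels without a valid depth reading are skipped, so the resulting point list is already sparse relative to the image grid.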

[0045] Step 2: Feed the initial point cloud data to the submanifold convolutional neural network and use submanifold sparse convolution to extract local features of the target point cloud;

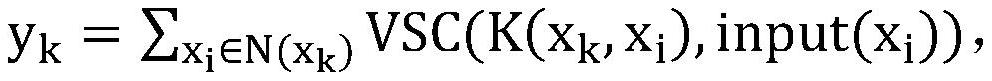

[0046] Point clouds are inherently sparse. When the submanifold convolutional ...
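To illustrate why sparsity matters for Step 2, the following is a minimal sketch of a submanifold sparse convolution, not the patent's network. It assumes the point cloud is first voxelized (the voxel size and the point-count feature are illustrative choices), uses a single feature channel, and applies a 3x3x3 kernel. The names `voxelize` and `submanifold_conv3d` are hypothetical. The defining property is that outputs are computed only at voxels that are already active, so the set of active sites does not grow from layer to layer.

```python
from itertools import product


def voxelize(points, voxel_size):
    """Map points to integer voxel coordinates; the feature is the point count."""
    voxels = {}
    for x, y, z in points:
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        voxels[key] = voxels.get(key, 0.0) + 1.0
    return voxels


def submanifold_conv3d(voxels, weights, bias=0.0):
    """Single-channel 3x3x3 convolution evaluated only at active voxels."""
    out = {}
    for (i, j, k) in voxels:  # output sites are exactly the active input sites
        acc = bias
        for di, dj, dk in product((-1, 0, 1), repeat=3):
            neighbour = voxels.get((i + di, j + dj, k + dk))
            if neighbour is not None:  # inactive neighbours contribute nothing
                acc += weights[(di, dj, dk)] * neighbour
        out[(i, j, k)] = acc
    return out


points = [(0.1, 0.1, 0.1), (0.2, 0.1, 0.1), (1.2, 0.1, 0.1), (5.0, 5.0, 5.0)]
voxels = voxelize(points, voxel_size=1.0)

# All-ones kernel, chosen only so the result is easy to check by hand.
weights = {offset: 1.0 for offset in product((-1, 0, 1), repeat=3)}
features = submanifold_conv3d(voxels, weights)
print(features)
```

Because the loop visits only occupied voxels, the cost scales with the number of active sites rather than with the full grid volume, and empty space never becomes active.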
