Gradient compression method for distributed DNN training in edge computing environment

A gradient compression method from the field of edge computing, applied to distributed DNN training, gradient compression, and adaptive sparse ternary gradient compression. It addresses the problems of poor communication efficiency and of optimizing model accuracy, and achieves the effect of reducing communication costs.



Embodiment Construction

[0047] The present invention is further illustrated below with reference to specific embodiments. It should be understood that these embodiments are only intended to illustrate the present invention and not to limit its scope. After reading the present invention, modifications in various equivalent forms made by those skilled in the art all fall within the scope defined by the appended claims of the present application.

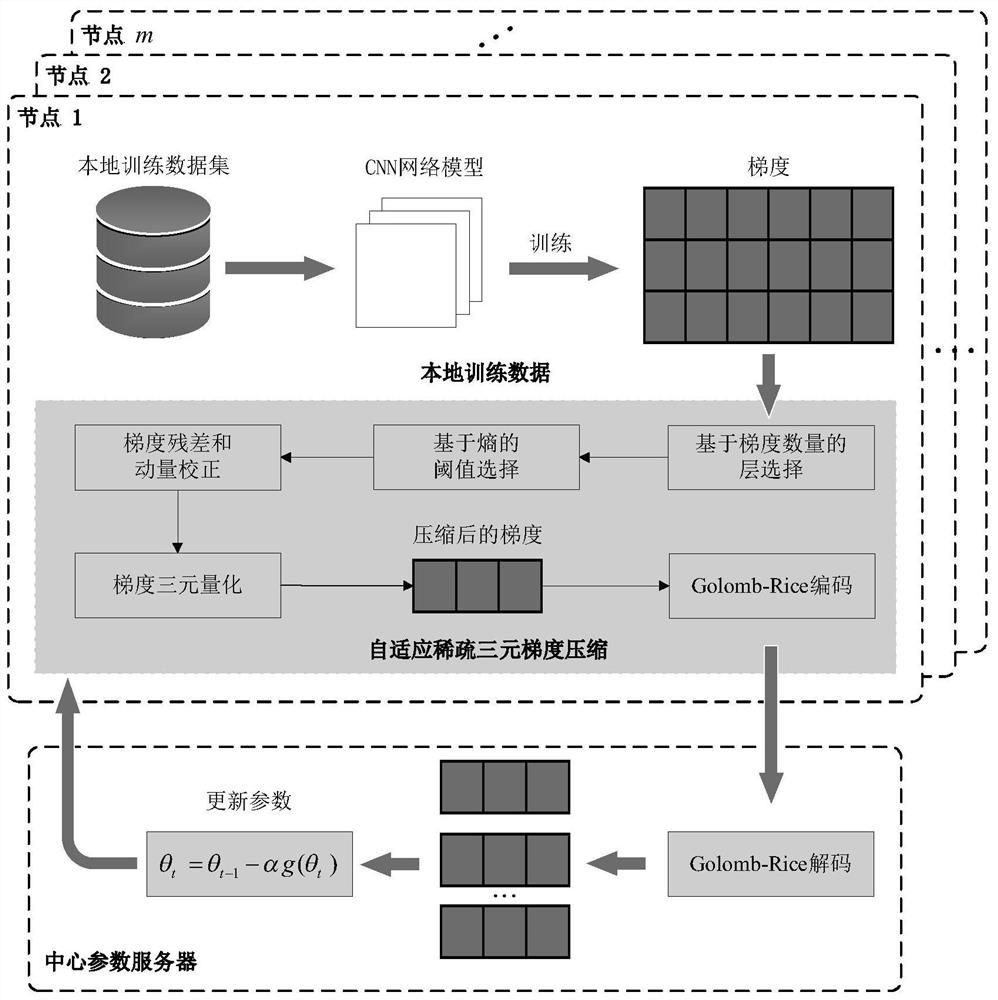

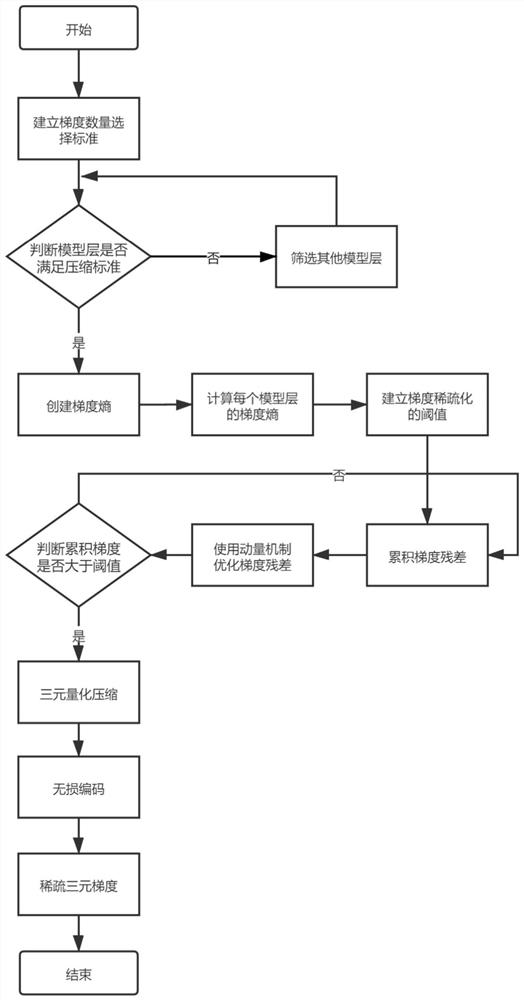

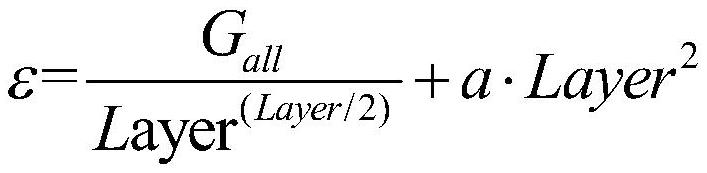

[0048] This embodiment provides an adaptive sparse ternary gradient compression method for distributed DNN training in a multi-edge computing environment. The method establishes selection criteria based on the number of gradients, designs an entropy-based sparse threshold selection algorithm, introduces gradient residuals and momentum correction to offset the loss of model accuracy caused by sparse compression, and combines these with ternary gradient quantization and lossless coding techniques, effectively reducing...
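As a rough illustration of how sparsification, ternary quantization, and gradient residuals fit together, the following minimal sketch may help. It is not the patented method. The function names `sparse_ternary_compress` and `decompress` and the fixed `keep_ratio` parameter are assumptions made for illustration. In particular, the fixed ratio stands in for the entropy-based threshold selection, whose details are not given here, and momentum correction and lossless coding are omitted.

```python
from typing import List, Tuple


def sparse_ternary_compress(
    grad: List[float], residual: List[float], keep_ratio: float
) -> Tuple[List[int], List[int], float, List[float]]:
    """Hypothetical helper: returns (indices, signs, scale, new_residual)."""
    # Add the residual carried over from earlier rounds, so small gradients
    # that were dropped before are delayed rather than lost.
    acc = [g + r for g, r in zip(grad, residual)]

    # Sparsify: keep the k entries with the largest magnitude.
    # (Assumption: a fixed ratio replaces the entropy-based threshold.)
    k = max(1, int(len(acc) * keep_ratio))
    by_magnitude = sorted(range(len(acc)), key=lambda i: abs(acc[i]), reverse=True)
    indices = sorted(by_magnitude[:k])

    # Ternarize: each kept entry becomes +scale or -scale; the rest are 0.
    scale = sum(abs(acc[i]) for i in indices) / k
    signs = [1 if acc[i] >= 0 else -1 for i in indices]

    # Whatever was not transmitted stays in the residual for the next round.
    new_residual = list(acc)
    for i, s in zip(indices, signs):
        new_residual[i] = acc[i] - s * scale
    return indices, signs, scale, new_residual


def decompress(indices: List[int], signs: List[int], scale: float, size: int) -> List[float]:
    """Rebuild the dense ternary gradient on the receiving side."""
    dense = [0.0] * size
    for i, s in zip(indices, signs):
        dense[i] = s * scale
    return dense
```

In this sketch a worker would send only `indices`, `signs`, and the single float `scale`, and keep `new_residual` locally. The transmitted ternary gradient plus the new residual always sums back to the accumulated gradient, so the error introduced by compression is deferred to later rounds instead of being discarded.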
