Design of background automatic computing engine of data management system

A data management system and automatic calculation technology, applied in database management systems, electronic digital data processing, special data processing applications, etc., can solve the problem that the storage system cannot store big data, etc., and achieve the effect of convenient use and good user experience

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment 1

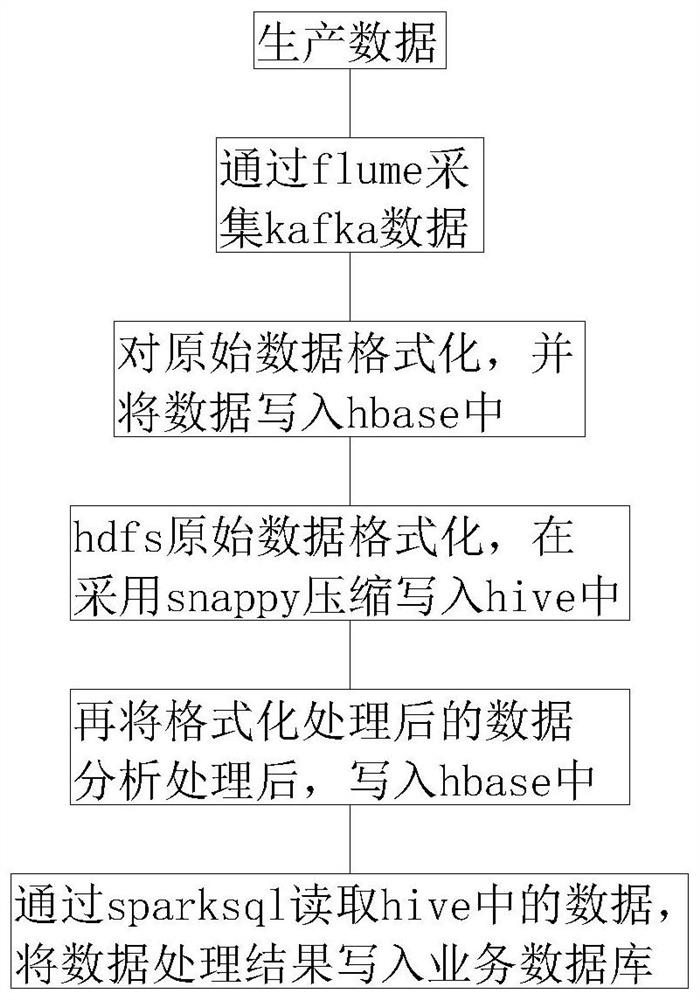

[0025] Such as figure 1 As shown, the embodiment of the present invention provides the design of the background automatic calculation engine of the data management system, and adopts the technology combining spark and hadoop distributed architecture to process large-scale data calculation, including the following specific implementation steps:

[0026] Step 1. The party that produces data produces data in Kafka. The Kafka cluster contains multiple broker servers, and Flume can be one of Flume-ng and Flume-og;

[0027] Step 2. The offline architecture uses flume to collect data in Kafka and transfer it to HDFS;

[0028] Step 3. The real-time architecture uses sparkstreaming to consume data in Kafka, formats the original data, and writes the data into hbase or elasticsearch;

[0029] Step 4. Write sql scripts to format the original data in hdfs, and finally use snappy compression to write them into the offline data warehouse hive;

[0030] Step 5. Analyze and process the forma...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com