Continuous sign language sentence recognition method based on modal matching

A continuous sign language sentence recognition technology, applied in the field of sign language recognition. It addresses problems such as unsatisfactory practicability, being time-consuming and labor-intensive, and large parameter counts, and achieves the effects of improving practical applicability, reducing heavy dependence, and relaxing strict requirements.


Examples

Embodiment 1

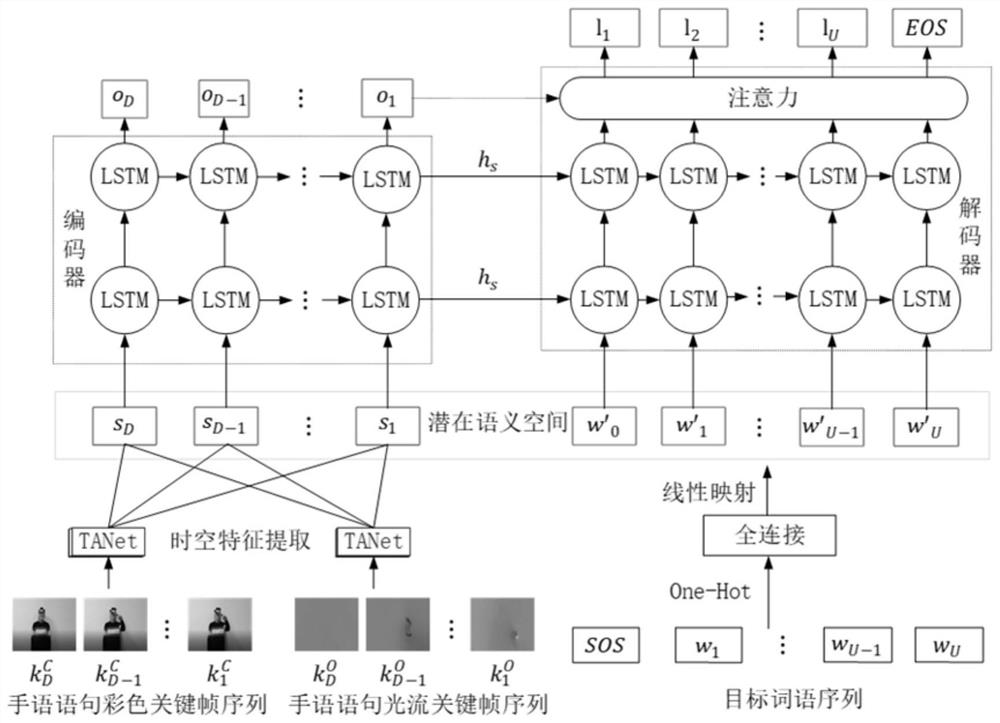

[0076] The steps of the continuous sign language sentence recognition method based on modal matching of the present invention are as follows:

[0077] Step S1: Collect 1000 sign language videos in the color video modality. Use the TV-L1 algorithm to extract the optical flow information of each color sign language video, forming an optical flow image sequence with the same number of frames; this provides the sign language videos in the optical flow video modality. Use a CNN to extract the key frames of each sign language video in both modalities, yielding the corresponding key-frame sign language videos of the two modalities, with a frame size of 224×224 pixels and the key frames uniformly sampled to 8 frames. Select 800 corresponding videos from the key-frame sign language videos of the two modalities to form the training set; the remaining videos in the key-frame sign language videos of the two modalities form the test set. The number D of key fr...
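The uniform sampling and the paired training/test split in Step S1 can be illustrated with a minimal Python sketch. It is not the patent's implementation: the function names `uniform_sample_indices` and `paired_split`, the integer video IDs, and the seeded random shuffle are all assumptions made for illustration (the text does not say how the 800 training videos are chosen). Optical flow extraction with TV-L1 and CNN key-frame extraction are outside the scope of the sketch.

```python
import random


def uniform_sample_indices(num_frames, num_samples=8):
    """Return num_samples frame indices spread evenly over a video.

    Hypothetical helper: indices run from the first to the last frame,
    and short videos simply repeat frames.
    """
    if num_frames <= 0:
        raise ValueError("video has no frames")
    if num_samples == 1:
        return [0]
    step = (num_frames - 1) / (num_samples - 1)
    return [round(i * step) for i in range(num_samples)]


def paired_split(video_ids, num_train=800, seed=0):
    """Split video IDs once so both modalities share the same split.

    Assumption: the selection is a seeded random shuffle. The same ID
    lists are then used to index the color and optical flow videos,
    which keeps the two modalities in correspondence.
    """
    ids = list(video_ids)
    random.Random(seed).shuffle(ids)
    return sorted(ids[:num_train]), sorted(ids[num_train:])


# 1000 videos, 800 for training and the remaining 200 for testing.
train_ids, test_ids = paired_split(range(1000))

# Frame indices kept from a hypothetical 15-frame key-frame sequence.
indices = uniform_sample_indices(15)
```

Splitting on video IDs rather than on each modality separately is one way to guarantee that a color video and its optical flow counterpart always land in the same set.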
