An Online Cross-Modal Retrieval Method and System for Streaming Data

A cross-modal retrieval technology for streaming data, applied in fields such as still image data retrieval, digital data information retrieval, and metadata-based still image retrieval. It addresses problems such as unguaranteed performance and loss of data information, with the effects of saving computation, facilitating dynamic changes, and ensuring retrieval accuracy.

Examples

Embodiment 1

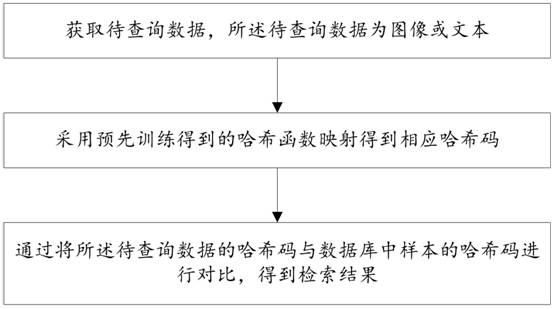

[0048] This embodiment discloses an online cross-modal retrieval method for streaming data which, as shown in Figure 1, includes the following steps:

[0049] Step 1: Obtain the data to be queried, which is an image or a text, and map it with the pre-trained hash function to obtain the corresponding hash code;

[0050] Step 2: Compare the hash code of the data to be queried with the hash codes of the samples in the database to obtain the retrieval result.
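Steps 1 and 2 can be illustrated with a minimal sketch. It assumes that the hash function is the sign of a linear projection and that codes are compared by Hamming distance; the excerpt does not specify either choice, and the names `hash_codes` and `hamming_rank` are hypothetical.

```python
import numpy as np

def hash_codes(features, projection):
    """Map real-valued features to binary codes in {0, 1}.

    Assumption: the hash function is the sign of a linear projection.
    The excerpt does not state the actual form of the hash function.
    """
    return (features @ projection > 0).astype(np.uint8)

def hamming_rank(query_code, db_codes):
    """Rank database samples by Hamming distance to the query code.

    Returns the database indices (closest first) and the sorted distances.
    """
    distances = np.count_nonzero(db_codes != query_code, axis=1)
    order = np.argsort(distances, kind="stable")  # ties keep database order
    return order, distances[order]

# Toy database of 4-bit codes (for example, codes of stored text samples).
db_codes = np.array(
    [[1, 0, 1, 1],
     [0, 0, 1, 1],
     [0, 1, 0, 0],
     [1, 0, 1, 0]],
    dtype=np.uint8,
)

# Step 1: map a query feature vector (for example, from an image) to a code.
# The identity projection is a placeholder for a trained projection matrix.
query_features = np.array([[0.5, -1.0, 2.0, 0.1]])
query_code = hash_codes(query_features, np.eye(4))[0]

# Step 2: compare the query code with every database code.
order, dists = hamming_rank(query_code, db_codes)
```

Because both modalities are mapped into the same binary code space, an image query can be ranked against text samples (and vice versa) with the same comparison.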

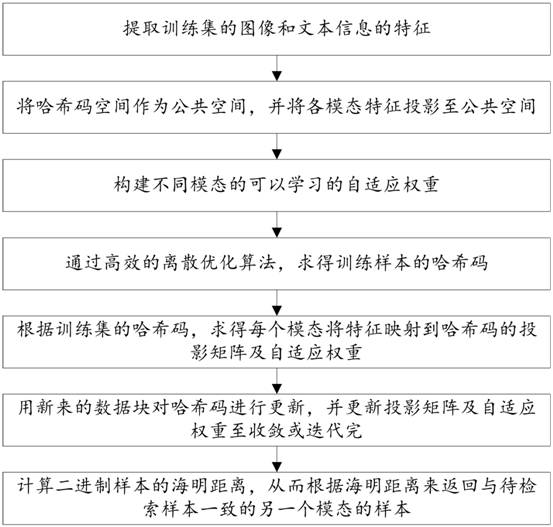

[0051] As shown in Figure 2, the training method of the hash function comprises:

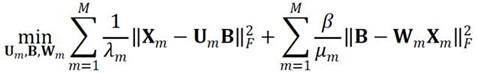

[0052] S1: Acquire data and divide it into training data and test data. The training data consists of paired image and text data. Because the network resources available for retrieval (such as image and text data) are continuously updated in the form of data streams, the training data is divided into rounds to adapt to online retrieval tasks; the rounds are used to simulate the arrival of streaming da...

Embodiment 2

[0134] The purpose of this embodiment is to provide an online cross-modal retrieval system for streaming data, including:

[0135] A hash mapping module, configured to obtain the data to be queried, which is an image or a text, and to map it with the pre-trained hash function to obtain the corresponding hash code;

[0136] A cross-modal retrieval module, configured to obtain a retrieval result by comparing the hash code of the data to be queried with the hash codes of the samples in the database;

[0137] Wherein the training method of the hash function comprises:

[0138] Obtain training data comprising pairs of images and text, and divide the training data into rounds;

[0139] Starting from the first round, hash code learning is performed on the training data of each round in turn to obtain the corresponding hash function.
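The round-by-round structure of the training procedure can be sketched as follows. This is only an illustration of splitting paired data into rounds and carrying state from one round to the next. The excerpt does not give the actual hash code learning step, so a running mean of each modality stands in for it, and the names `split_into_rounds` and `RunningMeanState` are hypothetical.

```python
import numpy as np

def split_into_rounds(image_feats, text_feats, num_rounds):
    """Split paired image/text features into consecutive rounds.

    The same index chunks are applied to both modalities so pairs stay aligned.
    """
    if len(image_feats) != len(text_feats):
        raise ValueError("image and text features must be paired")
    index_chunks = np.array_split(np.arange(len(image_feats)), num_rounds)
    return [(image_feats[idx], text_feats[idx]) for idx in index_chunks]

class RunningMeanState:
    """Stand-in for the learner state (NOT the method of the patent).

    It keeps a running mean per modality, updated from each new round
    without revisiting the data of earlier rounds.
    """

    def __init__(self, image_dim, text_dim):
        self.count = 0
        self.image_mean = np.zeros(image_dim)
        self.text_mean = np.zeros(text_dim)

    def update(self, image_chunk, text_chunk):
        total = self.count + len(image_chunk)
        self.image_mean = (self.count * self.image_mean + image_chunk.sum(axis=0)) / total
        self.text_mean = (self.count * self.text_mean + text_chunk.sum(axis=0)) / total
        self.count = total

# Synthetic paired features; the dimensions are arbitrary placeholders.
rng = np.random.default_rng(0)
image_feats = rng.normal(size=(10, 4))
text_feats = rng.normal(size=(10, 3))

rounds = split_into_rounds(image_feats, text_feats, num_rounds=3)
state = RunningMeanState(image_dim=4, text_dim=3)

# Starting from the first round, process each round in turn.
for image_chunk, text_chunk in rounds:
    state.update(image_chunk, text_chunk)
```

The point of the sketch is that each round only touches its own chunk plus a compact state, which is one way an online method can save computation as new data arrives.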

[0140] The steps involved in Embodiment 2 above correspond to those of the method in Embodiment 1, and for the specifi...
