Pyramid Transformer-based point cloud reconstruction method, device, equipment, and medium

A pyramid Transformer and point cloud technology in the field of computer vision. It addresses the low reconstruction accuracy of object surface details and achieves high sampling efficiency, accurate sampling, and high precision.


Examples

Embodiment 1

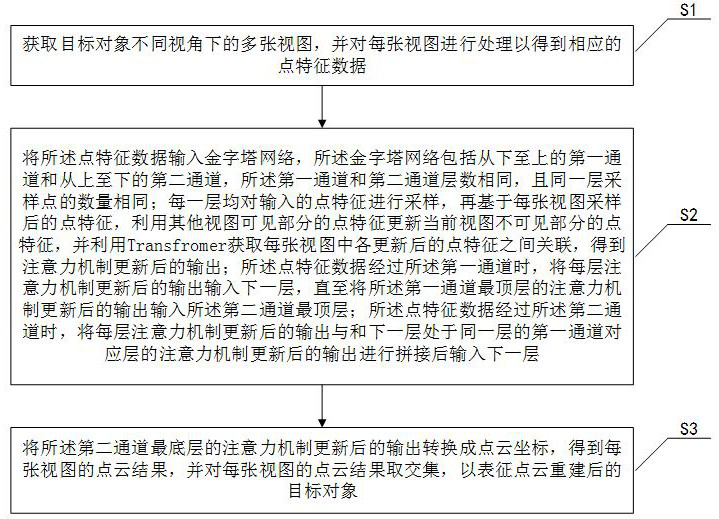

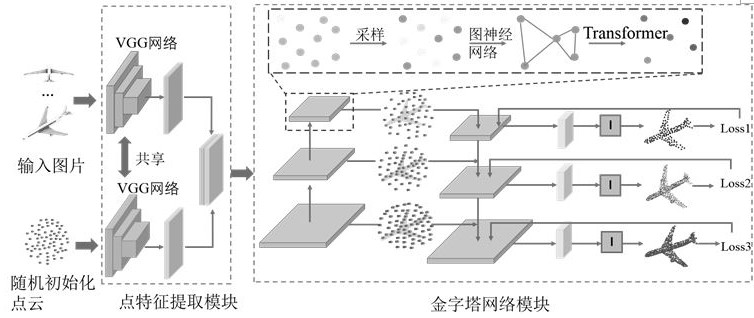

[0031] Referring to Figure 1 in combination with Figure 2, an embodiment of the present invention provides a point cloud reconstruction method based on a Pyramid Transformer, comprising:

[0032] S1. Obtain multiple views of the target object under different viewing angles, and process each view to obtain corresponding point feature data.

[0033] In this embodiment, to obtain more accurate point features, multi-scale global and local information is extracted from the input image. For the local features of points, this scheme uses a VGG16 network to encode the input image and takes the outputs of convolutional layers at different depths as multi-scale feature maps. After these two-dimensional feature maps are obtained, the camera's intrinsic matrix is used to project the initial random point cloud onto each feature map, giving the coordinates of each point on that map. The feature corresponding to the coordinates in the feature...
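The projection-and-lookup step in [0033] can be sketched roughly as follows. This is a minimal NumPy illustration under several assumptions that the text does not state: the points are already expressed in the camera frame (extrinsics are omitted), each feature map is a downsampled copy of the image at a known stride, features are read out by bilinear interpolation (the paragraph is truncated before it says how the lookup is done), and features from different scales are simply concatenated. The VGG16 encoder itself is replaced by random arrays whose channel counts and strides follow VGG16's conv2/conv3/conv4 blocks; which layers the method actually uses is not specified here. All function names are hypothetical.

```python
import numpy as np


def project_points(points_cam: np.ndarray, K: np.ndarray) -> np.ndarray:
    """Pinhole projection of (N, 3) camera-frame points to (N, 2) pixel coordinates (u, v)."""
    uvw = points_cam @ K.T            # (N, 3) homogeneous image coordinates
    return uvw[:, :2] / uvw[:, 2:3]   # perspective divide by depth Z


def bilinear_sample(feat: np.ndarray, uv: np.ndarray) -> np.ndarray:
    """Bilinearly sample a (C, H, W) feature map at (N, 2) continuous (u, v) = (x, y) positions.

    Positions outside the map are clamped to the border. Returns an (N, C) array.
    """
    C, H, W = feat.shape
    u = np.clip(uv[:, 0], 0, W - 1)
    v = np.clip(uv[:, 1], 0, H - 1)
    u0 = np.floor(u).astype(int)
    v0 = np.floor(v).astype(int)
    u1 = np.minimum(u0 + 1, W - 1)
    v1 = np.minimum(v0 + 1, H - 1)
    du = (u - u0)[:, None]            # horizontal interpolation weight, (N, 1)
    dv = (v - v0)[:, None]            # vertical interpolation weight, (N, 1)
    # feat[:, rows, cols] has shape (C, N); transpose to (N, C)
    f00 = feat[:, v0, u0].T
    f01 = feat[:, v0, u1].T
    f10 = feat[:, v1, u0].T
    f11 = feat[:, v1, u1].T
    top = f00 * (1 - du) + f01 * du
    bottom = f10 * (1 - du) + f11 * du
    return top * (1 - dv) + bottom * dv


def multiscale_point_features(points_cam, K, feature_maps, strides):
    """Project points once, then sample every feature map and concatenate along channels.

    Pixel coordinates are divided by each map's stride (a simple rescaling that
    ignores half-pixel alignment).
    """
    uv = project_points(points_cam, K)
    per_scale = [bilinear_sample(f, uv / s) for f, s in zip(feature_maps, strides)]
    return np.concatenate(per_scale, axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical intrinsics for a 224 x 224 input image.
    K = np.array([[250.0, 0.0, 112.0],
                  [0.0, 250.0, 112.0],
                  [0.0, 0.0, 1.0]])
    # Random initial point cloud placed in front of the camera.
    points = rng.uniform([-0.5, -0.5, 1.5], [0.5, 0.5, 2.5], size=(1024, 3))
    # Stand-ins for VGG16 feature maps: (channels, stride) per scale.
    maps = [rng.standard_normal((c, 224 // s, 224 // s))
            for c, s in [(128, 2), (256, 4), (512, 8)]]
    feats = multiscale_point_features(points, K, maps, strides=[2, 4, 8])
    print(feats.shape)  # (1024, 896)
```

Projecting once and rescaling per map keeps the geometry in a single place; a framework implementation would more likely use a built-in differentiable sampler so that gradients flow back to the point coordinates.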

Embodiment 2

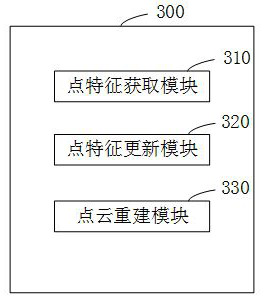

[0060] Referring to Figure 3, an embodiment of the present invention provides a pyramid Transformer-based point cloud reconstruction device 300, which includes:

[0061] A point feature acquisition module 310, configured to acquire multiple views of the target object under different viewing angles, and process each view to obtain corresponding point feature data;

