Standing tree diameter at breast height measurement method based on machine vision and deep learning

A deep learning and machine vision technology, applied in the field of machine vision, that can solve problems such as poor portability, cumbersome operating steps, and difficulty of adoption, and achieves the effects of improved efficiency, improved accuracy, and reduced error.

Embodiment Construction

[0034] The principles and features of the present invention are described below with reference to the accompanying drawings. The examples given serve only to explain the invention and are not intended to limit its scope. Advantages and features of the invention will be apparent from the following description and the claims. Note that all drawings are highly simplified and not drawn to precise scale; they are intended only to illustrate the embodiments of the invention conveniently and clearly.

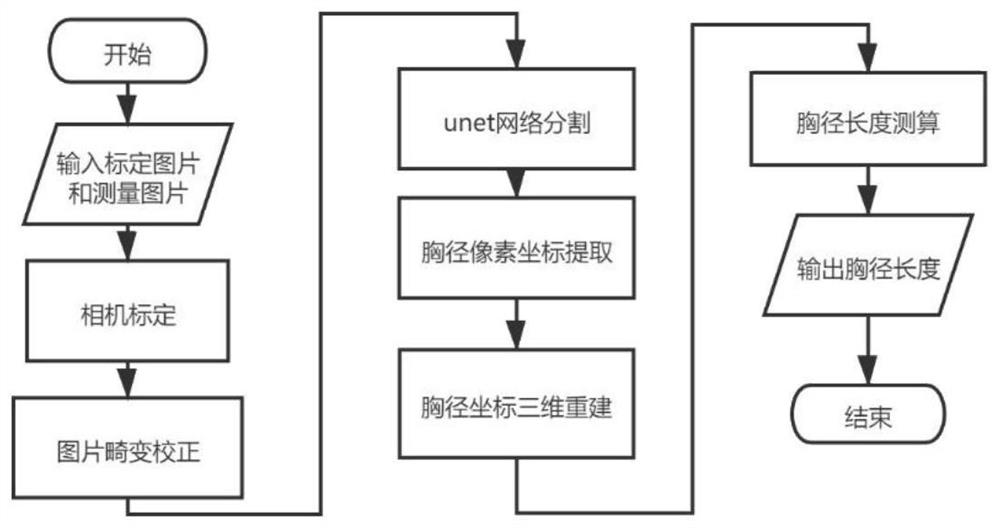

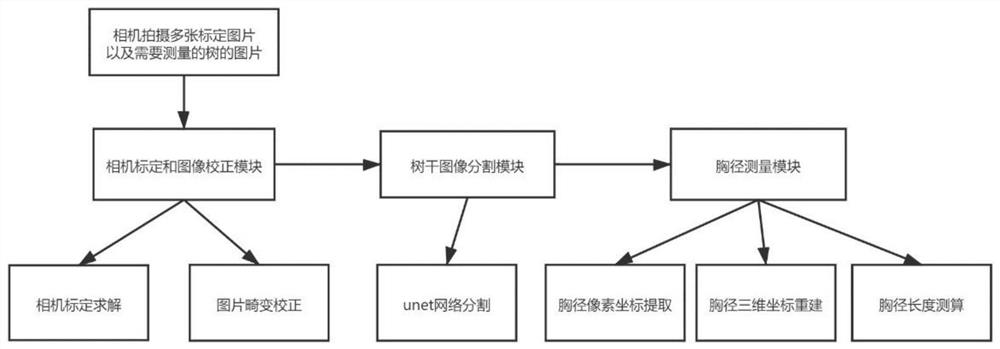

[0035] Referring to Figures 1-11, in an embodiment of the present invention, a standing tree diameter measurement method based on machine vision and deep learning comprises the following steps:

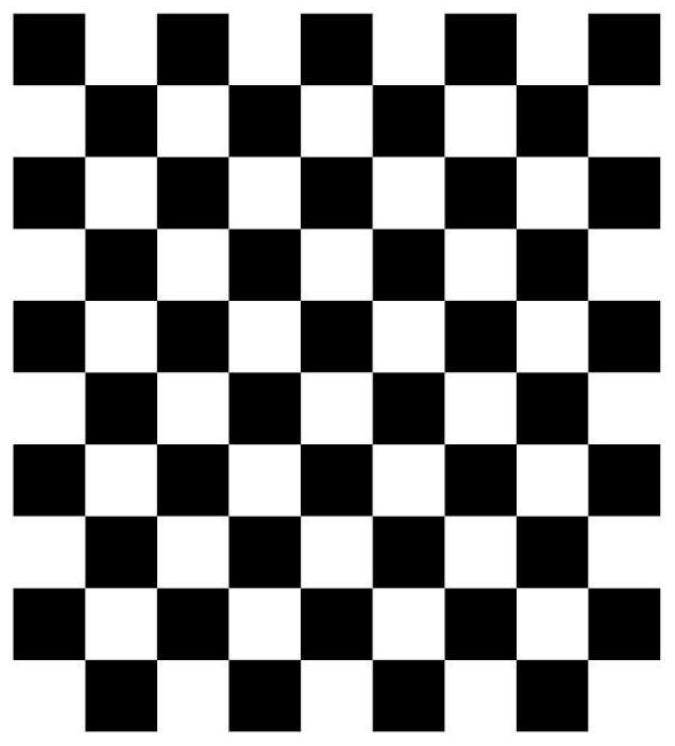

[0036] The first step is camera calibration and image correction. The ...
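The text available here breaks off before stating which calibration model or correction procedure the method uses. Purely as an illustration of what image correction commonly involves, the following minimal sketch assumes a pinhole camera with a two-coefficient radial distortion model and corrects a single pixel by fixed-point iteration. The intrinsics `FX`, `FY`, `CX`, `CY`, the coefficients `K1`, `K2`, and the function names are all hypothetical and are not taken from the patent; in practice such values would be produced by the calibration step.

```python
from typing import Tuple

# Hypothetical intrinsics and distortion coefficients (illustrative values only;
# real values would come from a camera calibration procedure).
FX, FY = 800.0, 800.0   # focal lengths in pixels
CX, CY = 320.0, 240.0   # principal point in pixels
K1, K2 = -0.10, 0.01    # radial distortion coefficients


def radial_factor(x: float, y: float) -> float:
    """Radial scaling 1 + k1*r^2 + k2*r^4 at normalized coordinates (x, y)."""
    r2 = x * x + y * y
    return 1.0 + K1 * r2 + K2 * r2 * r2


def distort_pixel(u: float, v: float) -> Tuple[float, float]:
    """Map an ideal (undistorted) pixel to where the assumed lens images it."""
    x, y = (u - CX) / FX, (v - CY) / FY
    f = radial_factor(x, y)
    return x * f * FX + CX, y * f * FY + CY


def undistort_pixel(u: float, v: float, iters: int = 20) -> Tuple[float, float]:
    """Invert the radial model for one observed pixel by fixed-point iteration."""
    xd, yd = (u - CX) / FX, (v - CY) / FY
    x, y = xd, yd  # initial guess: the distorted coordinates themselves
    for _ in range(iters):
        f = radial_factor(x, y)
        x, y = xd / f, yd / f
    return x * FX + CX, y * FY + CY
```

Under these assumptions, calling `undistort_pixel` on the output of `distort_pixel` recovers the original pixel to within numerical tolerance, which is a convenient sanity check before measuring anything in the corrected image.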
