Three-dimensional attitude classification method based on two-dimensional key points and related device

A three-dimensional attitude classification method applied in the field of image processing. It addresses problems such as high cost, large data volume, and demanding requirements on input cameras, and it saves computation and cost, making it economical and practical.


Examples

Embodiment 1

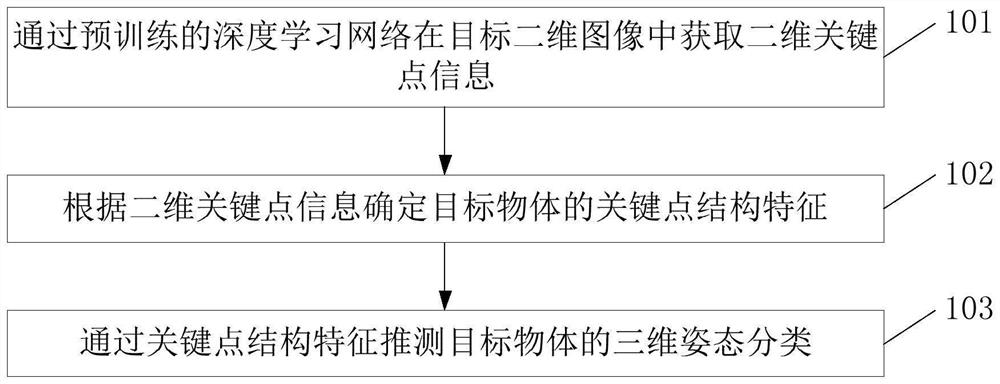

[0134] Referring to Figure 1, Figure 1 is a schematic flowchart of the three-dimensional attitude classification method based on two-dimensional key points of a target object provided in the first embodiment of the present application. This embodiment applies to performing three-dimensional attitude classification based on the two-dimensional key point information of a target object in a two-dimensional image. The method can be performed by a three-dimensional attitude classification device based on two-dimensional key points of a target object. The device can be implemented in software and/or hardware and can be integrated in an electronic device, such as a mobile terminal or a computer. As shown in Figure 1, the three-dimensional attitude classification method based on two-dimensional key points of a target object provided in this embodiment may include:

[0135] Identify the target object area where the target obj...

Embodiment 2

[0155] This embodiment refines and extends Embodiment 1. Embodiment 1 uses a three-key-point relative expression structure. Because this structure is invariant to translation and rotation, it is very sensitive to changes in the depth direction but less sensitive to changes in the horizontal and vertical directions. To achieve better three-dimensional attitude perception, with accurate perception in all three directions (horizontal, vertical, and depth), this embodiment adds a body-coordinate normalization structure expression; see the network structure model in Figure 7. The resulting three-dimensional attitude classification method based on two-dimensional key points of the target object may include:

[0156] Identify the target object area where the target object is located from a target two-dimensional image, that is, determine the area of the target object to be classified, and obtain the two-dime...
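The two kinds of key point structure features contrasted in this embodiment can be illustrated with a minimal sketch. It is not the patented feature definition, which the excerpt does not give. The function names `triplet_features`, `body_normalized`, and `structure_features`, the choice of angle cosine and length ratio as the three-key-point relative features, and the use of the key points' bounding box for the coordinate normalization are all assumptions made for illustration.

```python
import itertools
import numpy as np

EPS = 1e-8  # guards against division by zero for coincident key points


def triplet_features(kps):
    """Hypothetical three-key-point relative features.

    For every triplet (a, b, c) this returns the cosine of the angle at b
    and the length ratio |ab| / |cb|. Both values are unchanged when the
    key points are translated or rotated in the image plane.
    """
    feats = []
    for a, b, c in itertools.combinations(range(len(kps)), 3):
        u, v = kps[a] - kps[b], kps[c] - kps[b]
        nu, nv = np.linalg.norm(u), np.linalg.norm(v)
        feats.append(np.dot(u, v) / (nu * nv + EPS))
        feats.append(nu / (nv + EPS))
    return np.array(feats)


def body_normalized(kps):
    """Hypothetical coordinate normalization.

    Key points are expressed relative to the centre of their bounding box
    and divided by the longer box side, so horizontal and vertical layout
    is kept while image position and scale are removed.
    """
    lo, hi = kps.min(axis=0), kps.max(axis=0)
    centre = (lo + hi) / 2.0
    scale = max(float((hi - lo).max()), EPS)
    return ((kps - centre) / scale).ravel()


def structure_features(kps):
    """Concatenate both feature groups into one vector for a classifier."""
    kps = np.asarray(kps, dtype=float)
    return np.concatenate([triplet_features(kps), body_normalized(kps)])
```

In this sketch the triplet features alone cannot tell a figure from its rotated copy, while the normalized coordinates can, which mirrors the motivation given above for combining the two expressions.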

Embodiment 3

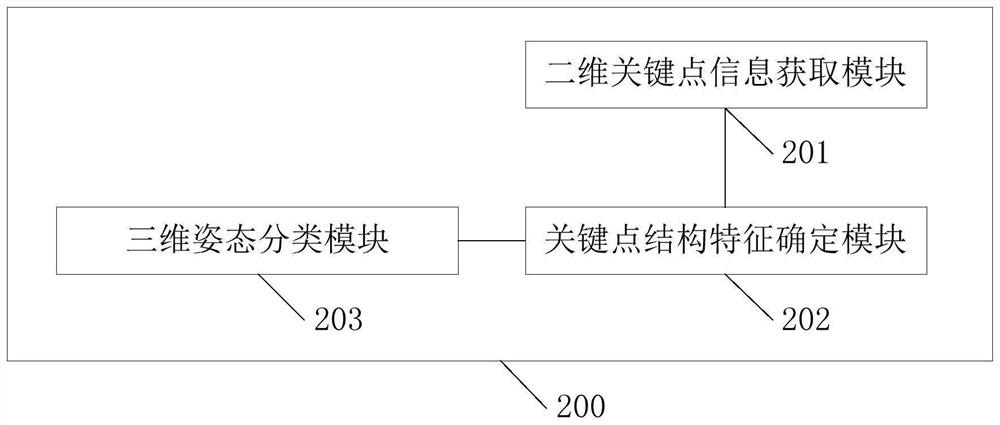

[0185] Figure 2 is a schematic structural diagram of a three-dimensional attitude classification device based on two-dimensional key points of a target object provided in the third embodiment of the present application. The device can execute the three-dimensional attitude classification method provided by the embodiments of the present application and has the functional modules and effects corresponding to that method. As shown in Figure 2, the three-dimensional attitude classification apparatus 200 based on two-dimensional key points may include:

[0186] A two-dimensional key point information acquisition module 201, configured to acquire two-dimensional key point information in a target two-dimensional image through a pre-trained deep learning network;

[0187] A key point structure feature determining module 202, configured to determine the key point structure feature of the target object according to the two-dimensional key point information;

[0188] The ...
