Scene text recognition method based on HRNet encoding and dual-branch decoding

A text recognition and branching technology, applied to neural network learning methods, character and pattern recognition, instruments, etc. It can solve problems such as the inability to meet the accuracy requirements of scene text recognition, inaccurate recognition of the target sequence by the decoder, and the loss of visual feature information. It improves the effect of upsampling, suppresses the amount of information, and reduces the effect of feature loss.
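The abstract credits the method with a better upsampling effect, and the embodiment below performs upsampling with transposed convolution (TransConv2D). For reference, the standard output-size relation for a transposed convolution along one spatial axis, with input size $H_{\text{in}}$, kernel size $k$, stride $s$, padding $p$, dilation 1 and no output padding, is:

$$
H_{\text{out}} = (H_{\text{in}} - 1)\,s - 2p + k
$$

The patent does not state $k$, $s$ or $p$. As an illustrative assumption only, the common choice $k = 4$, $s = 2$, $p = 1$ gives $H_{\text{out}} = 2(H_{\text{in}} - 1) - 2 + 4 = 2H_{\text{in}}$, an exact 2× upsampling (for example, $8 \to 16$).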


Embodiment Construction

[0038] The present invention is further described below in conjunction with specific embodiments.

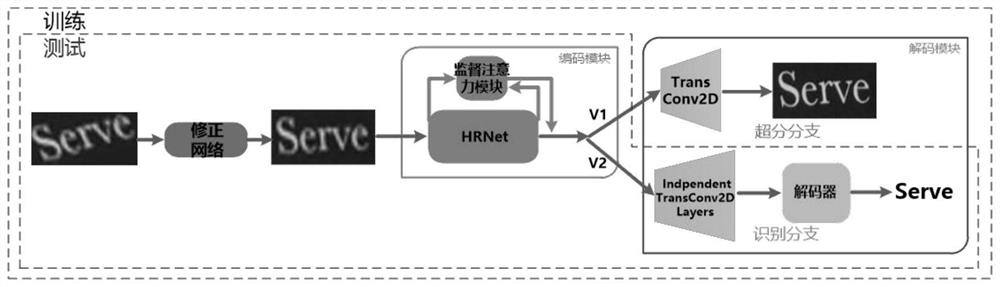

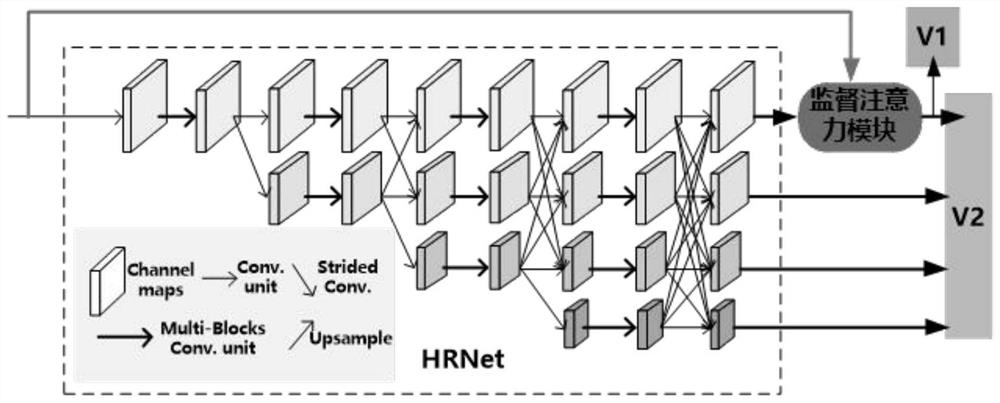

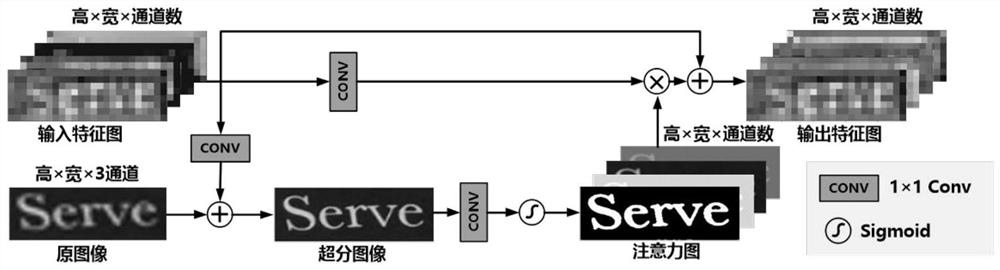

[0039] A scene text recognition method based on an HRNet encoding and dual-branch decoding framework. The adopted model includes a modified TPS (thin-plate spline) network, an encoding module, a super-resolution branch and a recognition branch. The encoding module includes an HRNet network and supervised attention. The super-resolution branch includes transposed convolution (TransConv2D) upsampling. The recognition branch includes IndependentTransConv2D layers for multi-scale fusion, followed by attention-based decoding to obtain the text characters. The encoding module performs feature extraction on a single scene text image to obtain visual features, yielding feature maps at four resolutions; the super-resolution branch takes the highest-resolution feature map output by the encoding module and upsamples it with transposed convolution to generate a super-resolution image; the recognition branch is used to ex...
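The data flow in [0039] can be sketched in pure Python. This is a minimal, non-authoritative illustration under stated assumptions: single-channel feature maps, fixed (not learned) kernels, a four-level pyramid in which each lower-resolution map is half the size of the previous one (as in standard HRNet), stride-2 transposed convolutions with a 2×2 kernel for every upsampling step, fusion by element-wise summation, and plain dot-product attention over the columns of the fused map standing in for the unspecified attention decoder. The function names (`trans_conv2d`, `upsample`, `fuse_multiscale`, `attend`) are hypothetical and not taken from the patent; the TPS network and the supervised attention in the encoder are omitted.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def trans_conv2d(x: Matrix, kernel: Matrix, stride: int = 2, padding: int = 0) -> Matrix:
    """Single-channel 2D transposed convolution (scatter-add form).

    Output size per axis: (n - 1) * stride - 2 * padding + k.
    """
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    full_h, full_w = (h - 1) * stride + kh, (w - 1) * stride + kw
    full = [[0.0] * full_w for _ in range(full_h)]
    # Each input pixel adds a scaled copy of the kernel at its strided position.
    for i in range(h):
        for j in range(w):
            for a in range(kh):
                for b in range(kw):
                    full[i * stride + a][j * stride + b] += x[i][j] * kernel[a][b]
    # `padding` removes that many rows/columns from each border.
    return [row[padding:full_w - padding] for row in full[padding:full_h - padding]]


def upsample(x: Matrix, factor: int, kernel: Matrix) -> Matrix:
    """Upsample by a power-of-two factor with repeated stride-2 transposed convs.

    Assumes a 2x2 kernel, so each step exactly doubles height and width.
    """
    while factor > 1:
        x = trans_conv2d(x, kernel, stride=2)
        factor //= 2
    return x


def fuse_multiscale(maps: Sequence[Matrix], kernels: Sequence[Matrix]) -> Matrix:
    """Fuse a resolution pyramid onto the highest-resolution map.

    maps[0] is the highest resolution; maps[i] is 2**i times smaller per axis.
    kernels[i - 1] is a separate ("independent") kernel used only for maps[i].
    """
    fused = [row[:] for row in maps[0]]
    for i, (m, k) in enumerate(zip(maps[1:], kernels), start=1):
        up = upsample(m, 2 ** i, k)
        for r, row in enumerate(up):
            for c, v in enumerate(row):
                fused[r][c] += v  # element-wise sum fusion (assumption)
    return fused


def attend(query: Sequence[float], keys: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """One dot-product attention step: softmax weights and the context vector."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    context = [sum(w * key[d] for w, key in zip(weights, keys)) for d in range(len(keys[0]))]
    return weights, context


if __name__ == "__main__":
    ones = [[1.0, 1.0], [1.0, 1.0]]
    # Hypothetical four-resolution encoder output: 8x8, 4x4, 2x2, 1x1.
    pyramid = [[[1.0] * s for _ in range(s)] for s in (8, 4, 2, 1)]
    sr_image = trans_conv2d(pyramid[0], ones, stride=2)  # super-resolution branch
    fused = fuse_multiscale(pyramid, [ones, ones, ones])  # recognition branch: fusion
    columns = [[row[c] for row in fused] for c in range(len(fused[0]))]
    weights, context = attend(columns[0], columns)  # one decoding step over columns
    print(len(sr_image), len(sr_image[0]))  # 16 16
    print(fused[0][0])  # 4.0
```

Using a separate kernel per scale in `fuse_multiscale` loosely mirrors the idea of independent transposed-convolution layers per resolution. With the 2×2 all-ones kernel used in the usage example, each upsampling step simply replicates every pixel into a 2×2 block, which keeps the shapes easy to check by hand; in a trained model these kernels would be learned.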
