Binocular stereo matching method

A binocular stereo matching and matching cost technology in the field of computer vision. It addresses overly complex extraction networks and too many parameters while reducing running time.
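A matching cost scores how dissimilar a pixel in the left image is from a candidate pixel in the right image at a given disparity d. The sketch below is a classic, hand-crafted sum-of-absolute-differences (SAD) cost volume with winner-take-all disparity selection. It is offered only to illustrate the term. It is not the learned, end-to-end matching cost of this invention, whose construction is not described in this excerpt. The function names `sad_cost_volume` and `winner_take_all`, the window `radius`, and the border clamping are illustrative assumptions. Images are plain nested lists of grayscale values to keep the example dependency-free.

```python
# Illustrative SAD matching cost + winner-take-all disparity (hypothetical helpers,
# not the patented network). left/right are rectified grayscale images as lists of rows.

def sad_cost_volume(left, right, max_disp, radius=1):
    """Return cost[y][x][d]: SAD between the (2r+1)x(2r+1) window around left[y][x]
    and the window around right[y][x - d]. Shifts that leave the image cost infinity."""
    h, w = len(left), len(left[0])
    inf = float("inf")
    cost = [[[inf] * (max_disp + 1) for _ in range(w)] for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for d in range(max_disp + 1):
                if x - d < 0:
                    continue  # candidate pixel falls outside the right image
                total = 0
                for dy in range(-radius, radius + 1):
                    for dx in range(-radius, radius + 1):
                        yy = min(max(y + dy, 0), h - 1)      # clamp rows at borders
                        xl = min(max(x + dx, 0), w - 1)      # clamp left-image column
                        xr = min(max(x - d + dx, 0), w - 1)  # clamp right-image column
                        total += abs(left[yy][xl] - right[yy][xr])
                cost[y][x][d] = total
    return cost


def winner_take_all(cost):
    """Pick, per pixel, the disparity with the lowest cost (ties go to the smaller d)."""
    return [[min(range(len(c)), key=c.__getitem__) for c in row] for row in cost]


if __name__ == "__main__":
    # One-row example: the right image is the left image shifted by 2 pixels.
    left = [[10, 50, 20, 80, 30, 70, 40, 90]]
    right = [[20, 80, 30, 70, 40, 90, 0, 0]]
    disp = winner_take_all(sad_cost_volume(left, right, max_disp=3, radius=0))
    print(disp[0])  # [0, 0, 2, 2, 2, 2, 2, 2]; the two leftmost pixels cannot reach d=2
```

Learned stereo networks typically replace the fixed SAD window with deep features and regularize the cost volume before selecting disparities, but the volume's layout (height × width × disparity) is the same idea.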



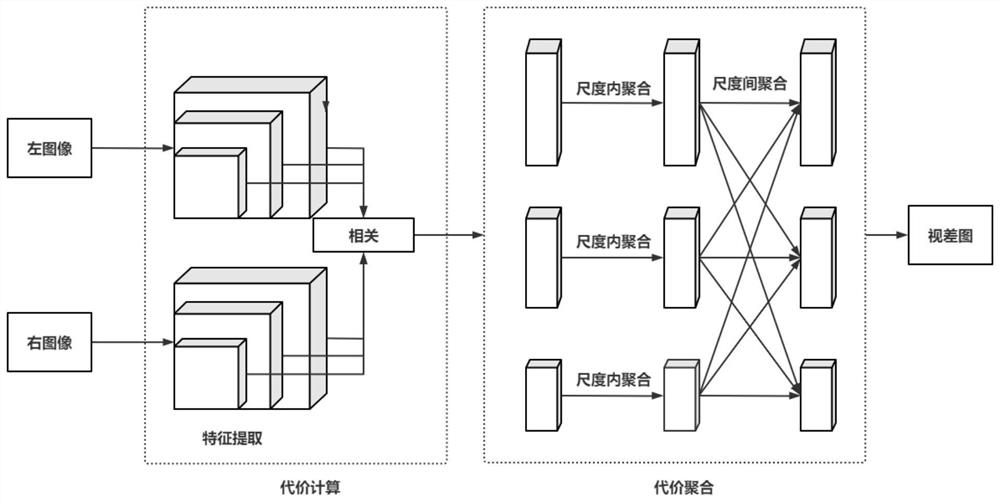

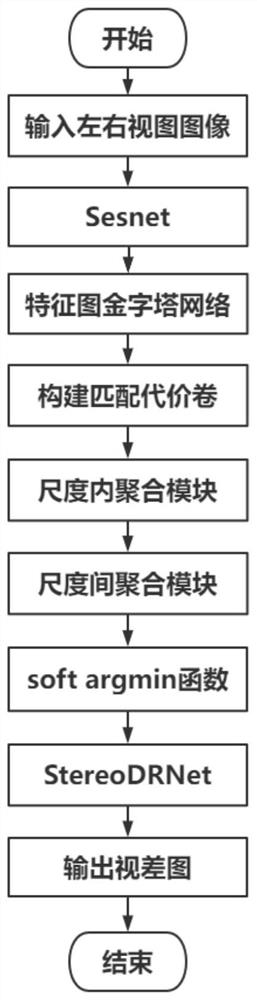

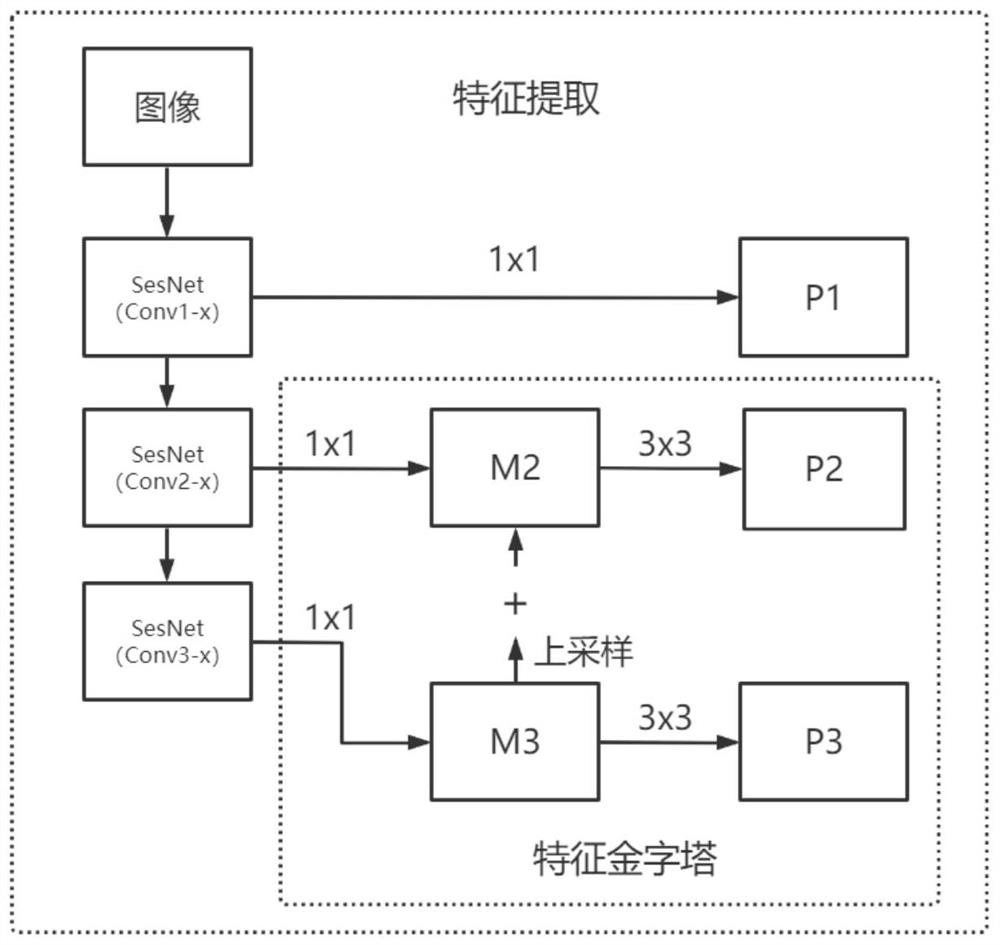


Embodiment Construction

[0065] The present invention proposes an end-to-end network for stereo matching. This section first introduces the dataset and training procedure used to train the network. Each model is then evaluated under different settings, and the proposed network's advantages in running time and memory usage are confirmed by its performance on the Sceneflow test set. Finally, the network is fine-tuned on the KITTI2012 and KITTI2015 datasets and its performance is verified on them.

[0066] 1. Dataset and training process

[0067] The present invention first uses the Sceneflow dataset to train the network. Datasets commonly used by earlier stereo matching algorithms, such as KITTI and Middlebury, contain relatively few training images. Improving the performance of stereo matching networks, and end-to-end stereo matching networks in particular, requires a large amount of training data. In 2016, CVPR (Computer Vision and Pattern Recognit...

