Texture recognition model training method, texture migration method and related device

A training method for a texture recognition model, applied in the field of computer vision. It addresses the high cost and low efficiency of modeling, lowering the learning threshold while reducing cost and improving efficiency.


Examples

Embodiment 1

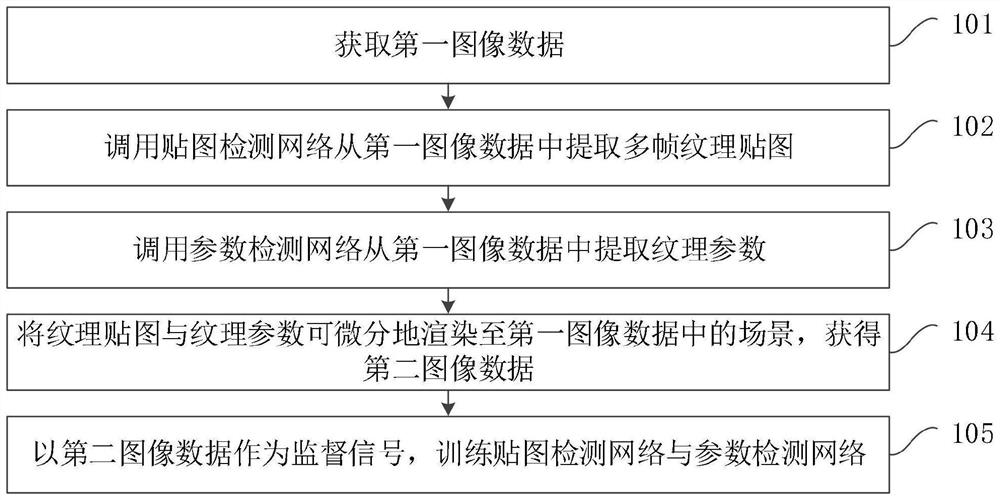

[0044] Figure 1 is a flowchart of a method for training a texture recognition model provided by Embodiment 1 of the present invention. This embodiment is applicable to self-supervised training of a texture recognition model. The method can be executed by a training device for the texture recognition model, which can be implemented in software and/or hardware and configured in computer equipment such as servers, workstations, and personal computers. The method specifically includes the following steps:

[0045] Step 101. Acquire first image data.

[0046] In this embodiment, multiple frames of first image data can be collected through channels such as public data sets. The first image data is two-dimensional image data captured in real scenes, containing objects such as people, animals, tools, and buildings, all of which have realistic textures.

[0047] Step 102, calling the texture detection network...

Embodiment 2

[0129] Figure 4 is a flowchart of a texture migration method provided by Embodiment 2 of the present invention. This embodiment is applicable to migrating textures in image data. The method can be executed by a training device for a texture migration model, which can be implemented in software and/or hardware and configured in computer equipment such as servers, workstations, personal computers, and mobile terminals (for example, mobile phones and tablet computers). The method specifically includes the following steps:

[0130] Step 401, load the texture recognition model.

[0131] In this embodiment, the texture recognition model can be pre-trained. As shown in Figure 5, the texture recognition model includes a texture detection network and a parameter detection network. The training method is as follows:

[0132] Obtain the first image data;

[0133] Call the texture detection network to extract ...

Embodiment 3

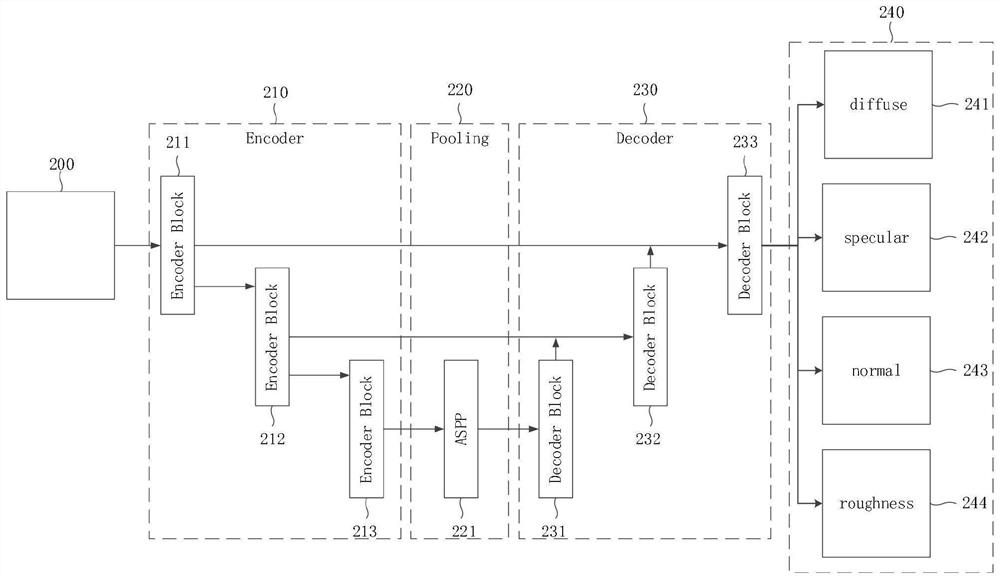

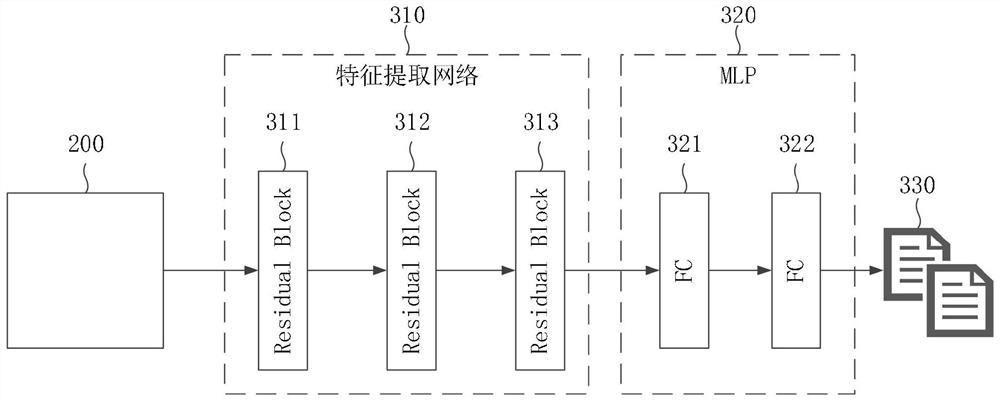

[0169] Figure 6 is a structural block diagram of a training device for a texture recognition model provided in Embodiment 3 of the present invention. The texture recognition model includes a texture detection network and a parameter detection network. The device may specifically include the following modules:

[0170] An image data acquisition module 601, configured to acquire first image data;

[0171] A texture map extraction module 602, configured to call the texture detection network to extract multiple frames of texture maps from the first image data;

[0172] A texture parameter extraction module 603, configured to call the parameter detection network to extract texture parameters from the first image data;

[0173] An image data rendering module 604, configured to differentiably render the texture map and the texture parameters to the scene in the first image data to obtain second image data;

[0174] A network training module 605, configured to use the second imag...
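The data flow through modules 601 to 604 can be sketched roughly as follows. This is a minimal illustration in plain Python, not the patented implementation: the two networks are stand-in callables, `render` is a toy weighted sum rather than a real differentiable renderer, and all names are hypothetical. Because the description of module 605 is cut off in this text, the mean-squared reconstruction loss between the first and second image data is purely an assumption, chosen as one common self-supervised objective.

```python
from dataclasses import dataclass
from typing import Callable, List

Image = List[List[float]]  # toy grayscale image, values in [0, 1]


@dataclass
class TextureRecognitionModel:
    """Holds the two sub-networks named in the text (stand-in callables here)."""
    texture_detection_network: Callable[[Image], List[Image]]
    parameter_detection_network: Callable[[Image], List[float]]


def render(texture_maps: List[Image], texture_params: List[float],
           first_image: Image) -> Image:
    """Toy stand-in for module 604: per-pixel weighted sum of the texture maps.

    A real system would use a differentiable renderer inside an autodiff framework.
    """
    height, width = len(first_image), len(first_image[0])
    return [[sum(p * m[y][x] for p, m in zip(texture_params, texture_maps))
             for x in range(width)] for y in range(height)]


def reconstruction_loss(first: Image, second: Image) -> float:
    """ASSUMPTION: mean squared error; the source text for module 605 is truncated."""
    count = len(first) * len(first[0])
    return sum((a - b) ** 2
               for row_a, row_b in zip(first, second)
               for a, b in zip(row_a, row_b)) / count


def forward_pass(model: TextureRecognitionModel, first_image: Image):
    texture_maps = model.texture_detection_network(first_image)        # module 602
    texture_params = model.parameter_detection_network(first_image)    # module 603
    second_image = render(texture_maps, texture_params, first_image)   # module 604
    return second_image, reconstruction_loss(first_image, second_image)


# Usage with hypothetical toy networks: one texture map that copies the input.
first_image = [[1.0, 0.0], [0.0, 1.0]]                                  # module 601
toy_model = TextureRecognitionModel(
    texture_detection_network=lambda img: [img],
    parameter_detection_network=lambda img: [0.5],
)
second_image, loss = forward_pass(toy_model, first_image)
```

In an actual training loop, the loss would be backpropagated through the renderer into both networks, which is why the source requires the rendering step to be differentiable.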
