Named entity recognition method and device based on hybrid lattice self-attention network

A named entity recognition technology, applied in biological neural network models, natural language data processing, instruments, and related fields. It addresses two problems in existing approaches: the role of global lexical information is ignored, and word feature fusion methods do not take into account differences in the semantic expression of word vectors and therefore cannot effectively enhance the word-level features of those vectors. Solving these problems improves recognition performance.

Embodiment Construction

[0062] The present invention is now described in further detail with reference to the accompanying drawings.

[0063] It should be noted that terms such as "upper", "lower", "left", "right", "front", and "rear" used in the invention are only for clarity of description and are not intended to limit the practicable scope of the present invention. Changes or adjustments to their relative relationships, without substantive changes to the technical content, shall also be regarded as falling within the practicable scope of the present invention.

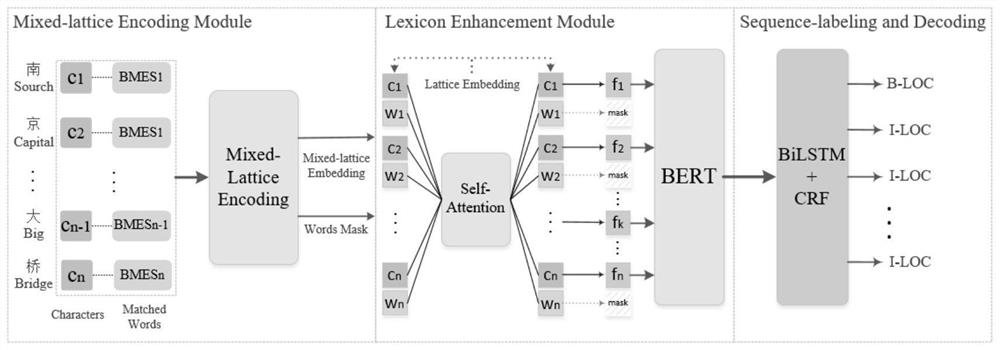

[0064] The present invention relates to a named entity recognition method based on a hybrid lattice self-attention network, which includes the following steps:

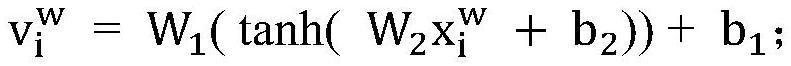

[0065] S1, look up in the dictionary the words formed by consecutive characters in the input sentence, merge them into a single multidimensional vector through positional alternation mapping, and encode the sentence feature vector represented by the word pair into a single dimension by using mixed word and wor...
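The dictionary lookup at the start of step S1 can be illustrated with a minimal sketch. It is not the patented implementation: the function name `match_lexicon_words`, the `max_len` cutoff, the sample sentence, and the toy lexicon are all assumptions made for illustration. The sketch also assumes a character-based language such as Chinese, and it covers only the lookup of dictionary words over consecutive characters. The later parts of S1 (positional alternation mapping and encoding) are truncated in the text and are not modelled.

```python
def match_lexicon_words(sentence, lexicon, max_len=4):
    """Return (start, end, word) for each lexicon word formed by
    consecutive characters of `sentence` (end index is inclusive).

    `max_len` is a hypothetical cap on word length, used only to
    bound the search; the source does not specify one.
    """
    matches = []
    n = len(sentence)
    for start in range(n):
        # Only spans of two or more characters are treated as words here.
        for end in range(start + 2, min(start + max_len, n) + 1):
            candidate = sentence[start:end]
            if candidate in lexicon:
                matches.append((start, end - 1, candidate))
    return matches


# Hypothetical example sentence and toy lexicon.
sentence = "南京市长江大桥"
lexicon = {"南京", "南京市", "市长", "长江", "大桥", "长江大桥"}

for start, end, word in match_lexicon_words(sentence, lexicon):
    print(start, end, word)
```

Each match records the start and end character positions of a dictionary word. This span information is what a lattice-style model typically attaches to the character sequence. Overlapping matches such as "南京市" and "市长" are all kept, leaving disambiguation to the downstream network.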
