Model training method and device, equipment and storage medium

A model training method and training-algorithm technology, applied in the field of model training, which addresses the problems of consumed human resources, reduced training efficiency, and increased manual adjustment time, and achieves efficient training from training files.

Examples

Embodiment 1

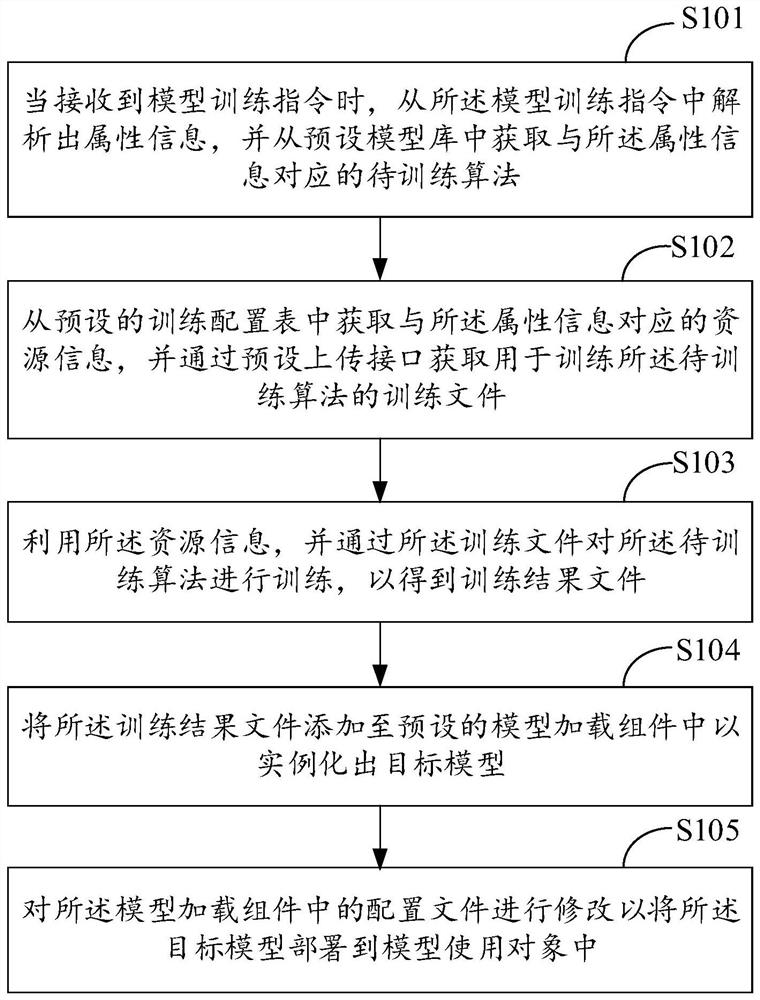

[0052] Embodiments of the present invention provide a model training method. As shown in Figure 1, the method specifically includes the following steps:

[0053] Step S101: When a model training instruction is received, attribute information is parsed from the instruction, and the algorithm to be trained that corresponds to the attribute information is acquired from a preset model library.

[0054] The attribute information is set in advance according to business requirements. It includes: the algorithm type, the industry of the object that uses the model, the business scenario within that industry, the algorithm parameters used to generate the algorithm to be trained, the total number of training times, a preset weight value indicating the importance of the training task, and the interface path of the object that uses the model.
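To make step S101 more concrete, the following minimal Python sketch models the attribute information listed above as a data class and looks up the algorithm to be trained in a preset model library. It is a hypothetical illustration only: the JSON instruction format, the field names, the choice of (algorithm type, industry, business scenario) as the library key, and the helpers `parse_instruction` and `acquire_algorithm` are all assumptions, since the text does not specify how the instruction is encoded or how the model library is indexed.

```python
import json
from dataclasses import dataclass, field


@dataclass
class AttributeInfo:
    """Attribute information carried by a model training instruction (fields follow [0054])."""
    algorithm_type: str
    industry: str
    business_scenario: str
    algorithm_params: dict = field(default_factory=dict)
    total_training_times: int = 1
    importance_weight: float = 0.0  # preset weight for the importance of the training task
    interface_path: str = ""        # interface path of the object that uses the model


# Hypothetical preset model library: (algorithm type, industry, scenario) -> algorithm name.
MODEL_LIBRARY = {
    ("classification", "insurance", "claim_review"): "logistic_regression",
    ("ner", "finance", "contract_parsing"): "bilstm_crf",
}


def parse_instruction(instruction: str) -> AttributeInfo:
    """Parse attribute information out of a JSON-encoded instruction (assumed format)."""
    payload = json.loads(instruction)
    return AttributeInfo(**payload["attributes"])


def acquire_algorithm(info: AttributeInfo) -> dict:
    """Look up the algorithm to be trained and bind the parameters from the attributes."""
    key = (info.algorithm_type, info.industry, info.business_scenario)
    if key not in MODEL_LIBRARY:
        raise KeyError(f"no algorithm registered for {key}")
    return {"name": MODEL_LIBRARY[key], "params": dict(info.algorithm_params)}


# Example instruction; every value is illustrative.
instruction = json.dumps({
    "attributes": {
        "algorithm_type": "classification",
        "industry": "insurance",
        "business_scenario": "claim_review",
        "algorithm_params": {"learning_rate": 0.01},
        "total_training_times": 10,
        "importance_weight": 0.8,
        "interface_path": "/api/claims/model",
    }
})
info = parse_instruction(instruction)
algorithm = acquire_algorithm(info)
```

Keying the library on several attributes rather than on the algorithm type alone is one plausible reading of "corresponding to the attribute information"; a real system might use only a subset of the fields.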

[0055] Specifically, step S101 includes:

[0056] Step A1: Parse the algorithm type from the attrib...

Embodiment 2

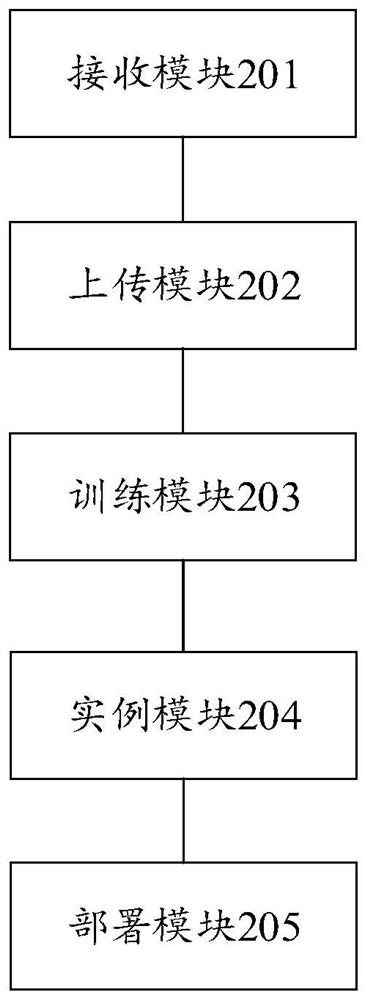

[0103] Embodiments of the present invention provide a model training device. As shown in Figure 2, the device specifically includes the following components:

[0104] A receiving module 201, configured to parse attribute information from a model training instruction when the instruction is received, and to acquire the algorithm to be trained corresponding to the attribute information from a preset model library;

[0105] An upload module 202, configured to obtain resource information corresponding to the attribute information from a preset training configuration table, and to obtain a training file for training the algorithm to be trained through a preset upload interface;

[0106] A training module 203, configured to train the algorithm to be trained on the training file using the resource information, to obtain a training result file;

[0107] An instance module 204, configured to add the training result file to a preset model loading comp...
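The way modules 201 to 204 cooperate can be illustrated with the following minimal Python sketch. It is a hypothetical illustration, not the patented implementation: the class `ModelTrainingDevice`, the dictionary-based model library and training configuration table, the callable standing in for the upload interface, and the list standing in for the model loading component are all assumptions. The training step is a placeholder that only iterates over the training file instead of fitting a model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class TrainingResult:
    algorithm_name: str
    resources: Dict[str, int]
    records_seen: int
    result_file: str


class ModelTrainingDevice:
    """Sketch of modules 201-204; every name and data format here is hypothetical."""

    def __init__(self, model_library: Dict[Tuple[str, str, str], str],
                 config_table: Dict[str, Dict[str, int]],
                 upload_interface: Callable[[], List[str]]):
        self.model_library = model_library            # preset model library
        self.config_table = config_table              # preset training configuration table
        self.upload_interface = upload_interface      # preset upload interface (returns records)
        self.model_loading_component: List[str] = []  # stand-in for the model loading component

    def receive(self, attrs: dict) -> str:
        """Receiving module 201: map attribute information to the algorithm to be trained."""
        key = (attrs["algorithm_type"], attrs["industry"], attrs["business_scenario"])
        return self.model_library[key]

    def upload(self, attrs: dict) -> Tuple[Dict[str, int], List[str]]:
        """Upload module 202: fetch resource information and the training file."""
        resources = self.config_table[attrs["algorithm_type"]]
        training_file = self.upload_interface()
        return resources, training_file

    def train(self, algorithm_name: str, resources: Dict[str, int],
              training_file: List[str], total_training_times: int) -> TrainingResult:
        """Training module 203: placeholder that only counts records over all passes."""
        records_seen = 0
        for _ in range(total_training_times):
            for _record in training_file:
                records_seen += 1  # a real implementation would update model weights here
        return TrainingResult(algorithm_name, resources, records_seen,
                              f"{algorithm_name}.result")

    def instantiate(self, result: TrainingResult) -> None:
        """Instance module 204: add the training result file to the model loading component."""
        self.model_loading_component.append(result.result_file)

    def run(self, attrs: dict) -> TrainingResult:
        algorithm_name = self.receive(attrs)
        resources, training_file = self.upload(attrs)
        result = self.train(algorithm_name, resources, training_file,
                            attrs["total_training_times"])
        self.instantiate(result)
        return result


device = ModelTrainingDevice(
    model_library={("classification", "insurance", "claim_review"): "logistic_regression"},
    config_table={"classification": {"cpus": 4, "gpus": 0}},
    upload_interface=lambda: ["record-1", "record-2", "record-3"],
)
result = device.run({
    "algorithm_type": "classification",
    "industry": "insurance",
    "business_scenario": "claim_review",
    "total_training_times": 3,
})
```

Injecting the upload interface as a callable keeps the sketch testable without a real file-transfer endpoint; in practice each module would likely be a separate component rather than a method on one class.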

Embodiment 3

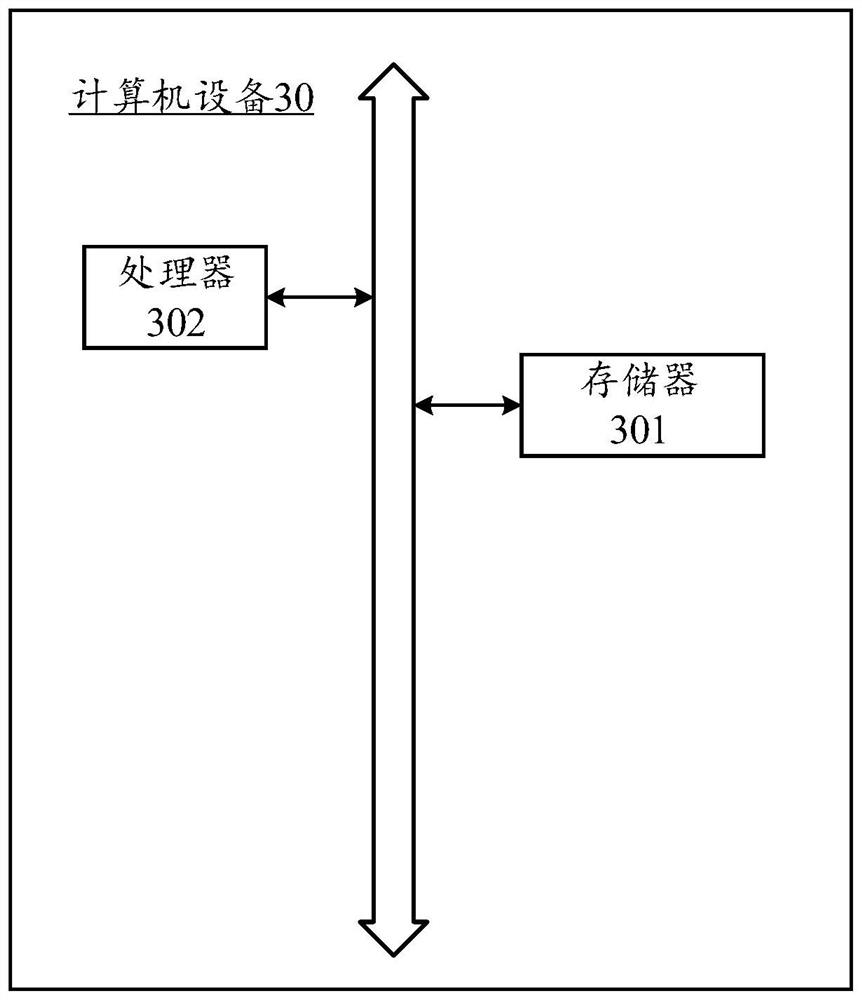

[0132] This embodiment also provides a computer device, such as a smartphone, a tablet computer, a notebook computer, a desktop computer, a rack server, a blade server, a tower server, or a cabinet server (including an independent server or a server cluster composed of multiple servers). As shown in Figure 3, the computer device 30 in this embodiment includes at least, but is not limited to, a memory 301 and a processor 302 that are communicatively connected to each other through a system bus. It should be noted that Figure 3 shows the computer device 30 with only components 301-302; implementing all of the illustrated components is not required, and more or fewer components may be implemented instead.

[0133] In this embodiment, the memory 301 (that is, a readable storage medium) includes a flash memory, a hard disk, a multimedia card, a card-type memory (for example, SD or DX memory, etc.), random access memory (RAM), s...
