End-to-end image semantic description method and system for the fashion field

A semantic description technology for the fashion field, applied to image semantic description generation methods and systems. It addresses problems such as achieving a high click-through rate and good results, and achieves a reasonable design effect.


Embodiment Construction

[0060] The present invention will be further explained below.

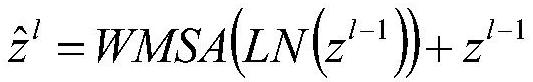

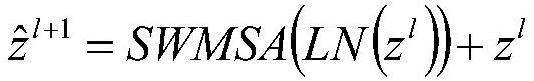

[0061] The end-to-end image semantic description method for the fashion field according to the present invention comprises the following steps:

[0062] Step 1: Data Preparation:

[0063] Fashion datasets are usually constructed by crawling e-commerce websites or by manual photography. The size, shooting angle, and number of pictures vary from product to product, the resolutions of pictures of different products differ considerably, and some noise pictures are unrelated to the description content. In addition, descriptions are expressed very differently across datasets. In Fashion-Gen, each description is a templated text composed of multiple sentences; the descriptions are longer overall and contain more professional fashion vocabulary, but the templated form may make it easier for the model to predict the words in the template while ignoring the meanin...
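The text above is truncated before it states how the invention itself handles these issues, so the following is only a minimal sketch of two common preprocessing ideas, not the patented procedure. `letterbox_params` (a hypothetical helper) computes how to scale a product picture of arbitrary resolution into a fixed `target` x `target` square while preserving aspect ratio, padding the remainder; `template_words` (also hypothetical) flags words that appear in at least a fraction `min_fraction` of descriptions, which in templated captions such as those described for Fashion-Gen are likely template scaffolding rather than item-specific content. The target size of 256 and the 0.8 threshold are assumptions chosen for illustration.

```python
from collections import Counter
import re


def letterbox_params(width: int, height: int, target: int = 256):
    """Fit a (width, height) image inside a target x target square.

    Preserves aspect ratio and centers the result; returns
    (new_w, new_h, pad_left, pad_top). Hypothetical helper.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    # Scale so the longer side exactly matches the target size.
    scale = target / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    # Split the leftover space evenly to center the resized image.
    pad_left = (target - new_w) // 2
    pad_top = (target - new_h) // 2
    return new_w, new_h, pad_left, pad_top


def template_words(descriptions, min_fraction=0.8):
    """Return words occurring in at least min_fraction of descriptions.

    Uses document frequency: each word counts once per description.
    Hypothetical helper; the threshold is an illustrative assumption.
    """
    doc_freq = Counter()
    for text in descriptions:
        tokens = set(re.findall(r"[a-z]+", text.lower()))
        doc_freq.update(tokens)
    threshold = min_fraction * len(descriptions)
    return {word for word, count in doc_freq.items() if count >= threshold}


if __name__ == "__main__":
    # A wide 800x400 product photo becomes 256x128 with 64 px padding top and bottom.
    print(letterbox_params(800, 400))
    # Toy, made-up templated descriptions (not taken from any real dataset).
    toy = [
        "Long sleeve shirt in white. Features button closure.",
        "Short sleeve shirt in navy. Features zip closure.",
        "Wool coat in black. Features button closure.",
    ]
    print(sorted(template_words(toy)))
```

On the toy descriptions, the words shared by every caption ("closure", "features", "in") are flagged, while item-specific words such as colors and garment types are not. Such a list could, for example, be used to down-weight template tokens when training, though whether the invention does anything of the kind is not stated in the available text.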
