Virtual-real fusion rendering method and device based on monocular camera reconstruction

A virtual-real fusion, monocular-camera technology, applied in the field of medical image processing, that addresses the lack of depth perception in the display



Embodiment Construction

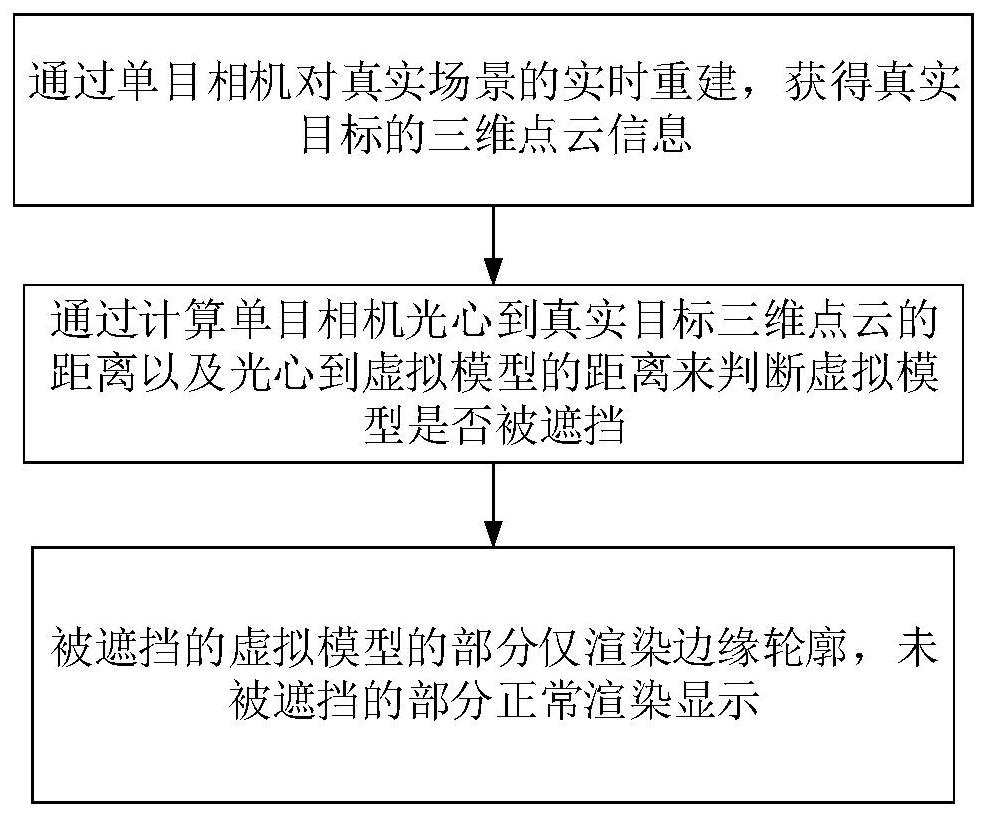

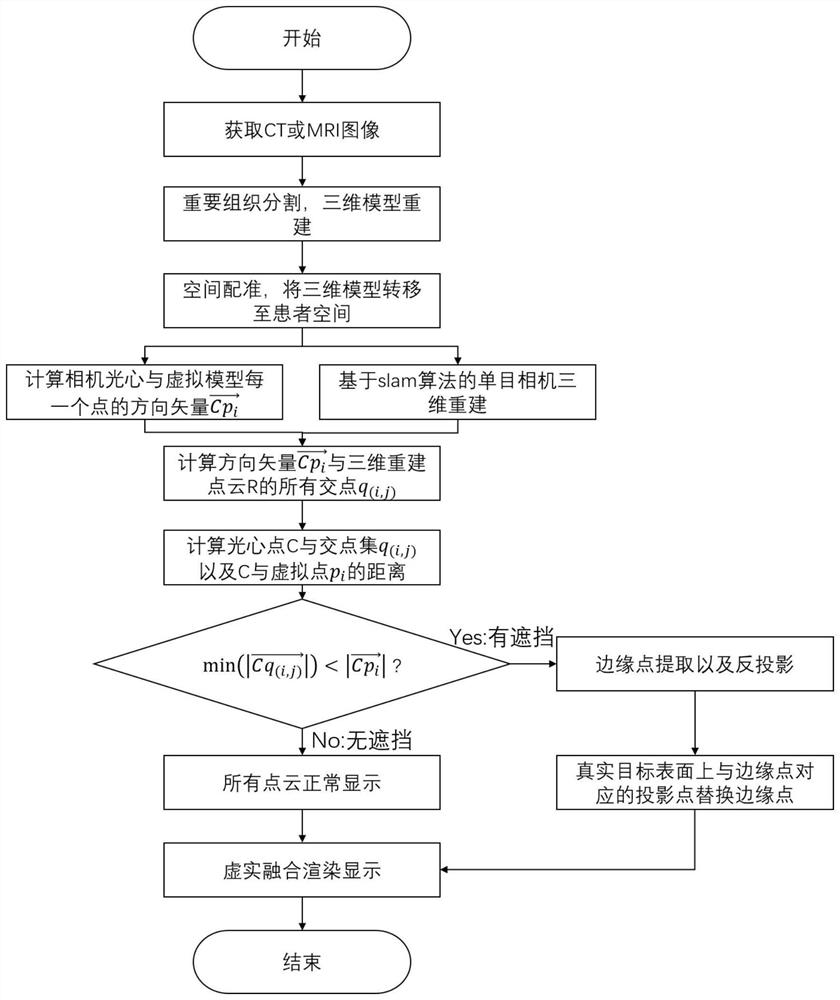

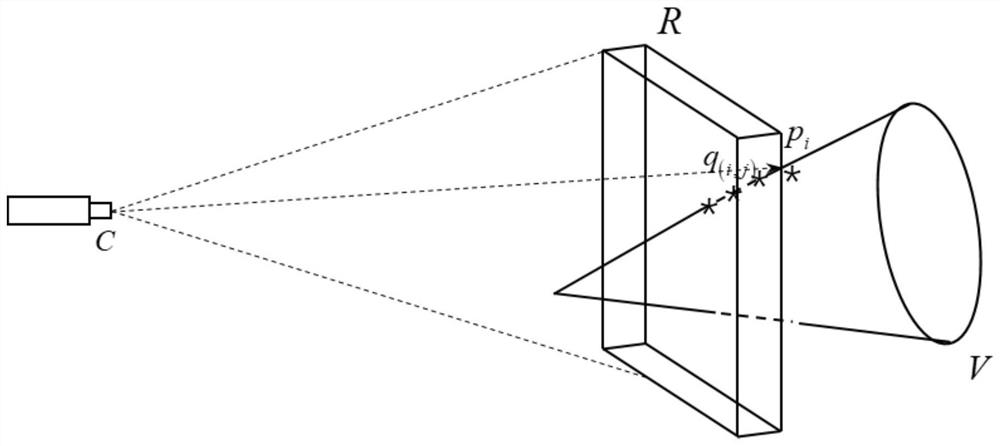

[0025] An effective way to solve the invisibility caused by mutual occlusion between virtual and real objects is to obtain the depth information of the camera, the real target, and the virtual model in real time, and to render the virtual model in layers based on that depth information. The occluded part of the virtual model shows only its edge outline, while unoccluded parts display all of their point clouds normally. Because every occluded point cloud is replaced with an edge contour, the occluded part of the virtual model no longer floats in front of the real target and hides it.
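The layering idea in this paragraph can be illustrated with a minimal sketch. It assumes that the real target and the virtual model have already been converted to per-pixel depth maps of the same size, with `np.inf` marking empty pixels. The function name `fuse_layers`, the depth-map representation, and the 4-neighbour definition of the edge outline are illustrative assumptions, not details taken from the patent text.

```python
import numpy as np

def fuse_layers(real_depth, virt_depth):
    """Split virtual-model pixels into a visible layer and an outline layer.

    real_depth, virt_depth: 2D float arrays of depth from the camera
    (hypothetical inputs); np.inf means nothing is present at that pixel.
    Returns two boolean masks: (visible, outline).
    """
    has_virtual = np.isfinite(virt_depth)
    # A virtual pixel is occluded when the real target is closer to the camera.
    occluded = has_virtual & (real_depth < virt_depth)
    visible = has_virtual & ~occluded

    # Assumed outline rule: an occluded pixel belongs to the edge outline
    # if at least one of its 4 neighbours is not occluded.
    padded = np.pad(occluded, 1, constant_values=False)
    all_neighbours_occluded = (
        padded[:-2, 1:-1] & padded[2:, 1:-1] &
        padded[1:-1, :-2] & padded[1:-1, 2:]
    )
    outline = occluded & ~all_neighbours_occluded
    return visible, outline

# Toy scene: a real object covers the central 3x3 block in front of
# a virtual model that fills the whole 5x5 view.
real = np.full((5, 5), np.inf)
real[1:4, 1:4] = 1.0
virt = np.full((5, 5), 2.0)
visible, outline = fuse_layers(real, virt)
```

In this toy scene the virtual pixels outside the central block are drawn normally, and the occluded block is reduced to its border, so the real object stays visible through the middle.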

[0026] As shown in Figure 1, this virtual-real fusion rendering method based on monocular camera reconstruction includes the following steps:

[0027] (1) Obtain the 3D point cloud information of the real target by reconstructing the real scene in real time with the monocular camera;

[0028] (2) Determine whether the virtual model is blocked by calculating the distance fro...
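The text of step (2) is truncated here, so the exact distance computation is not known. As a hedged illustration of how a reconstructed point cloud could be turned into per-pixel depth from the camera, the sketch below projects points through an assumed pinhole camera and keeps the nearest point per pixel. The function name `depth_map_from_points`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the use of z-depth rather than Euclidean distance are all assumptions made for this example.

```python
import numpy as np

def depth_map_from_points(points, fx, fy, cx, cy, width, height):
    """Project a point cloud to a depth map with a pinhole model.

    points: (N, 3) float array in camera coordinates
            (x right, y down, z forward); hypothetical input format.
    Returns a (height, width) array of z-depths; np.inf where no point lands.
    """
    depth = np.full((height, width), np.inf)
    pts = points[points[:, 2] > 0]  # keep only points in front of the camera
    u = np.round(fx * pts[:, 0] / pts[:, 2] + cx).astype(int)
    v = np.round(fy * pts[:, 1] / pts[:, 2] + cy).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    # Where several points fall on one pixel, keep the nearest one.
    np.minimum.at(depth, (v[inside], u[inside]), pts[inside, 2])
    return depth

# Toy cloud: two points on the optical axis, one behind the camera,
# and one that projects outside the image.
cloud = np.array([
    [0.0, 0.0, 2.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, -1.0],
    [100.0, 0.0, 1.0],
])
depth = depth_map_from_points(cloud, fx=1.0, fy=1.0, cx=1.0, cy=1.0,
                              width=3, height=3)
```

Applying the same projection to the real target's point cloud and to the virtual model would give two depth maps that can be compared pixel by pixel.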
