Video spatiotemporal action localization method based on progressive attention hypergraph

A localization method using attention techniques, applied in the field of computer vision. It addresses problems such as high computational overhead and reduced confidence, and aims to achieve accurate action recognition, improved operating efficiency, and preserved computational efficiency.



Examples

Embodiment Construction

[0049] The present invention will be further described below with reference to the accompanying drawings.

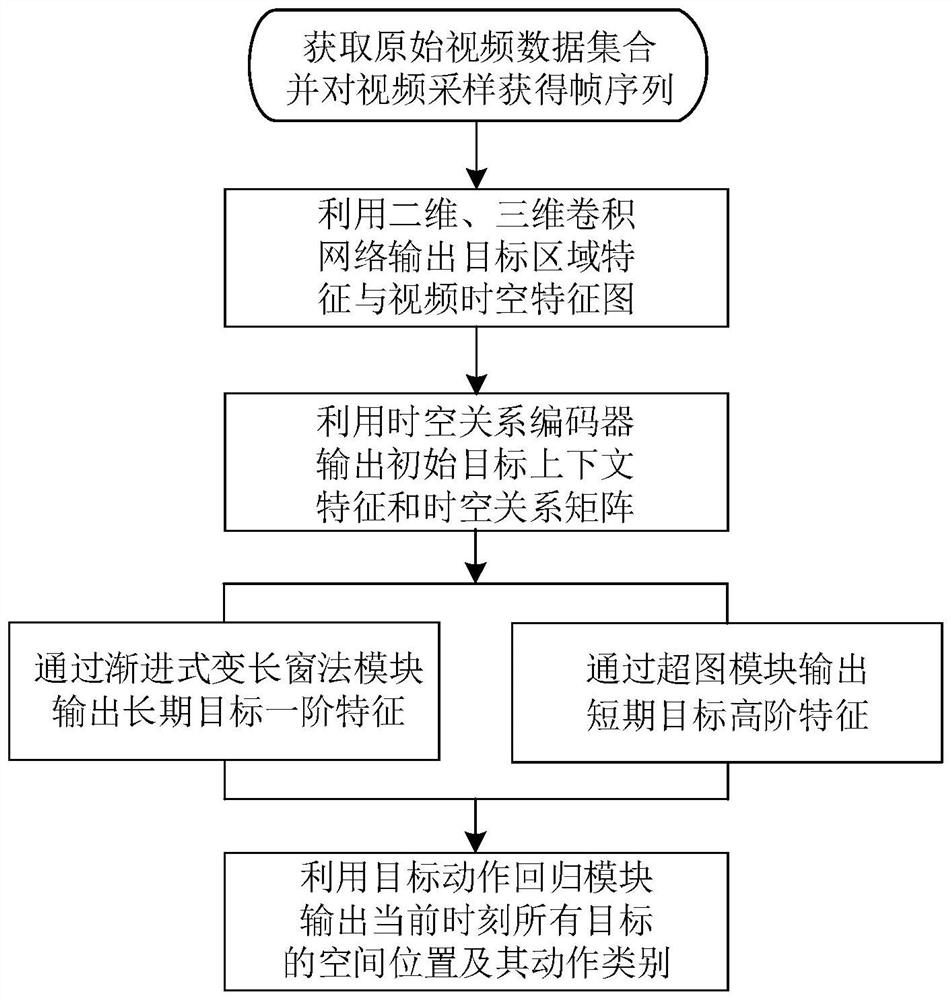

[0050] As shown in Figure 1, the video spatiotemporal action localization method based on the progressive attention hypergraph proceeds as follows. First, the original video is uniformly sampled, and a convolutional neural network extracts the target region features and the video spatiotemporal feature map. A spatiotemporal relation encoder then produces the target context features and the spatiotemporal relation matrix. Next, the target context features and the spatiotemporal relation matrix are fed into the progressive variable-length sliding window module to obtain target first-order features consistent with the original action. In parallel, a hypergraph module is constructed to generate short-term high-order target features by adding shared-attribute constraints. Finally, the target action regression module is used to obtain the spatial positions and action cat...
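Three of the steps named above (uniform sampling, variable-length sliding windows, and a hypergraph built from shared attributes) can be illustrated with a minimal sketch. This is not the patented implementation. The function names, the stride of 1, the choice of window lengths, and the rule "one hyperedge per shared attribute label" are all assumptions made for illustration, since the text above does not specify them.

```python
import numpy as np


def uniform_sample_indices(num_frames: int, num_samples: int) -> np.ndarray:
    """Pick num_samples frame indices spread evenly over [0, num_frames - 1]."""
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    return np.linspace(0, num_frames - 1, num=num_samples).round().astype(int)


def variable_length_windows(num_steps: int, lengths: list[int]) -> list[tuple[int, int]]:
    """Enumerate half-open (start, end) windows for each length, with stride 1.

    Assumption: the window lengths and the stride are illustrative only.
    """
    windows = []
    for length in lengths:
        for start in range(0, num_steps - length + 1):
            windows.append((start, start + length))
    return windows


def incidence_matrix(attributes: list[str]) -> np.ndarray:
    """Build a hypergraph incidence matrix H with H[v, e] = 1 when target v
    carries attribute label e.

    Assumption: each distinct attribute label defines one hyperedge that
    connects every target sharing that label.
    """
    labels = sorted(set(attributes))
    H = np.zeros((len(attributes), len(labels)), dtype=int)
    for v, attr in enumerate(attributes):
        H[v, labels.index(attr)] = 1
    return H


# Hypothetical usage: 4 frames sampled from a 10-frame clip, windows of
# length 2 and 3 over those 4 time steps, and 3 targets with toy labels.
frame_ids = uniform_sample_indices(10, 4)
windows = variable_length_windows(len(frame_ids), [2, 3])
H = incidence_matrix(["person", "ball", "person"])
```

A hyperedge can connect any number of targets at once, which is what distinguishes the hypergraph from an ordinary pairwise graph; in the incidence matrix this appears as a column with more than two nonzero entries.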
