Speech-driven human face cartoon animation method based on a combination of statistics and rules

A technology for driving a human face model and its facial movements from speech, applied in computing, special data processing applications, biological neural network models, etc.


Embodiment Construction

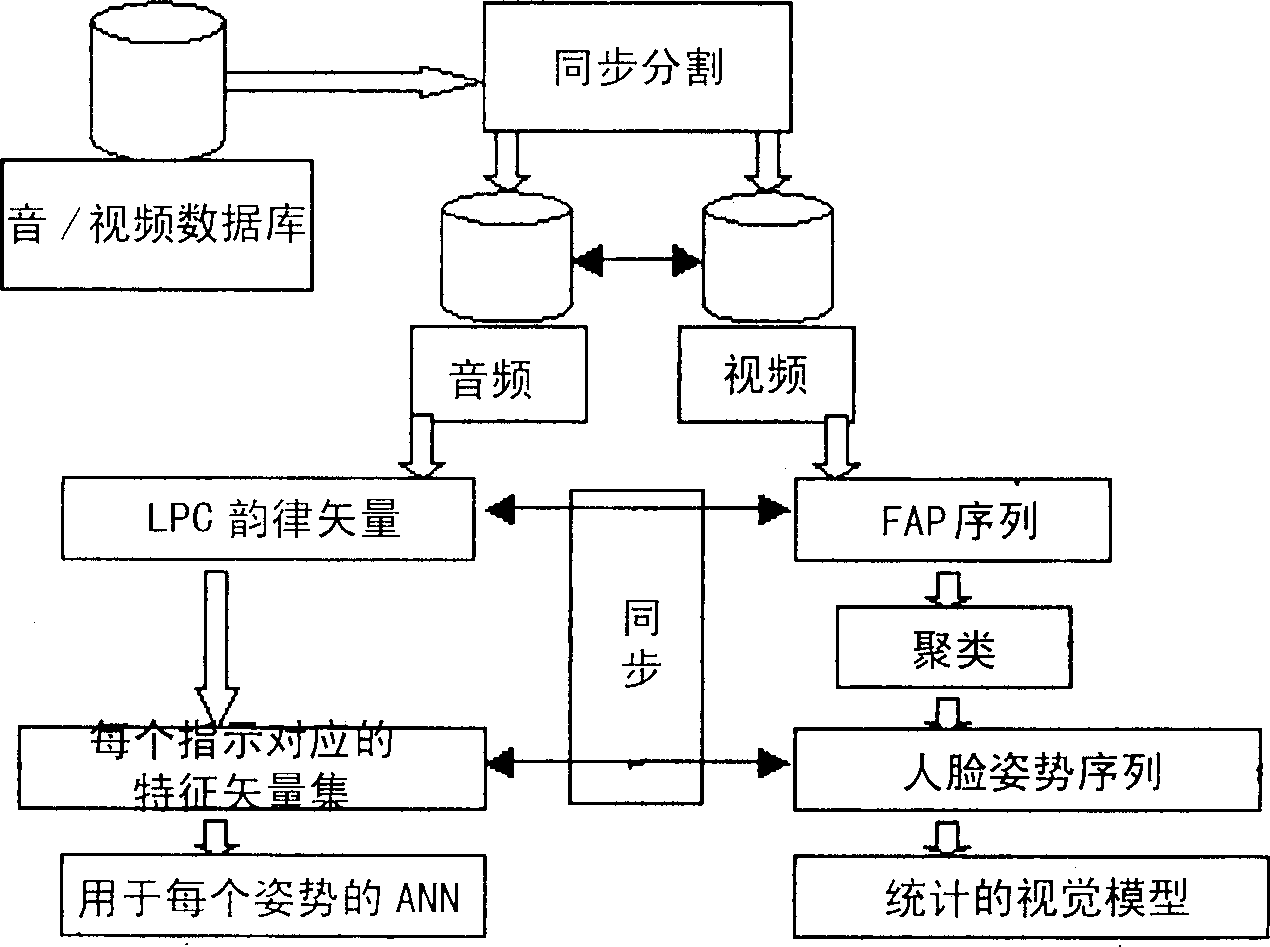

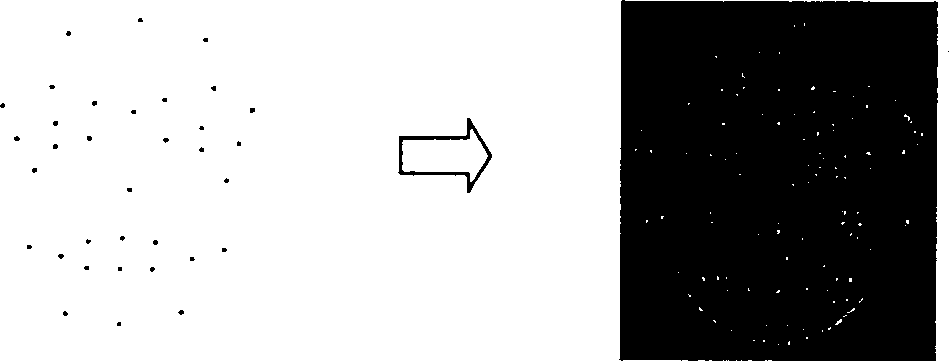

[0019] First, unsupervised cluster analysis is applied to the video to obtain the classes of face motion parameter (FAP) feature vectors. Next, the face dynamic model that occurs synchronously with speech events (essentially a transition matrix between FAP classes) is estimated by counting; we call it the statistical visual model, and its principle is similar to that of the statistical language model in natural language processing. Finally, multiple artificial neural networks (ANNs) are trained to learn the mapping from speech patterns to facial animation patterns. After training, several candidate facial animation pattern sequences can be computed from new speech data, and the statistical visual model is used to select the best FAP sequence among them. That sequence is then corrected and supplemented using face motion rules and smoothed, and the resulting FAPs directly drive the face mesh model. This strategy has the following unique features:

[0020] 1) The estab...
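The pipeline in paragraph [0019] can be illustrated with a minimal Python sketch. It is not the patented implementation. It assumes that FAP frames have already been clustered into integer class labels and that the trained ANNs have already produced a few candidate label sequences for a new utterance. The function names (`transition_matrix`, `select_best`, `smooth`), the add-one smoothing of the counts, and the moving-average filter are illustrative assumptions, not details taken from the patent.

```python
import numpy as np

def transition_matrix(label_seqs, n_classes, alpha=1.0):
    """Estimate a FAP-class transition matrix by counting consecutive pairs.

    alpha is an add-alpha smoothing constant (an assumption of this sketch)
    so that unseen transitions keep a small non-zero probability.
    """
    counts = np.full((n_classes, n_classes), alpha, dtype=float)
    for seq in label_seqs:
        for prev, cur in zip(seq[:-1], seq[1:]):
            counts[prev, cur] += 1.0
    # Normalize each row so it is a distribution over the next class.
    return counts / counts.sum(axis=1, keepdims=True)

def sequence_log_prob(seq, trans):
    """Log-probability of a class sequence under the transition model."""
    return float(sum(np.log(trans[p, c]) for p, c in zip(seq[:-1], seq[1:])))

def select_best(candidates, trans):
    """Pick the candidate sequence the statistical visual model scores highest."""
    scores = [sequence_log_prob(c, trans) for c in candidates]
    return candidates[int(np.argmax(scores))]

def smooth(fap_frames, window=3):
    """Moving-average smoothing over time; window is assumed to be odd."""
    half = window // 2
    kernel = np.ones(window) / window
    padded = np.pad(fap_frames, ((half, half), (0, 0)), mode="edge")
    cols = [np.convolve(padded[:, d], kernel, mode="valid")
            for d in range(fap_frames.shape[1])]
    return np.stack(cols, axis=1)

# Hypothetical training data: FAP class labels per video frame.
training = [[0, 1, 2, 0, 1, 2], [0, 1, 2]]
trans = transition_matrix(training, n_classes=3)

# Hypothetical candidate sequences, e.g. produced by several ANNs.
candidates = [[0, 1, 2], [0, 2, 1], [0, 0, 0]]
best = select_best(candidates, trans)

# Hypothetical FAP vectors (frames x dimensions) to be smoothed before driving the mesh.
frames = np.array([[0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 1.0]])
smoothed = smooth(frames)
```

Scoring whole candidate sequences keeps the sketch short; the rule-based correction step is omitted because the source does not spell out the rules.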
