Multi-path picture mixing method based on DCT space

A picture mixing technique, applied in the field of image communication, that addresses the problems of secondary-coding distortion, difficult interconnection, and heavy computation. It avoids distortion, enhances flexibility, and removes the computational bottleneck.


Embodiment Construction

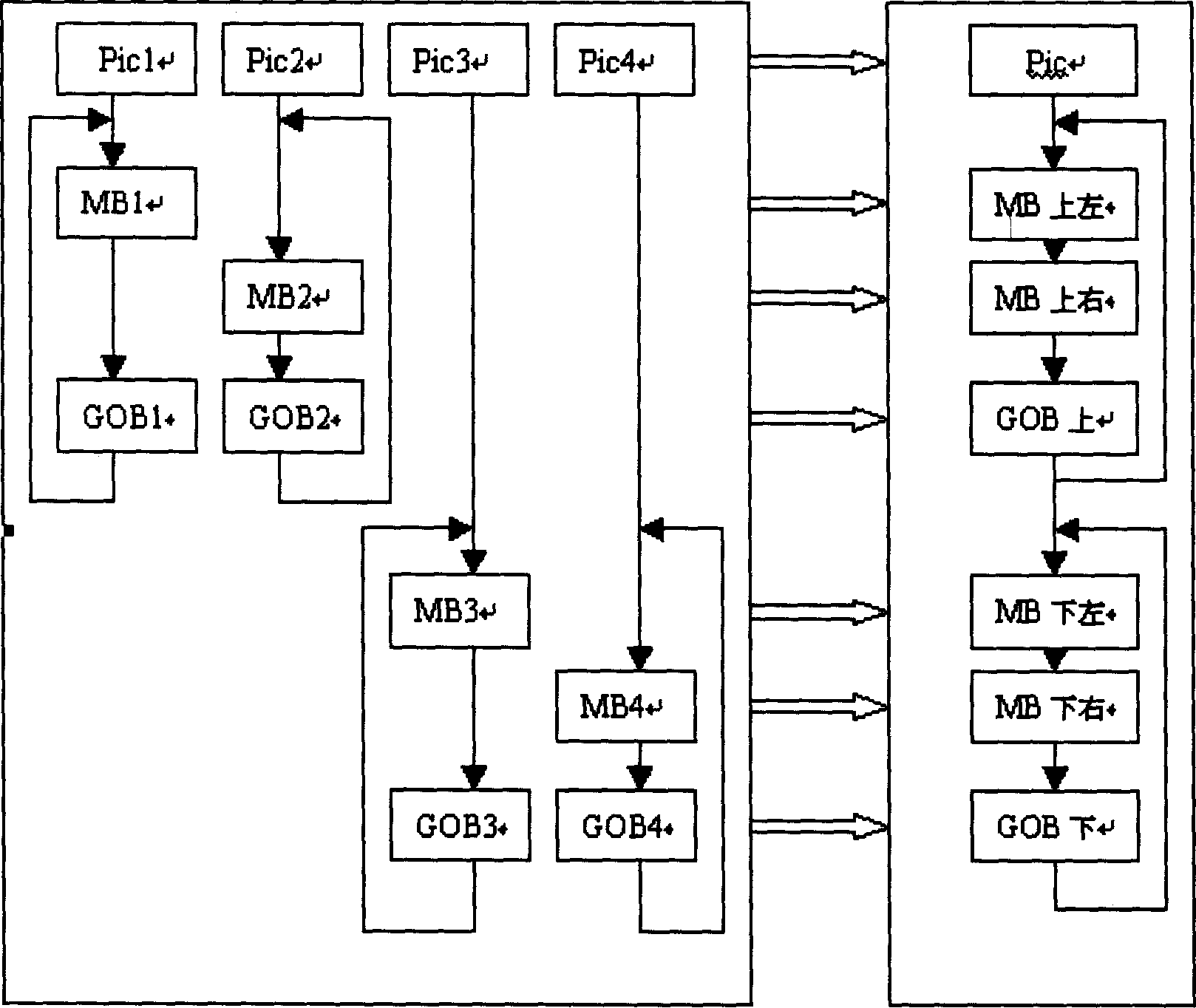

[0029] Referring to Figure 1, multiple video streams are combined at the syntax level of the video stream according to their spatial positions in the mixed picture. For example, four QCIF bitstreams are combined into one CIF bitstream, or four CIF bitstreams are combined into one 4CIF bitstream. Each sub-stream is mapped to the syntax stream of the corresponding macroblocks of the mixed large picture, and the picture header, group-of-blocks header, and macroblock header information of the mixed large picture is generated from the sub-channels participating in the mix. Figure 1 illustrates how the four bitstreams Pic1-4, with their macroblock layers MB1-4 and group-of-blocks layers GOB1-4, are mapped into the bitstream Pic and its macroblock and group-of-blocks layers. The mixing is carried out entirely at the bitstream level, requiring only the demultiplexing and multiplexing of video streams, a...
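The spatial mapping described above can be sketched for the four-QCIF-to-CIF case. This is a minimal illustration, not the patented procedure: it covers only where each sub-picture macroblock lands in the mixed picture, not bitstream parsing or header regeneration. The quadrant order (Pic1 top-left, Pic2 top-right, Pic3 bottom-left, Pic4 bottom-right) and the function names `mixed_mb_position` and `mixed_mb_address` are assumptions made for the example; the source does not state them.

```python
# QCIF is 176x144 pixels, i.e. 11x9 macroblocks of 16x16 pixels.
QCIF_MB_COLS, QCIF_MB_ROWS = 11, 9


def mixed_mb_position(sub_index, mb_row, mb_col,
                      sub_cols=QCIF_MB_COLS, sub_rows=QCIF_MB_ROWS):
    """Map a macroblock of sub-picture `sub_index` (0..3) to its
    (row, col) in the mixed picture.

    Assumed layout (hypothetical, not given in the source):
    0 = top-left, 1 = top-right, 2 = bottom-left, 3 = bottom-right.
    """
    if not 0 <= sub_index < 4:
        raise ValueError("sub_index must be in 0..3")
    if not (0 <= mb_row < sub_rows and 0 <= mb_col < sub_cols):
        raise ValueError("macroblock position outside the sub-picture")
    quad_row, quad_col = divmod(sub_index, 2)
    return quad_row * sub_rows + mb_row, quad_col * sub_cols + mb_col


def mixed_mb_address(sub_index, mb_row, mb_col,
                     sub_cols=QCIF_MB_COLS, sub_rows=QCIF_MB_ROWS):
    """Raster-scan macroblock address in the mixed picture,
    which is 2 * sub_cols macroblocks wide."""
    row, col = mixed_mb_position(sub_index, mb_row, mb_col,
                                 sub_cols, sub_rows)
    return row * (2 * sub_cols) + col


if __name__ == "__main__":
    # First macroblock of each sub-picture in the mixed CIF picture.
    for pic in range(4):
        print(pic + 1, mixed_mb_position(pic, 0, 0),
              mixed_mb_address(pic, 0, 0))
```

Passing `sub_cols=22, sub_rows=18` gives the same mapping for four CIF pictures mixed into one 4CIF picture. Under this layout, each macroblock row of the mixed picture takes its left half from one sub-picture and its right half from another, which is why the headers of the mixed picture have to be rebuilt rather than copied.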
