Architecture for controlling a computer using hand gestures

A technology in the field of hand gestures and computer systems, applied to mechanical pattern conversion, static indicating devices, instruments, and related areas. It addresses the problems that traditional computer interfaces are inconvenient to operate precisely, cannot meet the needs of a number of applications, and are less practical or efficient, and it achieves the effect of reducing the computational requirements of the system.


Embodiment Construction

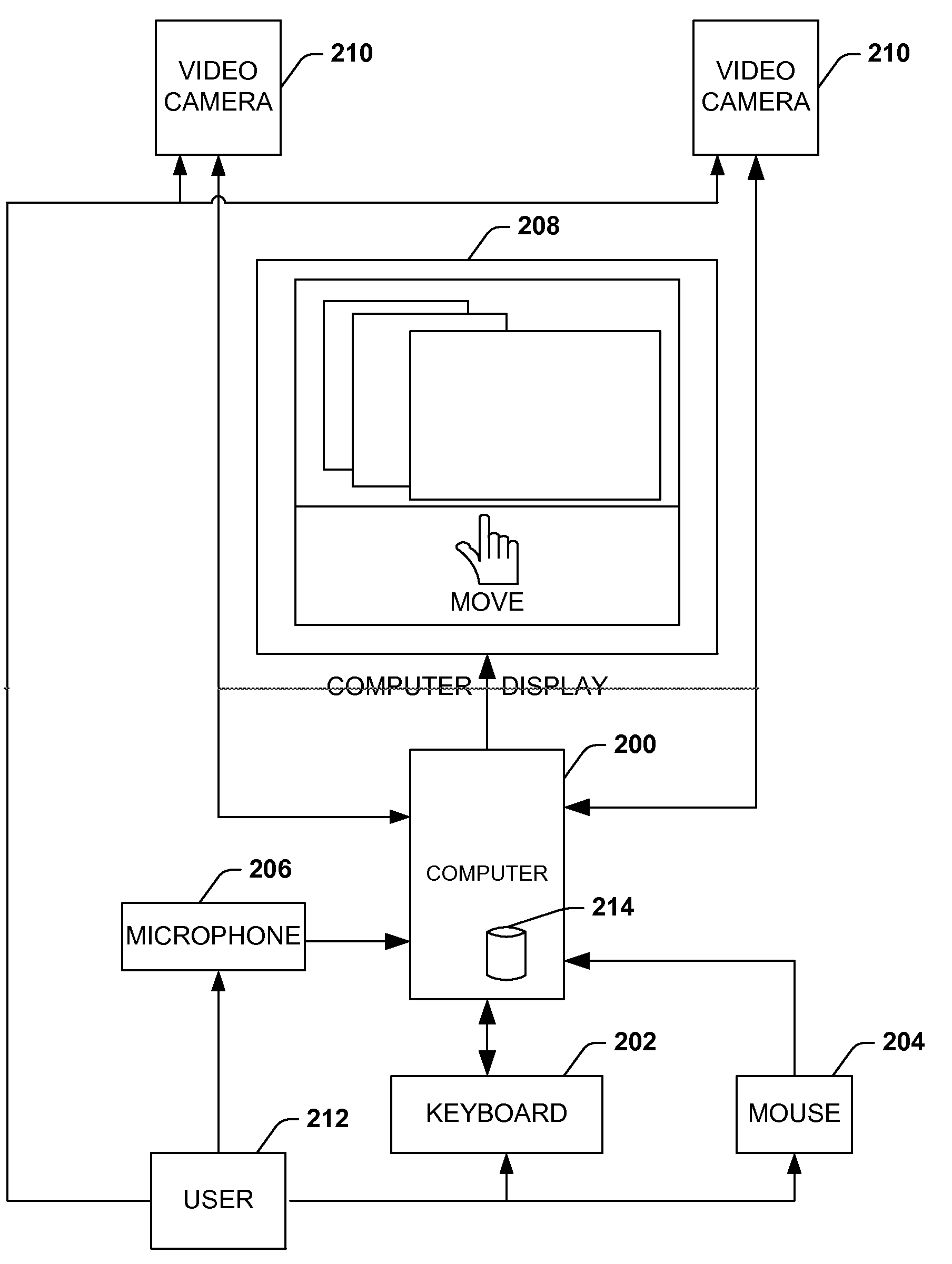

[0039] As used in this application, the terms "component" and "system" are intended to refer to a computer-related entity, either hardware, a combination of hardware and software, software, or software in execution. For example, a component may be, but is not limited to being, a process running on a processor, a processor, an object, an executable, a thread of execution, a program, and/or a computer. By way of illustration, both an application running on a server and the server can be a component. One or more components may reside within a process and/or thread of execution, and a component may be localized on one computer and/or distributed between two or more computers.

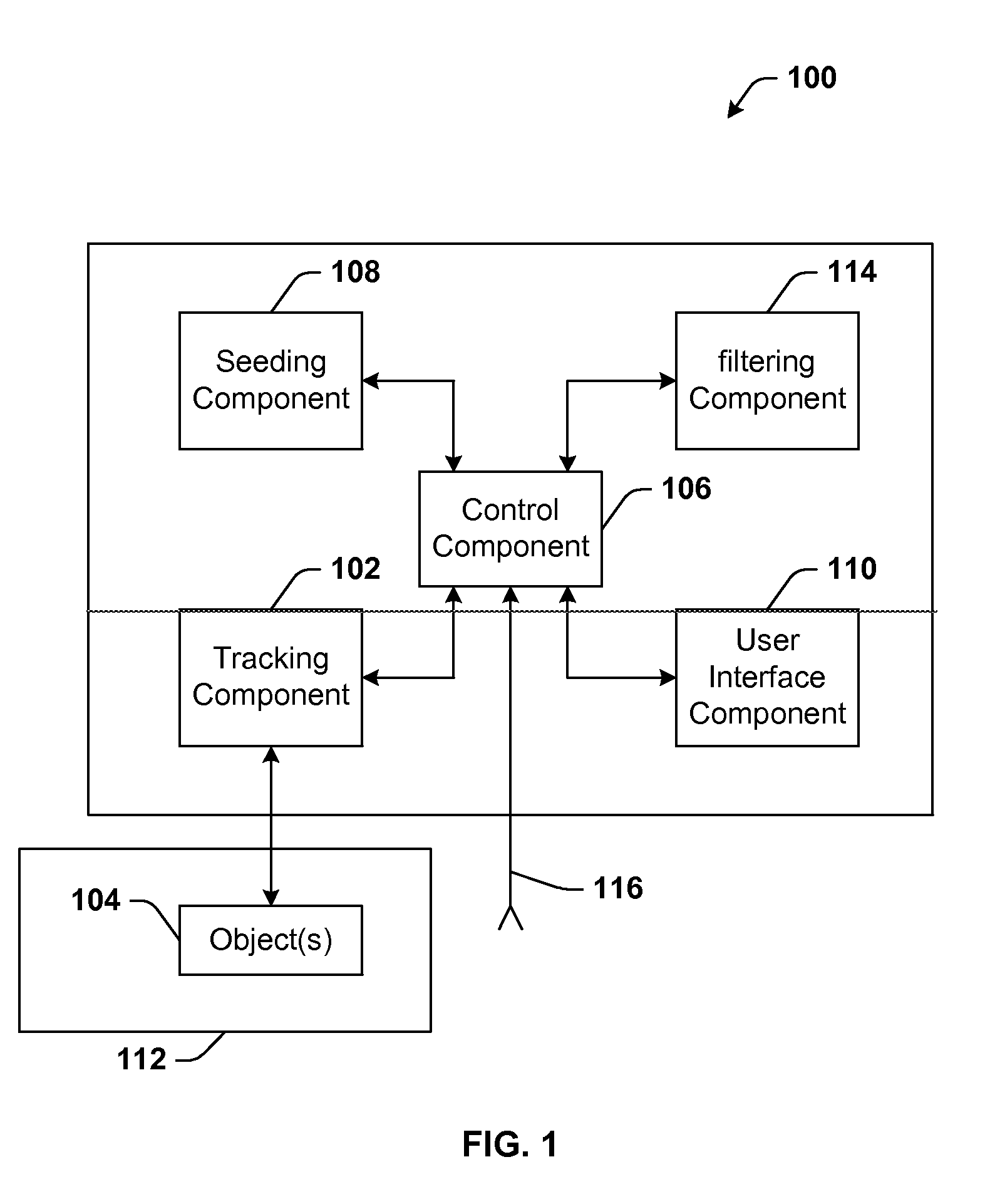

[0040] The present invention relates to a system and methodology for implementing a perceptual user interface comprising alternative modalities for controlling computer programs and manipulating on-screen objects through hand gestures or a combination of hand gestures and/or verbal commands. A perceptual user interface...
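The paragraph above describes the interface only at a high level. The following Python sketch shows one possible way to route a recognized hand gesture, optionally paired with a spoken phrase, to an action on an on-screen object. It assumes that gesture and speech recognition happen upstream and deliver discrete labels; every name in it (`Gesture`, `ScreenObject`, `PerceptualDispatcher`, the bound phrase "put it here") is hypothetical and not taken from the patent, so treat it as an illustration rather than the claimed method.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, Optional, Tuple


class Gesture(Enum):
    """Hypothetical gesture vocabulary; the source does not enumerate gestures."""
    POINT = "point"
    GRAB = "grab"
    RELEASE = "release"
    SWIPE_LEFT = "swipe_left"


@dataclass
class ScreenObject:
    """A minimal on-screen object that can be selected and moved."""
    name: str
    x: int = 0
    y: int = 0
    selected: bool = False


# An action receives the target object and the current hand position (x, y).
Action = Callable[[ScreenObject, Tuple[int, int]], None]


class PerceptualDispatcher:
    """Maps a gesture, optionally combined with a verbal command, to an action."""

    def __init__(self) -> None:
        self._bindings: Dict[Tuple[Gesture, Optional[str]], Action] = {}

    def bind(self, gesture: Gesture, action: Action, verbal: Optional[str] = None) -> None:
        # verbal=None means the gesture alone triggers the action.
        self._bindings[(gesture, verbal)] = action

    def dispatch(self, gesture: Gesture, target: ScreenObject,
                 position: Tuple[int, int], verbal: Optional[str] = None) -> bool:
        # Prefer a gesture+speech binding, then fall back to the gesture alone.
        action = self._bindings.get((gesture, verbal))
        if action is None:
            action = self._bindings.get((gesture, None))
        if action is None:
            return False  # unrecognized combination: ignore it
        action(target, position)
        return True


def grab(obj: ScreenObject, pos: Tuple[int, int]) -> None:
    """Select the object under the hand."""
    obj.selected = True


def release(obj: ScreenObject, pos: Tuple[int, int]) -> None:
    """Move a selected object to the hand position and deselect it."""
    if obj.selected:
        obj.x, obj.y = pos
        obj.selected = False


if __name__ == "__main__":
    dispatcher = PerceptualDispatcher()
    dispatcher.bind(Gesture.GRAB, grab)
    dispatcher.bind(Gesture.RELEASE, release)
    # Pointing only moves an object when accompanied by the spoken phrase.
    dispatcher.bind(Gesture.POINT, release, verbal="put it here")
    window = ScreenObject("window")
    dispatcher.dispatch(Gesture.GRAB, window, (0, 0))
    dispatcher.dispatch(Gesture.POINT, window, (40, 25), verbal="put it here")
    print(window)
```

Keying bindings on the (gesture, phrase) pair lets the same gesture mean different things depending on speech, while a gesture-only binding acts as the default when no phrase is recognized.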
