Picture processing engine and picture processing system including the picture processing engine

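An image processing engine technology, applied in the fields of image data processing and architectures with multiple processing units, that can address problems such as large area cost and high, increasing power consumption.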

Examples

Embodiment 1

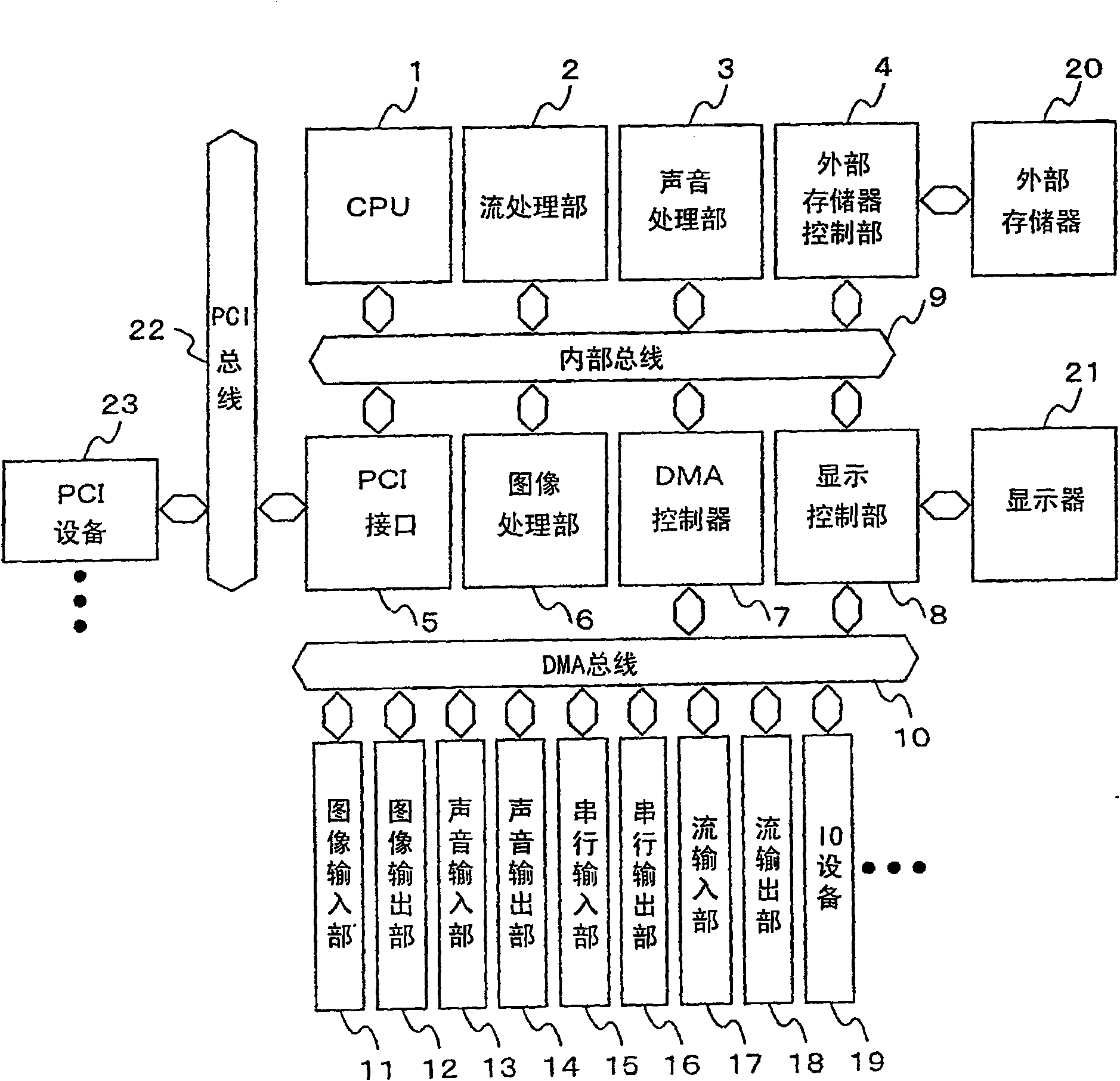

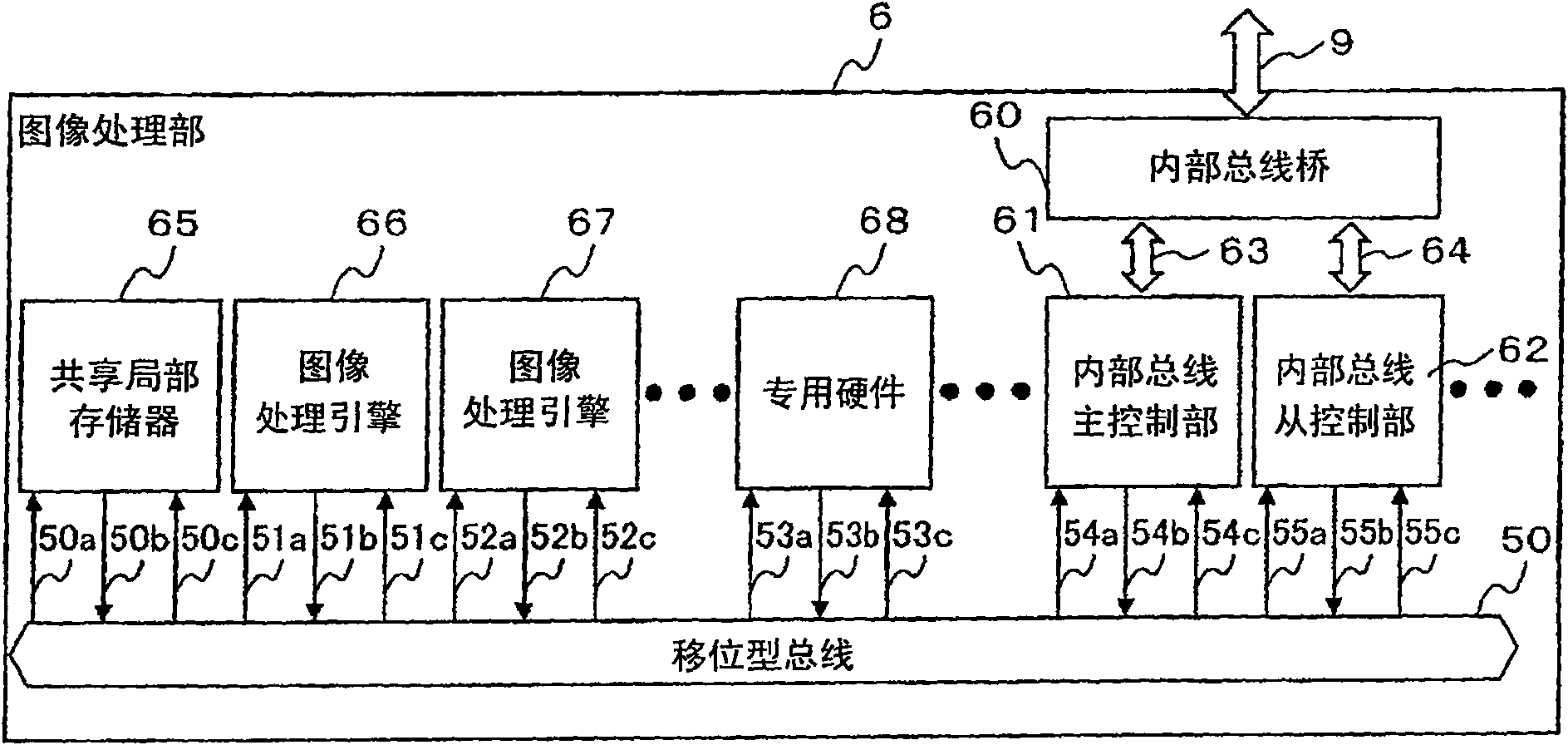

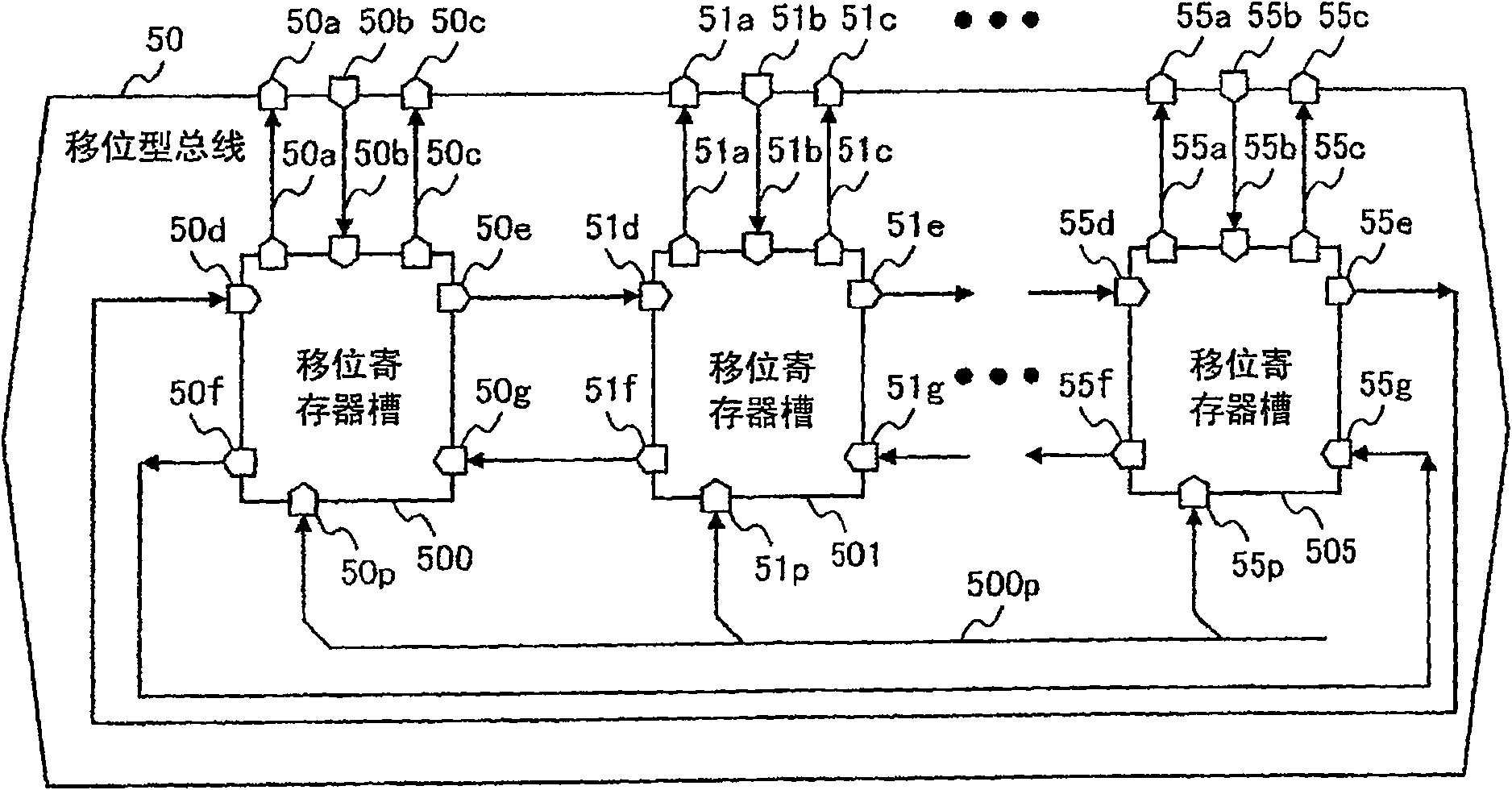

[0051] A first embodiment of the present invention will be described in detail with reference to the drawings. Figure 1 is a block diagram of the embedded system of this embodiment. In this embedded system, the following parts are interconnected on the internal bus 9: the CPU 1, which performs system control and general processing; the stream processing unit 2, which performs part of the processing of image codecs such as MPEG, namely stream processing; the image processing unit 6, which cooperates with the stream processing unit 2 to encode and decode image codecs; the audio processing unit 3, which encodes and decodes audio codecs such as AAC and MP3; a unit that controls access to the external memory 20; the PCI interface 5, which connects to a standard bus, namely the PCI bus 22; the display control unit 8, which controls image display; and the DMA controller 7, which performs direct memory access for various I/O devices.

[0052] Various I/O devices are connected to the DMA controller 7 via the DMA bus 1...

Embodiment 2

[0151] A second embodiment of the present invention will be described with reference to Figure 14. Figure 14 is a block diagram of the image processing engine 66 of this embodiment. Compared with the image processing engine 66 of the first embodiment shown in Figure 6, it differs in three respects. First, the input data 30i and the calculation data 30wb of the CPU unit 30 are connected to the vector calculation unit 46. The input data 30i is the data input to the register file 304 in the CPU unit 30 and can update the contents of the register file 304. The calculation data 30wb is the calculation result of the CPU unit 30 and is input to the vector calculation unit 46. Second, the instruction memory control unit 32 of Figure 6 is replaced with an instruction memory control unit 47, which has a plurality of program counters and controls the instruction memory 31. Third, the vector calculation u...
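The two mechanisms this embodiment adds, a shared instruction memory fetched through several program counters and a direct result path (30wb) from the CPU unit 30 to the vector calculation unit 46, can be illustrated with a toy cycle-level model. This is a minimal sketch under stated assumptions, not the patent's design: the instruction set (`load`, `fwd`, `recv`, `vmul`, `halt`), the FIFO standing in for the 30wb path, the stall-on-empty rule, and the names `InstructionMemoryControl`, `PROGRAM`, and `run` are all hypothetical. The 30i path into the register file 304 is not modeled.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical toy ISA (not from the patent):
#   ("load", k)  CPU unit: acc <- k
#   ("fwd",)     CPU unit: send acc to the vector unit over the 30wb path
#   ("recv",)    vector unit: take a value from the 30wb path (stall if empty)
#   ("vmul",)    vector unit: multiply every lane by the received scalar
#   ("halt",)    stop this unit
PROGRAM = [
    ("load", 3),  # 0: CPU unit 30 entry point
    ("fwd",),     # 1
    ("halt",),    # 2
    ("recv",),    # 3: vector calculation unit 46 entry point
    ("vmul",),    # 4
    ("halt",),    # 5
]


@dataclass
class InstructionMemoryControl:
    """One shared instruction memory, one program counter per unit."""
    imem: list
    pcs: list

    def peek(self, unit: int) -> tuple:
        return self.imem[self.pcs[unit]]

    def advance(self, unit: int) -> None:
        self.pcs[unit] += 1


def run(imem, vector, entries=(0, 3), max_cycles=100):
    ctrl = InstructionMemoryControl(imem, list(entries))
    acc = 0            # scalar register of the CPU unit
    link = deque()     # 30wb: CPU unit result -> vector unit input
    scalar = 0         # vector unit's copy of the forwarded value
    halted = [False, False]
    for _ in range(max_cycles):
        if all(halted):
            return vector
        # Unit 0: CPU unit 30
        if not halted[0]:
            op = ctrl.peek(0)
            if op[0] == "load":
                acc = op[1]
            elif op[0] == "fwd":
                link.append(acc)
            elif op[0] == "halt":
                halted[0] = True
            if not halted[0]:
                ctrl.advance(0)
        # Unit 1: vector calculation unit 46
        if not halted[1]:
            op = ctrl.peek(1)
            stalled = False
            if op[0] == "recv":
                if link:
                    scalar = link.popleft()
                else:
                    stalled = True  # wait for the CPU unit's result
            elif op[0] == "vmul":
                vector = [x * scalar for x in vector]
            elif op[0] == "halt":
                halted[1] = True
            if not (stalled or halted[1]):
                ctrl.advance(1)
    raise RuntimeError("programs did not halt within max_cycles")
```

Both programs live in one instruction memory; only the entry addresses held in the two program counters differ, which is the role the sketch assigns to the multiple program counters of unit 47. For example, `run(PROGRAM, [1, 2, 4])` returns `[3, 6, 12]`: the vector unit stalls on `recv` for one cycle until the CPU unit forwards its result.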

Embodiment 3

[0171] The third embodiment will be described with reference to Figure 20. Figure 20 is a configuration diagram of the CPU section arranged in the image processing engine 66 of this embodiment. In the first embodiment, this section consists of a single CPU unit 30; in the second embodiment, it consists of two CPUs, namely the CPU unit 30 and the vector operation unit 46. In the third embodiment, two or more CPUs are connected in series or in a ring. In this configuration, the CPU unit 30, which can access the data memory 35, is placed first; a plurality of vector calculation units 46 through 46n are connected in series after it; and a CPU unit 30s, which can also access the data memory 35, is connected at the end. The calculation data 30i of the CPU unit 30s is connected back to the input data port of the CPU unit 30. Each CPU here has its own program counter, so the section as a whole has a plurality of program counters, with the structure of Figure 16 in the in...
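The series/ring arrangement can be sketched as a cycle-level pipeline: the head CPU loads words from data memory, each vector calculation unit transforms a value and latches it for the next stage, and the tail CPU either writes the value back or returns it to the head along the ring. This is a hypothetical model, not the patent's implementation. It assumes one-cycle stages, a one-cycle return path from 30s to 30, priority for fed-back values over new loads, and a fixed number of laps per word; the function `run_ring` and its parameters are illustrative. The head and tail CPUs only move data here; their own computation is omitted.

```python
from collections import deque
from typing import Callable, List, Optional, Sequence, Tuple

Token = Tuple[int, int, int]  # (address in data memory, value, laps completed)


def run_ring(memory: List[int], stages: Sequence[Callable[[int], int]],
             laps: int) -> Tuple[List[int], int]:
    """Hypothetical cycle-level model of CPU 30 -> 46 ... 46n -> CPU 30s -> CPU 30.

    memory: stands in for data memory 35; results are written back in place.
    stages: one function per vector calculation unit, in series order.
    laps:   how many times each word travels around the ring.
    Returns the updated memory and the number of cycles taken.
    """
    if not stages or laps < 1:
        raise ValueError("need at least one stage and one lap")
    latches: List[Optional[Token]] = [None] * len(stages)  # stage output latches
    feedback: Optional[Token] = None     # return path from CPU 30s to CPU 30
    pending = deque(range(len(memory)))  # addresses CPU 30 has not loaded yet
    done = cycles = 0
    while done < len(memory):
        cycles += 1
        # Tail CPU 30s: retire the last stage's output or send it round again.
        new_feedback: Optional[Token] = None
        tail = latches[-1]
        if tail is not None:
            addr, value, lap = tail
            if lap + 1 == laps:
                memory[addr] = value  # write back to data memory
                done += 1
            else:
                new_feedback = (addr, value, lap + 1)
        # Vector stages shift forward by one, back to front.
        for i in range(len(stages) - 1, 0, -1):
            prev = latches[i - 1]
            latches[i] = None if prev is None else (prev[0], stages[i](prev[1]), prev[2])
        # Head CPU 30: fed-back values take priority over new loads.
        head: Optional[Token]
        if feedback is not None:
            head = feedback
        elif pending:
            addr = pending.popleft()
            head = (addr, memory[addr], 0)
        else:
            head = None
        latches[0] = None if head is None else (head[0], stages[0](head[1]), head[2])
        feedback = new_feedback
    return memory, cycles
```

For example, `run_ring([1, 2, 3], [lambda x: x + 1, lambda x: x * 2], laps=2)` sends each word around the ring twice, computing ((x + 1) * 2 + 1) * 2, and returns `([10, 14, 18], 8)`. Giving fed-back values priority means a word already in flight is never dropped at the head; new loads simply wait for a free slot.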

