System for implementing network search caching and search method

A network query and caching technology, applied in special data processing applications, instruments, and electrical and digital data processing. It addresses the difficulty of keeping caches and materialized views highly timely, achieving high timeliness and improving macro throughput and performance.


Embodiment Construction

[0051] The specific embodiments of the present invention will be described in further detail below with reference to the accompanying drawings.

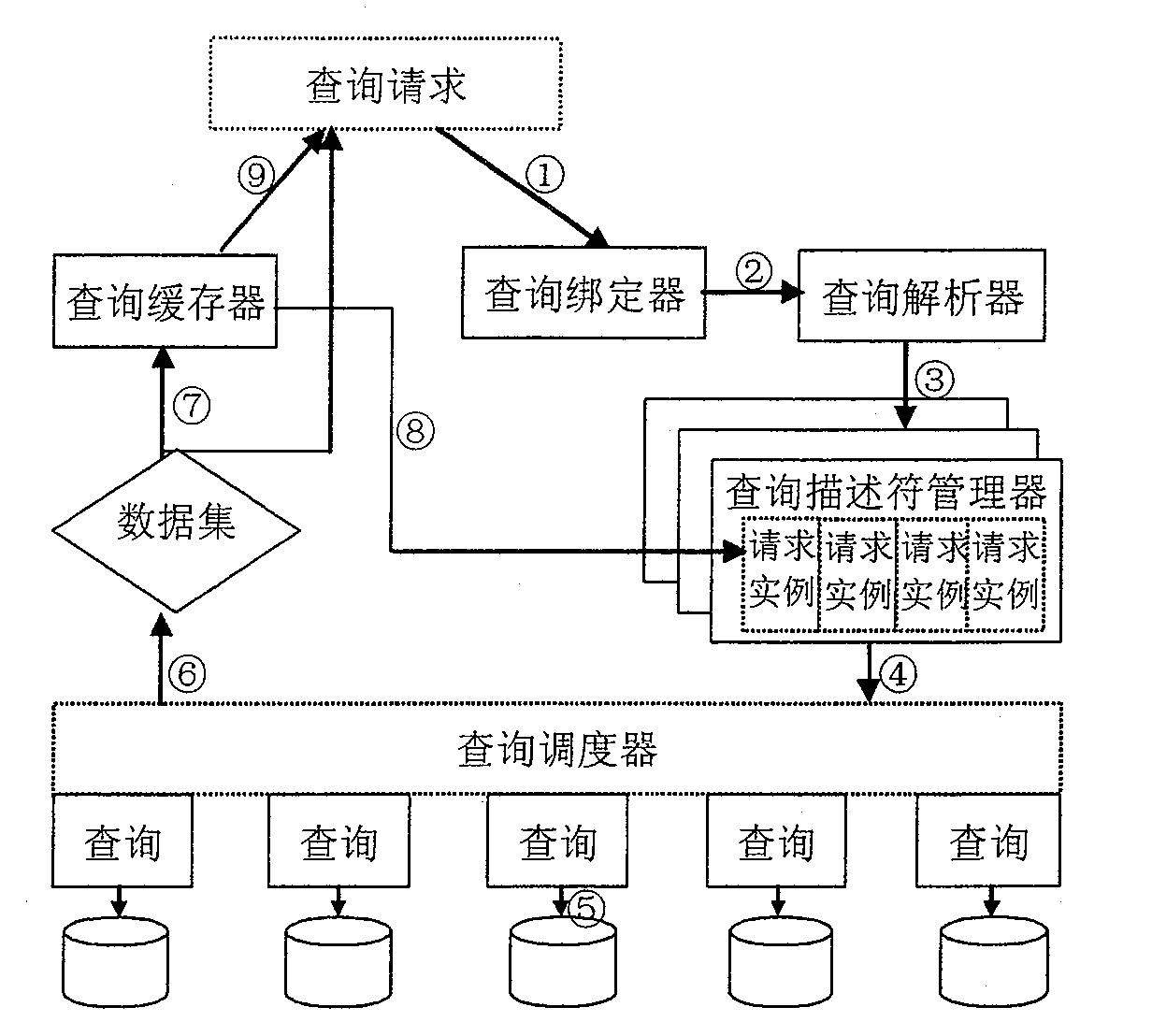

[0052] As shown in Figure 3, an embodiment of the present invention provides a system for implementing incremental caching of distributed queries in a network environment. The system comprises five main functional modules: a query binder, a query parser, a query descriptor manager (query descriptor), a query scheduler (query scheduler), and a query cacher (query cacher). The function and implementation of each component are described below.
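The paragraph names the five modules but does not specify their interfaces or the order in which a request passes through them. The Python sketch below wires them into one plausible pipeline purely for illustration. The class and method names (`bind`, `parse`, `register`, `execute`, `QuerySystem`), the descriptor format (view name plus sorted equality filters), and the check-cache-then-evaluate policy are all hypothetical assumptions. Incremental cache maintenance is not modeled.

```python
# Hypothetical sketch: module interfaces and data flow are assumptions;
# the source only names the five modules.
from typing import Callable, Dict, List, Optional, Tuple

Row = Dict[str, object]
# Assumed descriptor format: (view name, sorted equality filters).
Descriptor = Tuple[str, Tuple[Tuple[str, object], ...]]


class QueryBinder:
    """Binds virtual-view names to callables that produce their rows."""

    def __init__(self) -> None:
        self.views: Dict[str, Callable[[], List[Row]]] = {}

    def bind(self, name: str, compute: Callable[[], List[Row]]) -> None:
        self.views[name] = compute


class QueryParser:
    """Turns a request into a canonical descriptor (filter order is irrelevant)."""

    def parse(self, view: str, filters: Dict[str, object]) -> Descriptor:
        return (view, tuple(sorted(filters.items())))


class QueryDescriptorManager:
    """Records every descriptor seen and how often it was requested."""

    def __init__(self) -> None:
        self.counts: Dict[Descriptor, int] = {}

    def register(self, d: Descriptor) -> Descriptor:
        self.counts[d] = self.counts.get(d, 0) + 1
        return d


class QueryCacher:
    """Stores query results keyed by descriptor."""

    def __init__(self) -> None:
        self.store: Dict[Descriptor, List[Row]] = {}

    def get(self, d: Descriptor) -> Optional[List[Row]]:
        return self.store.get(d)

    def put(self, d: Descriptor, rows: List[Row]) -> None:
        self.store[d] = rows


class QueryScheduler:
    """Answers from the cache when possible, otherwise evaluates the bound view."""

    def __init__(self, binder: QueryBinder, cacher: QueryCacher) -> None:
        self.binder = binder
        self.cacher = cacher

    def execute(self, d: Descriptor) -> Tuple[List[Row], bool]:
        cached = self.cacher.get(d)
        if cached is not None:
            return cached, True  # cache hit
        view, predicate = d
        rows = [r for r in self.binder.views[view]()
                if all(r.get(k) == v for k, v in predicate)]
        self.cacher.put(d, rows)
        return rows, False  # cache miss; the result is now cached


class QuerySystem:
    """Wires the five modules together in one assumed order."""

    def __init__(self) -> None:
        self.binder = QueryBinder()
        self.parser = QueryParser()
        self.descriptors = QueryDescriptorManager()
        self.cacher = QueryCacher()
        self.scheduler = QueryScheduler(self.binder, self.cacher)

    def query(self, view: str, filters: Dict[str, object]) -> Tuple[List[Row], bool]:
        d = self.descriptors.register(self.parser.parse(view, filters))
        return self.scheduler.execute(d)
```

With a view bound via `system.binder.bind(...)`, the first call to `system.query("customers", {"city": "Wuhan"})` evaluates the view and reports a miss. An identical second call is served from the cacher, because the parser maps both requests to the same descriptor.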

[0053] Query binder: for distributed query requests over multiple data sources, the data required by the application must first be represented as a virtual view using virtualization methods and view technology. Because virtual views can be combined into new virtual views through relational operations such as join and union, the virtual views required by complex applications can be constructed...
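The composition of virtual views can be illustrated with a minimal Python sketch. It assumes that each data source can be wrapped as a zero-argument callable returning rows as dictionaries. A `VirtualView` stores only how to compute its rows, so `join` and `union` build new views without materializing anything until `rows()` is called. The names `VirtualView`, `source_view`, `join`, and `union` are hypothetical and do not come from the patent.

```python
# Hypothetical sketch of composable virtual views; names and row format are assumptions.
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, object]


@dataclass(frozen=True)
class VirtualView:
    """A view that records how to compute its rows instead of storing them."""

    name: str
    compute: Callable[[], List[Row]]

    def rows(self) -> List[Row]:
        # Evaluation happens only here, on demand.
        return self.compute()


def source_view(name: str, fetch: Callable[[], List[Row]]) -> VirtualView:
    """Wrap one data source (assumed to be a zero-argument fetch callable)."""
    return VirtualView(name, fetch)


def join(left: VirtualView, right: VirtualView, key: str) -> VirtualView:
    """Equi-join two views on a shared column; the result is a new virtual view."""

    def compute() -> List[Row]:
        index: Dict[object, List[Row]] = {}
        for rrow in right.rows():
            index.setdefault(rrow[key], []).append(rrow)
        return [{**lrow, **rrow} for lrow in left.rows() for rrow in index.get(lrow[key], [])]

    return VirtualView(f"({left.name} JOIN {right.name})", compute)


def union(a: VirtualView, b: VirtualView) -> VirtualView:
    """Relational UNION: concatenate both views and drop duplicate rows."""

    def compute() -> List[Row]:
        seen = set()
        out: List[Row] = []
        for r in a.rows() + b.rows():
            fingerprint = tuple(sorted(r.items()))  # assumes hashable column values
            if fingerprint not in seen:
                seen.add(fingerprint)
                out.append(r)
        return out

    return VirtualView(f"({a.name} UNION {b.name})", compute)
```

For example, `join(union(orders_a, orders_b), customers, "cust")` yields a view named `((orders_a UNION orders_b) JOIN customers)`. Its three sources are read only when `rows()` is called on it.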
