53 results about "Uplevel" patented technology

Uplevel is a command in Tcl that allows a command script to be executed in a scope other than the current innermost scope on the stack. Because the command script may itself call procedures that use the uplevel command, this has the net effect of transforming the call stack into a call tree. It was originally implemented to permit Tcl procedures to reimplement built-in commands and still have the ability to manipulate local variables. For example, the built-in for command can be reimplemented as a Tcl procedure that uses uplevel to run its initialization, test, advance, and body scripts in the caller's scope.

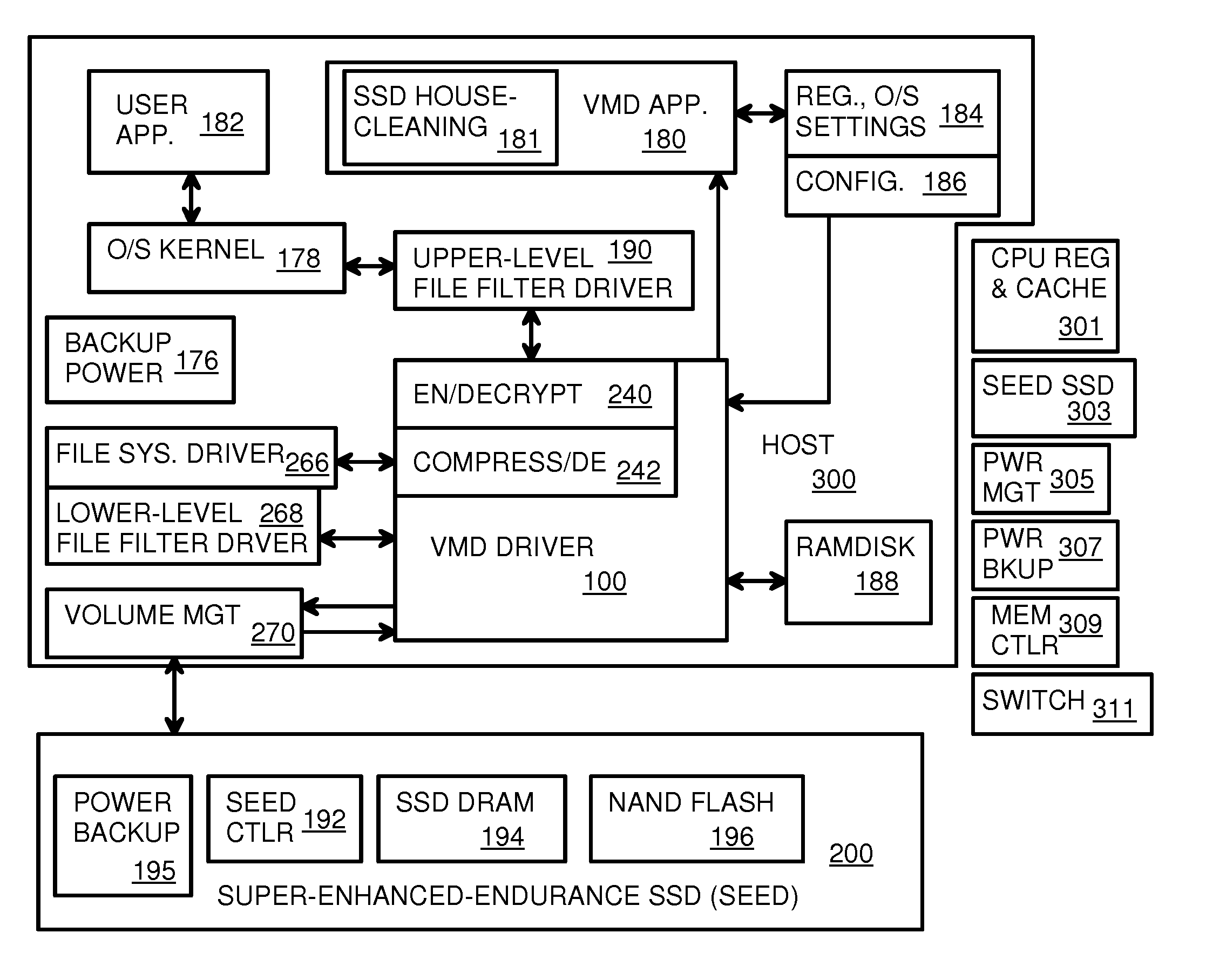

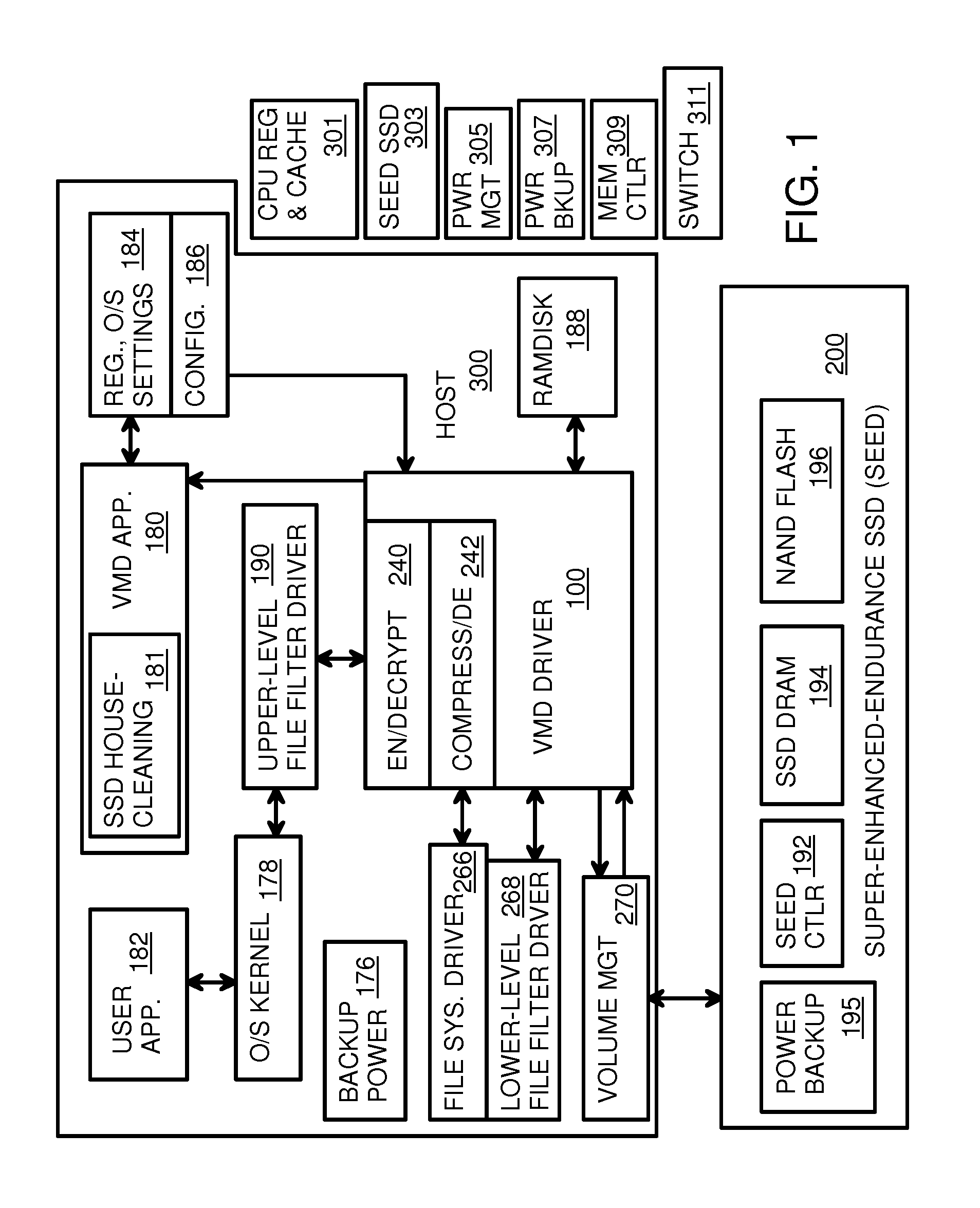

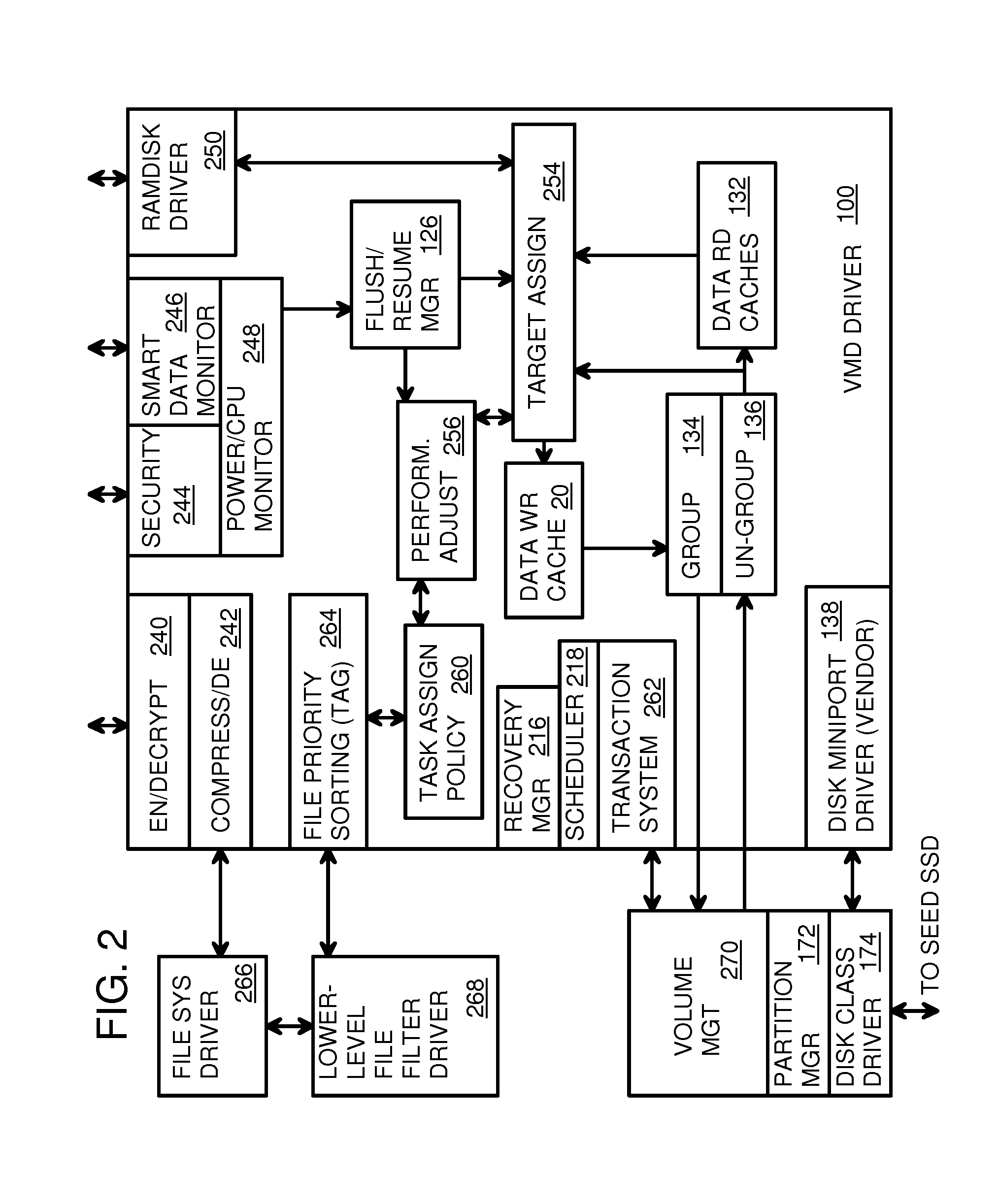

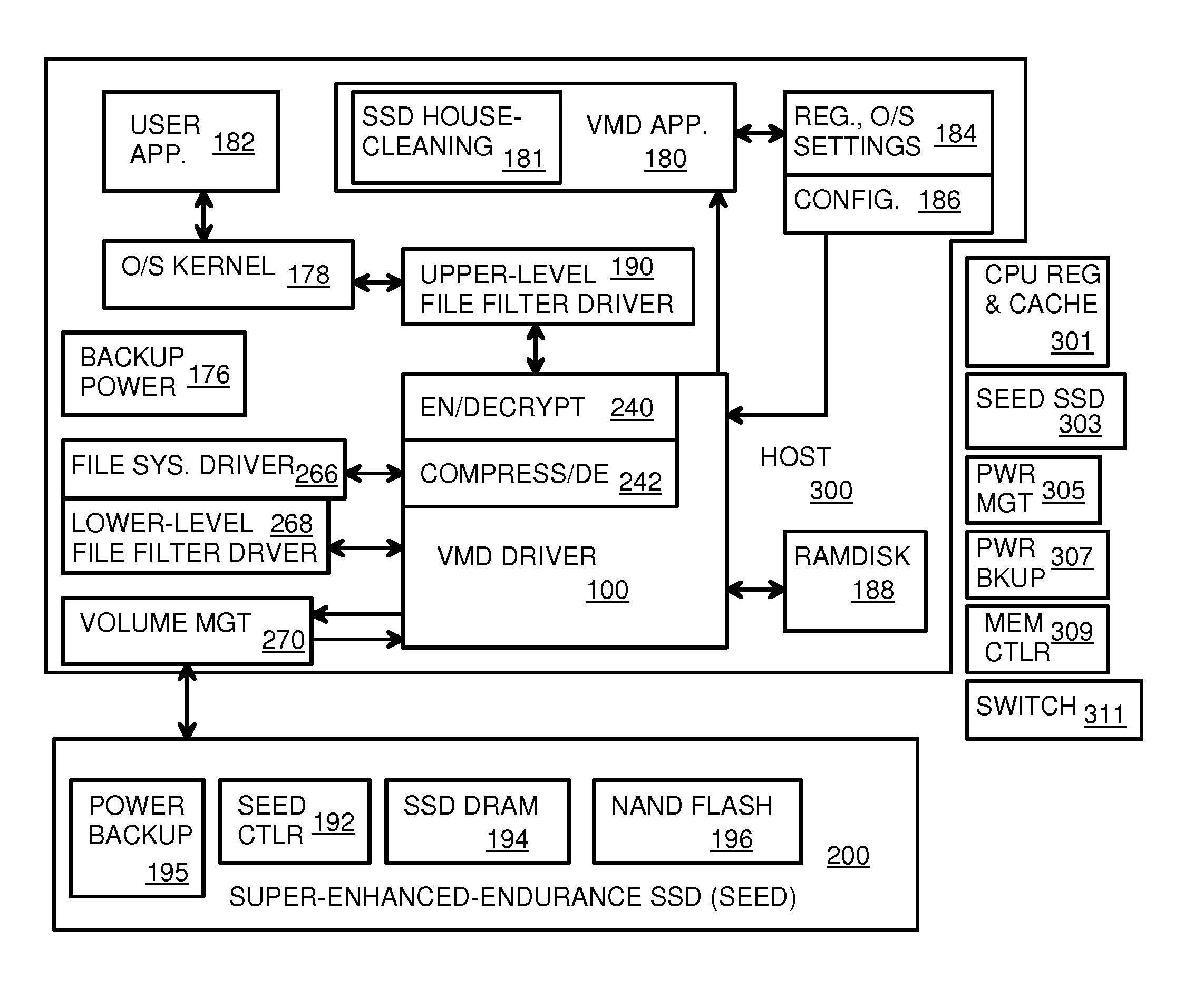

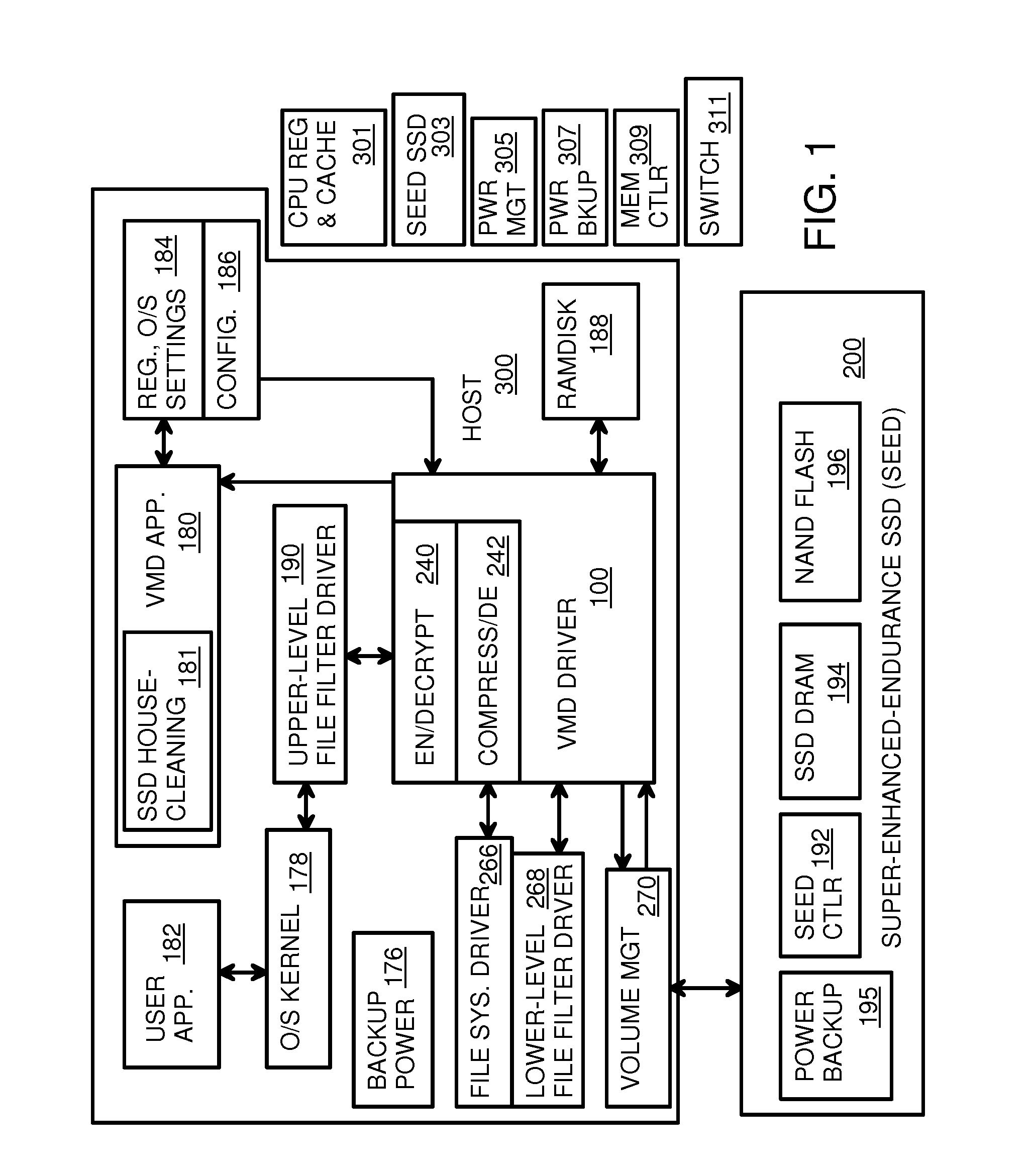

Virtual Memory Device (VMD) Application/Driver with Dual-Level Interception for Data-Type Splitting, Meta-Page Grouping, and Diversion of Temp Files to Ramdisks for Enhanced Flash Endurance

Active | US20130145085A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Filename extension | Data file

A Virtual-Memory Device (VMD) driver and application execute on a host to increase endurance of flash memory attached to a Super Enhanced Endurance Device (SEED) or Solid-State Drive (SSD). Host accesses to flash are intercepted by the VMD driver using upper and lower-level filter drivers and categorized as data types of paging files, temporary files, meta-data, and user data files, using address ranges and file extensions read from meta-data tables. Paging files and temporary files are optionally written to flash. Full-page and partial-page data are grouped into multi-page meta-pages by data type before storage by the SSD. Ramdisks and caches for storing each data type in the host DRAM are managed and flushed to the SSD by the VMD driver. Write dates are stored for pages or blocks for management functions. A spare/swap area in DRAM reduces flash wear. Reference voltages are adjusted when error correction fails.

Owner:SUPER TALENT TECH CORP
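The data-type categorization described in the abstract above might be sketched roughly as follows. This is only an illustration under stated assumptions: the extension set, the paging-file names, the address-range format, and the `classify_access` function are all hypothetical and are not taken from the patent.

```python
import os

# Assumed example tables; a real driver would read these from its meta-data tables.
TEMP_EXTENSIONS = {".tmp", ".temp"}
PAGING_FILE_NAMES = {"pagefile.sys", "swapfile.sys"}


def classify_access(path, lba, metadata_ranges):
    """Return one of 'meta-data', 'paging', 'temp', or 'user' for a host access.

    metadata_ranges is a list of (start, end) logical-address ranges, end exclusive.
    """
    if any(start <= lba < end for start, end in metadata_ranges):
        return "meta-data"
    name = os.path.basename(path).lower()
    if name in PAGING_FILE_NAMES:
        return "paging"
    if os.path.splitext(name)[1] in TEMP_EXTENSIONS:
        return "temp"
    return "user"
```

In this sketch the address-range check runs first, so an access that lands in a meta-data region is classified by address even when a file name is also known.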

Virtual Memory Device (VMD) Application/Driver for Enhanced Flash Endurance

Active | US20150106557A1 | Memory architecture accessing/allocation | Input/output to record carriers | Virtual memory | Filename extension

A Virtual-Memory Device (VMD) driver and application execute on a host to increase endurance of flash memory attached to a Super Enhanced Endurance Device (SEED) or Solid-State Drive (SSD). Host accesses to flash are intercepted by the VMD driver using upper and lower-level filter drivers and categorized as data types of paging files, temporary files, meta-data, and user data files, using address ranges and file extensions read from meta-data tables. Paging files and temporary files are optionally written to flash. Full-page and partial-page data are grouped into multi-page meta-pages by data type before storage by the SSD. Ramdisks and caches for storing each data type in the host DRAM are managed and flushed to the SSD by the VMD driver. Write dates are stored for pages or blocks for management functions. A spare / swap area in DRAM reduces flash wear. Reference voltages are adjusted when error correction fails.

Owner:SUPER TALENT TECH CORP

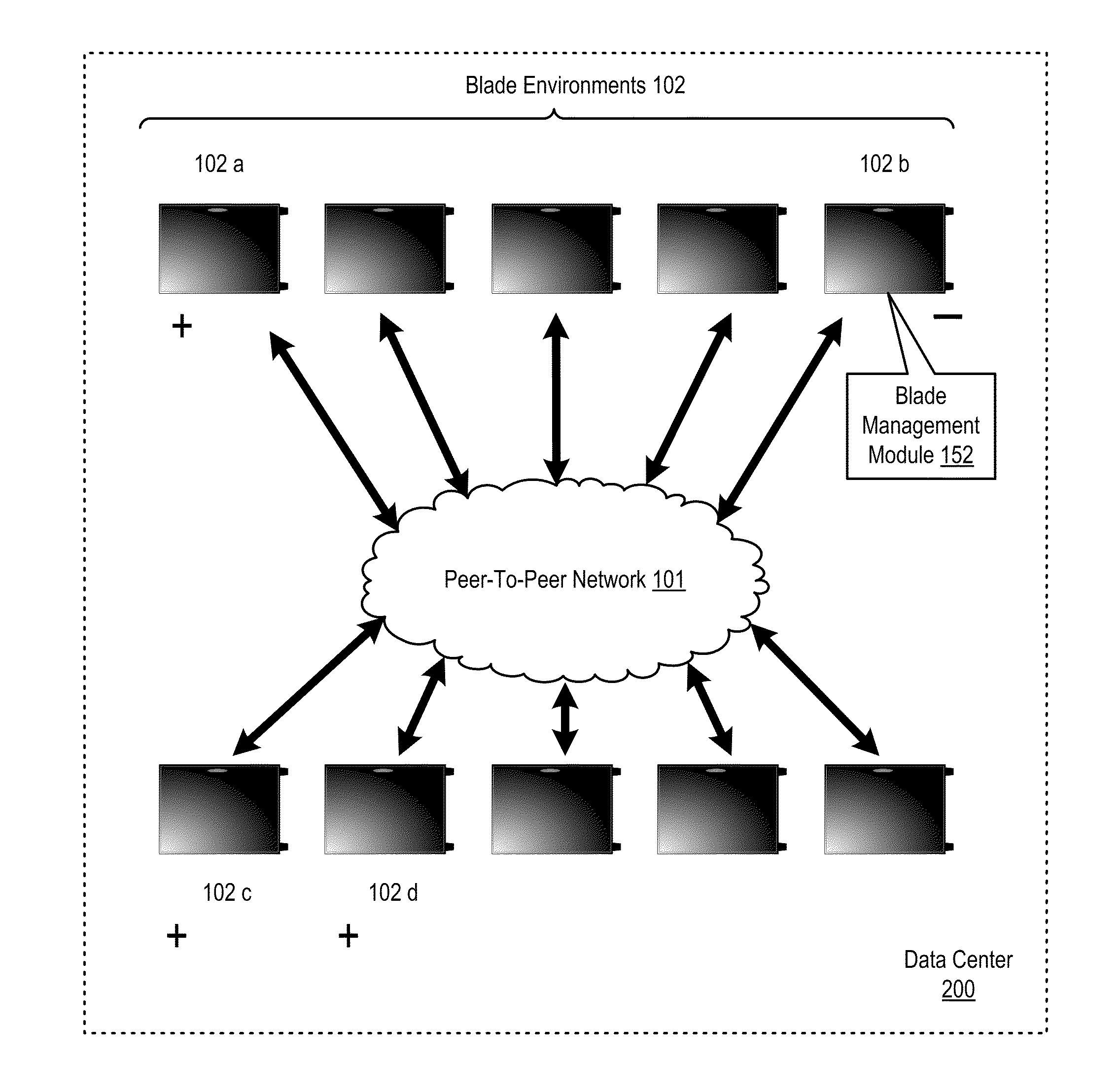

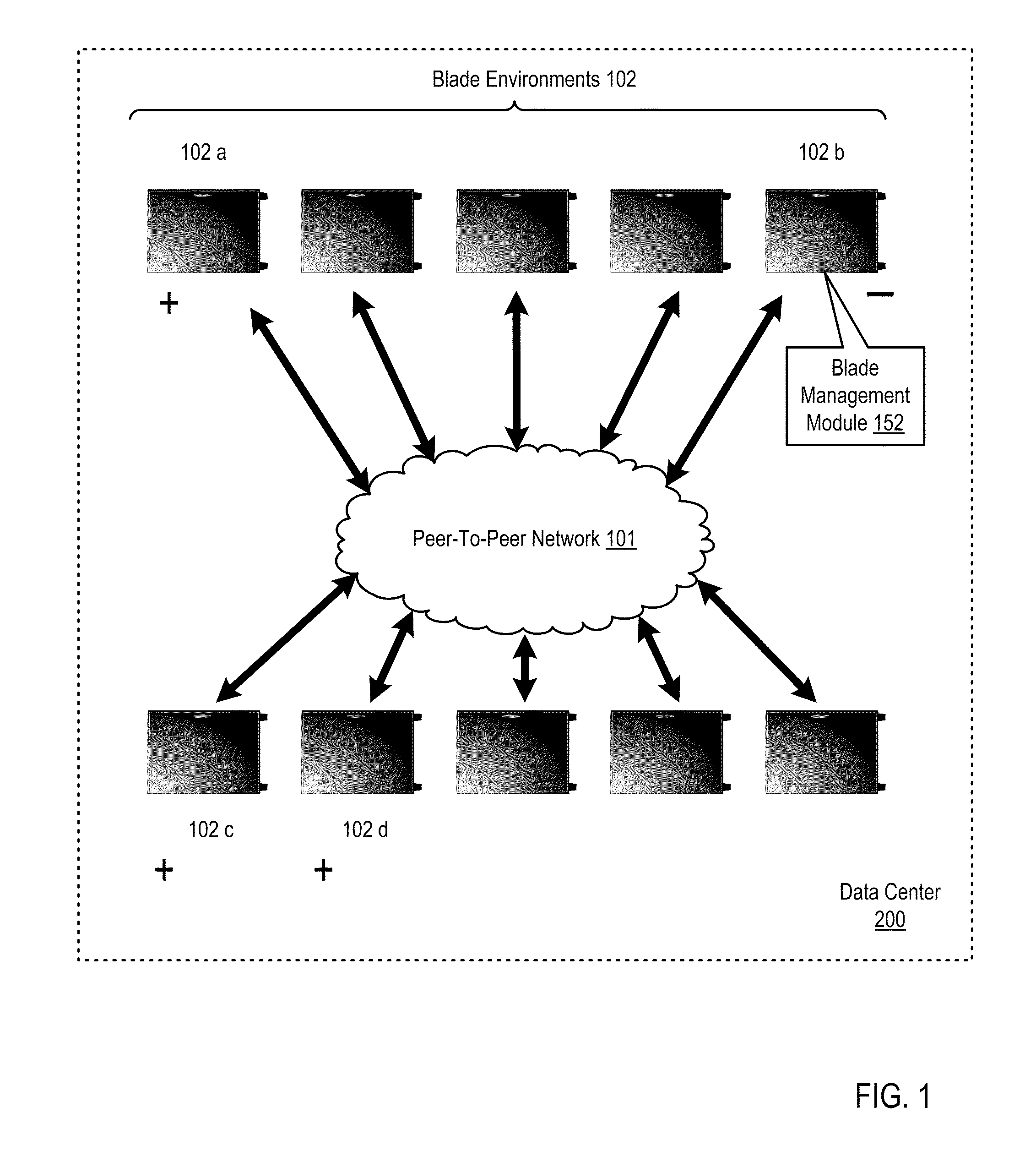

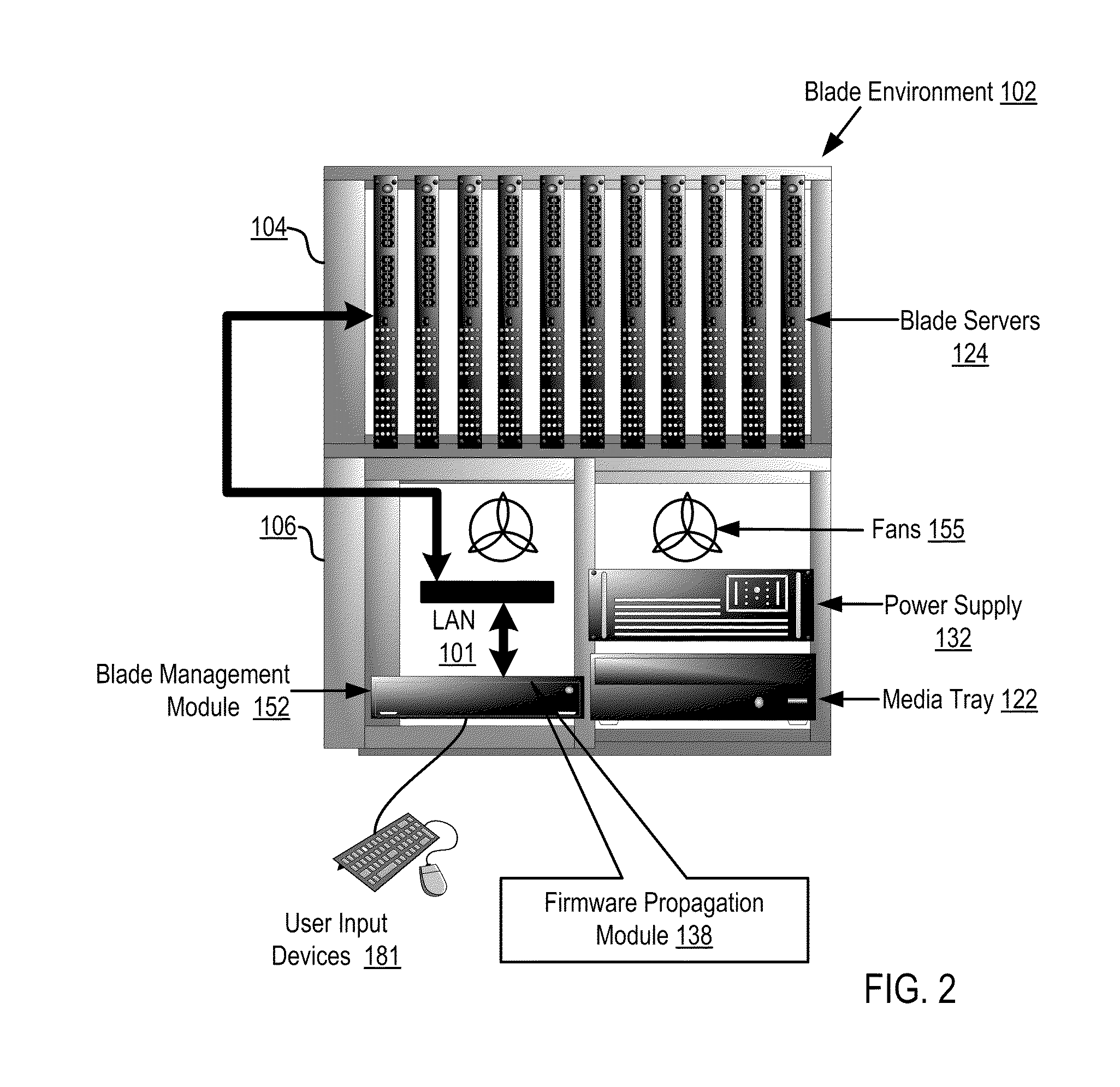

Propagating Firmware Updates In A Peer-To-Peer Network Environment

Active | US20110106886A1 | Multiple digital computer combinations | Specific program execution arrangements | Uplevel | Broadcasting

Propagating firmware updates in a peer-to-peer network including identifying that one or more nodes in the network have firmware that is uplevel with respect to a downlevel node; broadcasting an update request requesting an update to the firmware; receiving, from a plurality of nodes having firmware uplevel with respect to the downlevel node, a plurality of portions of the update, metadata describing each portion of the update received, and metadata describing the firmware installed on each of the plurality of nodes having firmware uplevel with respect to the downlevel node; determining, in dependence upon the metadata describing each portion of the update received and the metadata describing the firmware installed on each of the plurality of nodes having firmware uplevel with respect to the downlevel node, whether the portions of the update received comprise an entire update; and updating the firmware if the portions of the update received comprise the entire update.

Owner:LENOVO GLOBAL TECH INT LTD
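The "entire update" determination in the abstract above could be sketched as a coverage check over the received portions. This is a minimal sketch, assuming each portion's metadata carries a byte offset and that the total update size is known; the `Portion` type and the `is_entire_update` name are hypothetical and not from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Portion:
    offset: int   # assumed metadata: start byte of this portion within the update
    data: bytes   # payload received from an uplevel peer


def is_entire_update(portions, total_size):
    """Return True if the portions cover bytes [0, total_size) without a gap."""
    covered = 0
    for portion in sorted(portions, key=lambda p: p.offset):
        if portion.offset > covered:
            return False  # gap before this portion
        covered = max(covered, portion.offset + len(portion.data))
    return covered >= total_size
```

Overlapping portions from different peers are tolerated here; only a gap, or coverage that stops short of `total_size`, makes the check fail.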

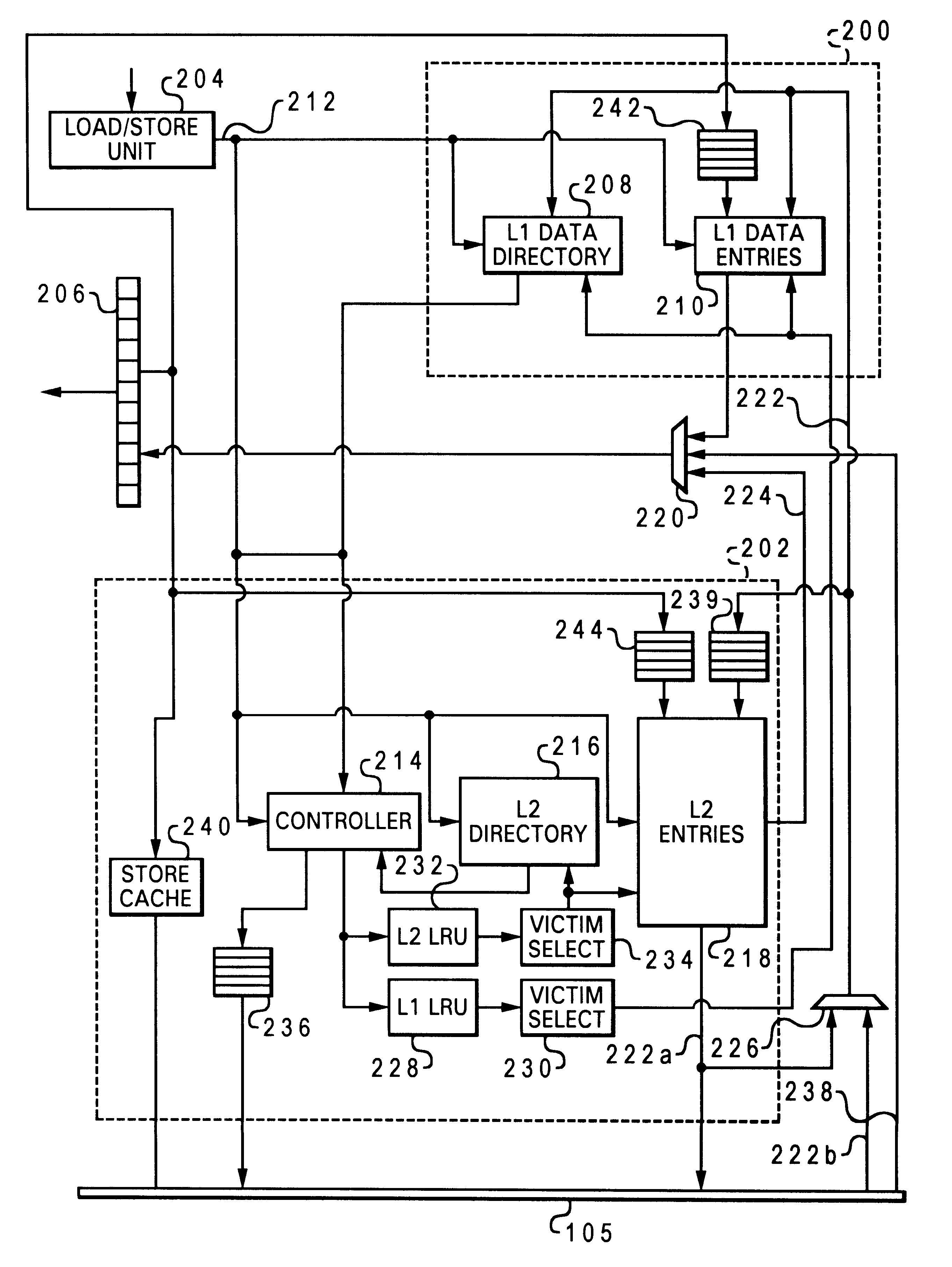

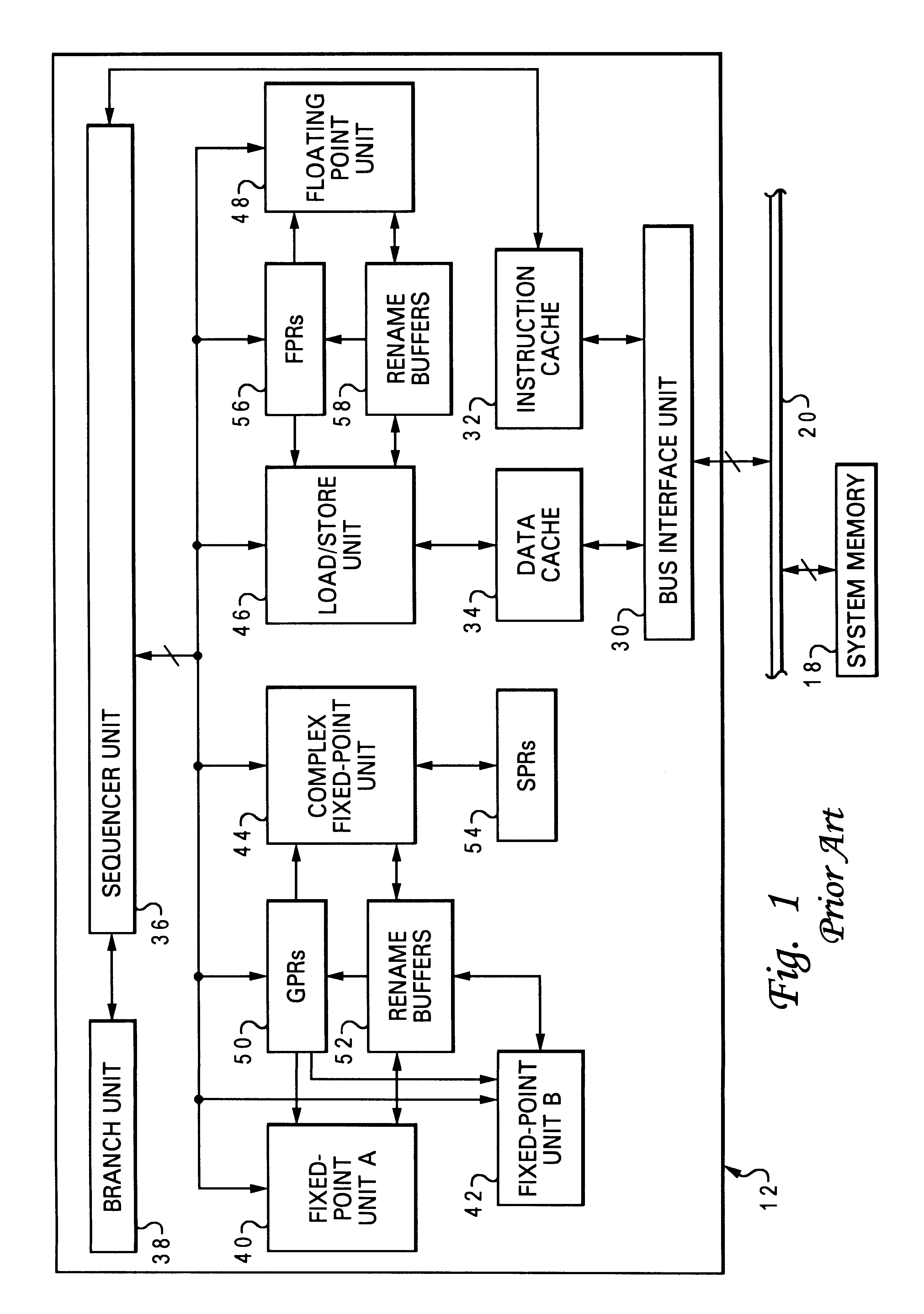

Layered local cache with lower level cache updating upper and lower level cache directories

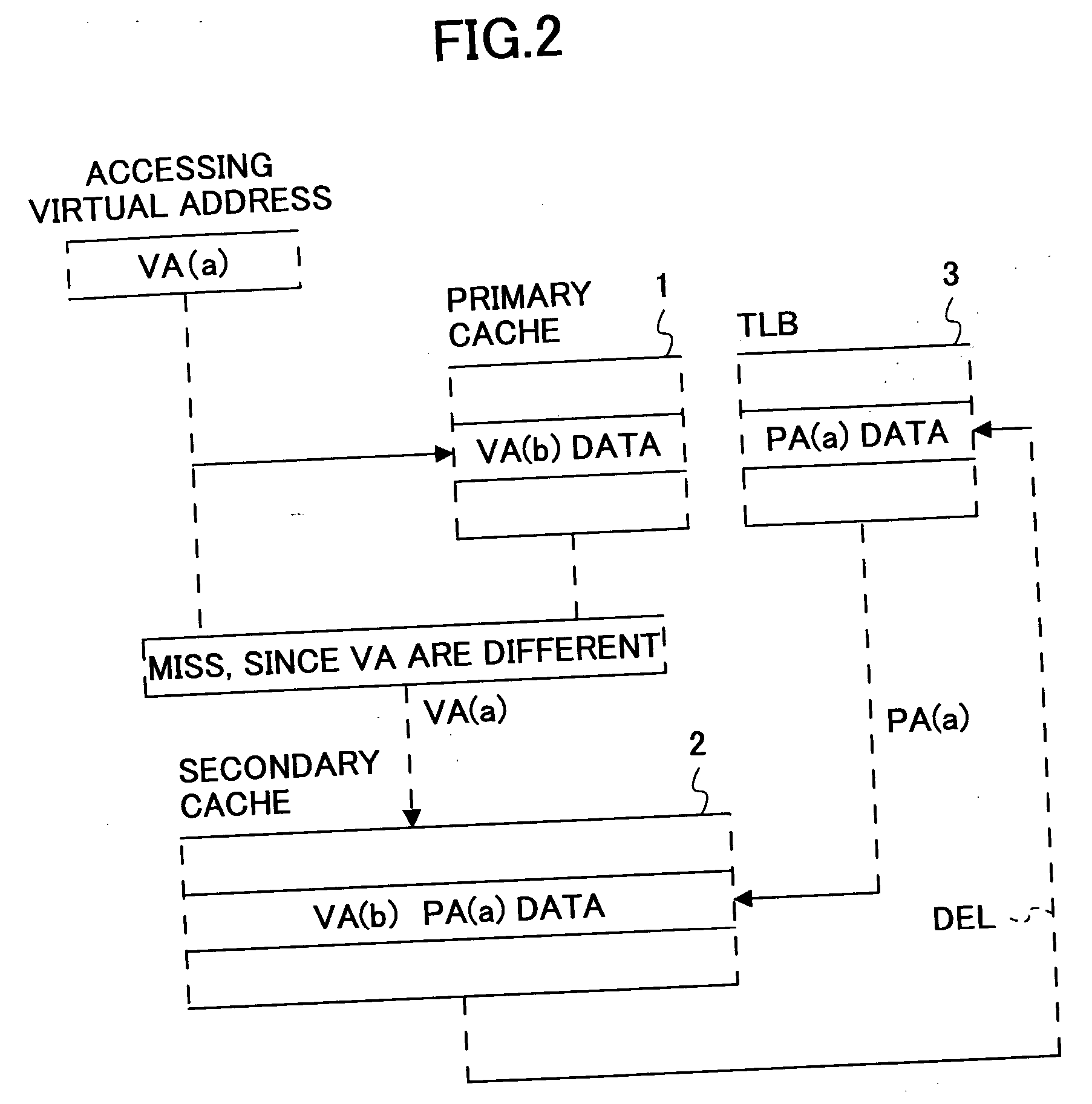

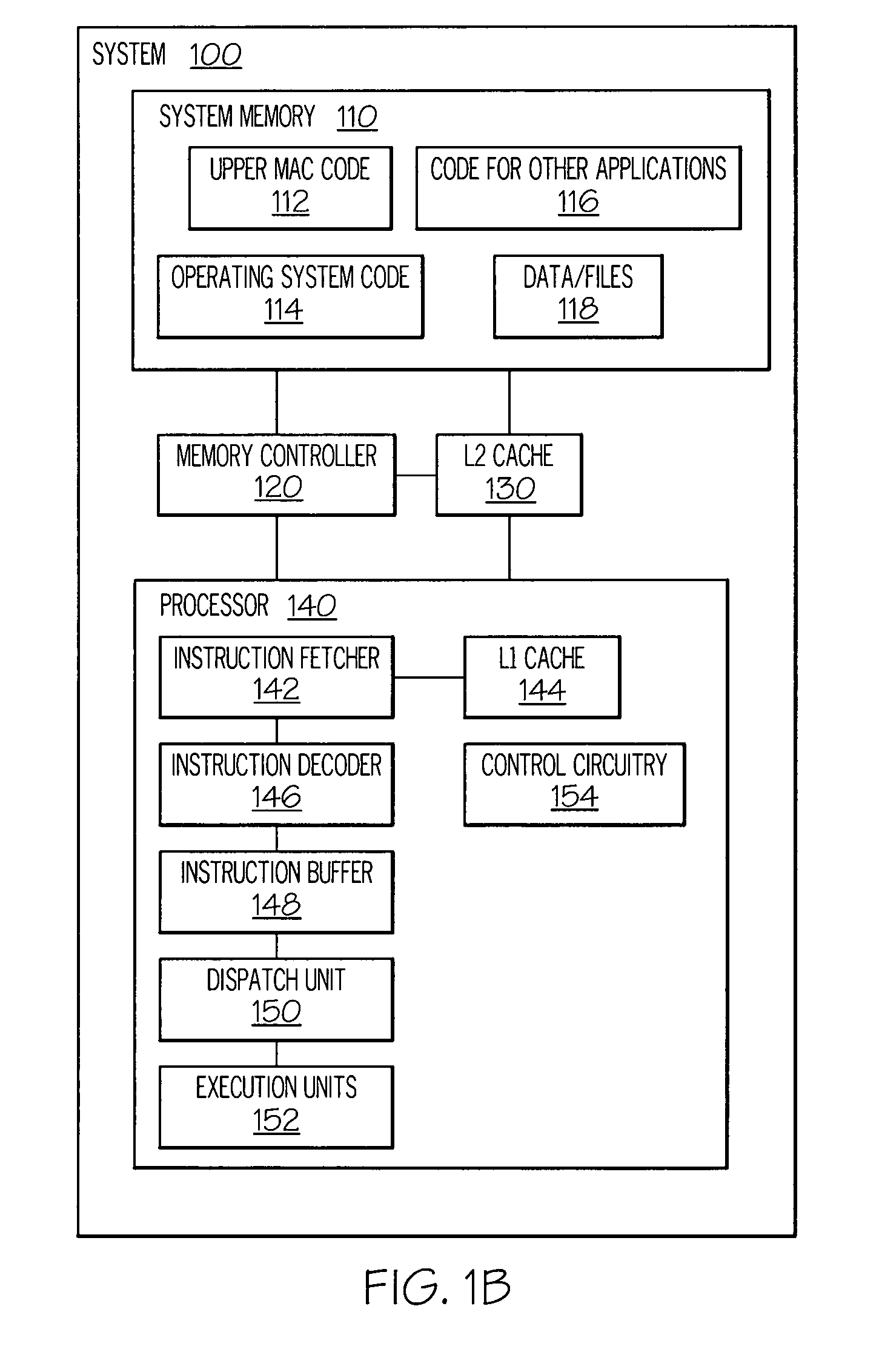

A method of improving memory access for a computer system, by sending load requests to a lower level storage subsystem along with associated information pertaining to intended use of the requested information by the requesting processor, without using a high level load queue. Returning the requested information to the processor along with the associated use information allows the information to be placed immediately without using reload buffers. A register load bus separate from the cache load bus (and having a smaller granularity) is used to return the information. An upper level (L1) cache may then be imprecisely reloaded (the upper level cache can also be imprecisely reloaded with store instructions). The lower level (L2) cache can monitor L1 and L2 cache activity, which can be used to select a victim cache block in the L1 cache (based on the additional L2 information), or to select a victim cache block in the L2 cache (based on the additional L1 information). L2 control of the L1 directory also allows certain snoop requests to be resolved without waiting for L1 acknowledgement. The invention can be applied to, e.g., instruction, operand data and translation caches.

Owner:IBM CORP

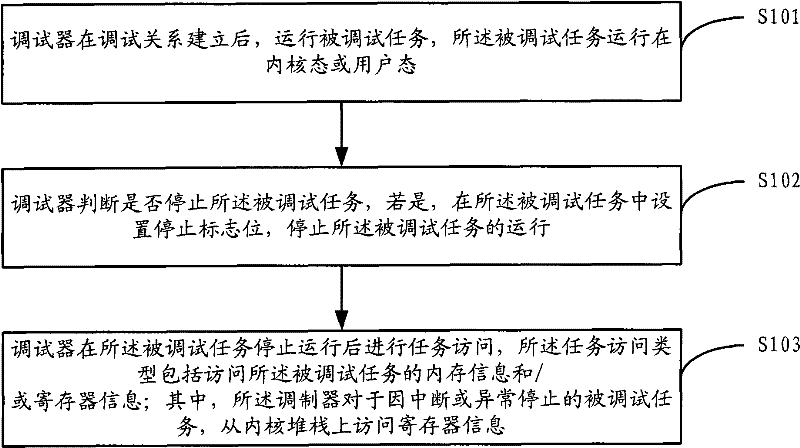

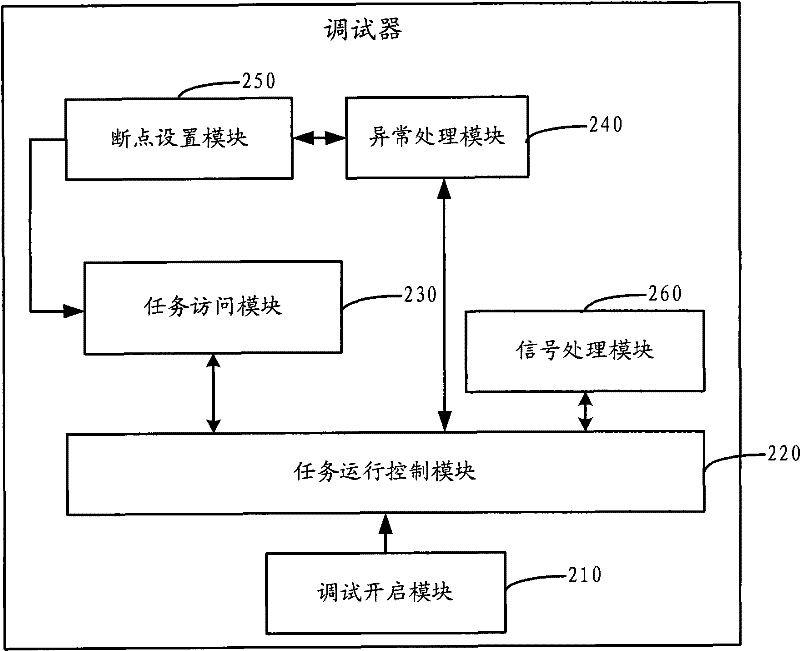

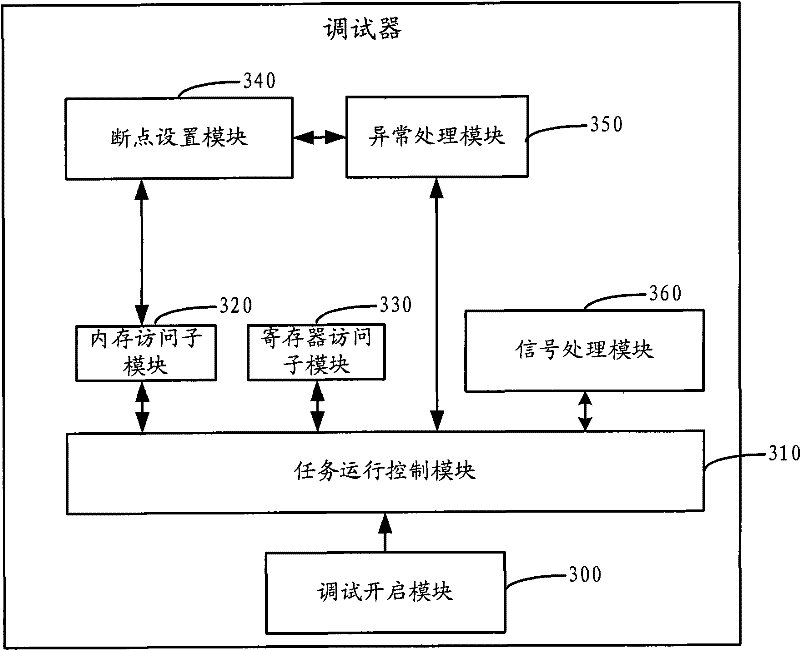

Debugger and debugging method thereof

The invention discloses a debugger and a debugging method thereof. The debugging method comprises the following steps: after the debugging relation is established, a debugged task runs in a kernel mode or a user mode by means of the debugger; when the debugger receives a stop instruction sent by a top level or runs into an abnormal event during the debugging process of the debugged task or captures a concerned signal message during the debugging process of the debugged task in the user mode, the debugged task stops running, and a stop mark bit is set in the debugged task; and after the debugged task stops running, the debugger accesses the memory information and / or register information of the debugged task. By adopting the debugging method, the running status of the debugged task in user address space and kernel address space can be tracked and debugged.

Owner:ZTE CORP

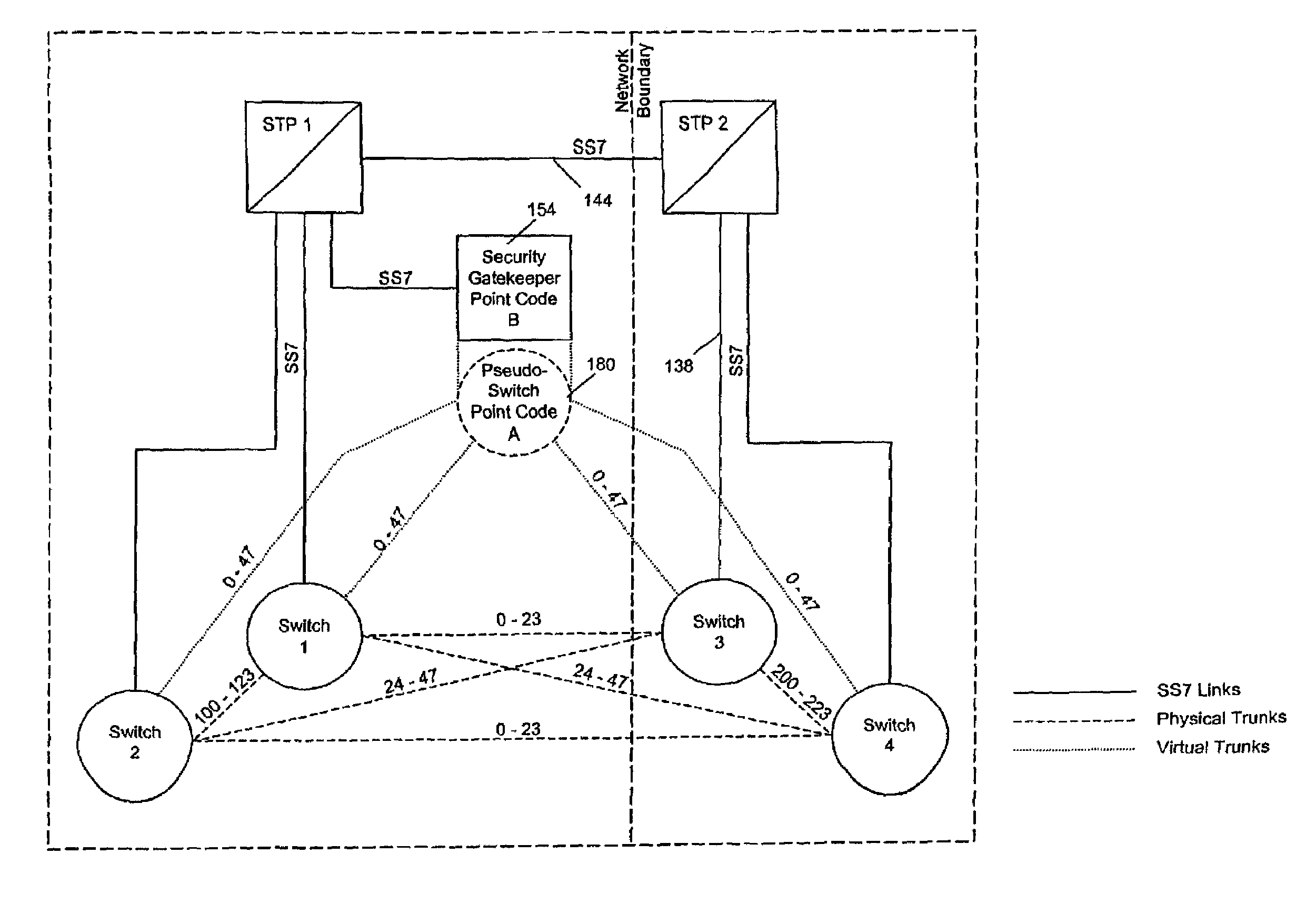

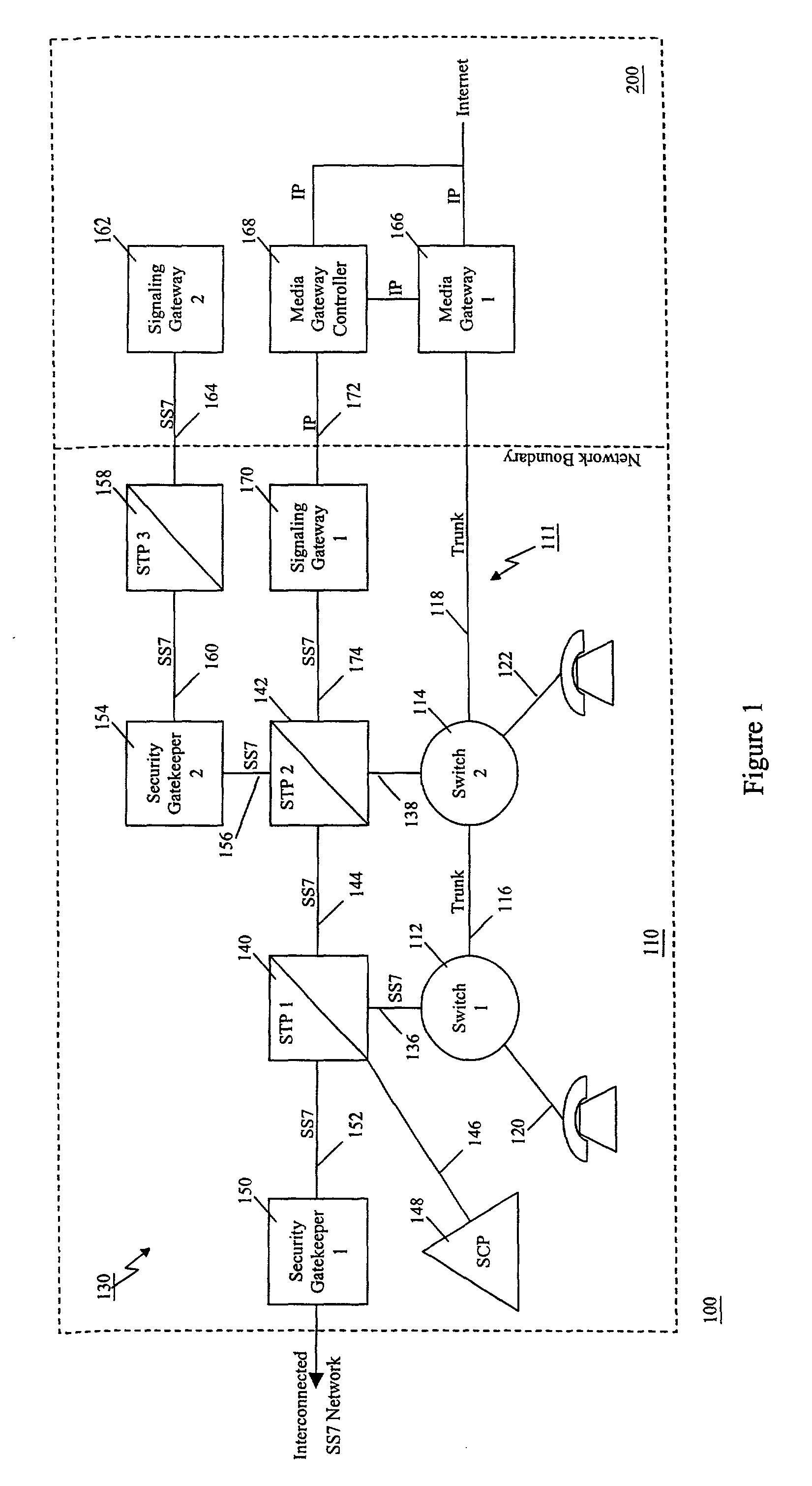

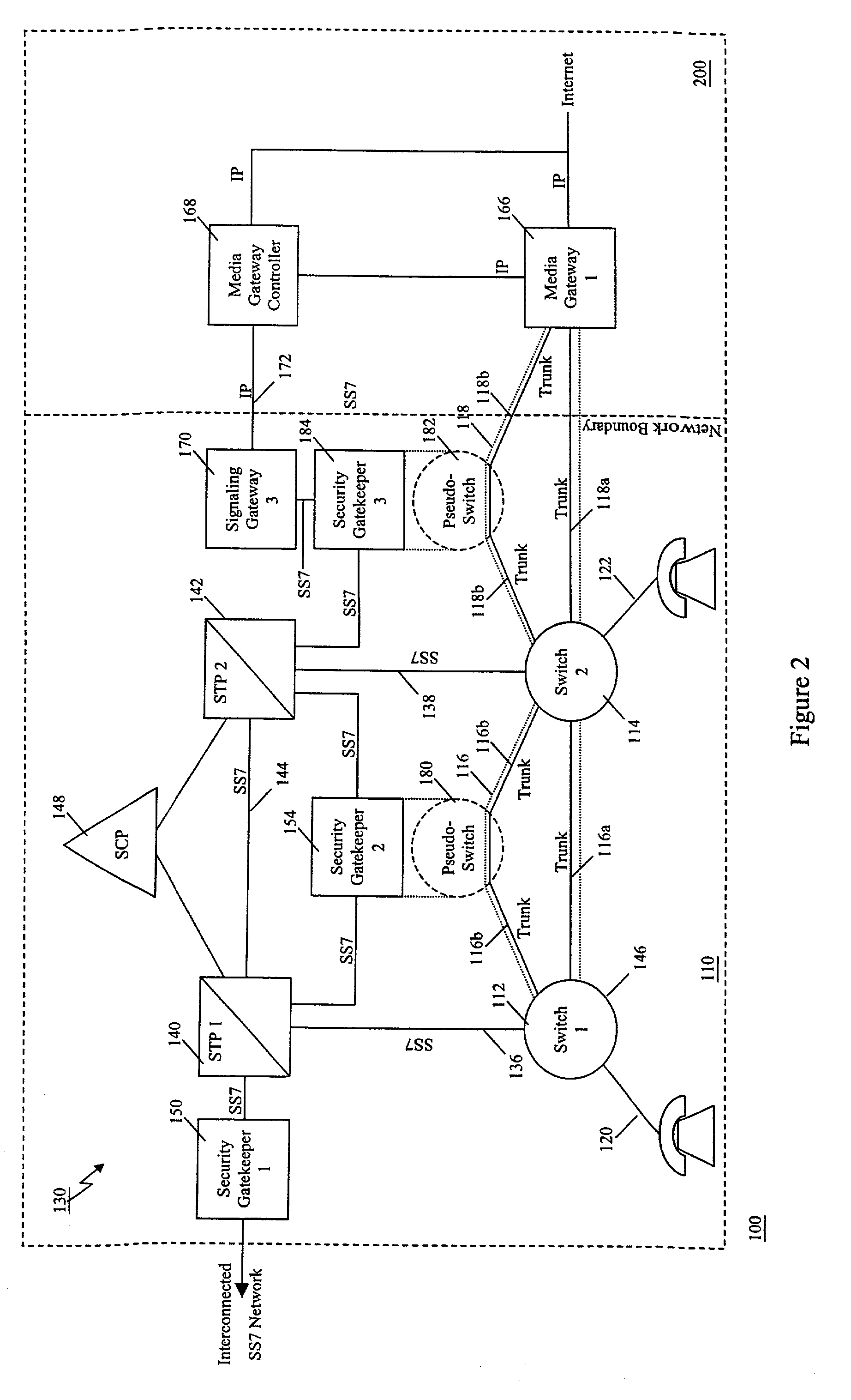

Method of and apparatus for mediating common channel signaling messages between networks using a pseudo-switch

Inactive | US7224686B1 | Fast communication | Meet growth needs | Interconnection arrangements | Time-division multiplex | Traffic capacity | Timestamp

A communication network includes an SS7 Security Gatekeeper that authenticates and validates network control messages within, transiting, entering and leaving an overlying control fabric such as an SS7 network. The SS7 Security Gatekeeper incorporates several levels of checks to ensure that messages are properly authenticated, valid, and consistent with call progress and system status. In addition to message format, message content is checked to ensure that the originating node has the proper authority to send the message and to invoke the related functions. Predefined sets of templates may be used to check the messages, each set of templates being associated with respective originating point codes and/or calling party addresses. The templates may also be associated with various system states such that messages corresponding to a particular template cause a state transition along a particular edge to a next state node at which another set of templates is defined. Thus, system and call state is maintained. The monitor also includes signaling point authentication using digital signatures and timestamps. Timestamps are also used to initiate appropriate timeouts and so that old or improperly sequenced messages may be ignored, corrected or otherwise processed appropriately. The SS7 Security Gatekeeper may be located at the edge of a network to be protected so that all messaging to and from the protected network must pass by way of the Gatekeeper. Alternatively, the SS7 Security Gatekeeper may be internal to the protected network and configured as a “pseudo switch” so that ISUP messaging is routed through the Gatekeeper while actual traffic is trunked directly between the associated SSPs, bypassing the Gatekeeper.

Owner:VERIZON PATENT & LICENSING INC

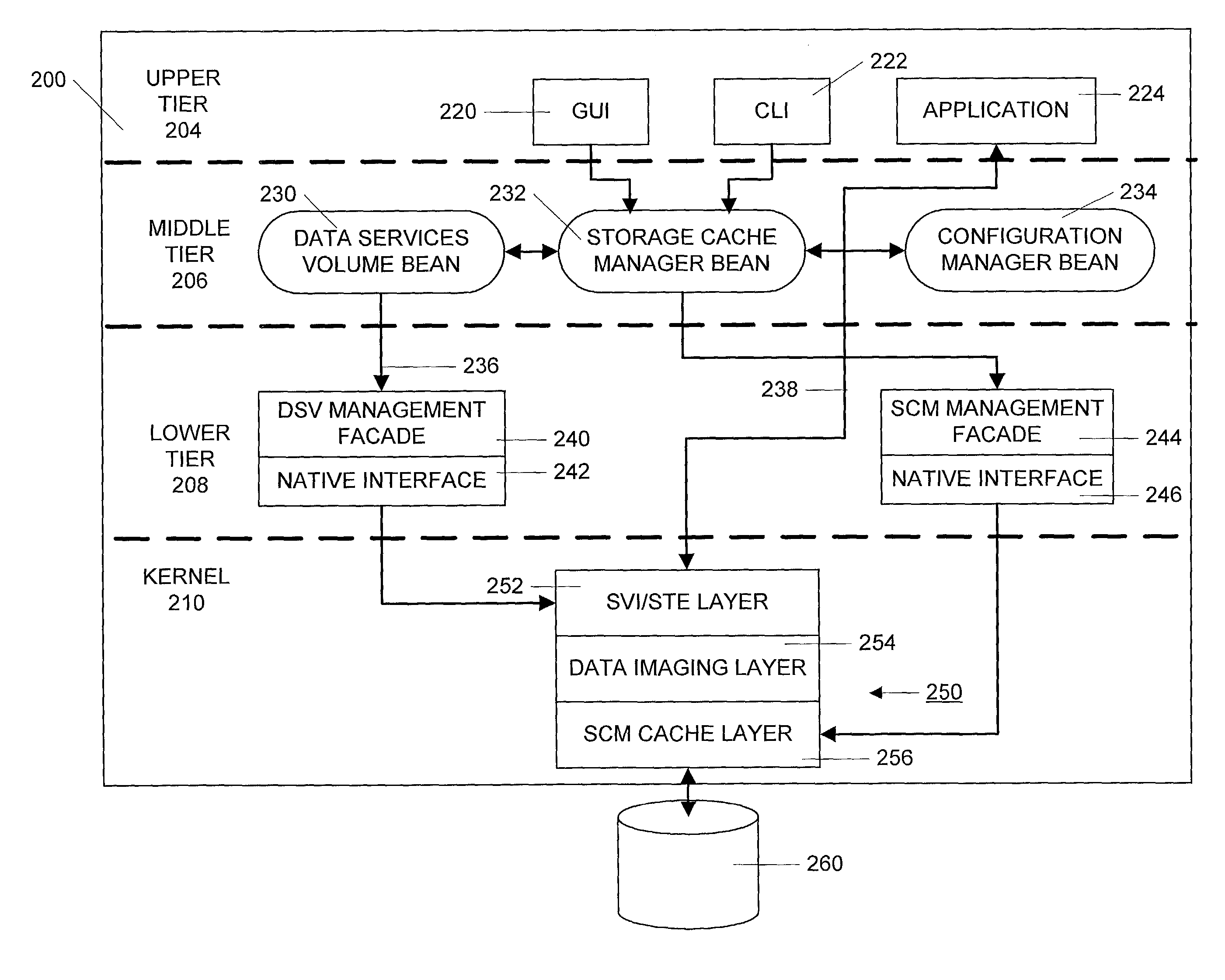

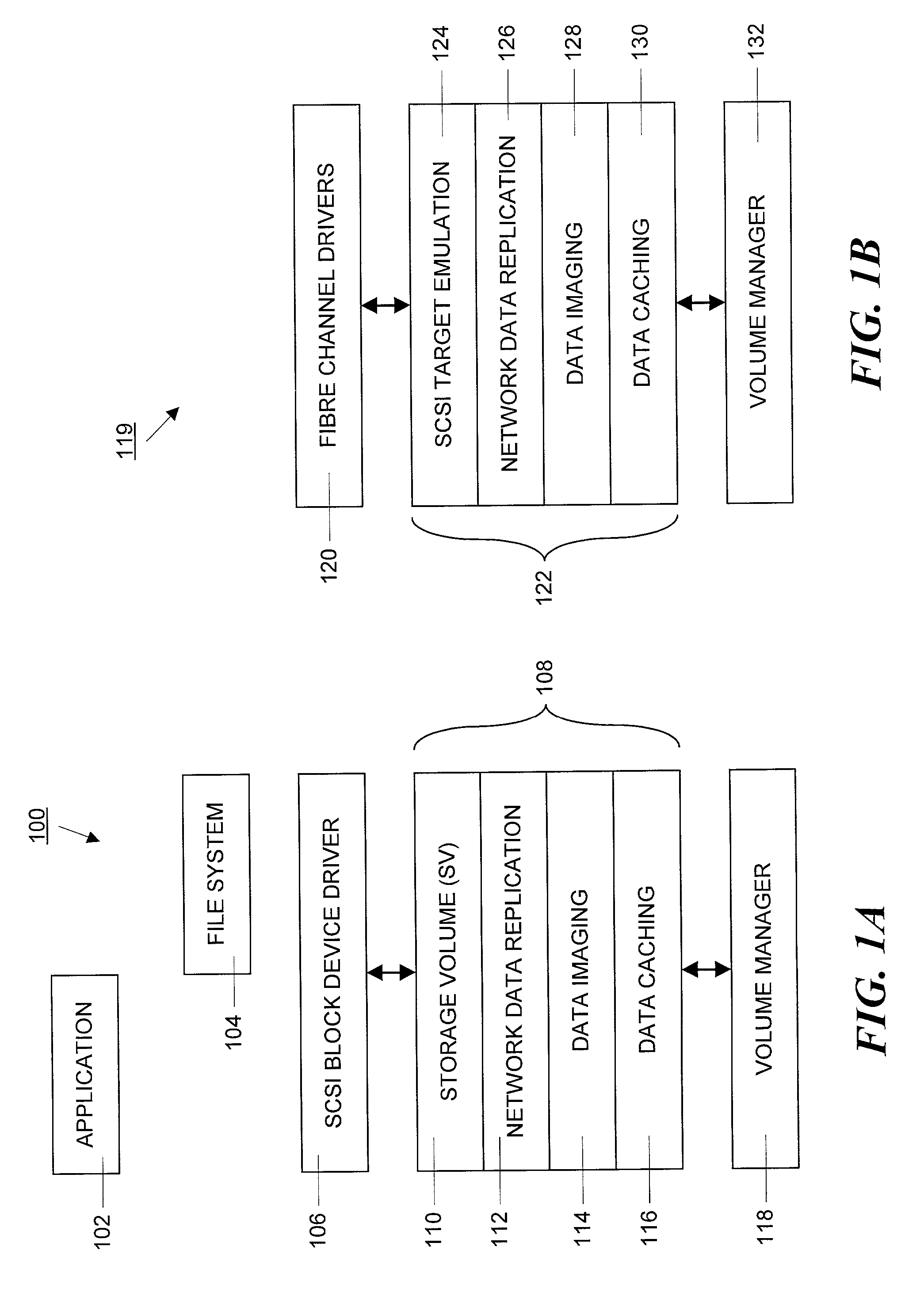

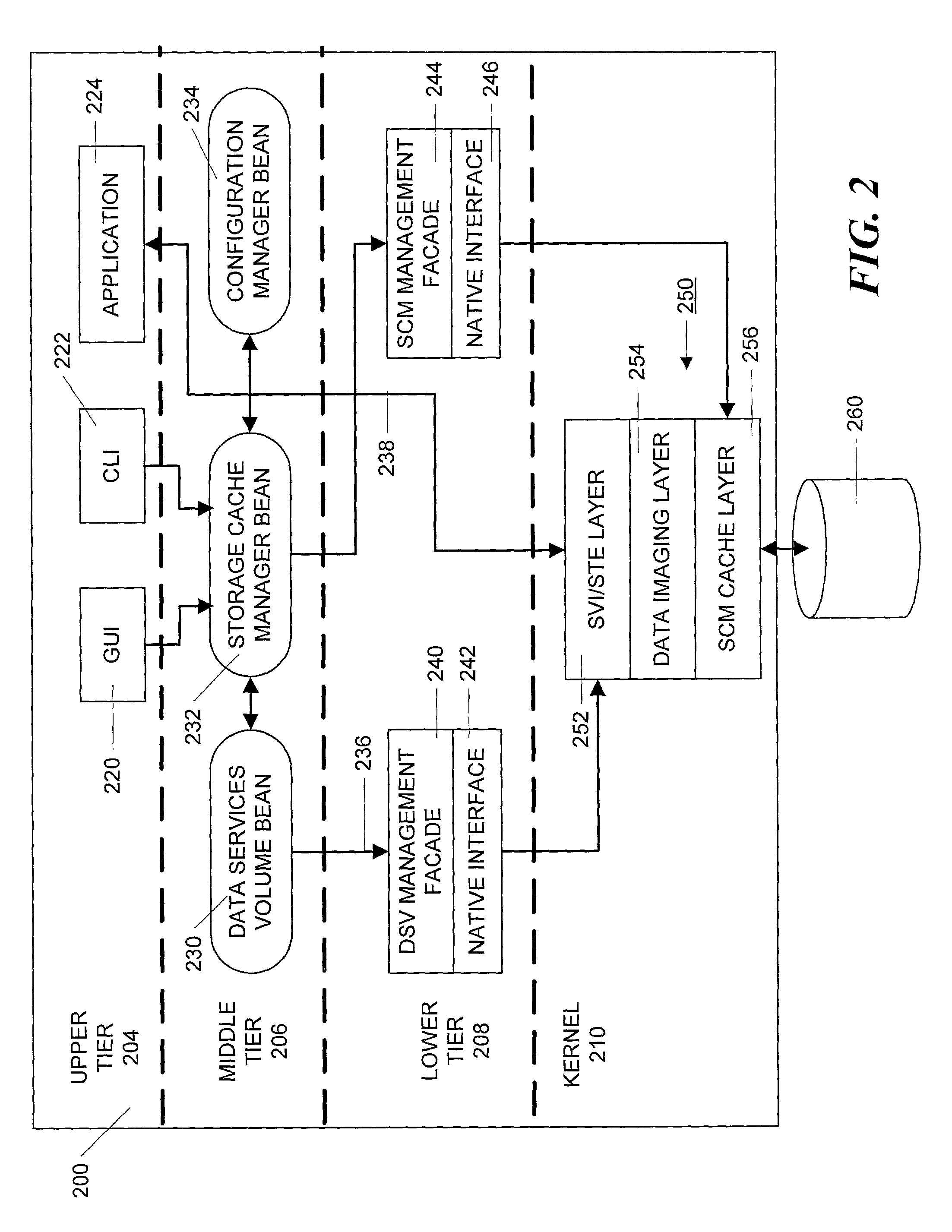

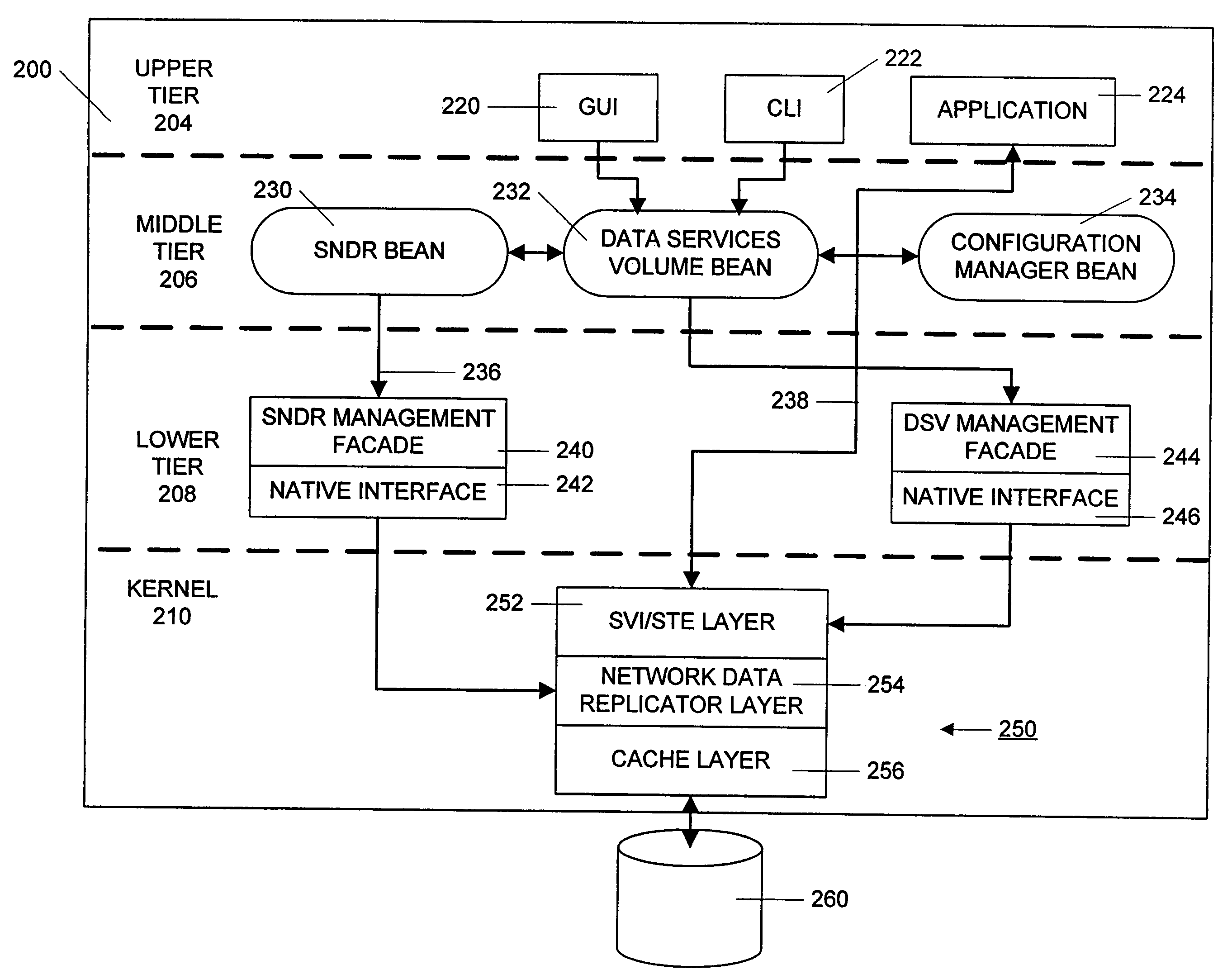

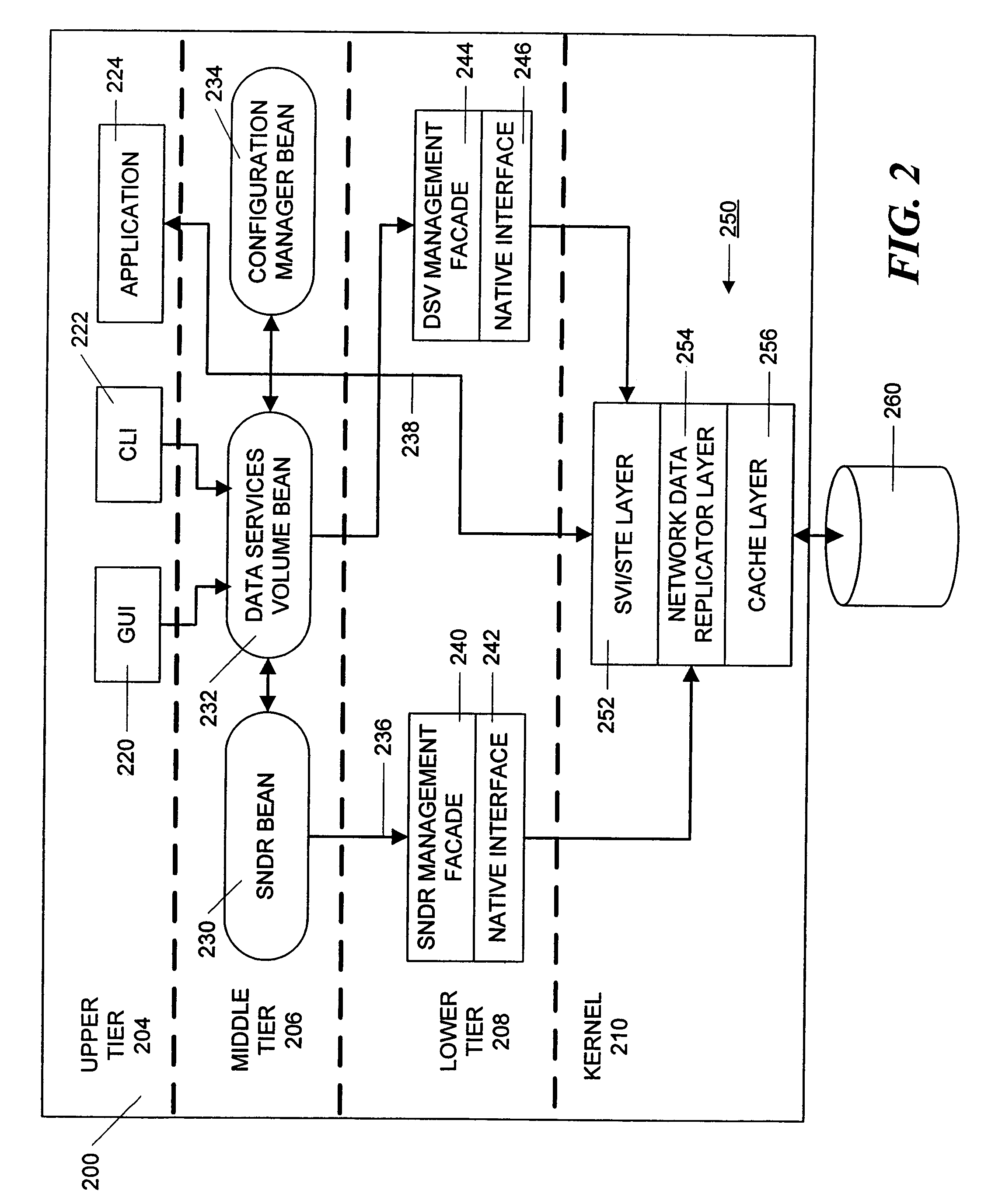

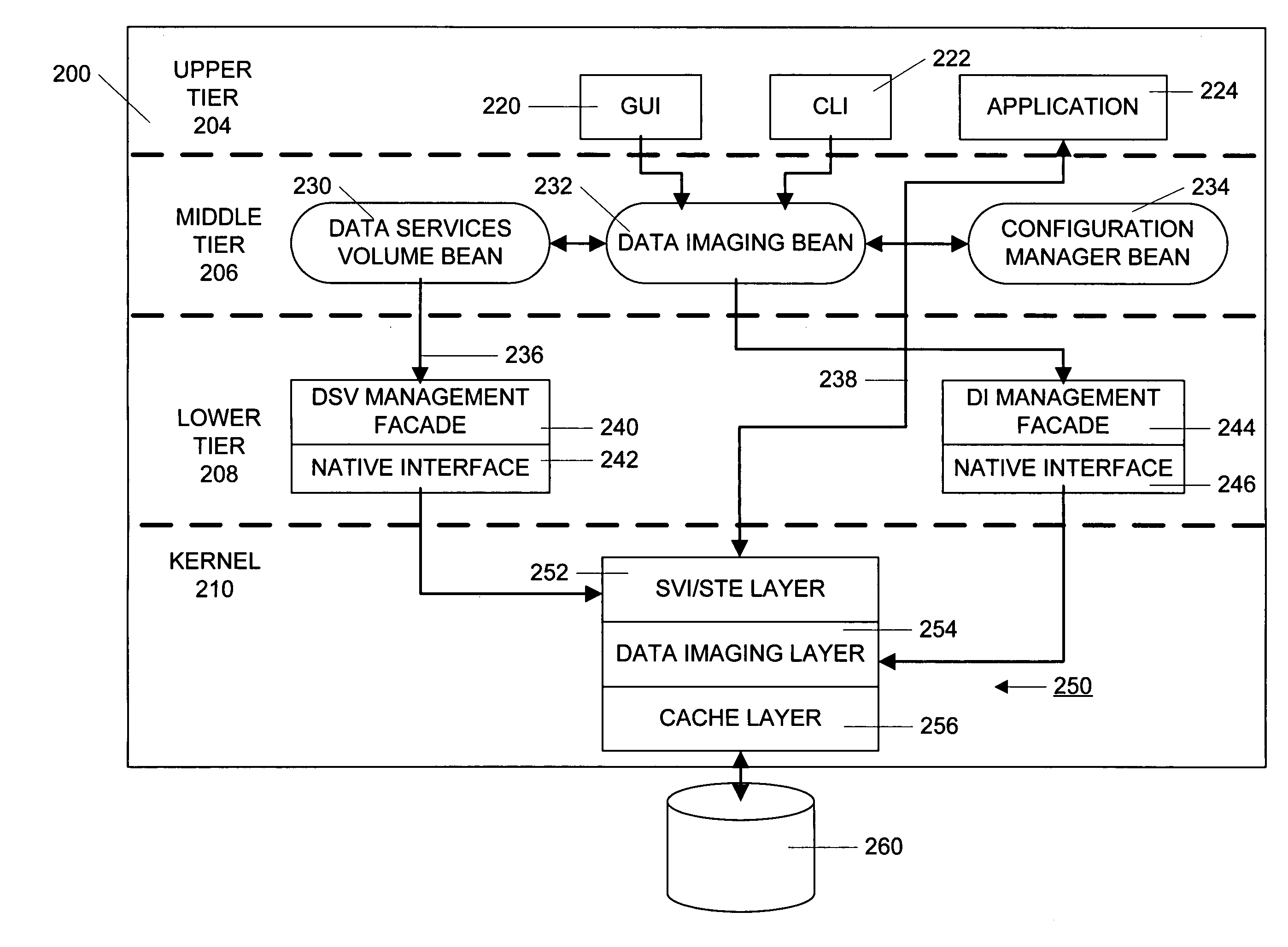

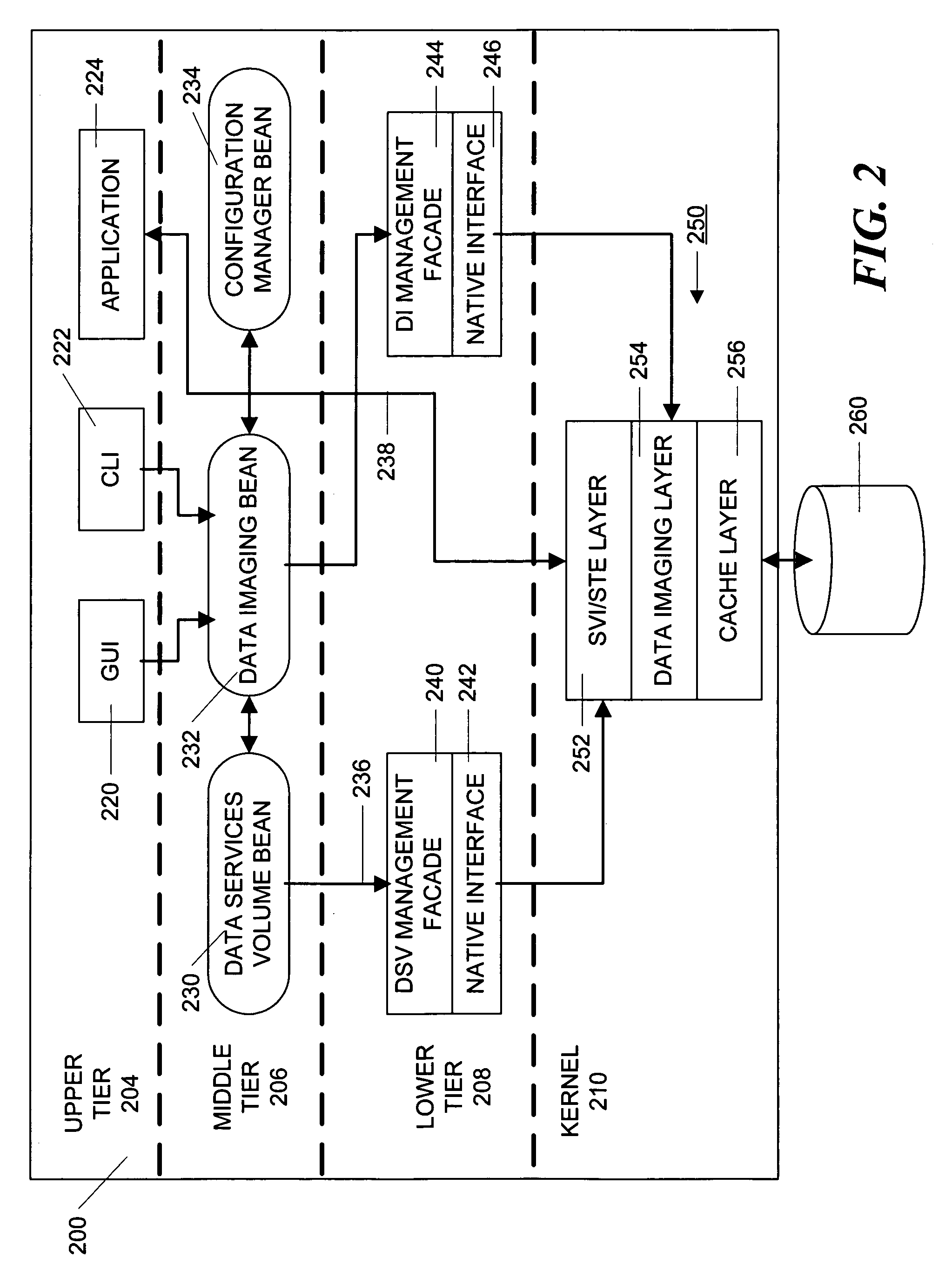

Method and apparatus for managing data caching in a distributed computer system

A three-tiered data caching system is used on a distributed computer system comprising hosts connected by a network. The lowest tier comprises management facade software running on each machine that converts a platform-dependent interface written with low-level kernel routines that actually implement the data caching system to platform-independent method calls. The middle tier is a set of federated Java beans that communicate with each other, with the management facades and with the upper tier of the system. The upper tier of the inventive system comprises presentation programs that can be directly manipulated by management personnel to view and control the system. In one embodiment, the federated Java beans can run on any machine in the system and communicate, via the network. A data caching management facade runs on selected hosts and at least one data caching bean also runs on those hosts. The data caching bean communicates directly with a management GUI or CLI and is controlled by user commands generated by the GUI or CLI. Therefore, a manager can configure and control the data caching system from a single location.

Owner:ORACLE INT CORP

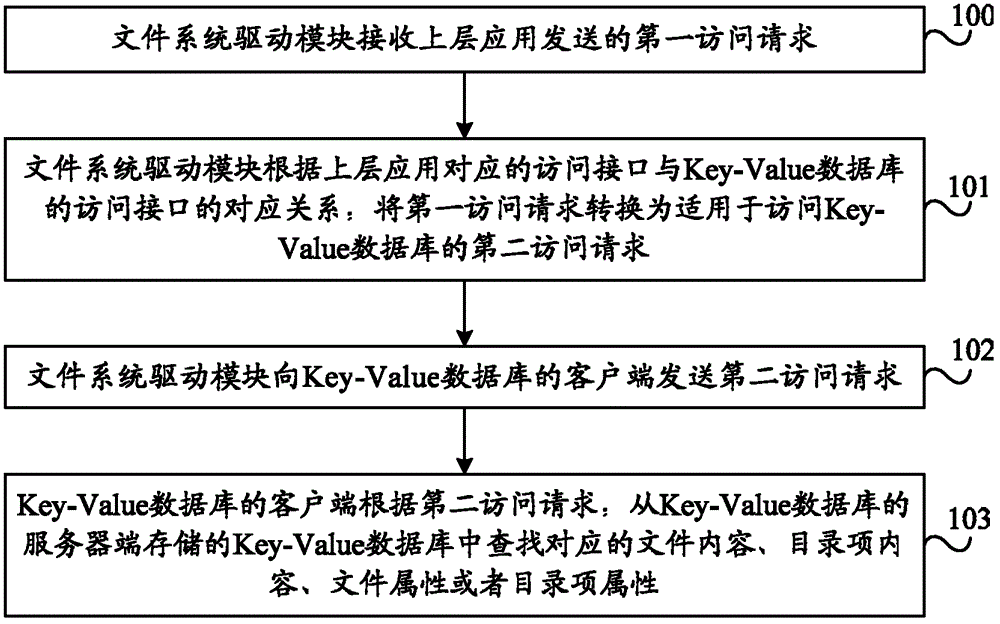

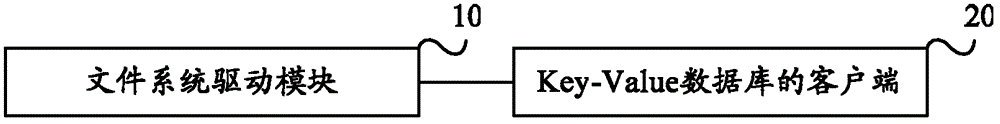

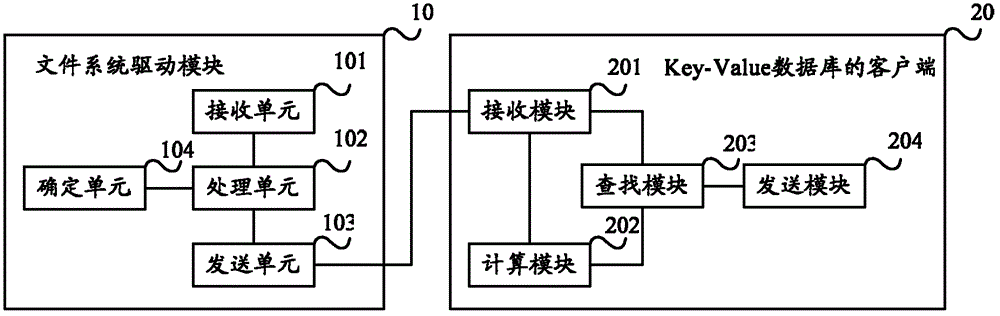

Method and system of file access

Active | CN102725755A | Reduce access overhead | Improve access efficiency | Database querying | Special data processing applications | Uplevel | File system

Embodiments of the present invention provide a method and a system of file access. The method comprises that: a file system driving module receives a first access request sent by an upper application; the file system driving module, according to the corresponding relation between an access interface corresponding to the upper application and an access interface of the key-value database, converts the first access request into a second access request which is suitable for access to the key-value database; the file system driving module sends the second access request to a client of the key-value database; and the client of the key-value database, according to the second access request, searches corresponding file content or directory entry content or file attributes or directory entry attributes from the key-value database which is stored in a server of the key-value database. When the technical schemes in the embodiments are compared with technical schemes of layer-by-layer access of metadata in file systems of POSIX in the prior art, access cost is relatively small and access efficiency is relatively high.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD
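The request conversion in the abstract above, where a file-style access becomes a key-value lookup, might look something like the sketch below. The key prefixes, the `KeyValueClient` stand-in (a plain dict plays the role of the database server), and the `FileSystemDriver.handle` method are assumptions made for illustration, not the interfaces described in the patent.

```python
class KeyValueClient:
    """Stand-in for a key-value database client; a dict plays the server."""

    def __init__(self, store):
        self._store = store

    def get(self, key):
        return self._store.get(key)


class FileSystemDriver:
    # Assumed correspondence between file-access operations and key prefixes.
    _KEY_PREFIX = {"read": "content:", "stat": "attr:", "listdir": "dirent:"}

    def __init__(self, client):
        self._client = client

    def handle(self, operation, path):
        """Convert a file-style request into a single key-value lookup."""
        key = self._KEY_PREFIX[operation] + path
        return self._client.get(key)
```

Because the full path is part of the key, a lookup in this sketch needs one request rather than a walk through each directory level, which is the cost difference the abstract points to.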

Nested visibility for a container hierarchy

Active | US7173530B2 | Quickly and easily gather information | Electric signal transmission systems | Digital data processing details | Visibility | Uplevel

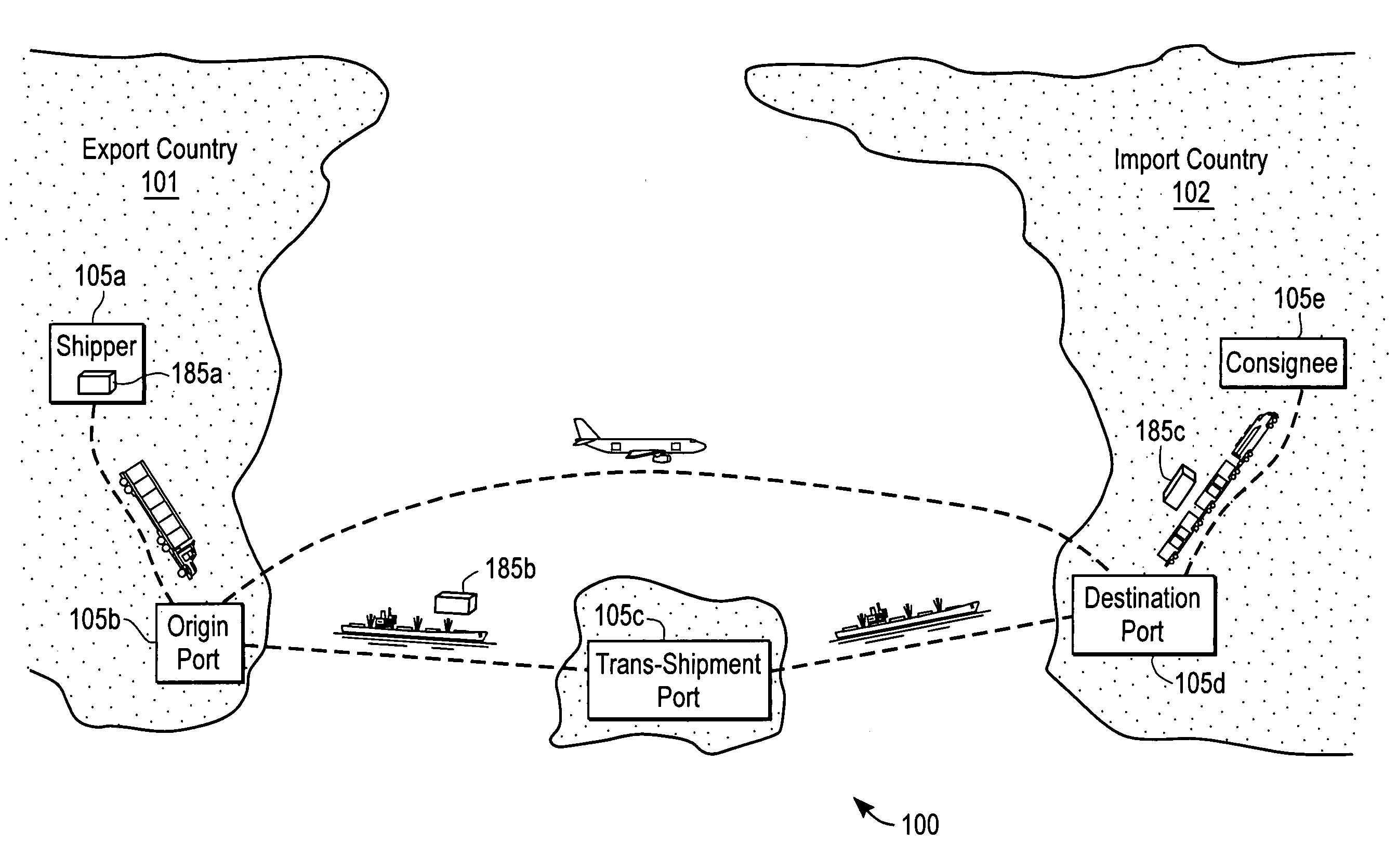

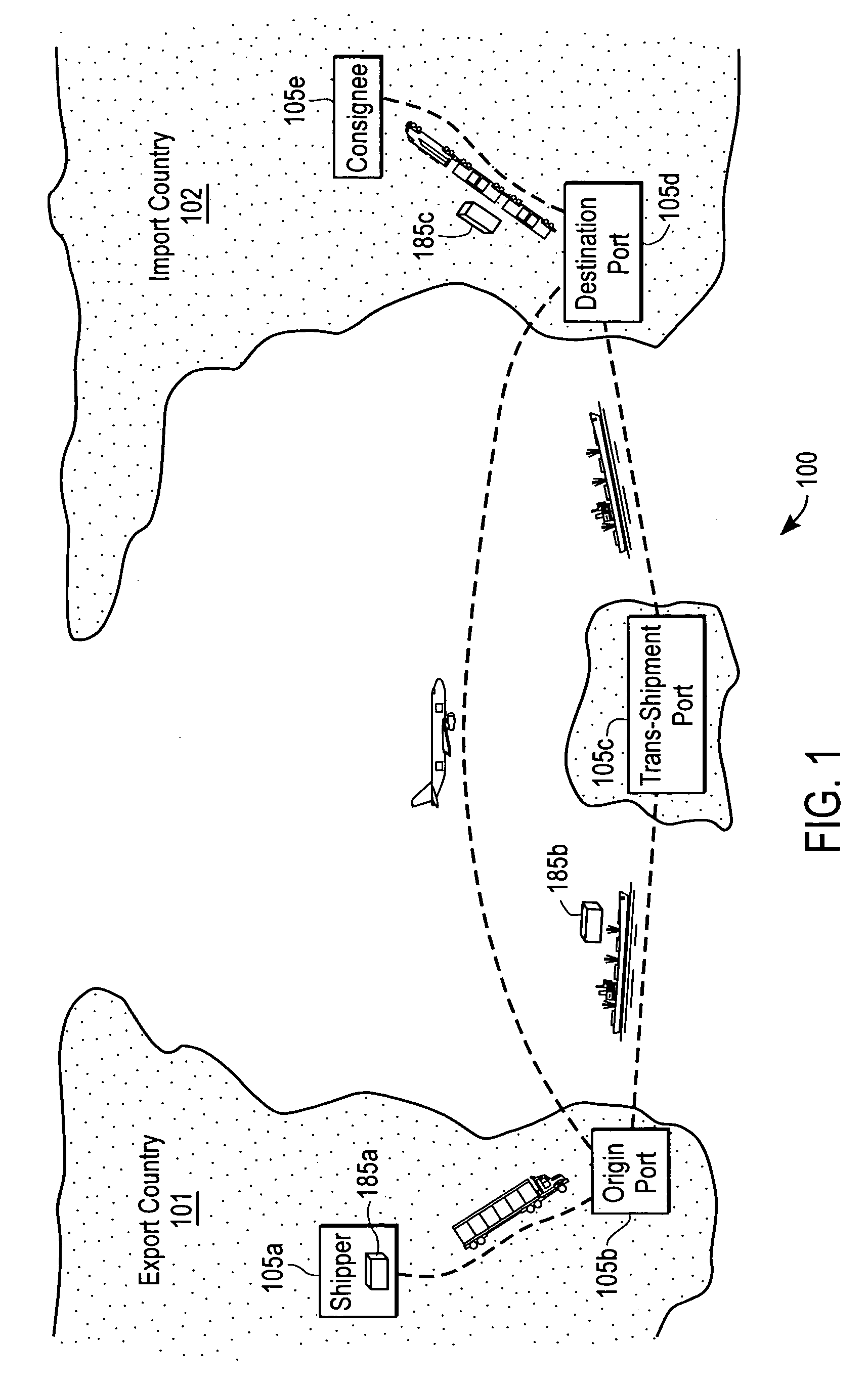

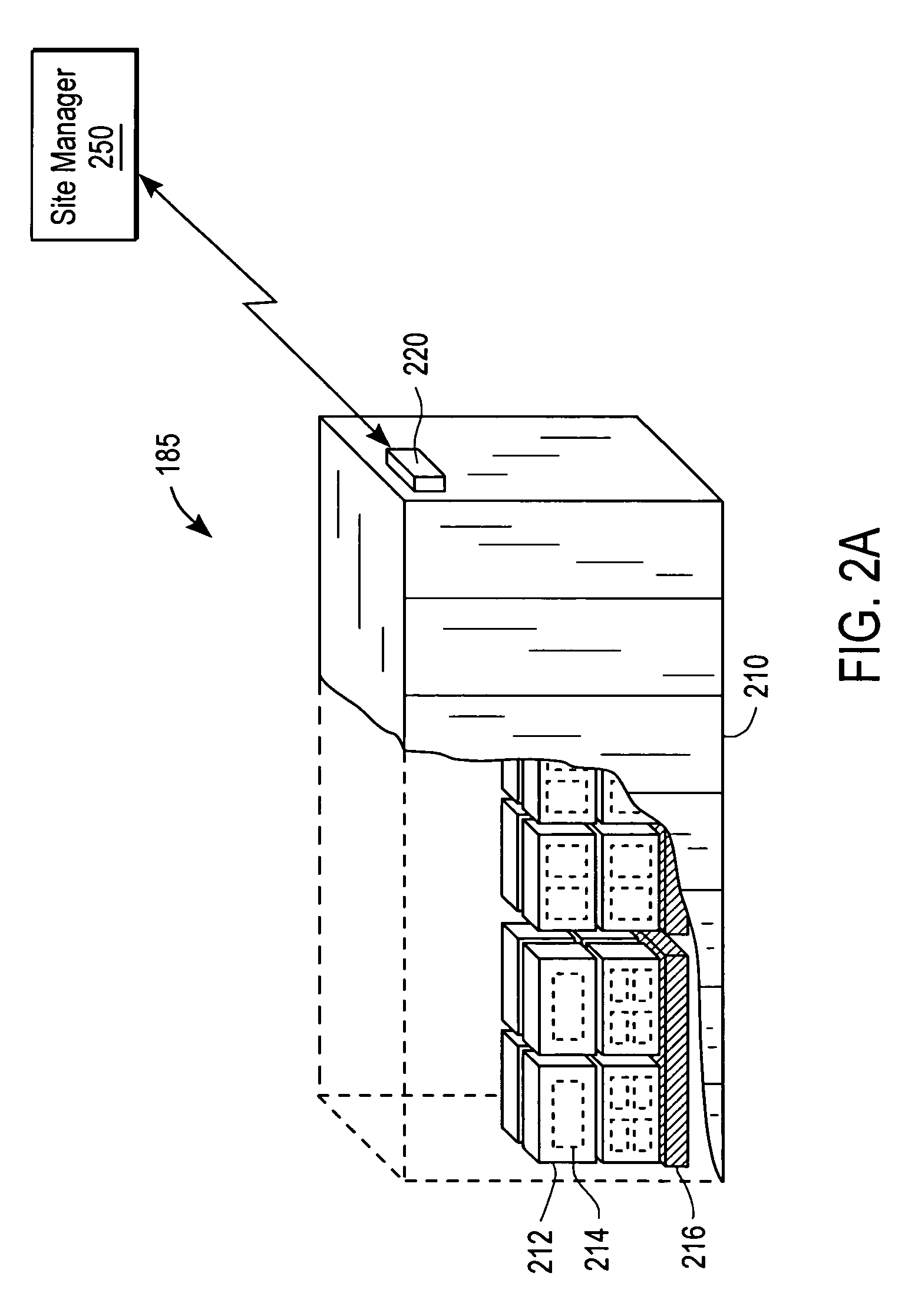

A nested container establishes a relative hierarchy of associated containers as logistical units. The relative hierarchy comprises lower-layer containers and upper-layer containers relative to the nested container. To do so, the nested container sends interrogation signals to neighboring containers in order to retrieve identification information and layer information. Also, the nested container sends its own identification information and layer information responsive to received interrogation signals. The nested container outputs the relative hierarchy to, for example, a site server or agent using a hand-held device. The identification module comprises, for example, an RFID (Radio Frequency IDentification) device.

Owner:SAVI TECH INC

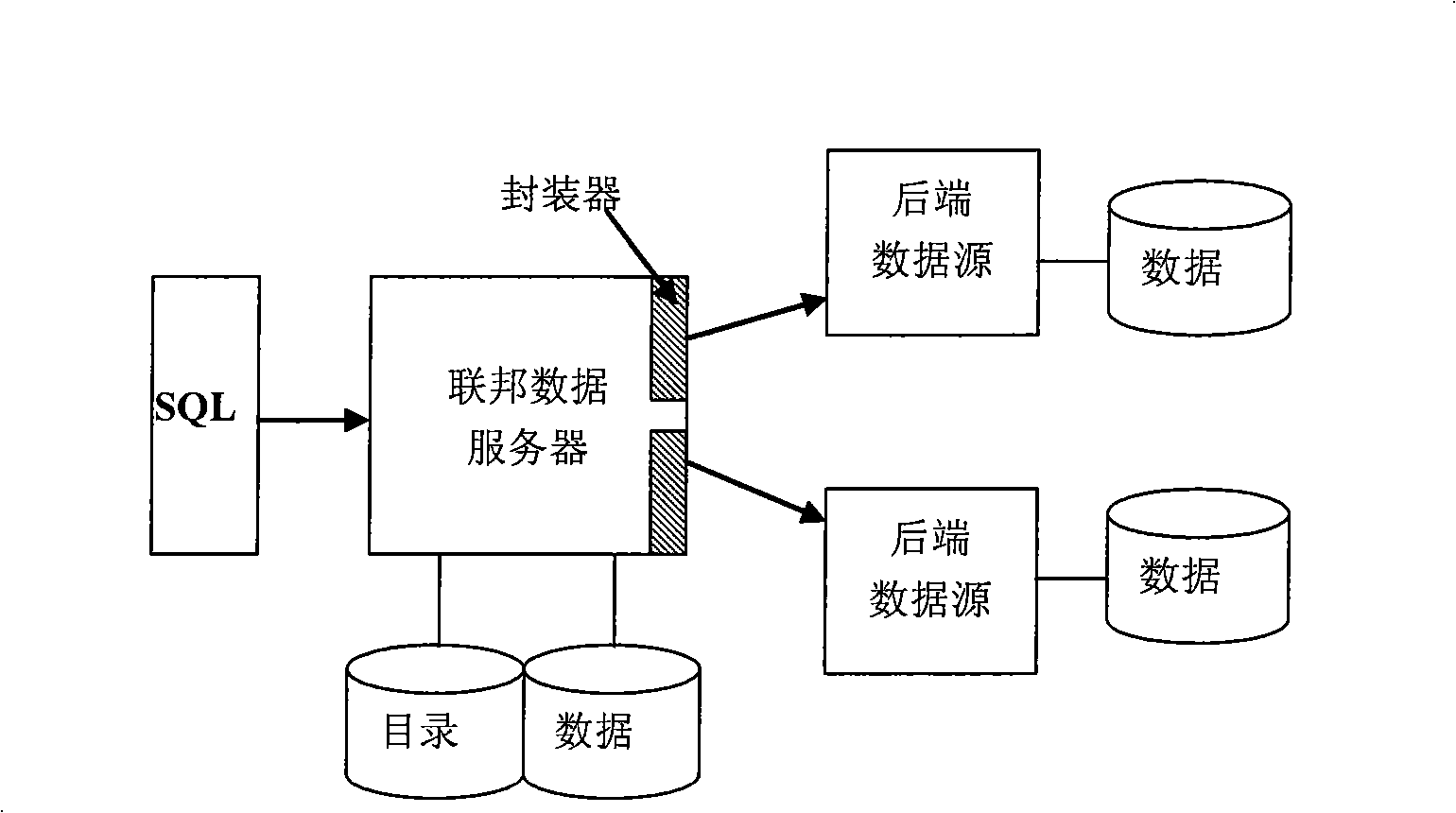

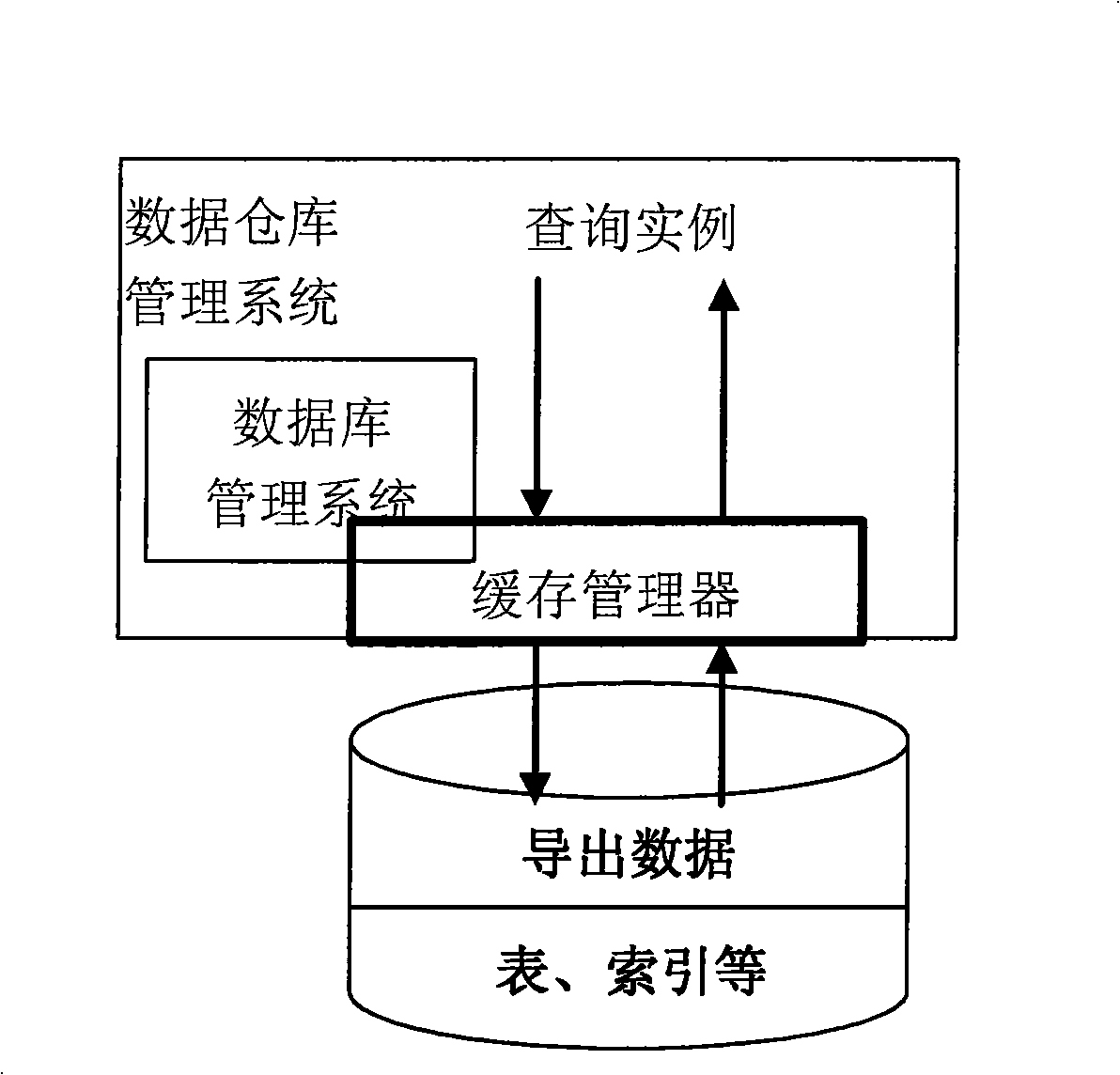

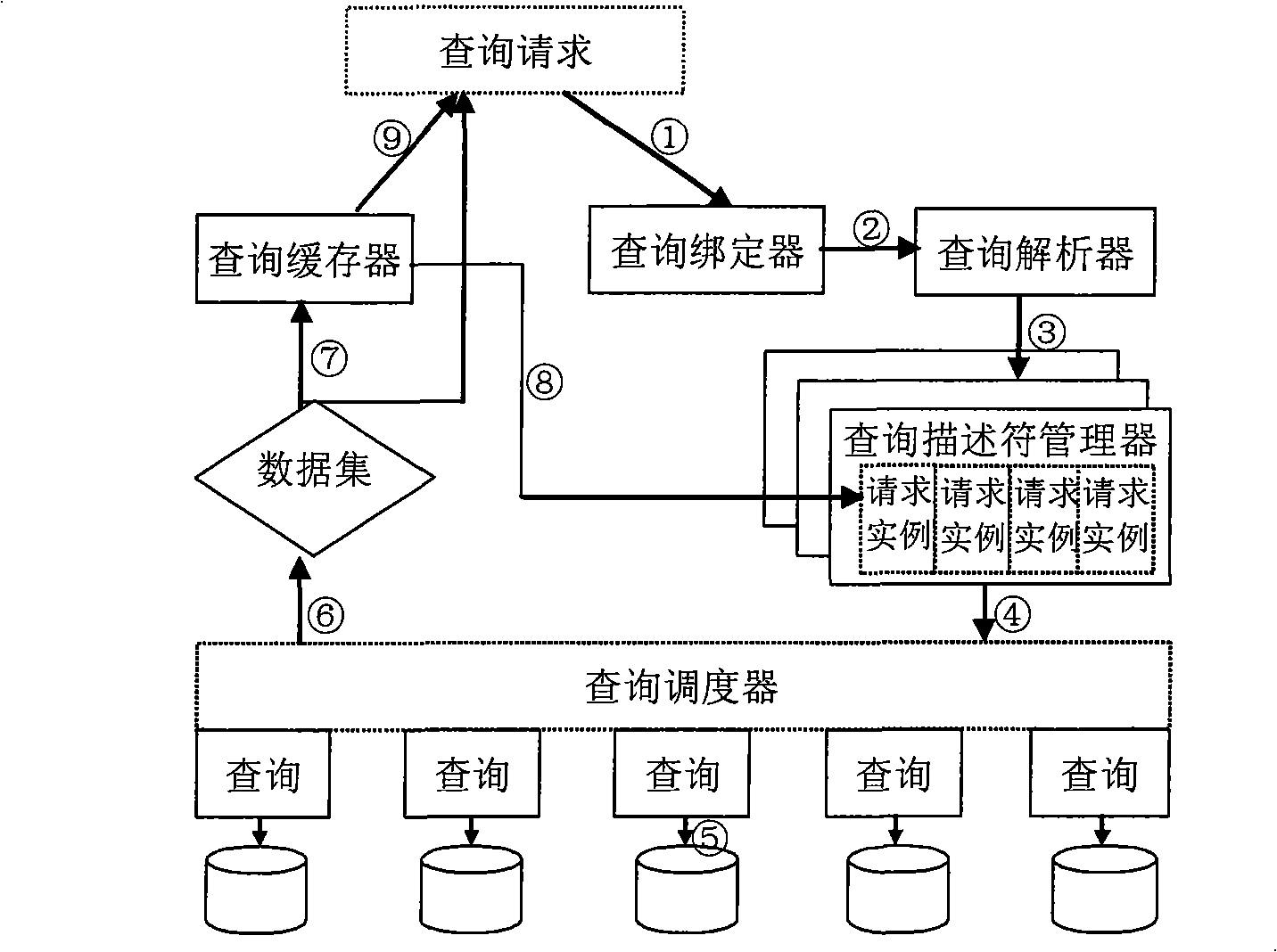

System for implementing network search caching and search method

Inactive | CN101329686A | Application is completely transparent | Improve timeliness | Special data processing applications | Time mark | Data set

The invention provides a caching system for network inquiries, comprising an inquiry binder, an inquiry resolver, an inquiry descriptor manager, an inquiry scheduler and an inquiry buffer. The inquiry binder binds virtual-view meta-information relating to instances of inquiry requests to system memory and sets, in order from the lower layer to the upper layer, the time marks of write operations on the virtual views. The inquiry resolver resolves the virtual views into a query tree according to the validity of the inquiry request instances. The inquiry descriptor manager stores a queue of the inquiry request instances imposed on the virtual views, determines which instances enter and exit the queue, and judges the validity of the instances. The inquiry scheduler dispatches the inquiry nodes of the query tree so as to execute the inquiry. The inquiry buffer creates and deletes temporary tables, manages the data sets in the temporary tables, updates the time marks of the inquiry request instances in the inquiry descriptor, and outputs the inquiry result. Caching in the system is incremental, which improves inquiry performance and throughput and provides transparent support for the processing of inquiry requests.

Owner:海南南海云信息技术有限公司

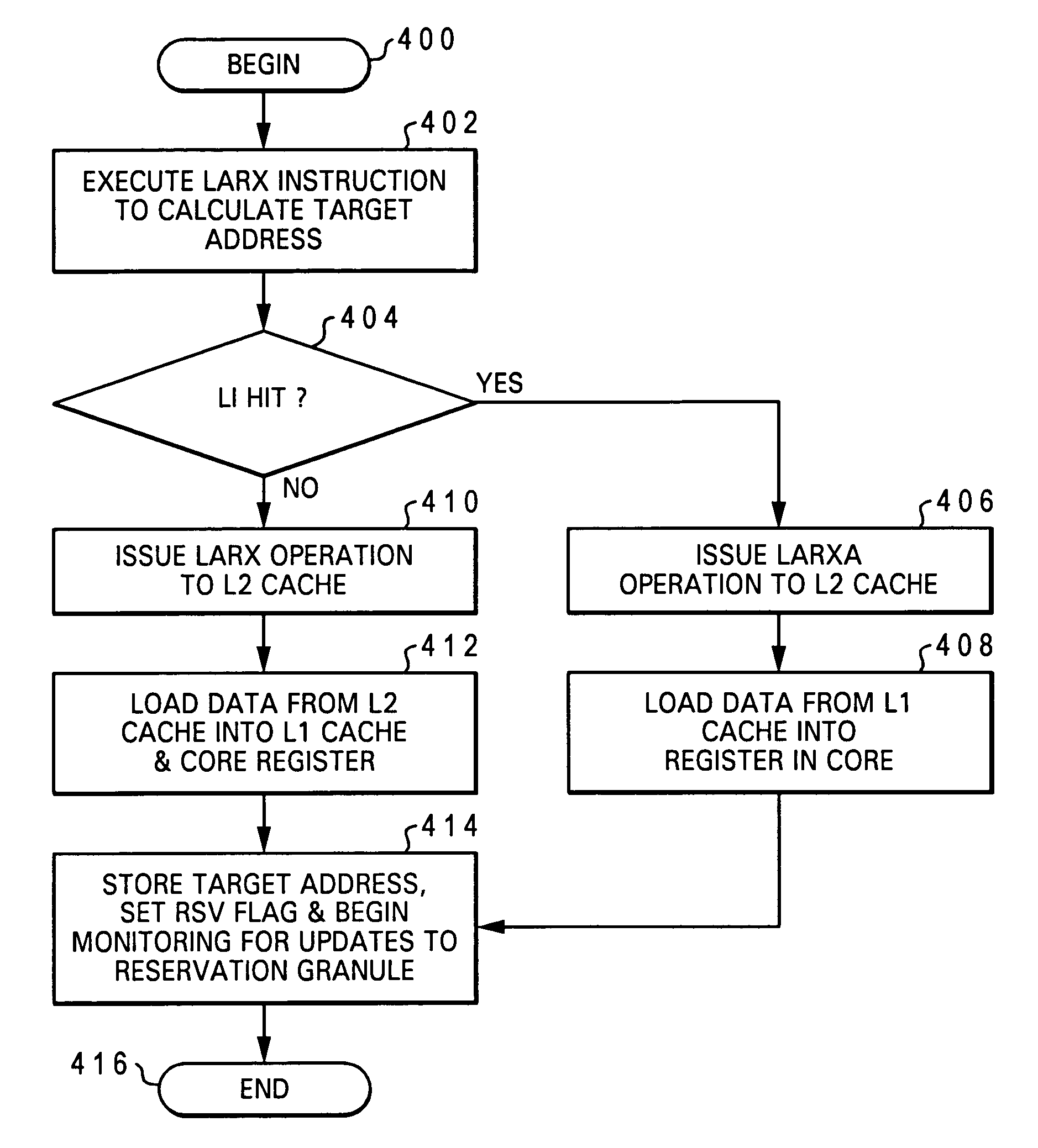

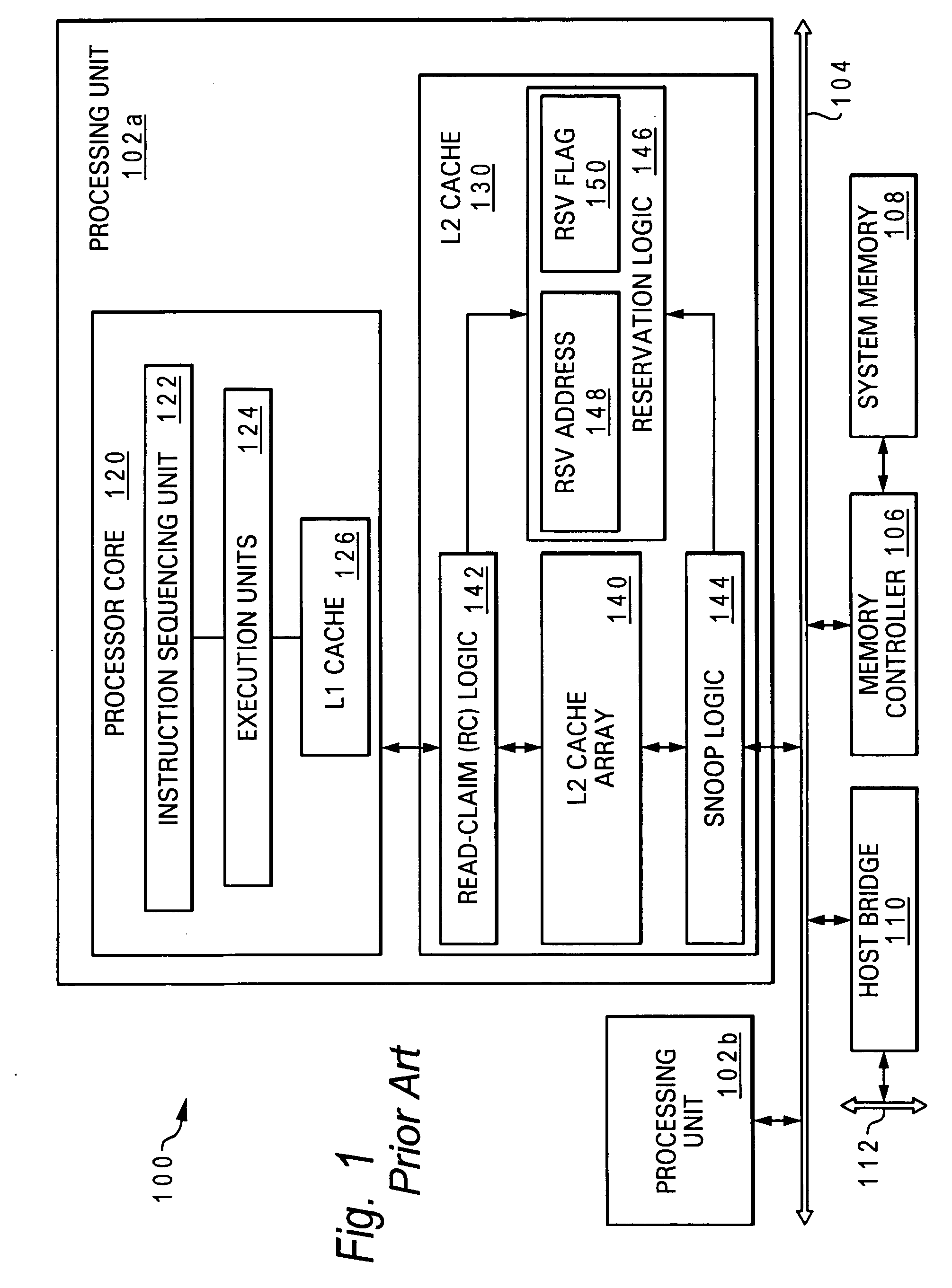

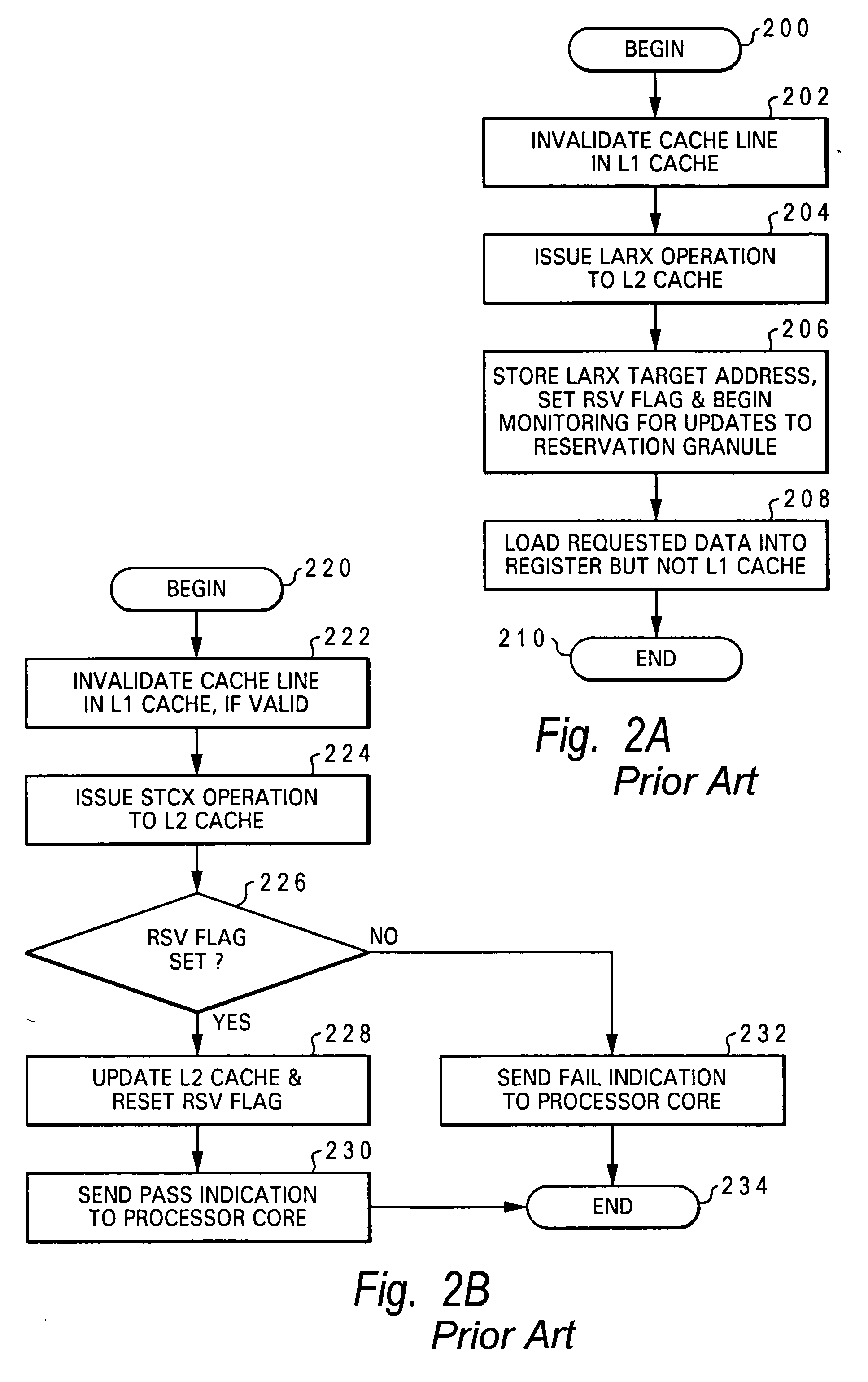

Processor, data processing system and method for synchronizing access to data in shared memory

A processing unit for a multiprocessor data processing system includes a store-through upper level cache, an instruction sequencing unit that fetches instructions for execution, at least one instruction execution unit that executes a store-conditional instruction to determine a store target address, a store queue that, following execution of the store-conditional instruction, buffers a corresponding store operation, and sequencer logic associated with the store queue. The sequencer logic, responsive to receipt of a latency indication indicating that resolution of the store-conditional operation as passing or failing is subject to significant latency, invalidates, prior to resolution of the store-conditional operation, a cache line in the store-through upper level cache to which a load-reserve operation previously bound.

Owner:GOOGLE LLC

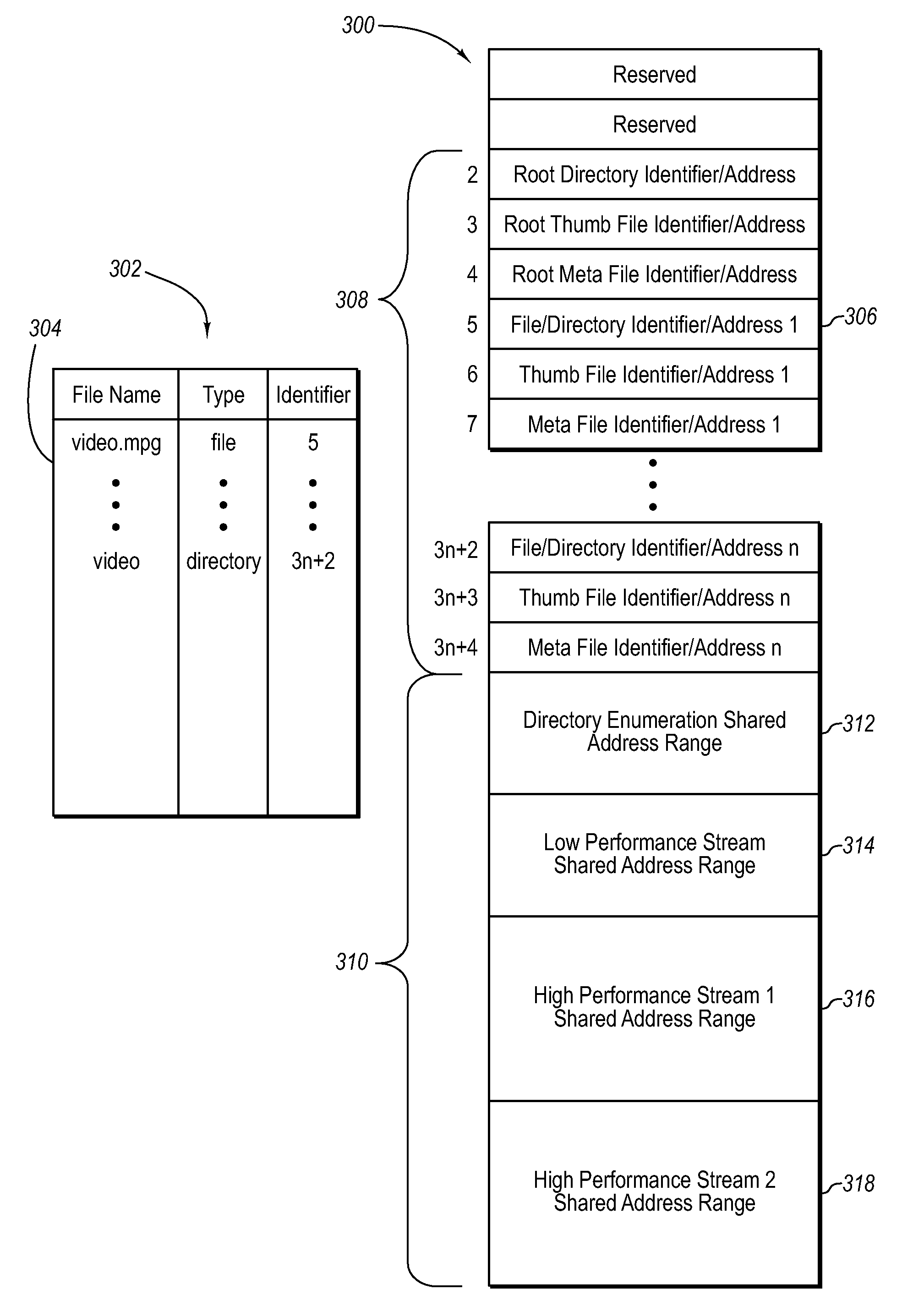

Network directory file stream cache and id lookup

Inactive | US20080028033A1 | Digital computer details | Special data processing applications | Uplevel | File system

Caching meta information including file relationships at a local processor. A local processor requests files and directories from the remote file system by sending a network identifier used by the remote file system. At the local processor, a network identifier is used to access a remote upper level directory at the remote file system. Meta information is obtained from the remote file system about files or directories hierarchically below the upper level directory. The meta information about the files or directories hierarchically below the upper level directory is cached along with relationship information about the files or directories without storing network identifiers for the files or directories. A network identifier is cached or known for a directory hierarchically above the files or directories that can be used to obtain the network identifiers for desired files or directories from the remote file system.

Owner:KESTRELINK CORP

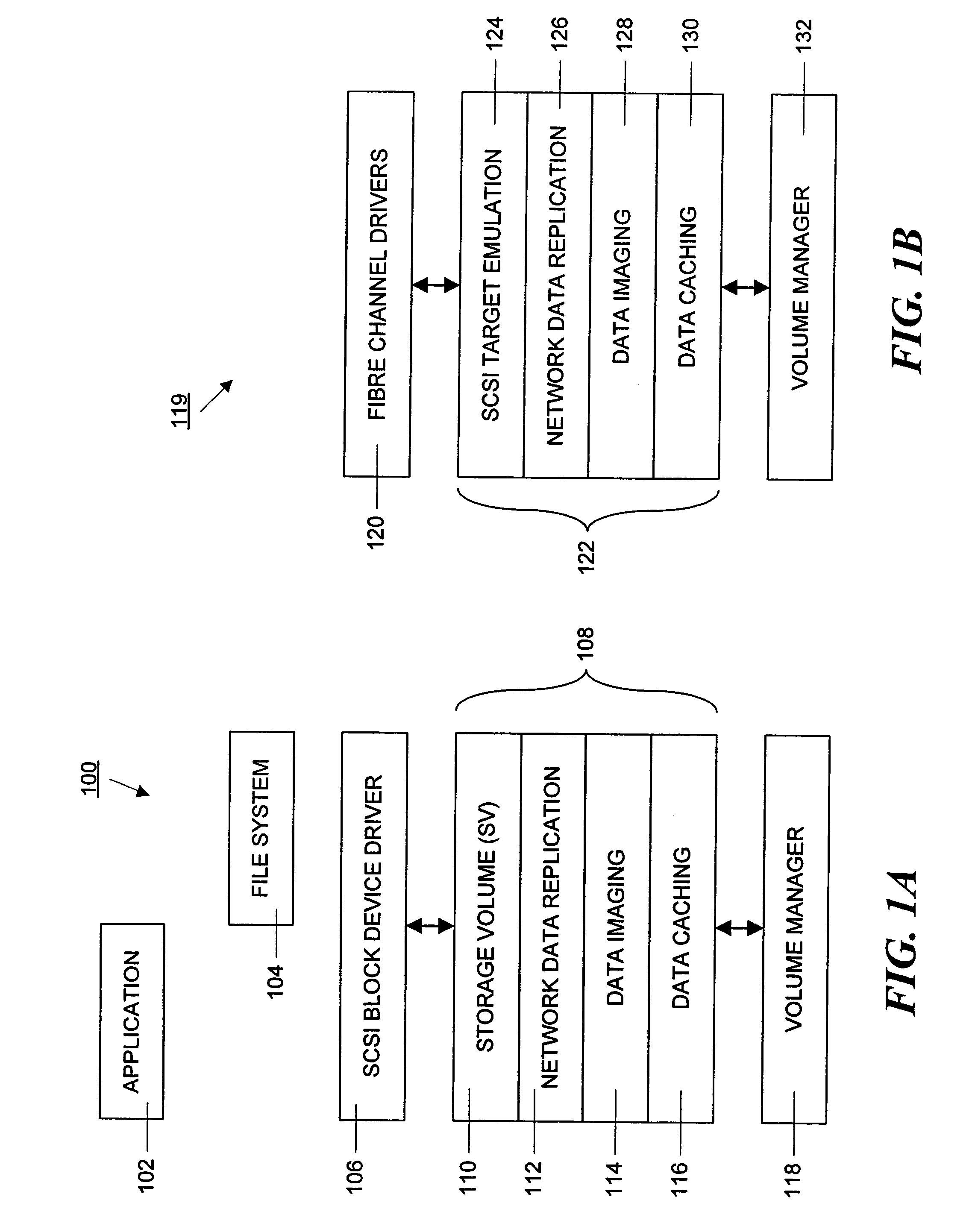

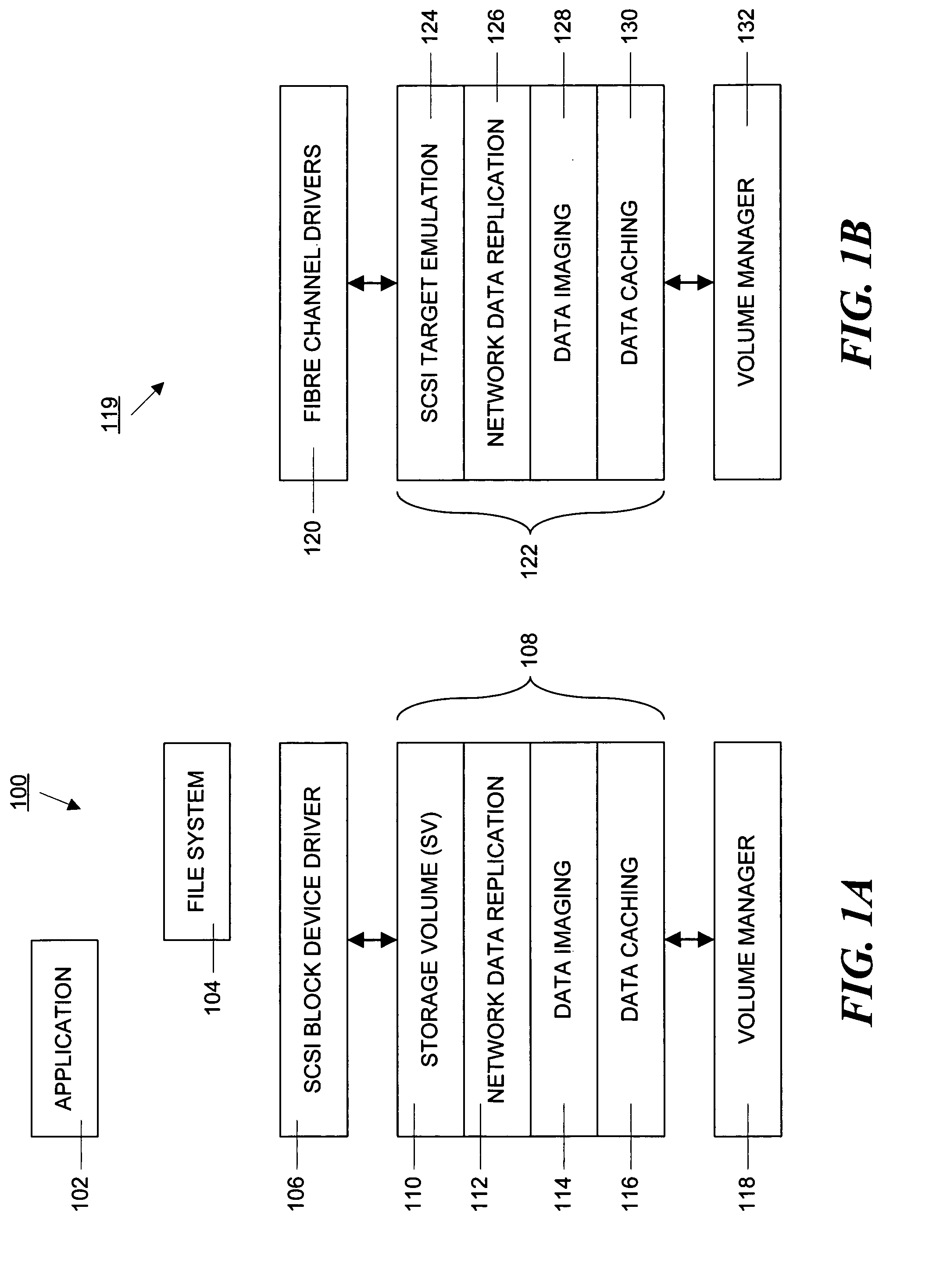

Method and apparatus for managing data volumes in a distributed computer system

Data volumes on local hosts are discovered and managed by federated Java beans that run on each host. The Java beans form part of a three-tiered data services management system. The lowest tier comprises management facade software running on each machine that converts a platform-dependent interface written with the low-level kernel routines to platform-independent method calls. The middle tier is a set of federated Java beans that communicate with the management facades and with the upper tier of the system. The upper tier of the inventive system comprises presentation programs that can be directly manipulated by management personnel to view and control the system. The federated beans can configure and control data volumes with either a SCSI terminal emulation interface or a storage volume interface and use a logical disk aggregator to present all volumes available on a local host as a single “logical volume” in which all information regarding the various volumes is presented in a uniform manner.

Owner:ORACLE INT CORP

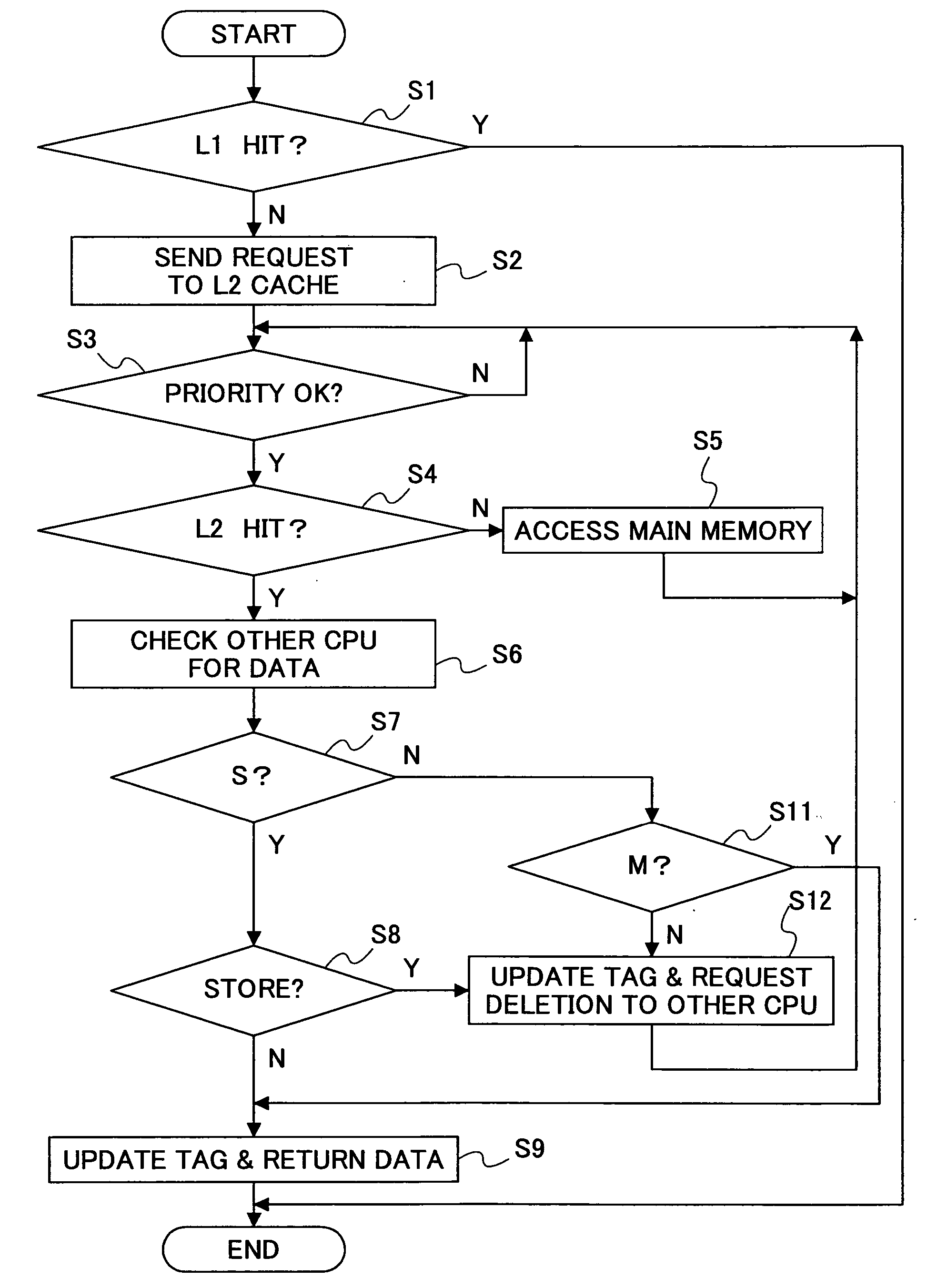

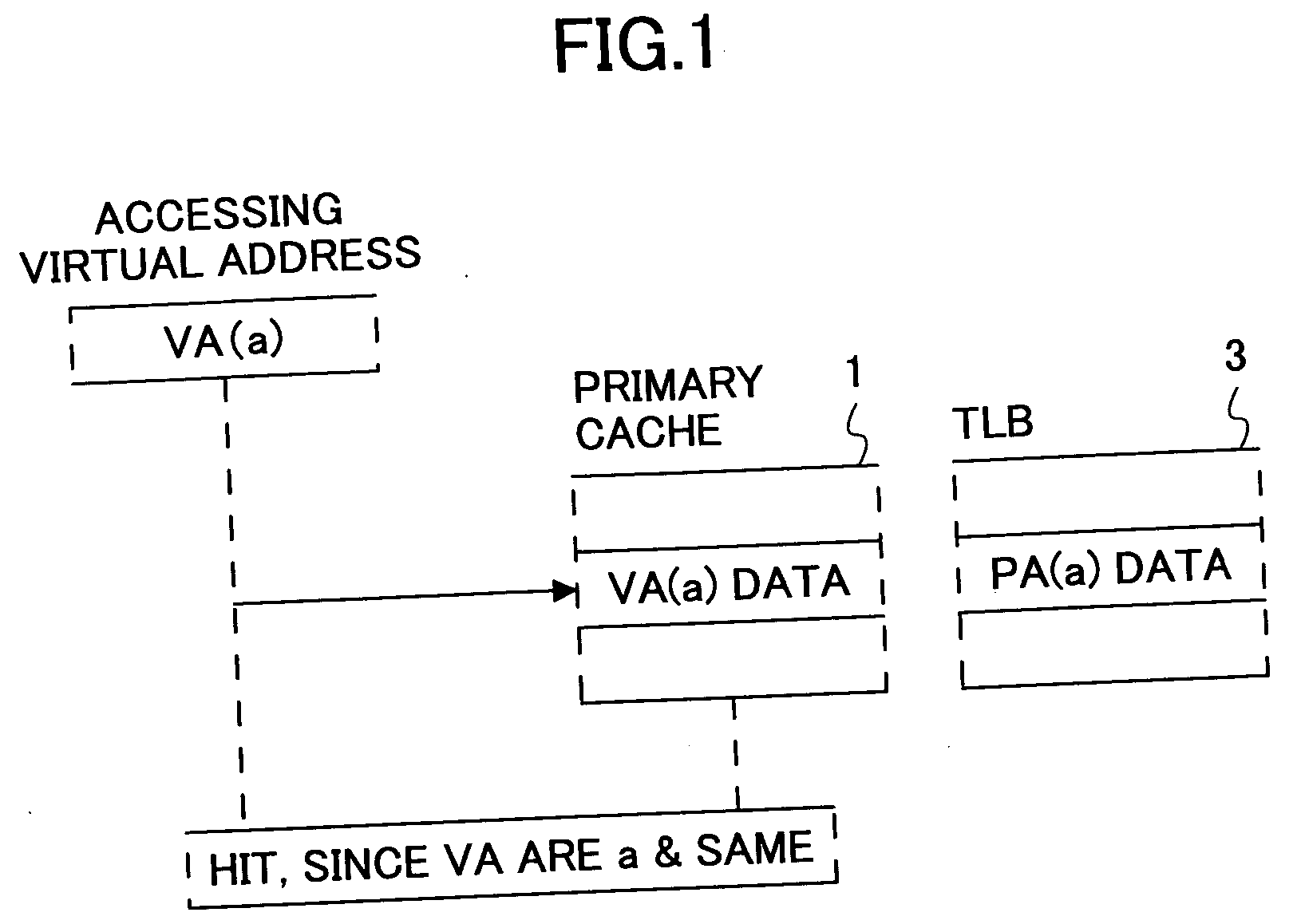

Cache control method and processor system

Active | US20050102473A1 | Shorten the time | Improve utilization efficiency | Memory addressing/allocation/relocation | Micro-instruction address formation | Uplevel | Parallel computing

A cache control method controls data sharing conditions in a processor system having multi-level caches that are in an inclusion relationship. The cache control method indexes an upper level cache by a real address and indexes a lower level cache by a virtual address, and prevents a real address that is referred to by a plurality of different virtual addresses from being registered a plurality of times within the same cache. A plurality of virtual addresses are registrable within the upper level cache, so as to relax the data sharing conditions.

Owner:FUJITSU LTD

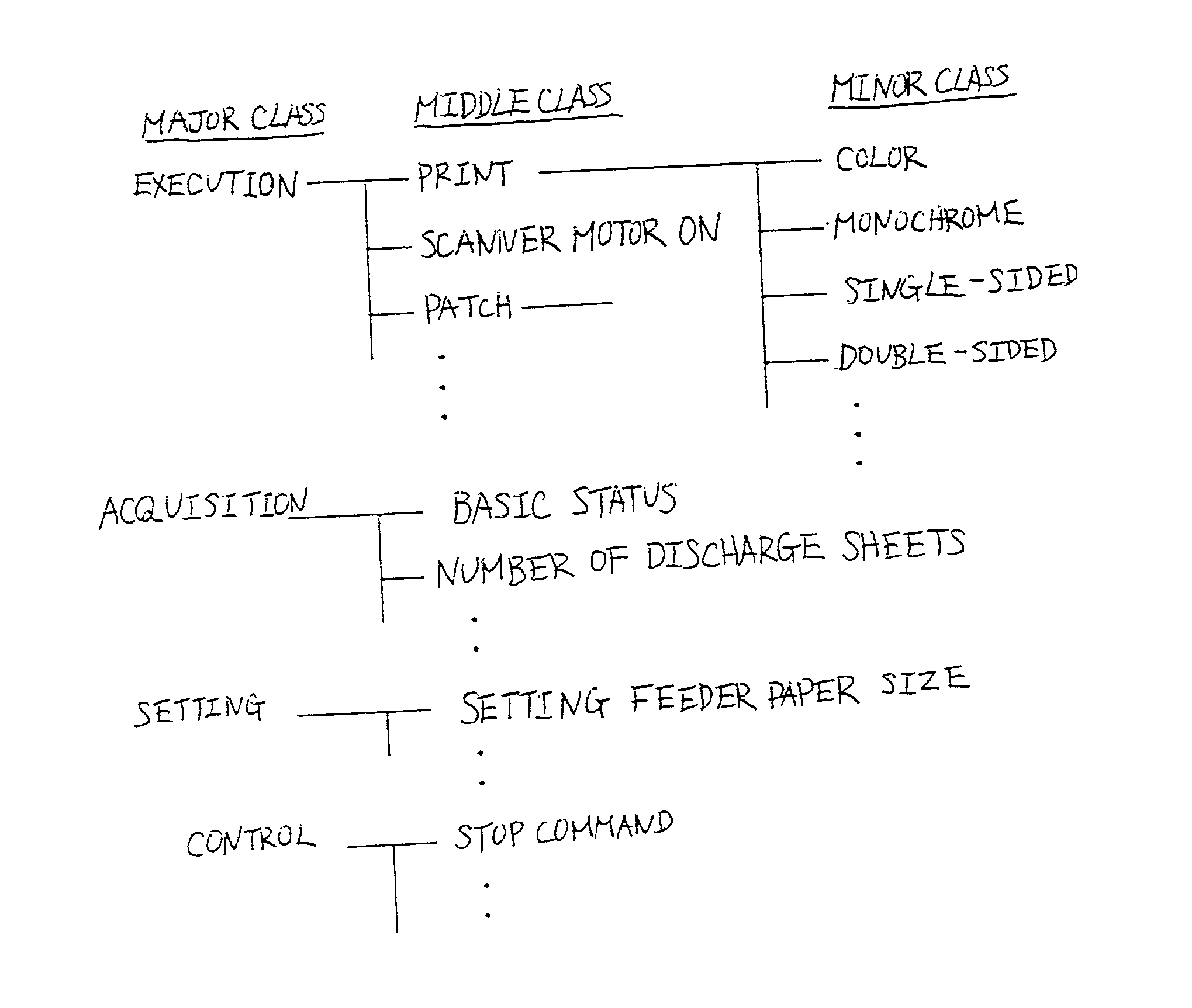

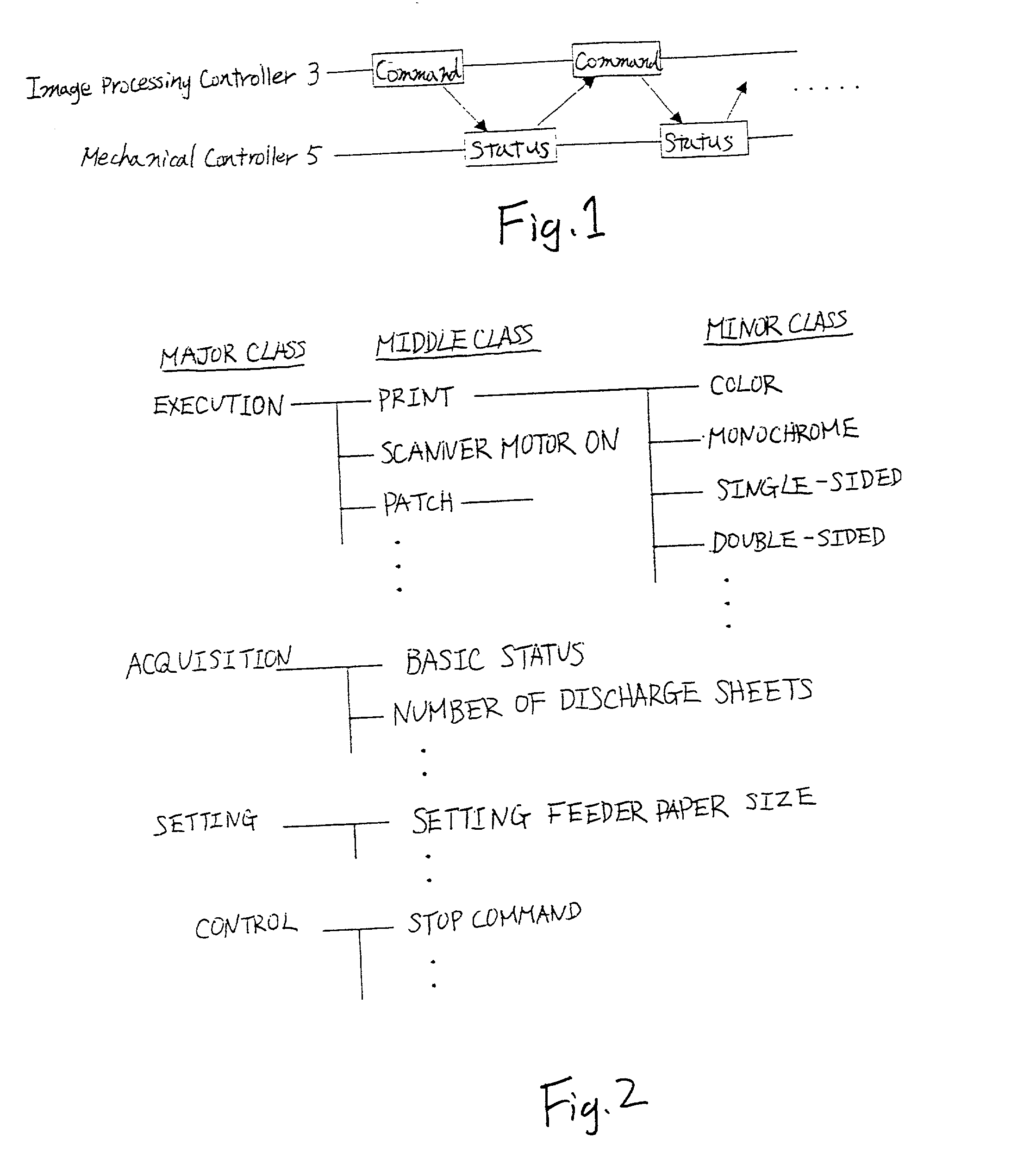

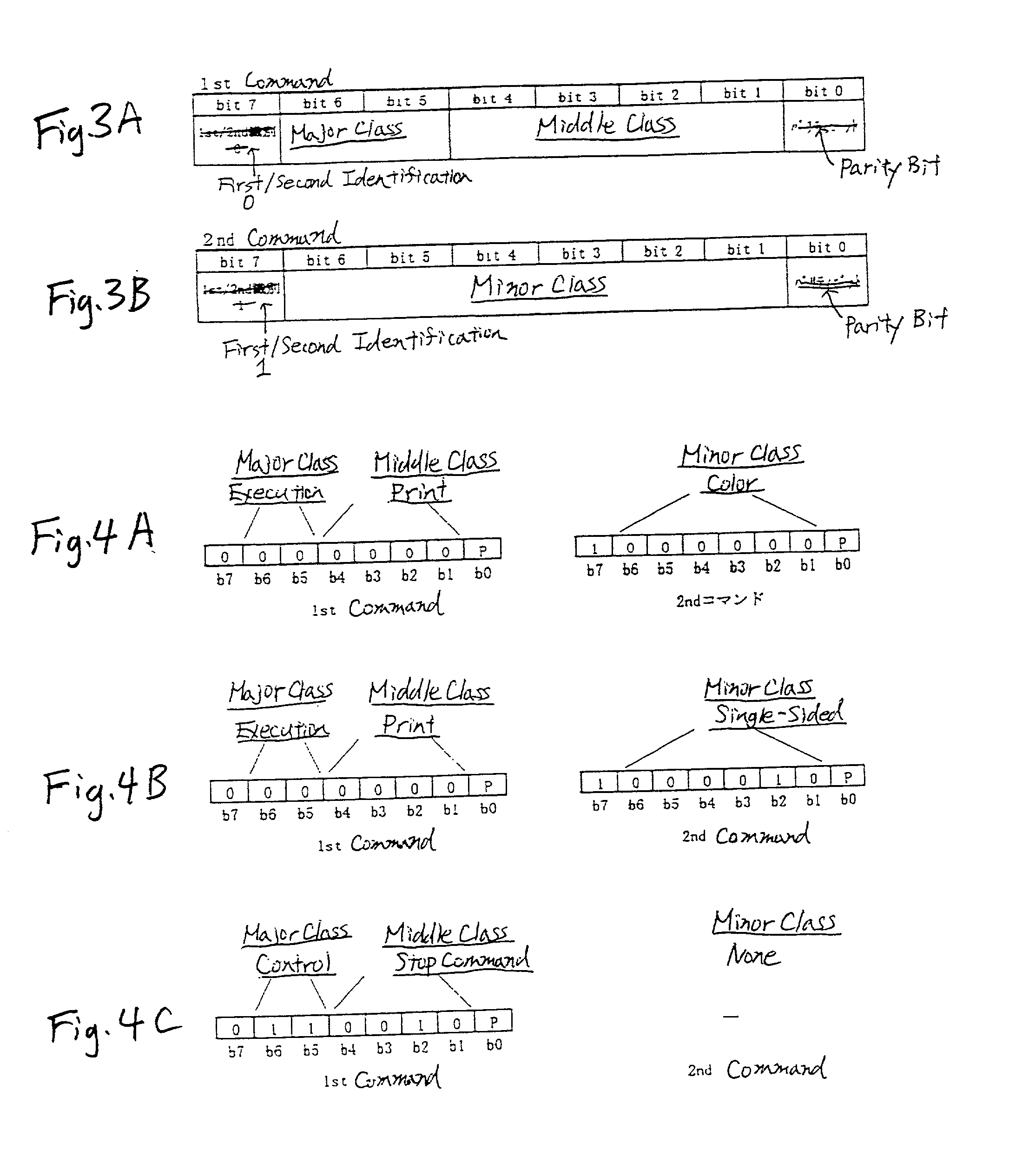

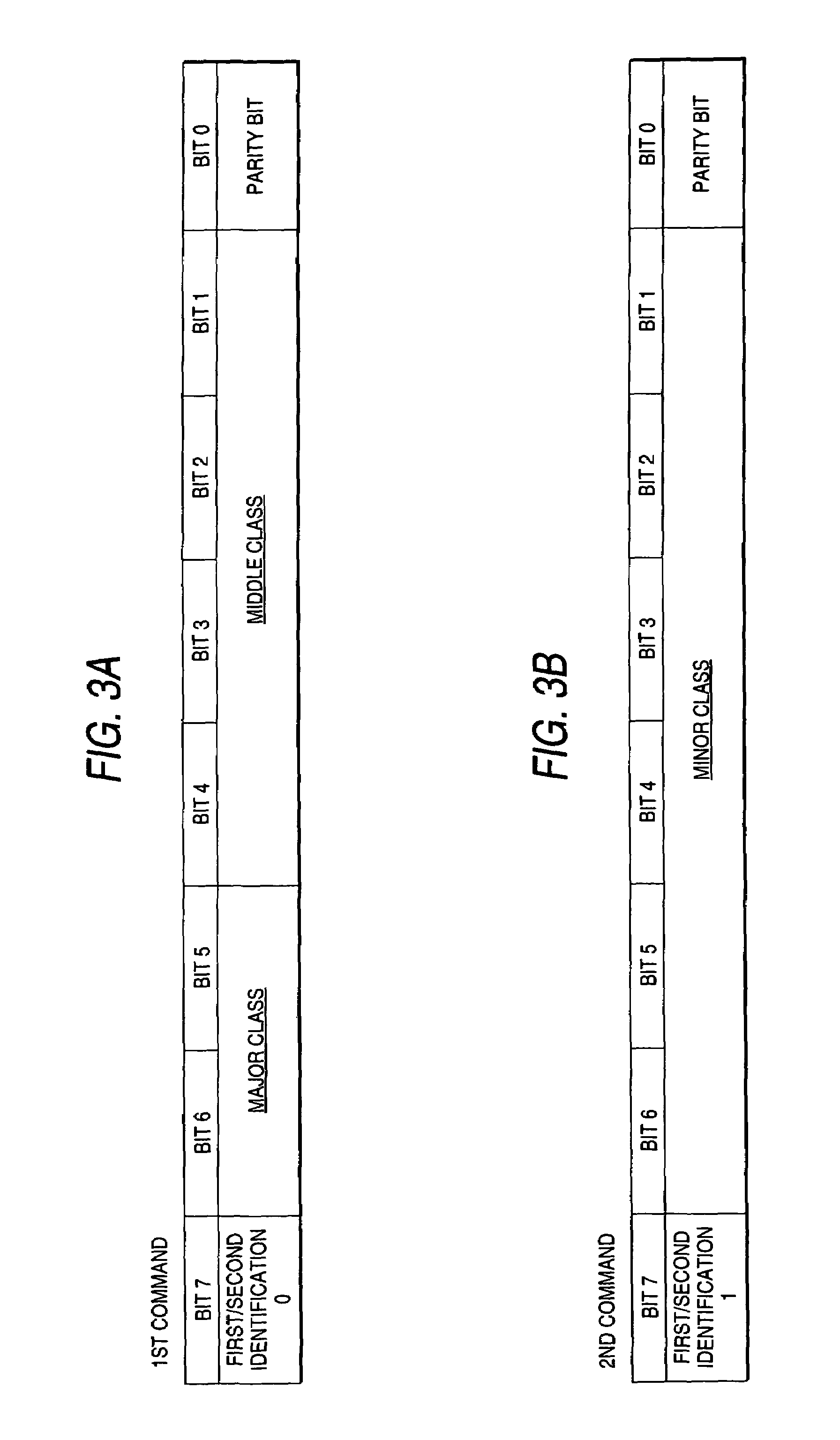

Printer having controller and print engine

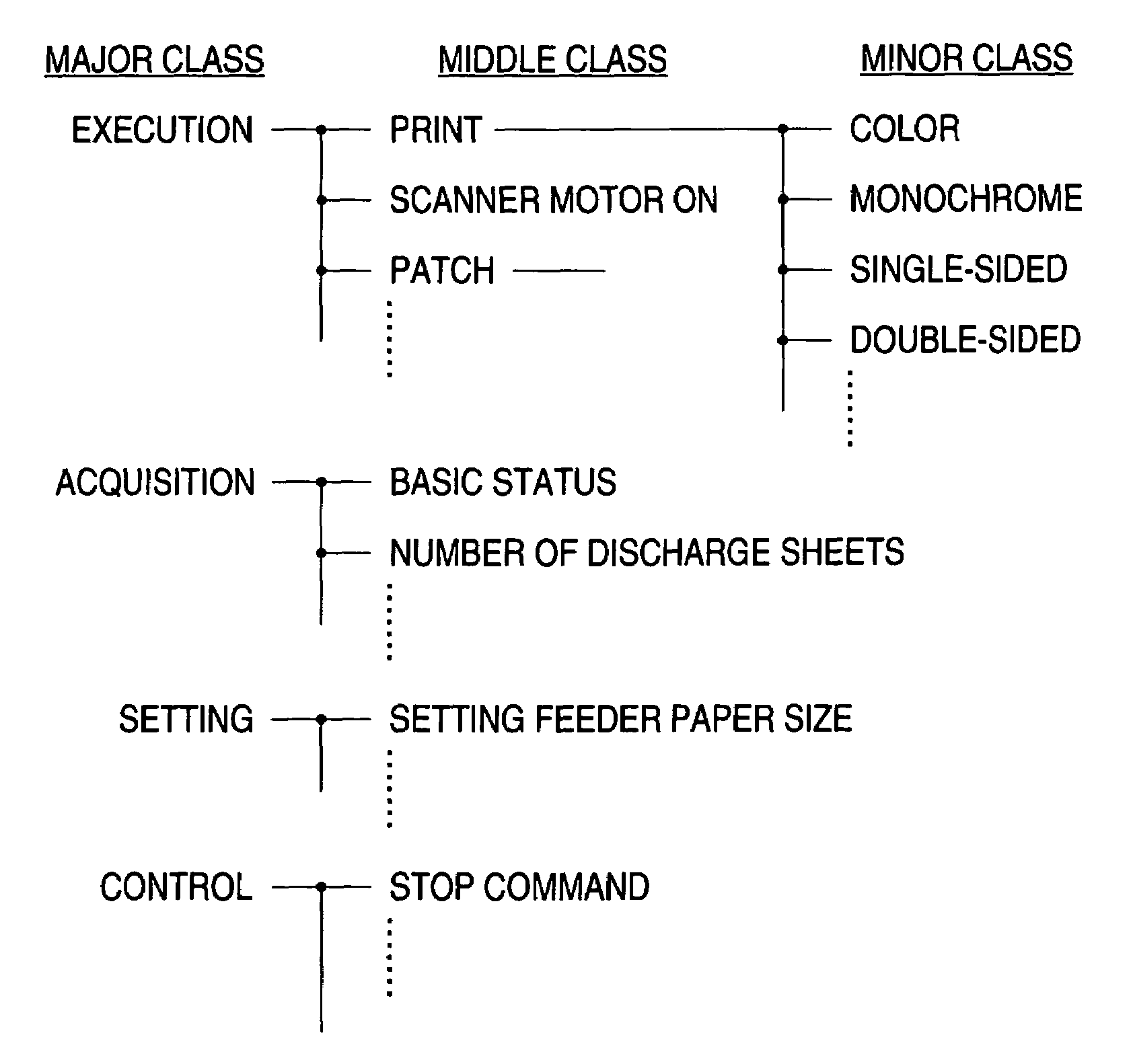

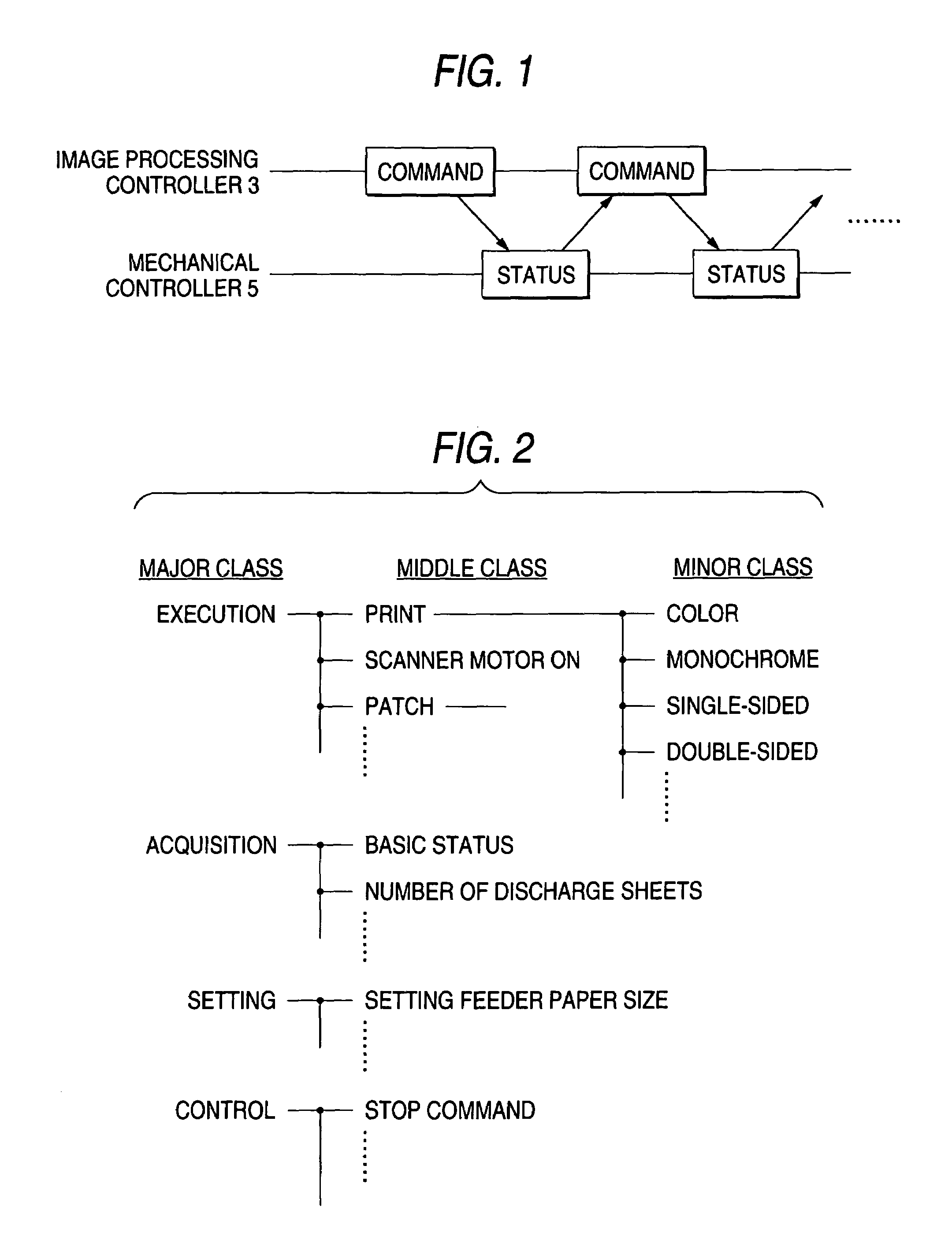

Inactive | US20020015172A1 | Increase printing speed | Simple interface | Visual presentation using printers | Other printing apparatus | Imaging processing | Uplevel

Commands transmitted from an image processing controller to an engine are classified into a plurality of layers in response to the information contents of the commands and when the engine receives a command of a subordinate layer, it recognizes that the command is received together with the command of a superior layer to that command, last issued preceding the command. Therefore, the engine performs internal control in accordance with the second command of the subordinate layer received and the first command of the superior layer not received at the time, but last received preceding the second command. For various instructions concerning execution of print, the commands of the superior layer of commands representing the instructions are made common.

Owner:SEIKO EPSON CORP
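The layered-command behavior in the abstract above, where a subordinate command is interpreted together with the last superior command received, can be illustrated with a small sketch. The two layer names, the `PrintEngine` class, and the command strings are hypothetical; the patent describes a plurality of layers, while this sketch uses only two for brevity.

```python
class PrintEngine:
    def __init__(self):
        self.last_superior = None  # most recent superior-layer command
        self.actions = []          # (superior, subordinate) pairs acted upon

    def receive(self, layer, command):
        if layer == "superior":
            # Remember the superior command; later subordinate commands inherit it.
            self.last_superior = command
        elif layer == "subordinate":
            if self.last_superior is None:
                raise ValueError("subordinate command received before any superior command")
            # Treat the subordinate command as if it arrived with the last superior one.
            self.actions.append((self.last_superior, command))
        else:
            raise ValueError(f"unknown layer: {layer!r}")
```

The controller in this sketch sends the superior command once and then only the short subordinate commands, which is one way the scheme could keep the interface simple.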

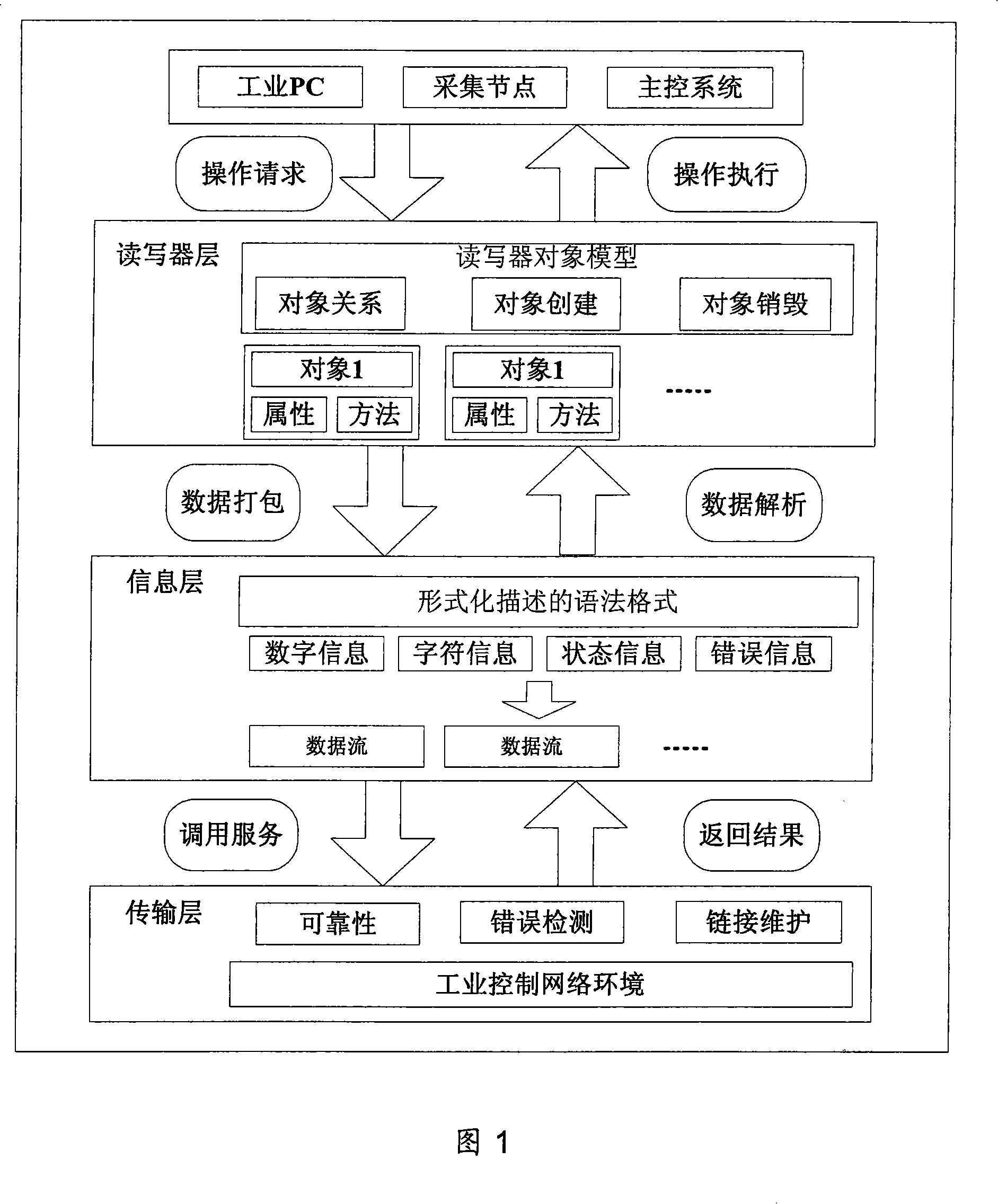

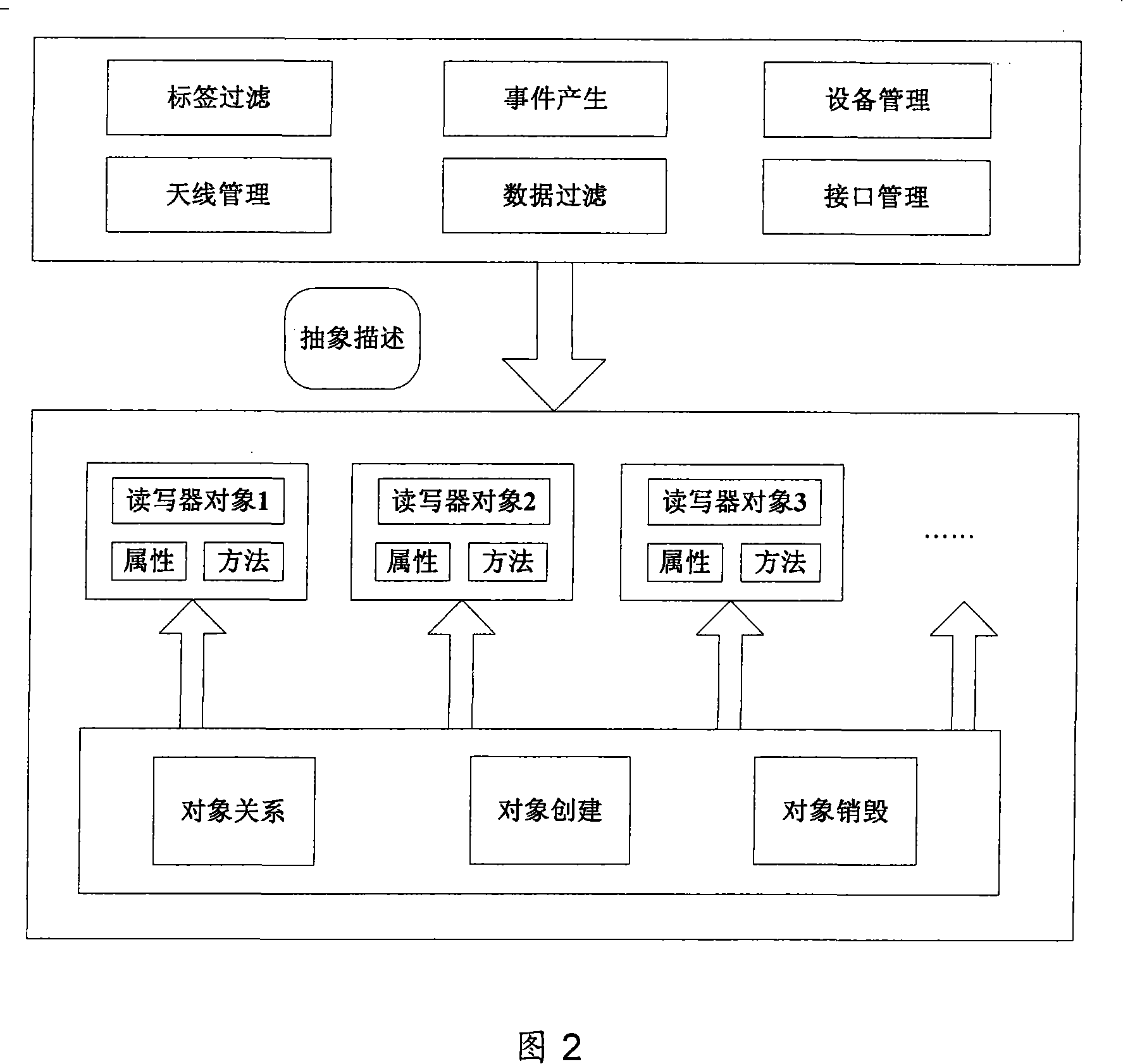

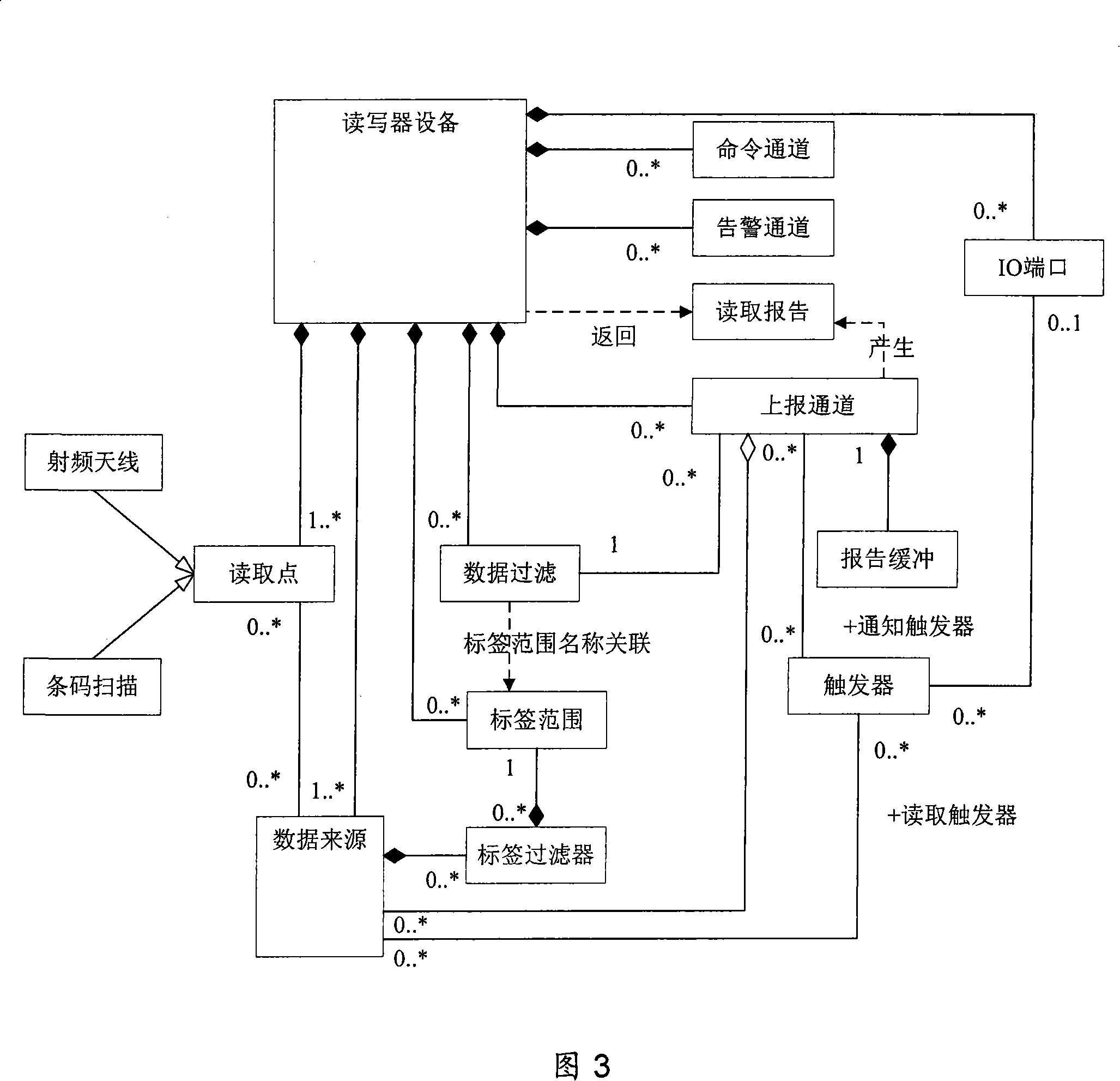

Universal read-write machine communicating protocol based on EPC read-write machine specification

Inactive | CN101114331A | Less integration | Reduce difficulty | Sensing record carriers | Transmission | Information layer | Computer hardware

A general reader-writer communication protocol based on the EPC reader-writer protocol comprises a reader-writer layer, an information layer, and a transmission layer. The reader-writer layer abstracts a user's operation request into the methods and attributes of an executed object and passes them to the information layer, or receives the methods and attributes of an operated or executed object from the information layer and converts them into requests. The information layer encapsulates the object methods and attributes abstracted by the reader-writer layer into a request message, or parses the methods and attributes of the operated object out of a request message received from the transmission layer; in addition, the host computer processes request messages and actively responds to them, and the reader-writer processes received request messages and actively sends responses. The transmission layer sends the encapsulated message from the information layer across the network and receives messages sent from the other end, passing them to the information layer. The invention establishes a unified, general communication protocol for reader-writer equipment, reduces the difficulty of integrating heterogeneous systems and of developing upper-layer applications, and further improves the adaptability of radio-frequency identification.

Owner:BEIHANG UNIV

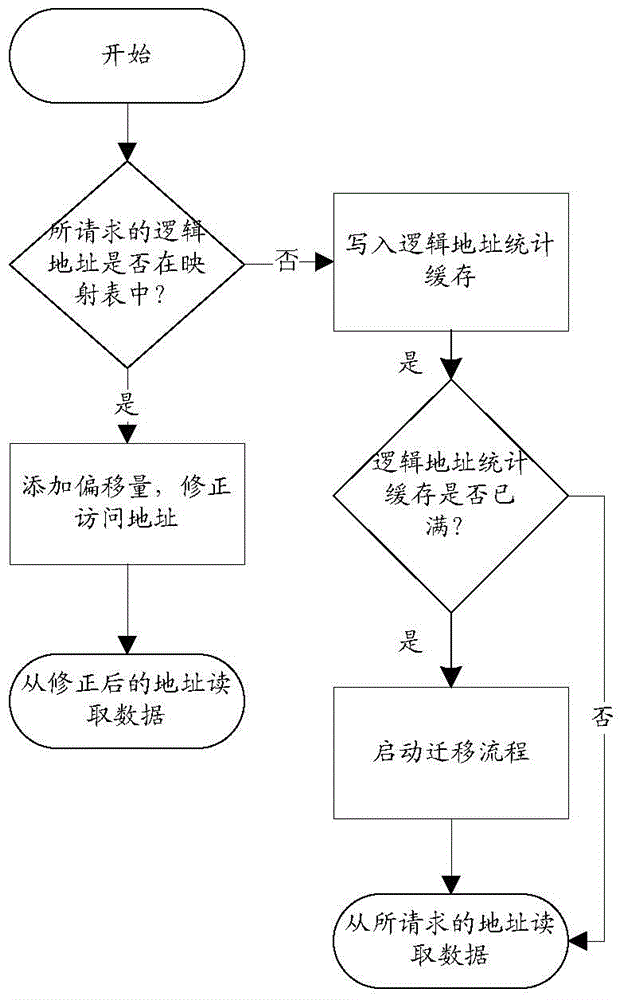

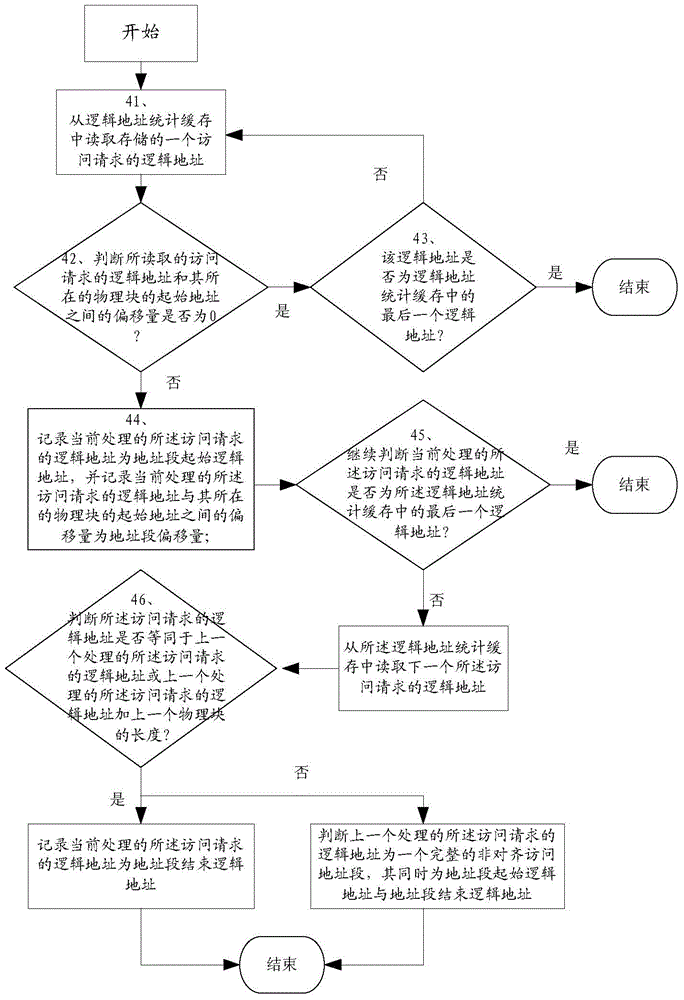

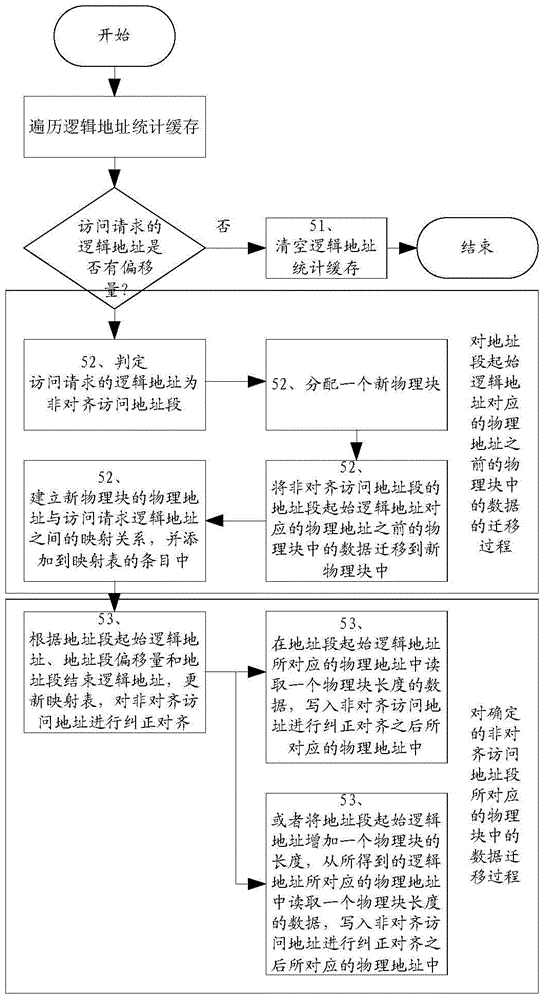

Automatic correcting method and device for aligning storage device addresses

Active | CN104461925A | Solve the need to reconfigure the upper layer application | Resolving needs to be done manually | Memory addressing/allocation/relocation | Uplevel | Usability

The invention discloses an automatic correcting method and device for aligning storage device addresses. The method includes the steps that the logic address of an access request is detected to be an unaligned access address through a mapping table, correction alignment operation is performed on the unaligned access address according to offset recorded in the mapping table, and if the logic address of the access request is not detected to be the unaligned access address, the logic address is saved in a logic address statistic cache. When the number of items in the cache reaches the upper limit, the migration process is executed; unaligned access address fields not detected by the mapping table are judged, the mapping relation between the logic address and a physical address is established and added in the items of the mapping table, and the unaligned access address fields are aligned; data in the physical address corresponding to the logic address of the aligned access request are read. Due to the scheme, the defects that in an existing alignment scheme, an upper application needs to be reconfigured, execution is performed manually, and usability is poor are overcome.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

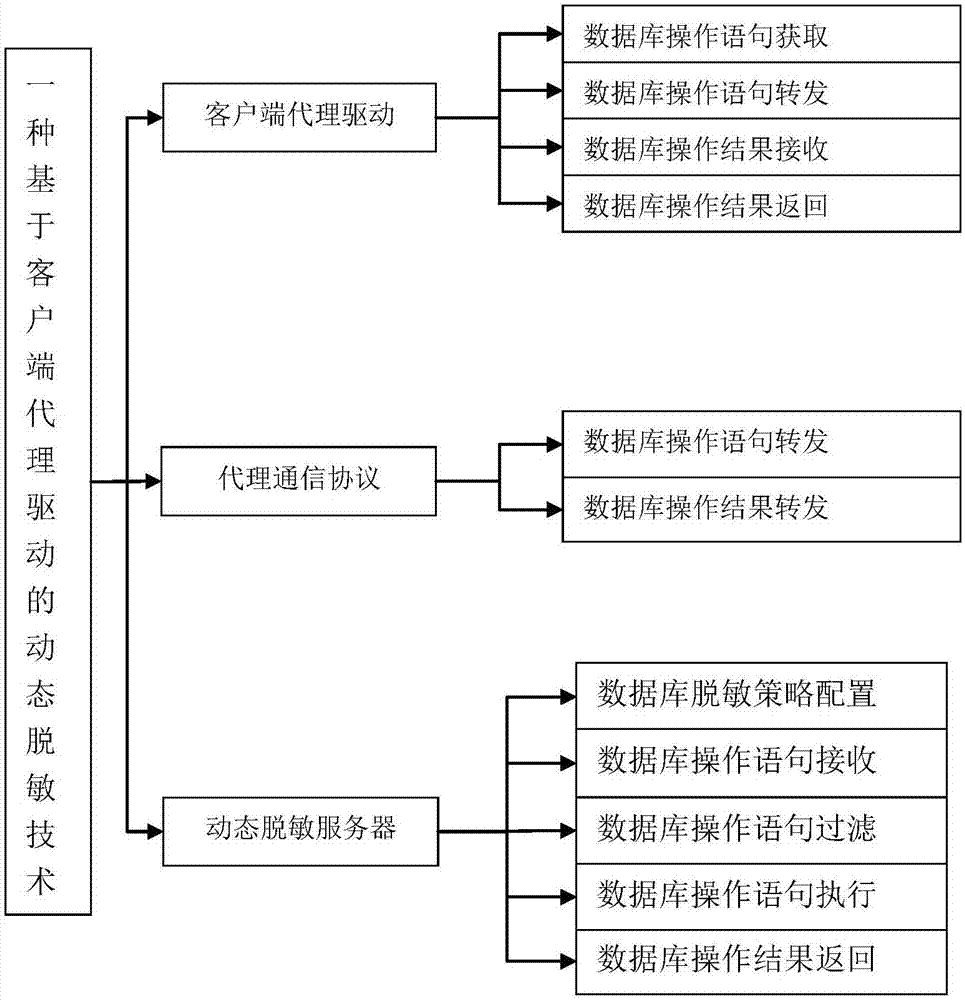

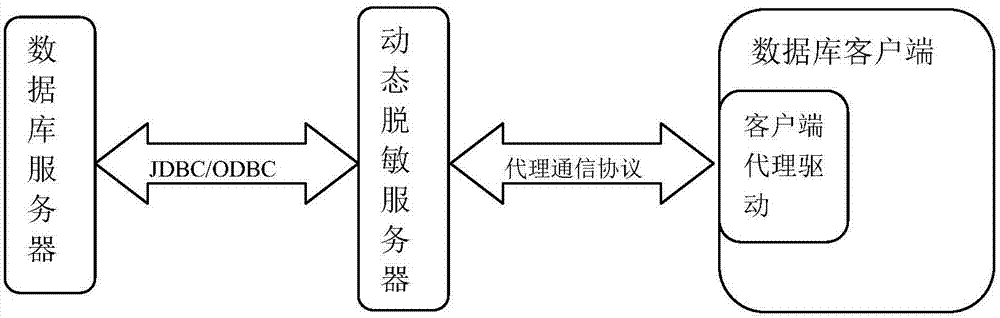

Dynamic database desensitization method and equipment

Inactive | CN107194276A | Reduce risk of leakage | Elevate the authority control level | Digital data protection | Special data processing applications | Uplevel | Data access

The purpose of the invention is to provide a dynamic database desensitization method and equipment. The method comprises the steps: a client proxy driver acquires a database operation statement of an upper-layer application through a standard client driver interface; and the client proxy driver returns a desensitized execution result to the upper-layer application through the standard client driver interface. The client proxy driver can be compatible to a mainstream database manufacturer and a big data analysis platform easily; in addition, the overall filtering and permission control can be carried out on the operation statement and / or the returned result, thus the permission control grade of database access is improved, the safety protection grade of data access is enhanced and the data leakage risk is reduced.

Owner:SUNINFO INFORMATION TECH

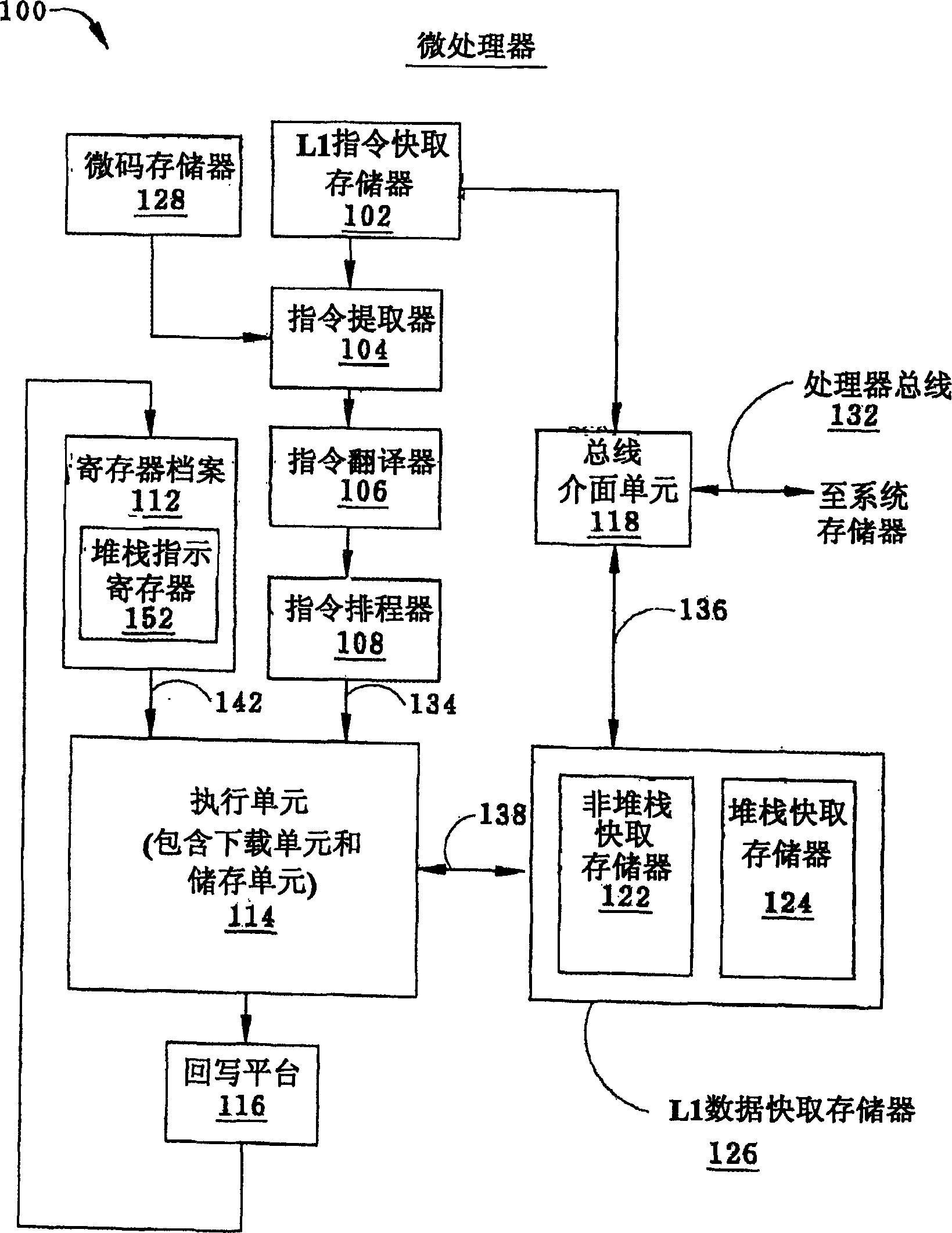

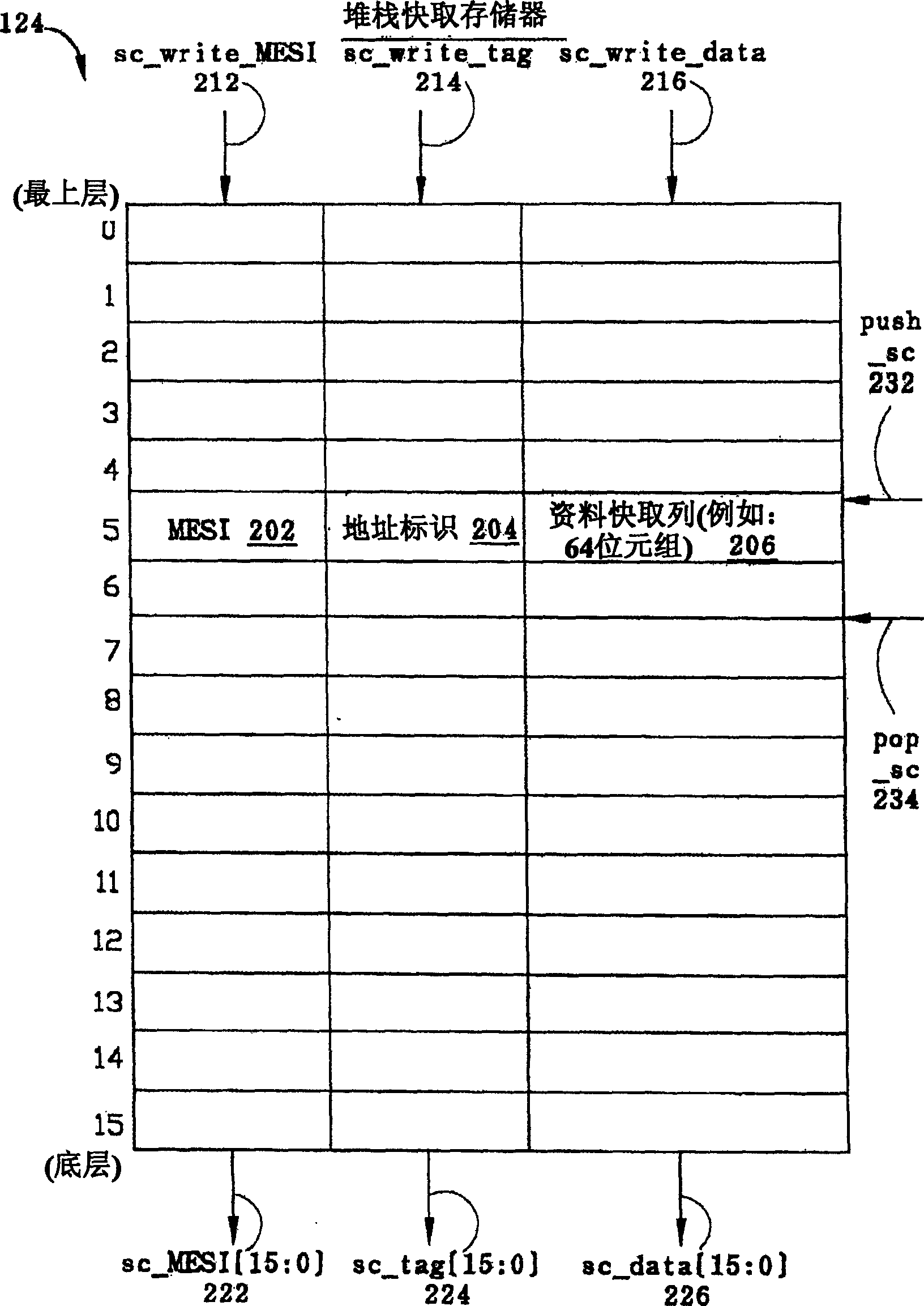

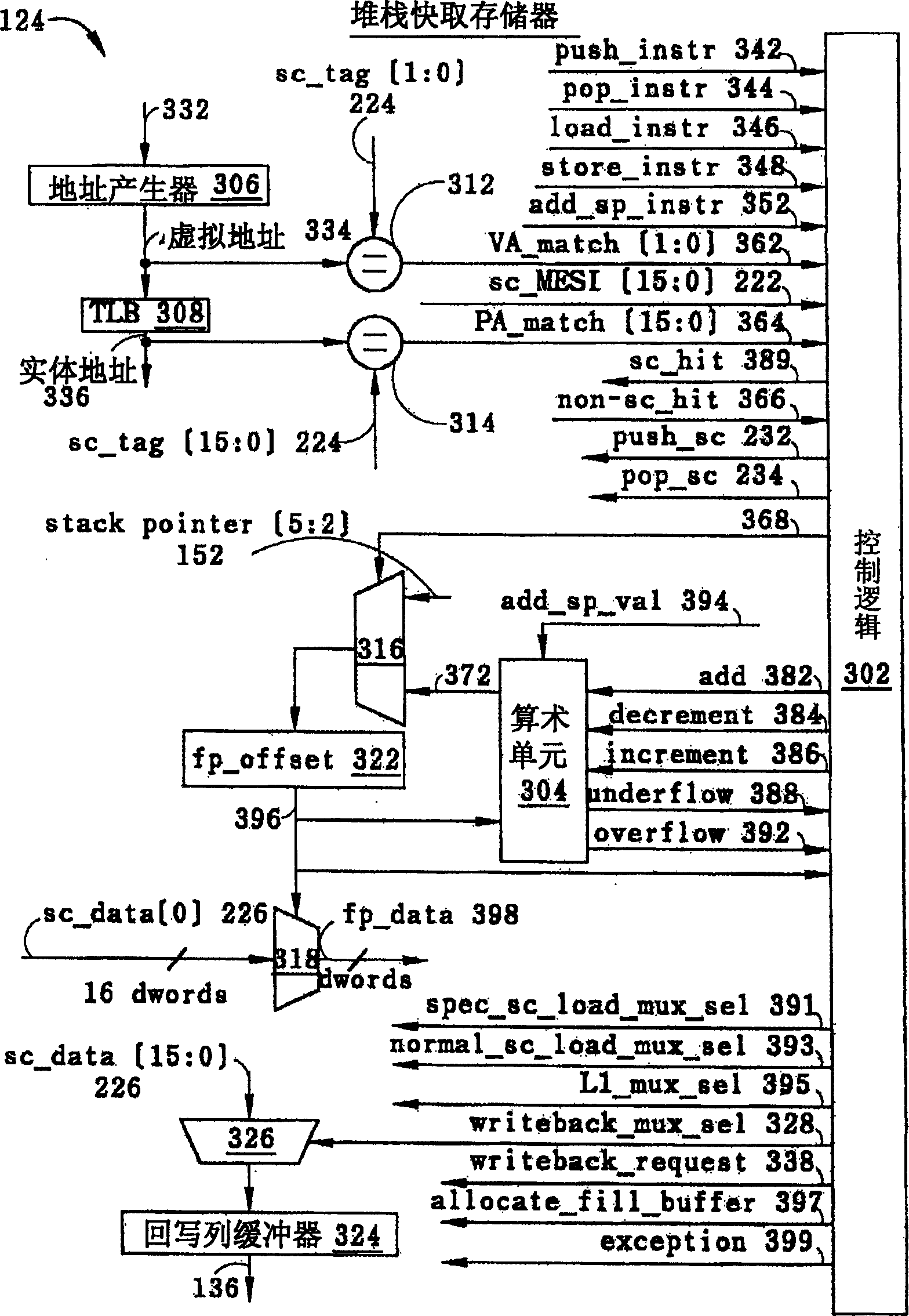

Variable latency stack cache and method for providing data

Active | CN1632877A | Memory addressing/allocation/relocation | Concurrent instruction execution | Uplevel | Low speed

The present invention discloses a variable latency stack cache memory and a method for providing data. The cache memory includes a plurality of storage elements and stores stack memory data in a last-in-first-out manner. It distinguishes between requests from fetch instructions and download instructions, and operates by speculating that fetch data will be in the uppermost cache line of the cache memory. It also speculates that stack data requested by a download instruction will be in the uppermost cache line or in one of the several cache lines nearest the top. Therefore, when the source virtual address of a download instruction hits in the uppermost cache line, the cache memory provides the download data faster than when the data is located in a lower cache line, when a physical address comparison is required, or when the data must be provided from the microprocessor's non-stack cache memory.

Owner:IP FIRST

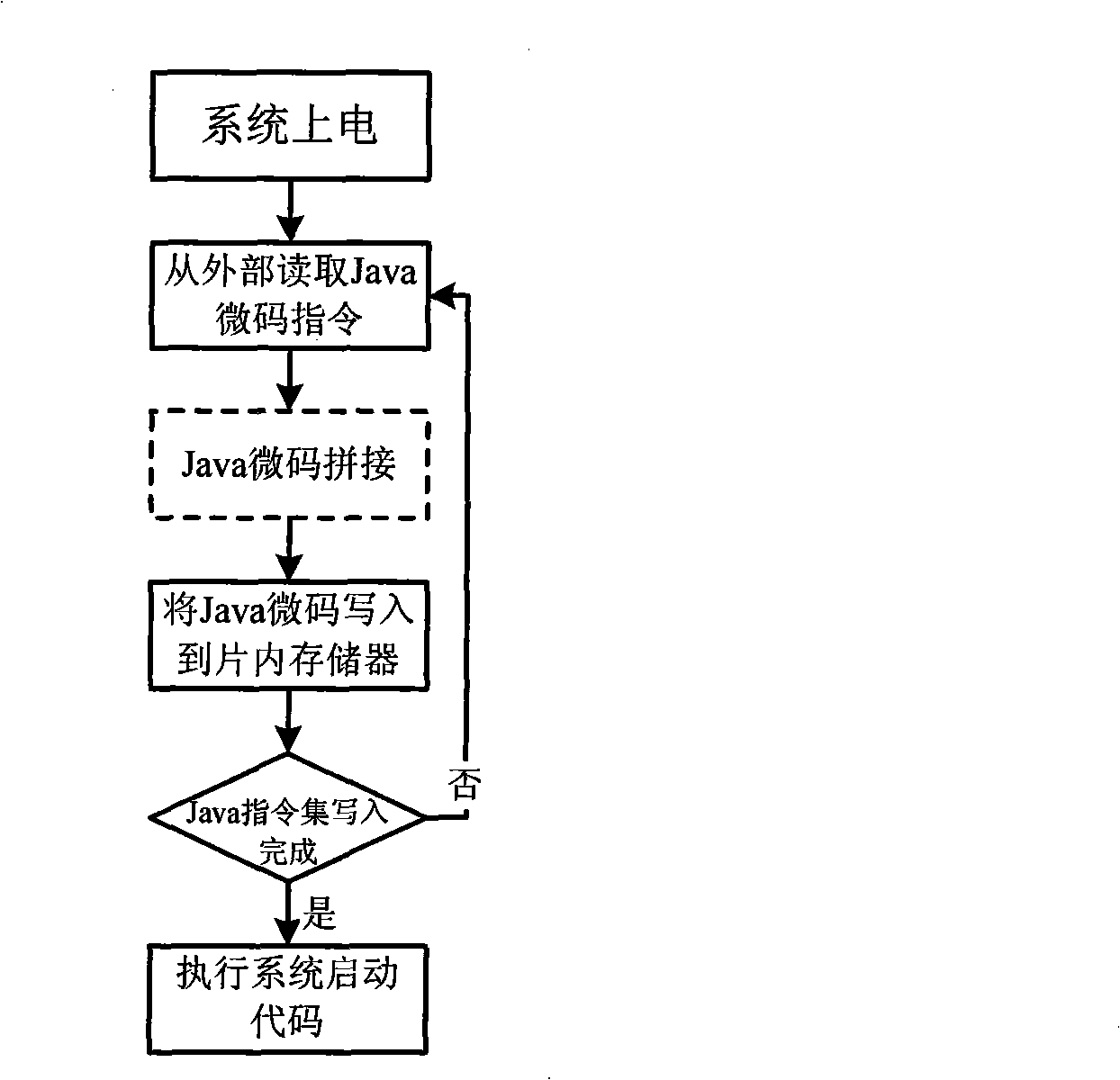

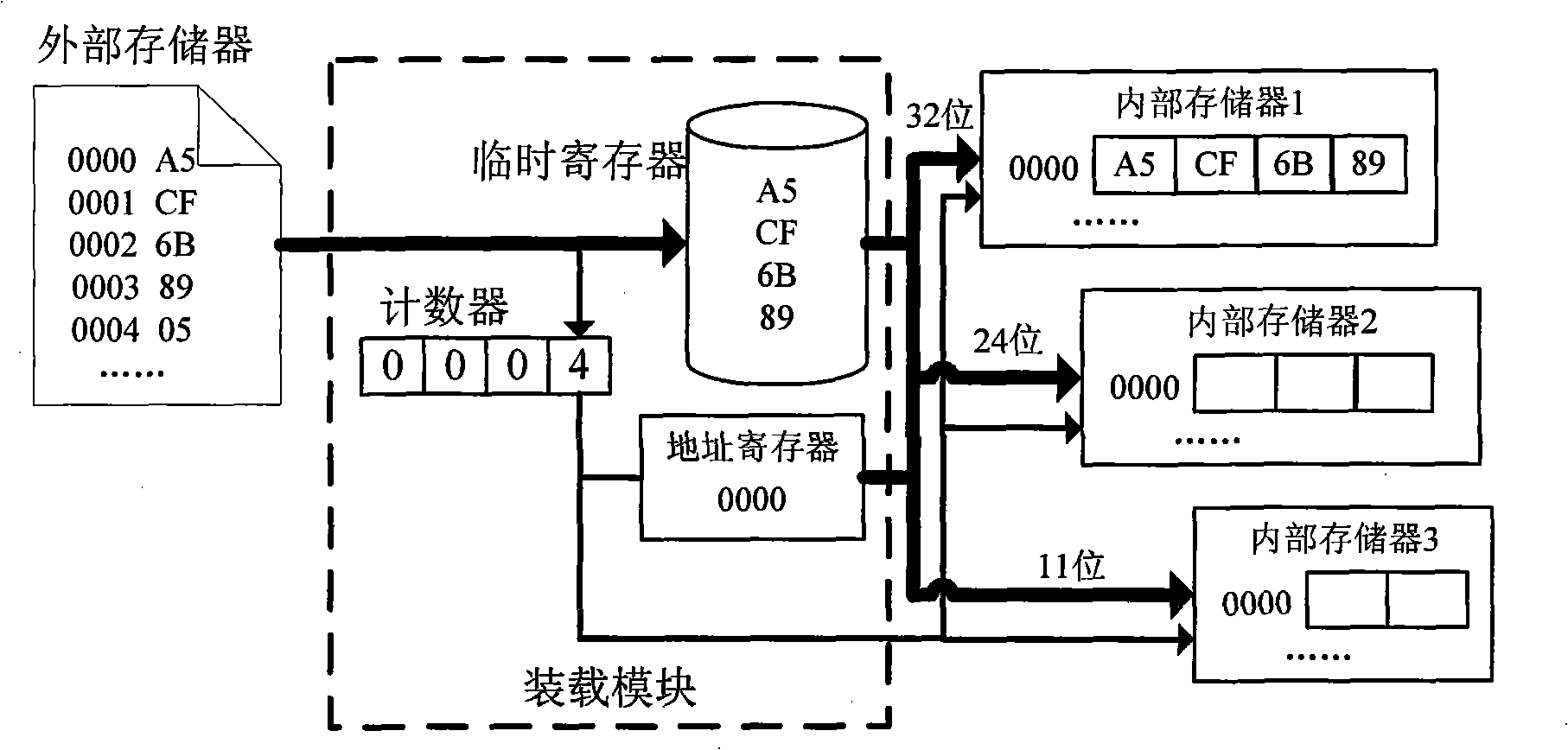

Method for dynamically loading embedded type Java processor microcode instruction set

Inactive | CN101349973A | Guaranteed versatility | Multi-space storage | Program loading/initiating | Internal memory | Embedded Java

The invention discloses a technique for dynamically loading a Java processor microcode instruction set, which belongs to the field of embedded processor design. The invention adds an instruction set loading module to an existing processor. The loading module first reads microcode instructions from an external memory and holds them in its temporary register; it then uses a microcode splicing technique to resolve the difference in bit width between the external memory and the internal memory; finally, it writes the spliced microcode instructions into the internal memory. When all internal memories have finished initialization, that is, after the instruction set has been loaded into the chip, the system begins executing the startup code and enters its normal working state. The invention not only enables Java programs from other platforms to run correctly on the platform, guaranteeing the universality of upper-layer applications, but also allows the local instruction set to be updated and optimized for different embedded application environments, so that the startup code can be modified in real time and the efficiency of the processor improved.

Owner:SUN YAT SEN UNIV

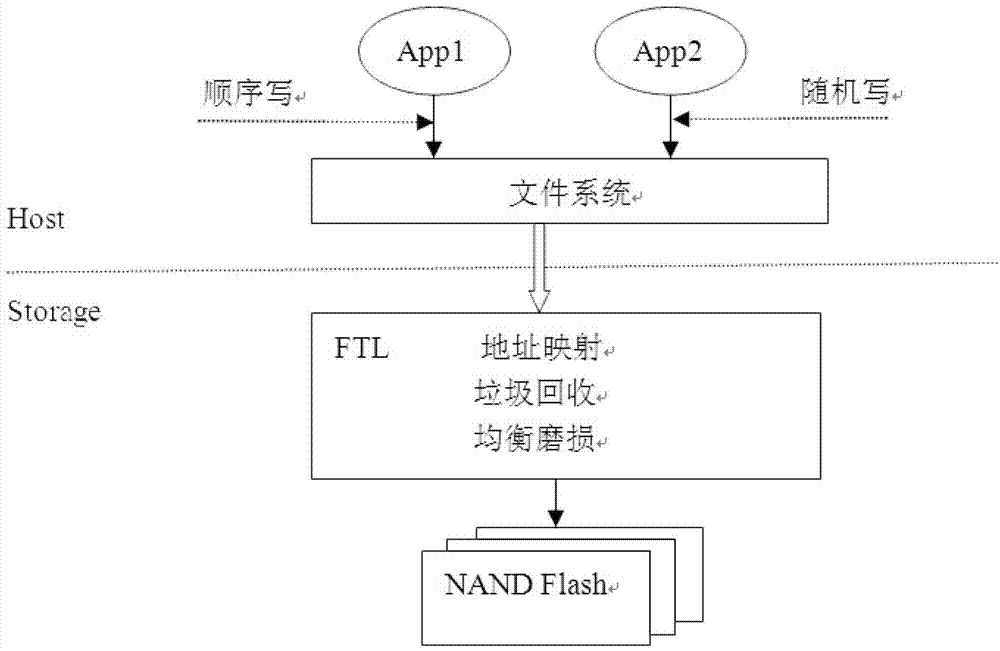

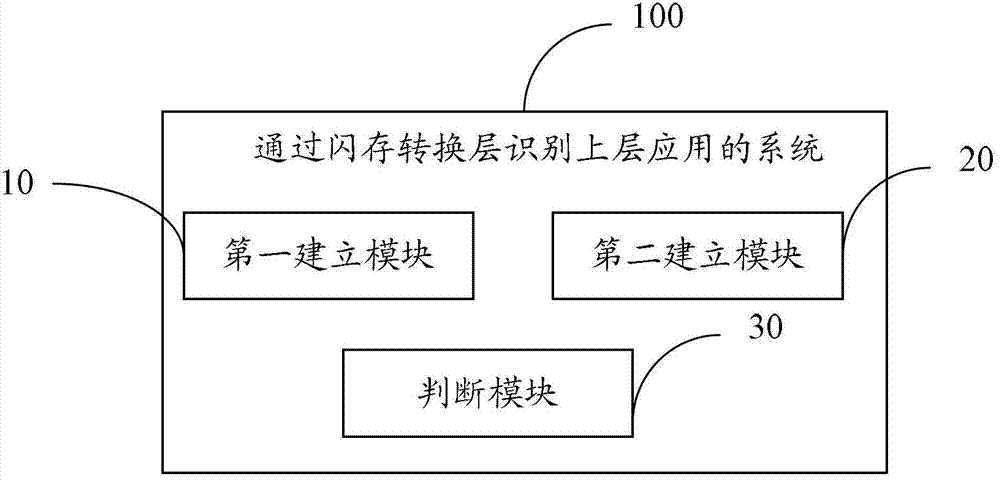

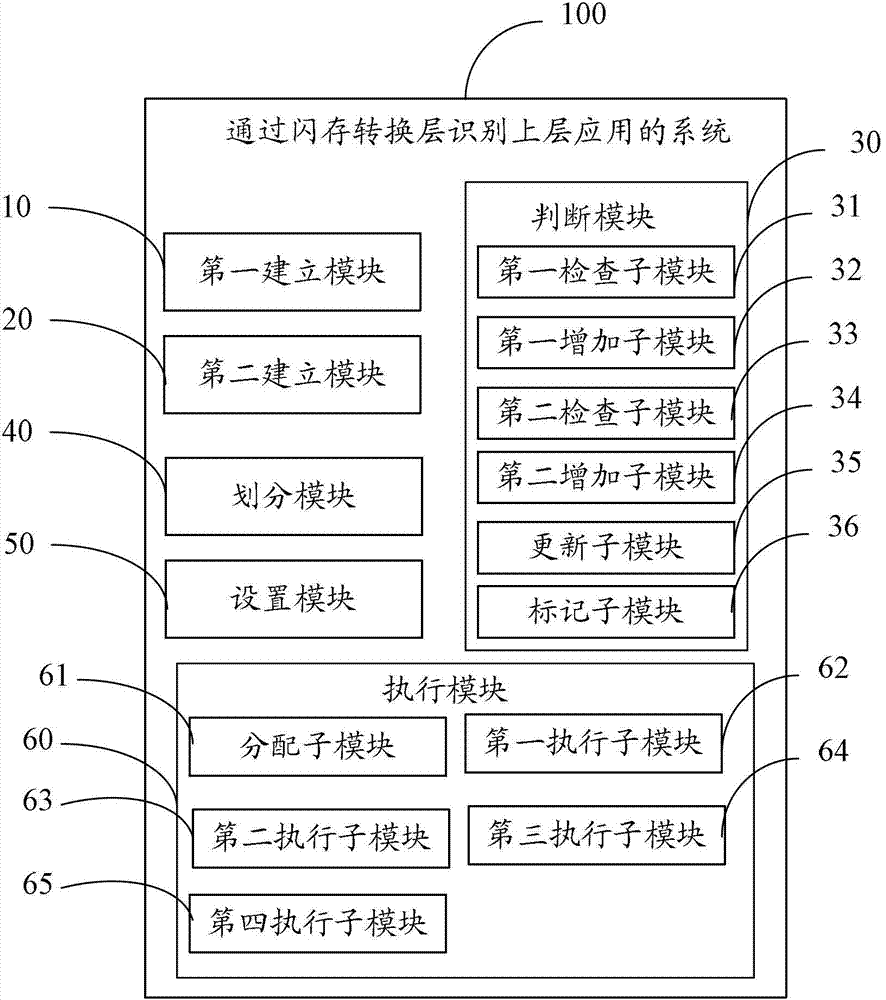

Method and system for recognizing upper-layer application by flash memory transfer layer

Active | CN103034586A | Increase the function of identifying upper-layer applications | Improve recycling efficiency | Memory addressing/allocation/relocation | Uplevel | Computer engineering

The invention is suitable for the technical field of memory and provides a method and a system for recognizing an upper-layer application by a flash memory transfer layer. The method comprises the following steps of: establishing a sequential write screening list on the flash memory transfer layer, and recording the logic block addressing of the next write instruction of the upper-layer application by the sequential write screening list; establishing a sequential write candidate list on the flash memory transfer layer, and recording a write instruction issued by the upper-layer application by the sequential write candidate list; and when the write instruction is issued by the upper-layer application, judging the type of the write instruction issued by the upper-layer application according to the records of the sequential write screening list and the sequential write candidate list. Therefore, the type of the write instruction issued by the upper-layer application can be recognized by the flash memory transfer layer.

Owner:RAMAXEL TECH SHENZHEN
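One possible reading of the two-list scheme in the abstract above is sketched below: a candidate list remembers where a not-yet-confirmed write would continue, and a screening list remembers where a confirmed sequential stream should write next. The `WriteClassifier` class, the use of unbounded sets, and the "sequential"/"random" labels are assumptions for illustration; the patent may organize and age these lists differently.

```python
class WriteClassifier:
    def __init__(self):
        self.screening = set()   # next expected LBAs of confirmed sequential streams
        self.candidates = set()  # next expected LBAs after writes not yet confirmed

    def classify(self, lba, length):
        """Classify a write of `length` blocks starting at logical block address `lba`."""
        next_lba = lba + length
        if lba in self.screening:
            # Continues a known sequential stream: advance its expected address.
            self.screening.discard(lba)
            self.screening.add(next_lba)
            return "sequential"
        if lba in self.candidates:
            # Second contiguous write: promote the stream to the screening list.
            self.candidates.discard(lba)
            self.screening.add(next_lba)
            return "sequential"
        # First sighting: remember where a follow-up write would start.
        self.candidates.add(next_lba)
        return "random"
```

A practical version would bound both lists and evict stale entries, which this sketch omits.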

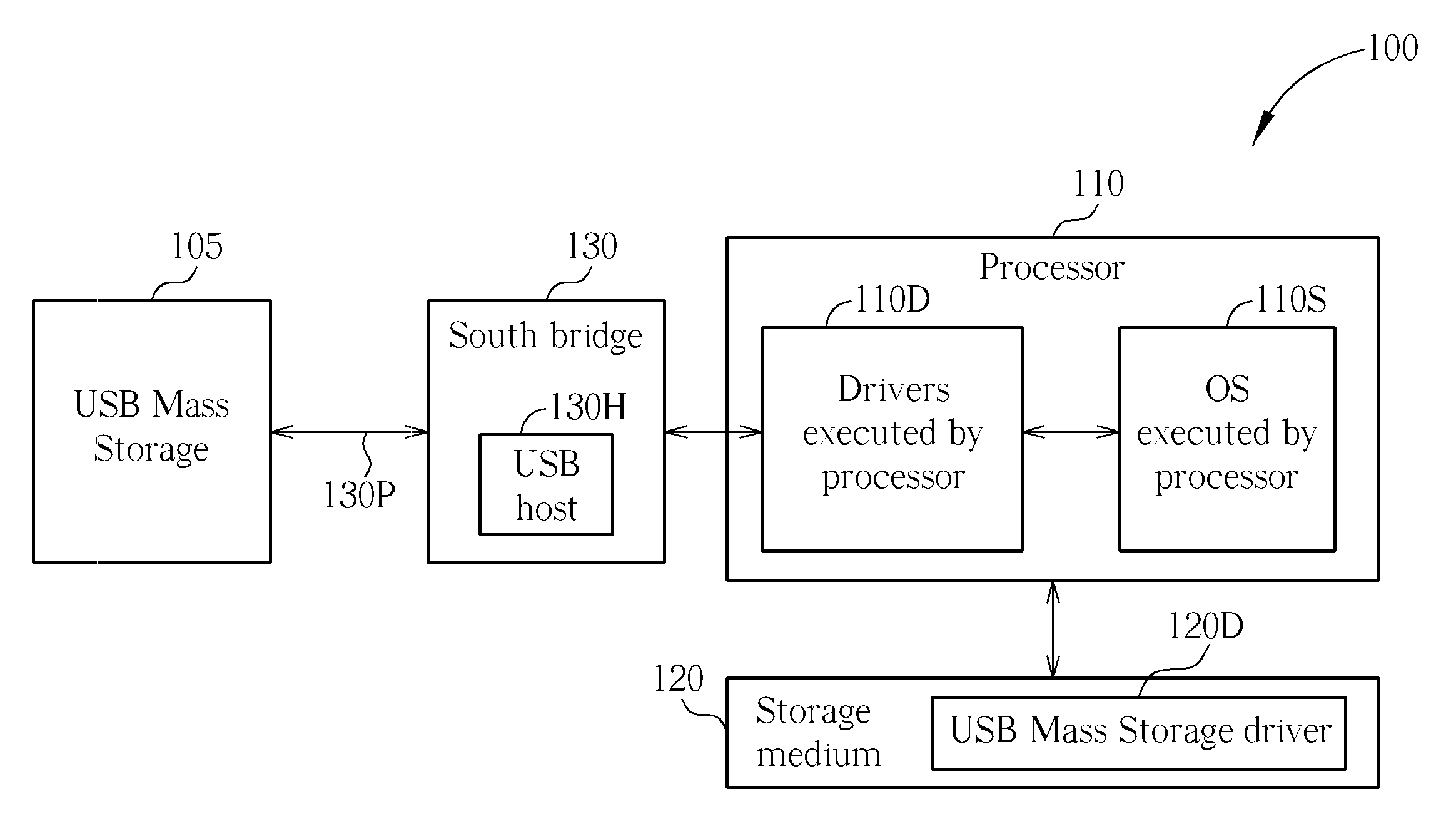

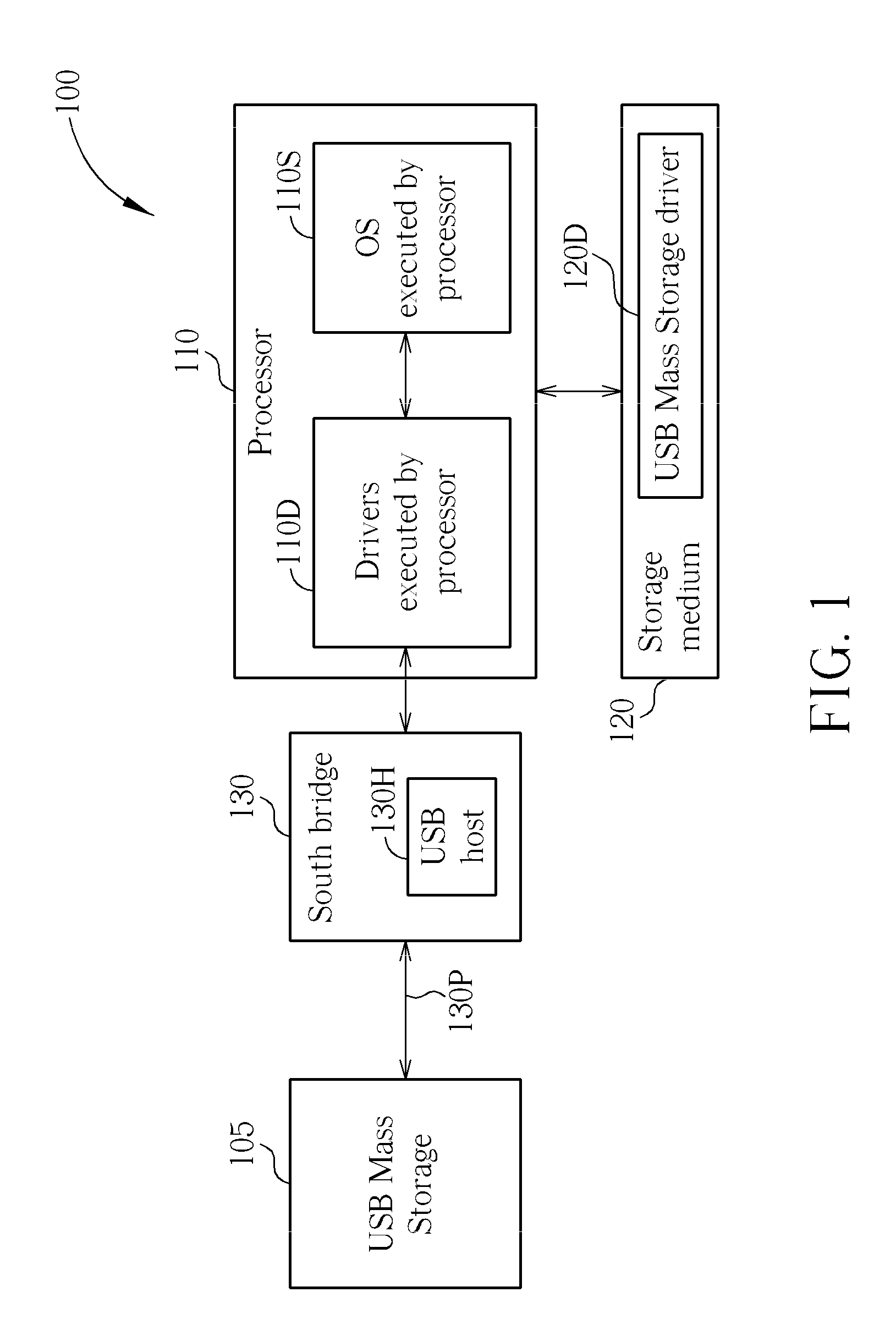

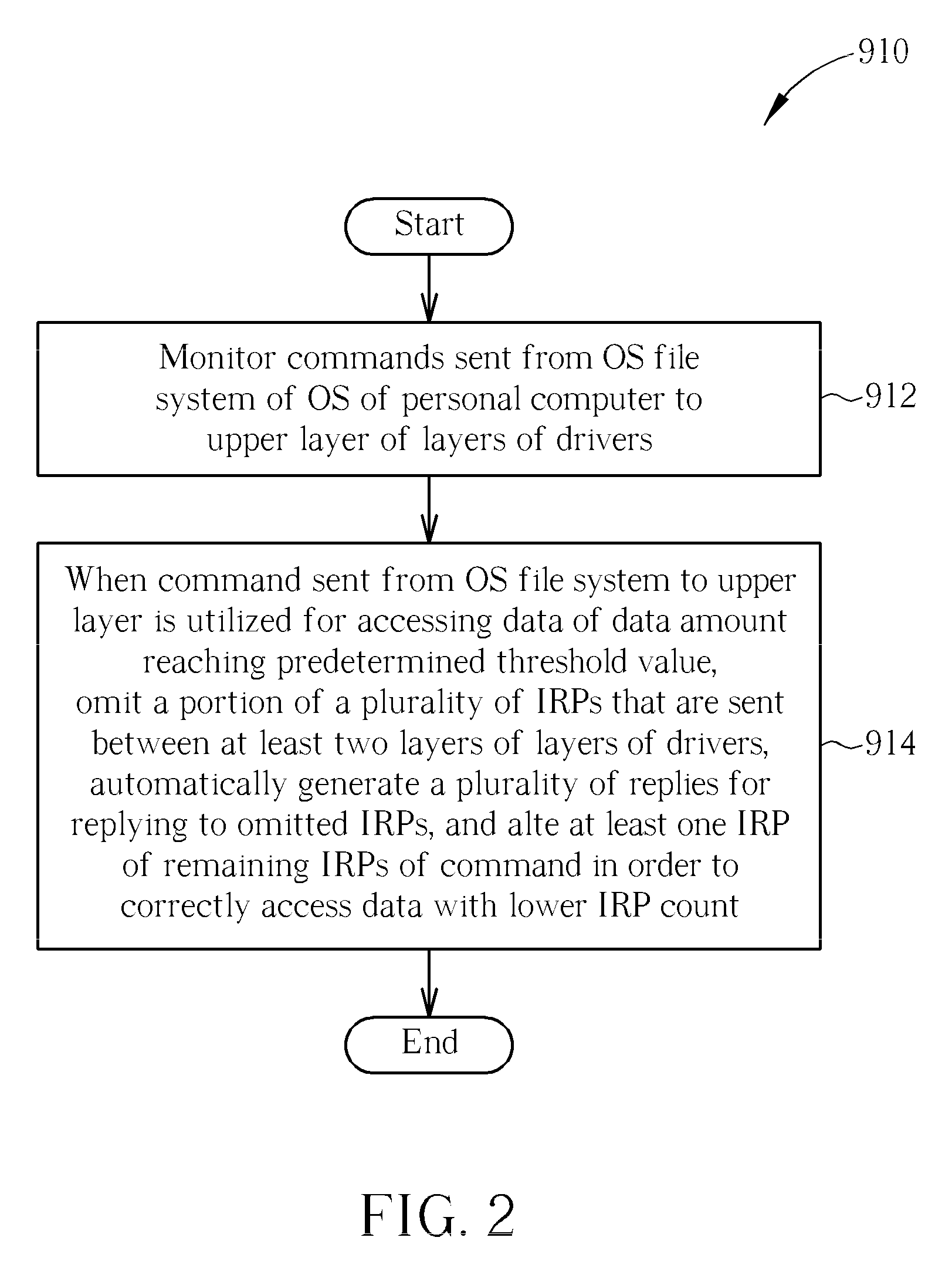

Method for enhancing performance of data access between a personal computer and a USB mass storage, associated personal computer, and storage medium storing an associated USB mass storage driver

Active | US20110060858A1 | Improve data access performance | Accurate access | Input/output processes for data processing | Data conversion | Mass storage | Uplevel

A method for enhancing performance of data access between a personal computer and a USB Mass Storage is provided. The personal computer is equipped with a plurality of layers of drivers regarding USB data access, and a lower layer of the layers of the drivers includes a USB Bus Driver. The method includes: monitoring commands sent from an operating system (OS) file system to an upper layer; and when a command sent from the OS file system to the upper layer is utilized for accessing data of a data amount that is greater than a predetermined threshold value, omitting a portion of a plurality of IRPs, automatically generating a plurality of replies for replying to the omitted IRPs, and altering at least one IRP of remaining IRPs in order to correctly access the data with a lower IRP count, wherein the plurality of IRPs is associated with the command.

Owner:SILICON MOTION INC (TW)

Method and apparatus for managing data imaging in a distributed computer system

A three-tiered data imaging system is used on a distributed computer system comprising hosts connected by a network. The lowest tier comprises management facade software running on each machine that converts a platform-dependent interface written with low-level kernel routines that actually implement the data imaging system to platform-independent method calls. The middle tier is a set of federated Java beans that communicate with each other, with the management facades and with the upper tier of the system. The upper tier of the inventive system comprises presentation programs that can be directly manipulated by management personnel to view and control the system. In one embodiment, the federated Java beans can run on any machine in the system and communicate, via the network. A data imaging management facade runs on selected hosts and at least one data imaging bean also runs on those hosts. The data imaging bean communicates directly with a management GUI or CLI and is controlled by user commands generated by the GUI or CLI. Therefore, a manager can configure the entire data imaging system from a single location.

Owner:ORACLE INT CORP

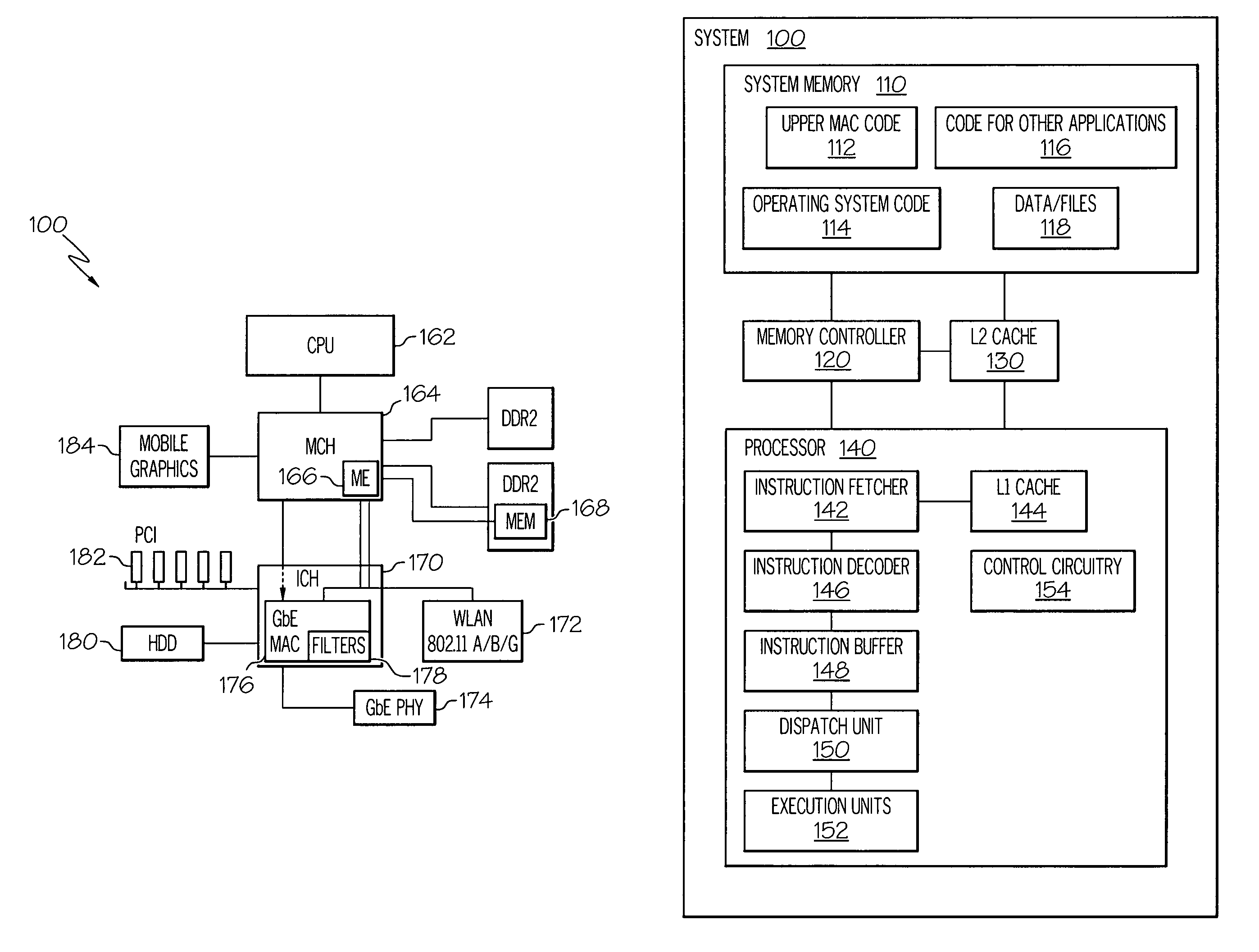

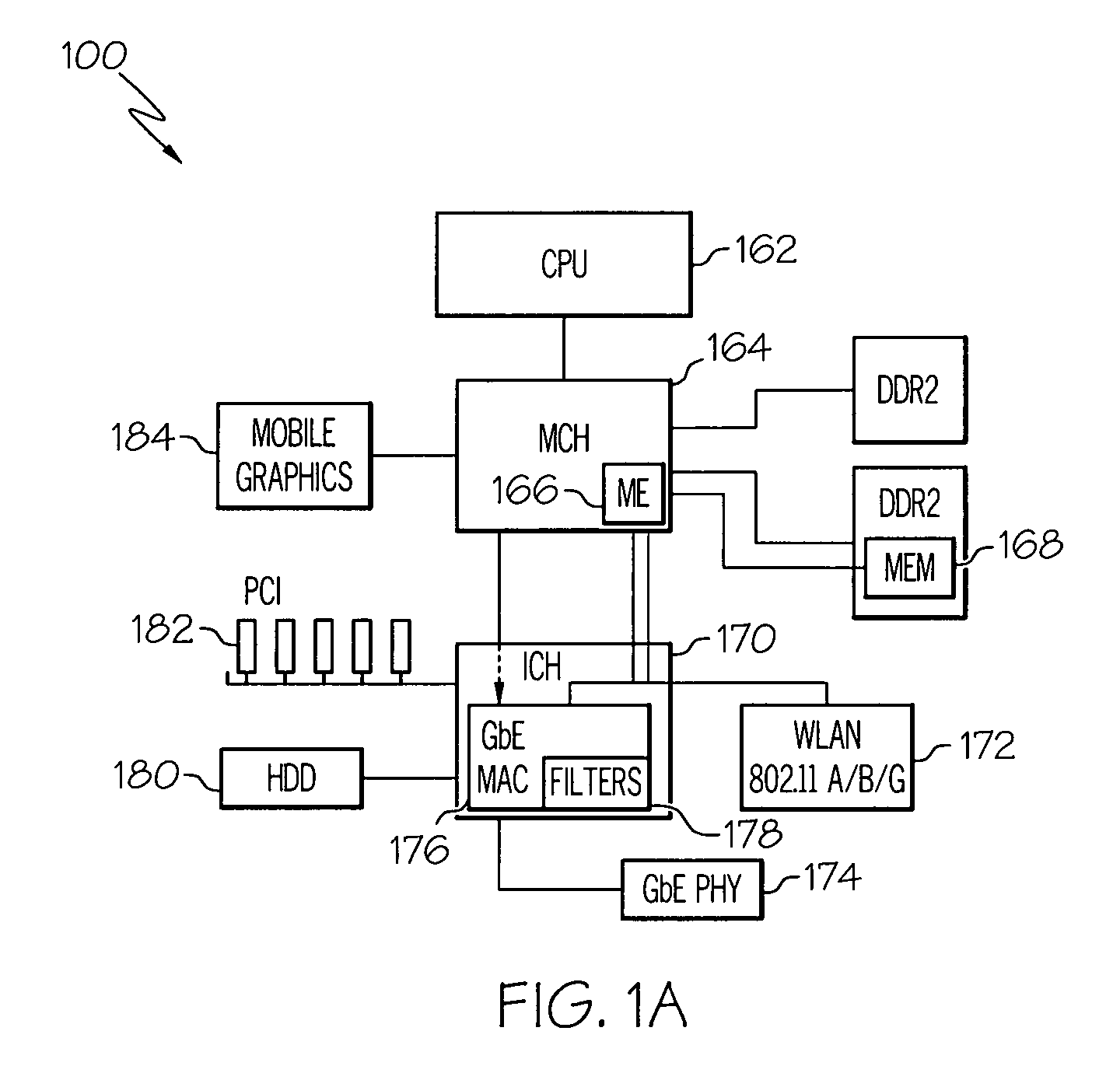

Systems and methods for wake on event in a network

Embodiments include systems and methods for allowing a host CPU to sleep while service presence packets and responses to search requests are sent by an alternate processor. While the CPU is in a low power state, the alternate processor monitors the network for incoming request packets. Also, while the CPU is asleep, the alternate processor periodically may transmit presence packets, announcing the presence of a service available from the host system of the CPU. In one embodiment, the alternate processor is a low power processor. If a search request is received when the CPU is in a low power state, the alternate processor responds to the search request according to whether the PC provides that service. If a service request is received, then the ME wakes the CPU of the PC to provide the requested service. In the wireless case, when the CPU is asleep, portions of the wireless upper MAC are implemented by the ME. When the CPU is awake the wireless upper MAC is implemented in the CPU. Thus, embodiments enable the PC to appear available to wireless devices when the CPU is asleep.

Owner:INTEL CORP

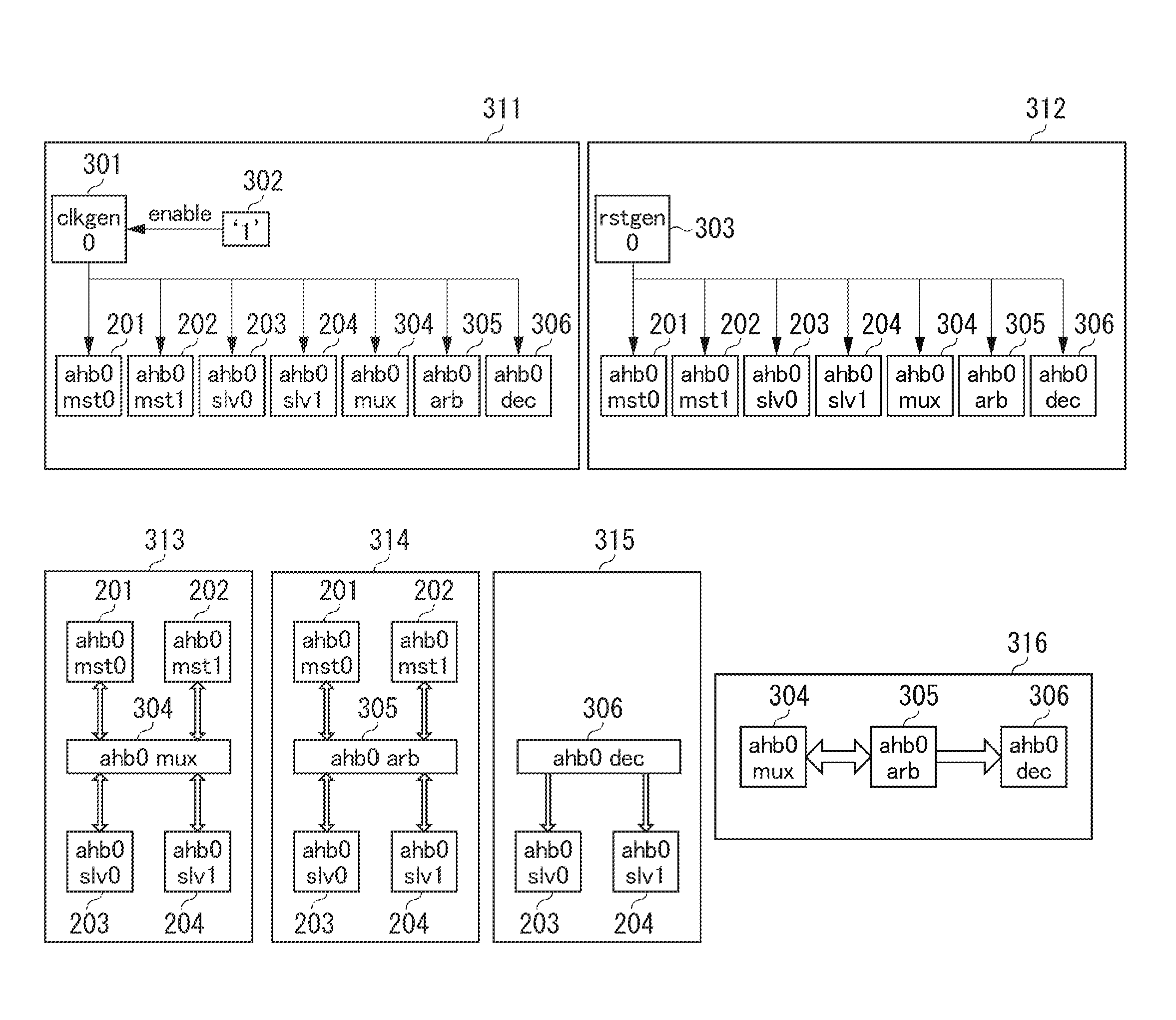

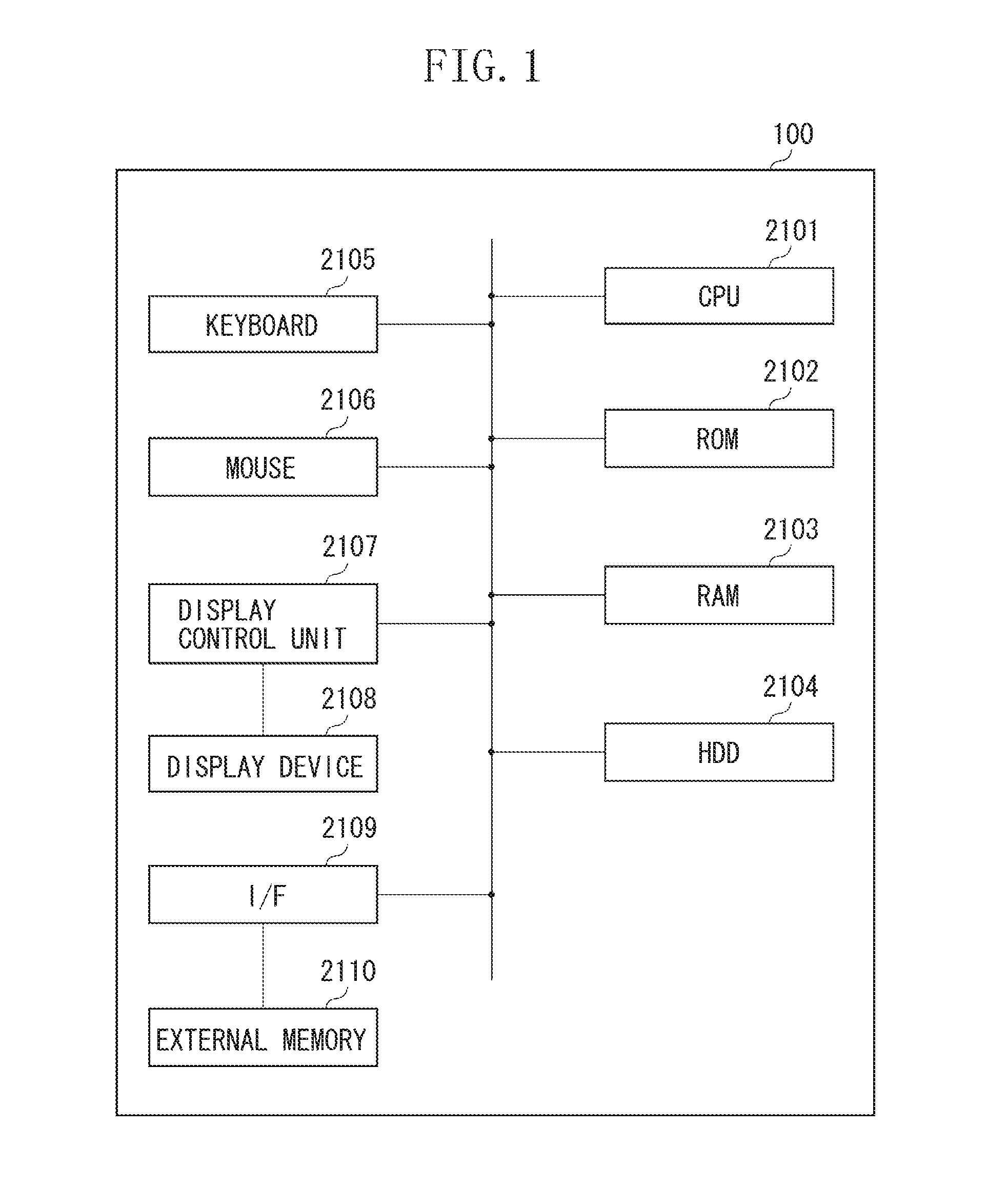

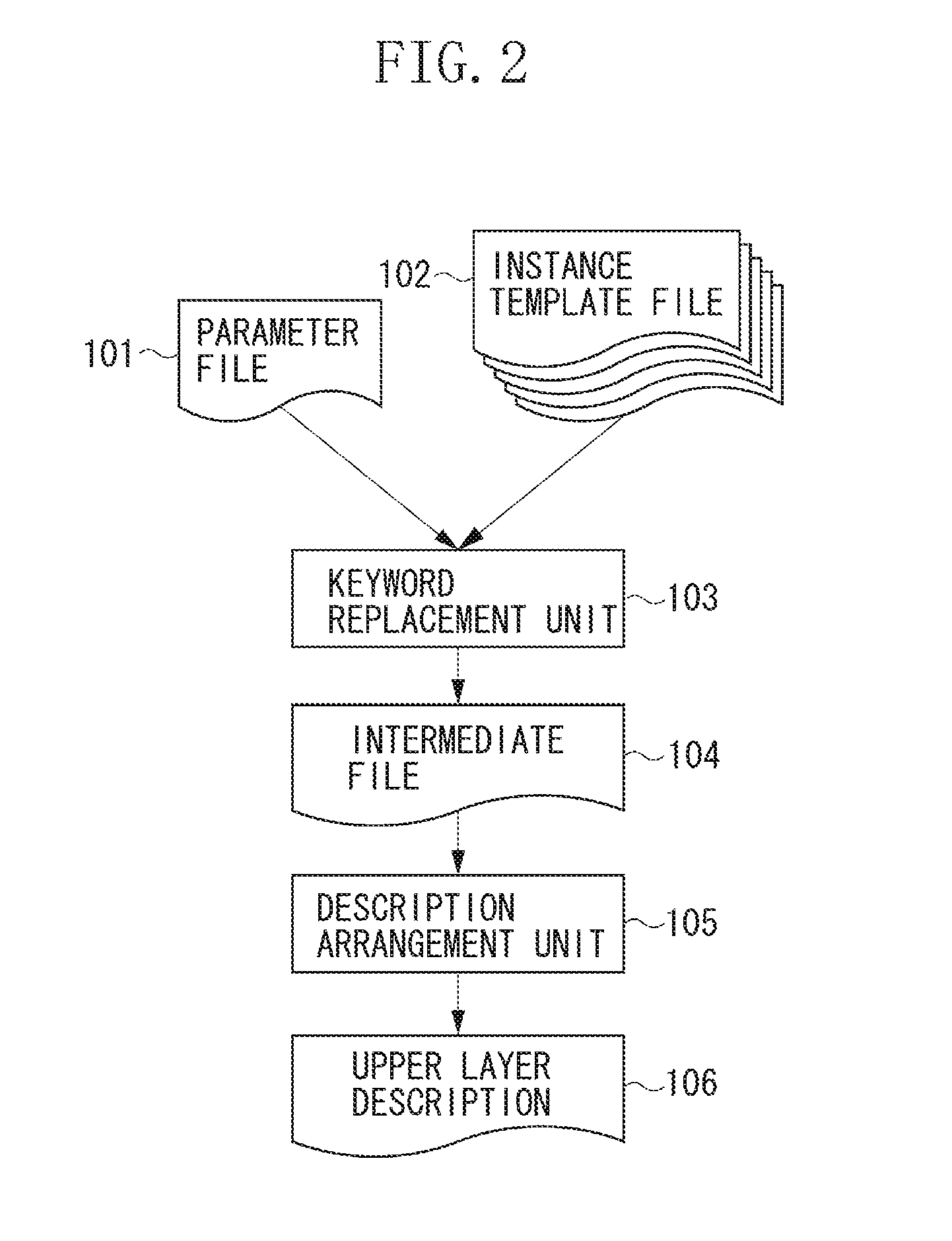

Upper layer description generator, upper layer description generation method, and computer readable storage medium

Changing an upper layer configuration involves a heavy workload. The present invention addresses this by including a generation unit configured to generate an instance description by keyword replacement, based on one or more instance template files in which the instance description for each module is described by keywords and on a parameter file representing the configuration of the upper layer, and an arrangement unit configured to arrange the instance descriptions generated by the generation unit as a description conforming to the grammar of each language and to output an upper layer description.
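The two units described above can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration: the `@KEYWORD@` marker syntax, the template text, the parameter rows, and the Verilog-like output grammar are not taken from the patent.

```python
# Hypothetical instance template: keywords are written as @NAME@ (an assumed syntax).
INSTANCE_TEMPLATE = "@MODULE@ @INSTANCE@ (.clk(@CLOCK@), .rst(@RESET@));"

# Hypothetical parameter data describing the upper layer configuration.
PARAMETERS = [
    {"MODULE": "uart", "INSTANCE": "u_uart0", "CLOCK": "clk_sys", "RESET": "rst_n"},
    {"MODULE": "timer", "INSTANCE": "u_timer0", "CLOCK": "clk_sys", "RESET": "rst_n"},
]


def generate_instance(template, params):
    """Generation unit: replace each keyword in the template with its parameter value."""
    text = template
    for key, value in params.items():
        text = text.replace(f"@{key}@", value)
    return text


def arrange(top_name, instances):
    """Arrangement unit: wrap the instances in an assumed Verilog-like module grammar."""
    body = "\n".join("  " + line for line in instances)
    return f"module {top_name};\n{body}\nendmodule"


def generate_upper_layer(top_name, template, parameter_rows):
    """Produce the upper layer description from one template and the parameter rows."""
    return arrange(top_name, [generate_instance(template, row) for row in parameter_rows])
```

Changing the configuration then means editing only the parameter rows; the templates and the arrangement step stay untouched.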

Owner:CANON KK

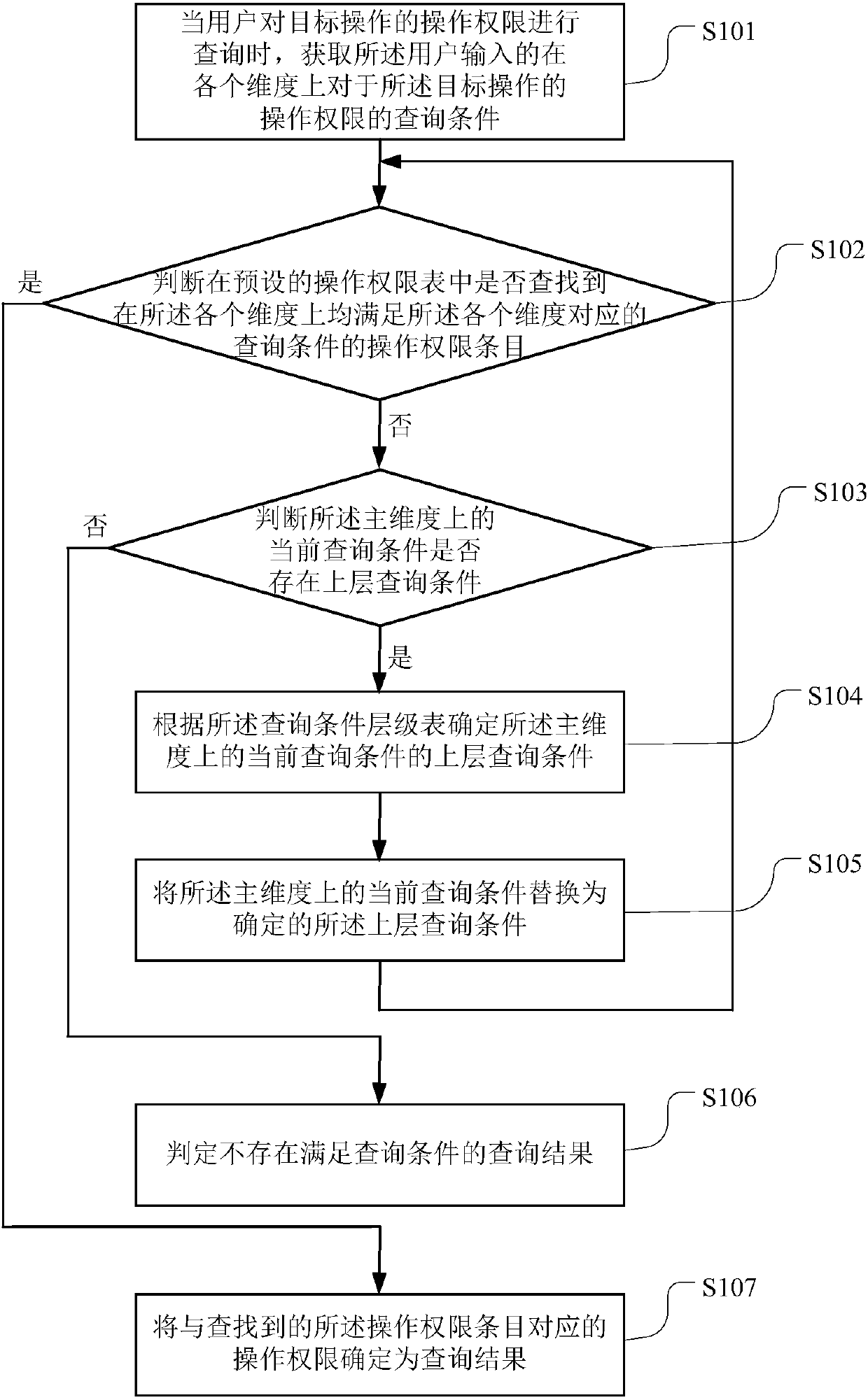

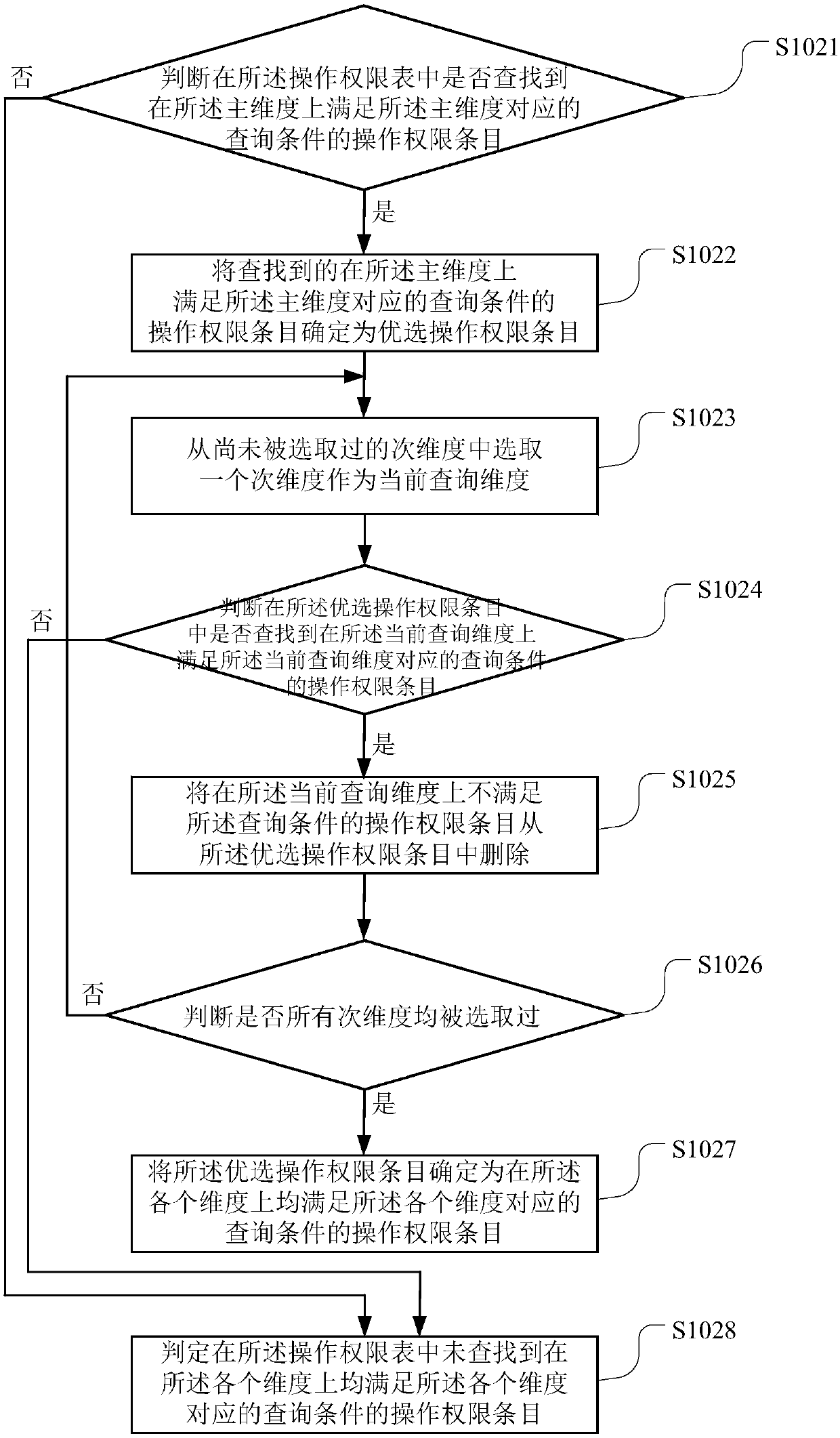

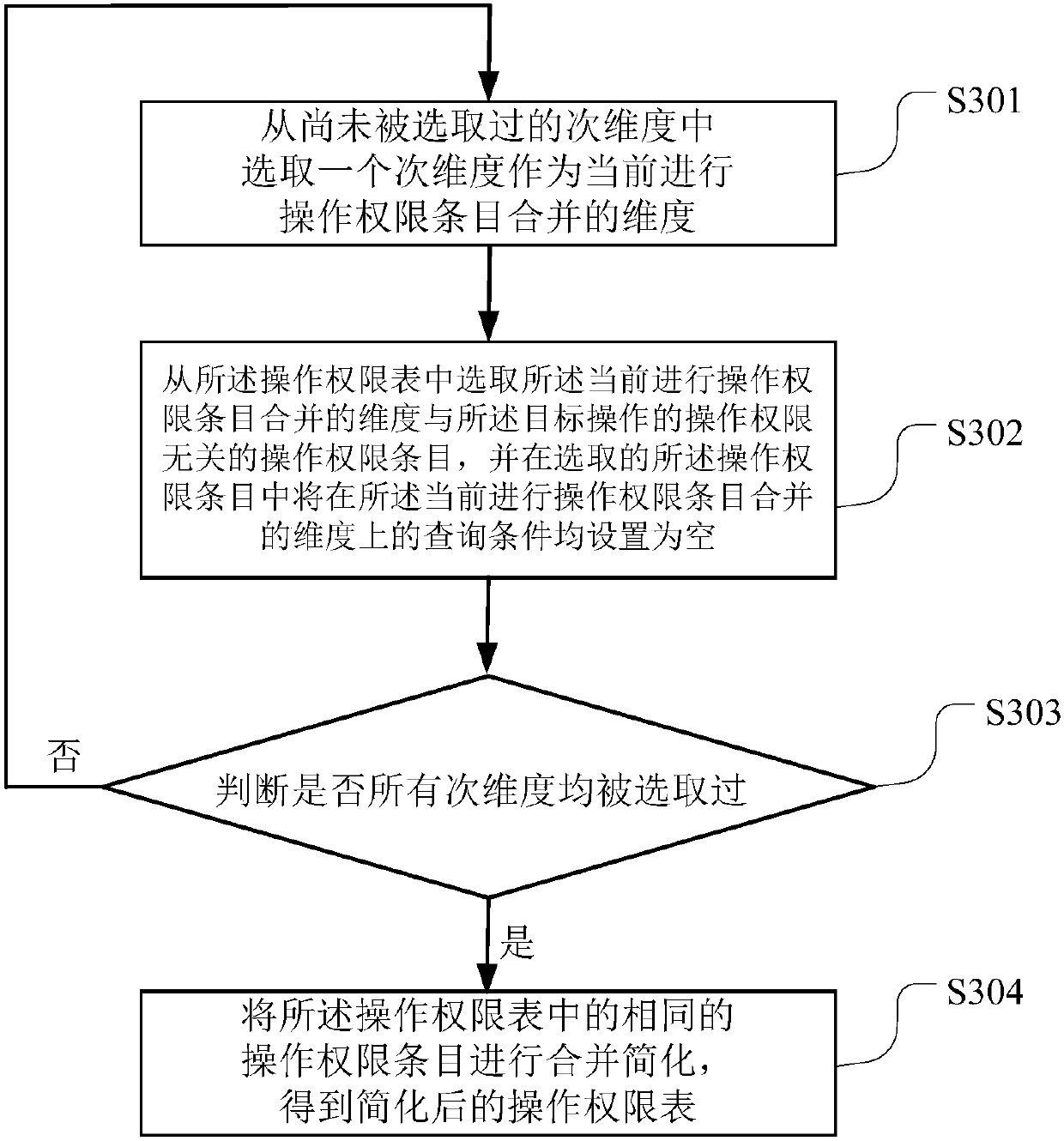

Operating privilege inquiry method and terminal equipment

Active | CN107784091A | Inquiry is convenient and orderly | Easy to find | Special data processing applications | Uplevel | User input

The invention belongs to the technical field of computers and particularly relates to an operating privilege inquiry method and terminal equipment. The operating privileges of a target operation are divided according to two or more preset dimensions, and the operating privileges of the target operation on each dimension are recorded in a preset operating privilege list. When a user inquires about the operating privileges of the target operation, the inquiry conditions input by the user for each dimension are first acquired; operating privilege items that meet the inquiry condition on each dimension are then looked up in the operating privilege list. If no operating privilege items are found, the current inquiry condition on the main dimension is replaced with an upper-layer inquiry condition and the look-up process is executed again. By combining multiple dimensions with an upward-backtracking inquiry mode based on the main dimension, the fineness of inquiry results is improved, and the method can be applied to complex multi-dimensional service scenarios.
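The upward-backtracking look-up can be sketched as follows. The hierarchy, the two dimensions, and the privilege list contents are hypothetical examples; the abstract does not specify how the list or the upper-layer relation is stored.

```python
# Hypothetical hierarchy on the main dimension: each condition maps to its
# upper-layer condition, and None marks the top of the hierarchy.
PARENT = {"team-a": "dept-1", "dept-1": "company", "company": None}

# Hypothetical privilege list keyed by (main dimension, second dimension).
PRIVILEGES = {
    ("dept-1", "claims"): ["approve"],
    ("company", "claims"): ["view"],
    ("company", "billing"): ["view"],
}


def query_privileges(main_condition, other_condition):
    """Look up privilege items, backtracking upward on the main dimension on a miss.

    Returns the main-dimension condition that matched and its privilege items,
    or (None, []) if no level of the hierarchy has a matching entry.
    """
    current = main_condition
    while current is not None:
        items = PRIVILEGES.get((current, other_condition))
        if items:
            return current, items
        # Nothing found: replace the condition with its upper-layer condition.
        current = PARENT.get(current)
    return None, []
```

The most specific matching level wins, so a department-level entry shadows a company-level one for the same second-dimension value.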

Owner:CHINA PING AN PROPERTY INSURANCE CO LTD

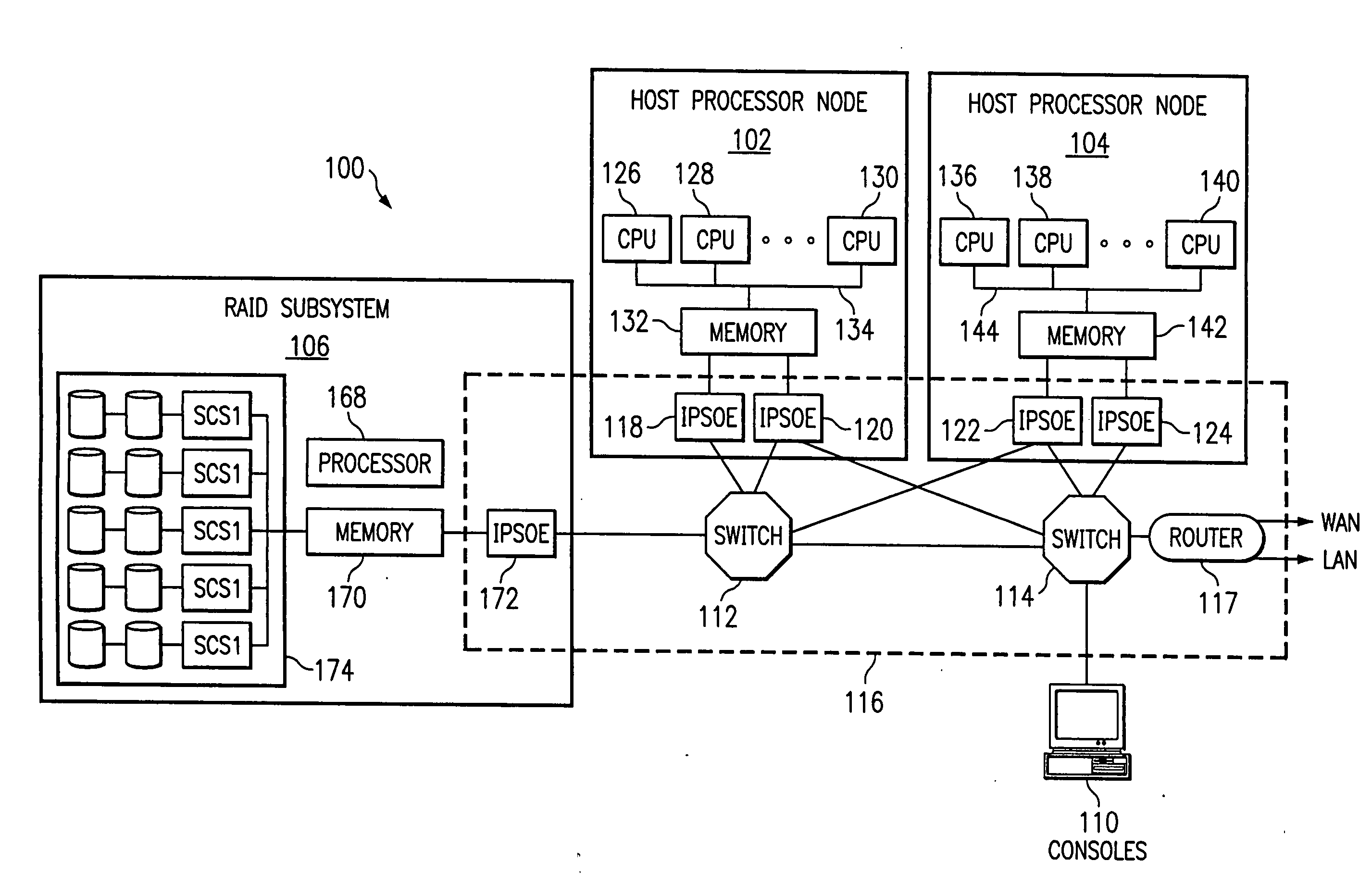

Split socket send queue apparatus and method with efficient queue flow control, retransmission and sack support mechanisms

Inactive | US20060212563A1 | Avoid buffering | Reduce processing overhead | Digital computer details | Data switching networks | Uplevel | Computer science

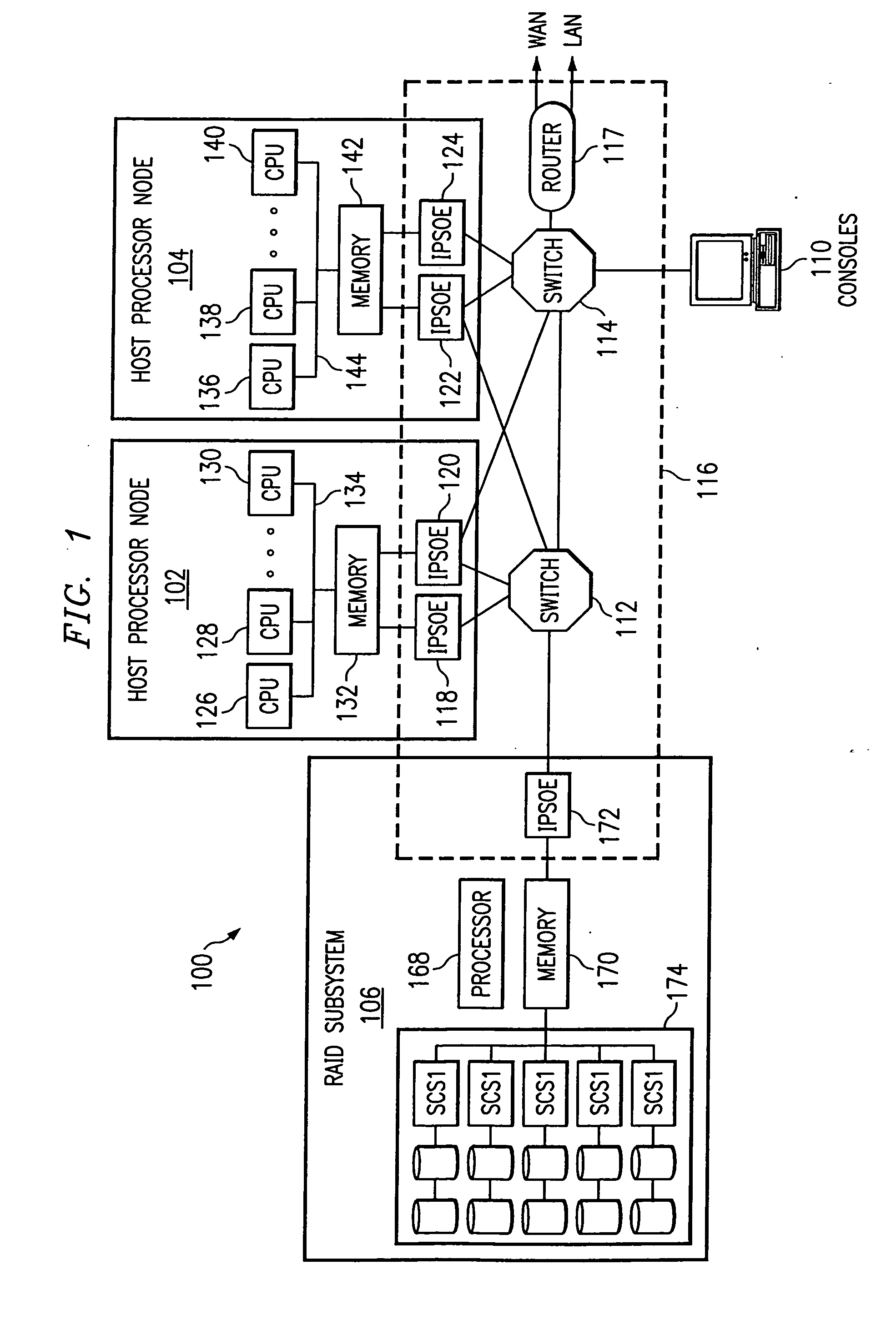

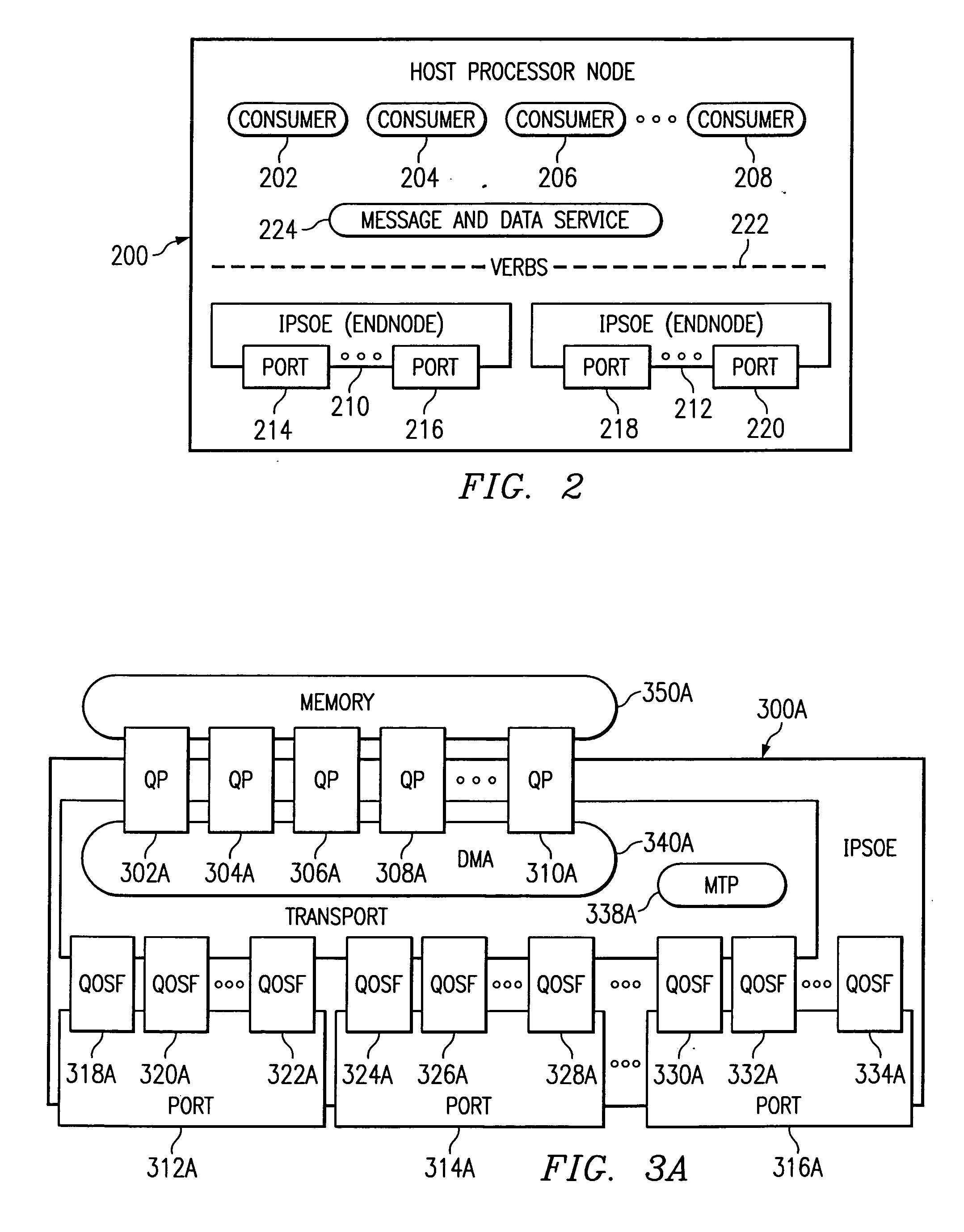

A mechanism for offloading the management of send queues in a split socket stack environment, including efficient split socket queue flow control and TCP/IP retransmission support. As consumers initiate send operations, send work queue entries (SWQEs) are created by an Upper Layer Protocol (ULP) and written to the send work queue (SWQ). The Internet Protocol Suite Offload Engine (IPSOE) is notified of a new entry in the SWQ and subsequently reads this entry, which contains pointers to the data to be transmitted. After the data is transmitted and acknowledgments are received, the IPSOE creates a completion queue entry (CQE) that is written into the completion queue (CQ). After the CQE is written, the ULP processes the entry and removes it from the CQ, freeing up a space in both the SWQ and the CQ. The ULP monitors the number of entries available in the SWQ so that it does not overwrite any valid entries. Likewise, the IPSOE monitors the number of entries available in the CQ so as not to overwrite the CQ. The flow control between the ULP and the IPSOE is credit based. The passing of CQ credits is the only explicit mechanism required to manage flow control of both the SWQ and the CQ between the ULP and the IPSOE.
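A minimal single-threaded sketch of the credit-based flow control follows. It is a toy model under stated assumptions, not the patented mechanism: the class and method names are hypothetical, transmission and acknowledgment are collapsed into one step, and retransmission is omitted.

```python
from collections import deque


class SplitSocketQueues:
    """Toy model of a send work queue (SWQ) and completion queue (CQ)."""

    def __init__(self, swq_size, cq_size):
        self.swq = deque()         # SWQEs written by the ULP, not yet completed
        self.cq = deque()          # CQEs written by the IPSOE, not yet processed
        self.swq_free = swq_size   # free SWQ slots, tracked by the ULP
        self.cq_credits = cq_size  # CQ credits currently held by the IPSOE

    def ulp_post_send(self, data):
        """ULP side: write an SWQE only if a free slot exists."""
        if self.swq_free == 0:
            return False           # would overwrite a valid entry
        self.swq_free -= 1
        self.swq.append(data)
        return True

    def ipsoe_complete_next(self):
        """IPSOE side: treat the oldest SWQE as sent and acknowledged, then write a CQE."""
        if not self.swq or self.cq_credits == 0:
            return False           # nothing to send, or no credit to write a CQE
        self.cq_credits -= 1
        self.cq.append(self.swq.popleft())
        return True

    def ulp_poll(self):
        """ULP side: process one CQE, freeing an SWQ slot and returning a CQ credit."""
        if not self.cq:
            return None
        data = self.cq.popleft()
        self.swq_free += 1         # the SWQ slot is reusable only now
        self.cq_credits += 1       # the only explicit flow-control signal
        return data
```

Note that an SWQ slot stays counted as occupied until the ULP polls the matching CQE, so returning the CQ credit is the single event that reopens both queues.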

Owner:IBM CORP

Printer having controller transmitting commands to print engine responsive to commands

Inactive | US7012709B2 | Extension of time | Increase printing speed | Visual presentation using printers | Other printing apparatus | Uplevel | Imaging processing

Commands transmitted from an image processing controller to an engine are classified into a plurality of layers in response to the information contents of the commands and when the engine receives a command of a subordinate layer, it recognizes that the command is received together with the command of a superior layer to that command, last issued preceding the command. Therefore, the engine performs internal control in accordance with the second command of the subordinate layer received and the first command of the superior layer not received at the time, but last received preceding the second command. For various instructions concerning execution of print, the commands of the superior layer of commands representing the instructions are made common.
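The way the engine pairs a subordinate-layer command with the last superior-layer command can be sketched as below. The two layer names, the command strings, and the decision to reject a subordinate command that arrives before any superior command are assumptions made for illustration.

```python
class PrintEngine:
    """Hypothetical engine that remembers the last superior-layer command."""

    def __init__(self):
        self.last_superior = None  # most recent superior-layer command
        self.executed = []         # (superior, subordinate) pairs acted upon

    def receive(self, layer, command):
        if layer == "superior":
            # Remember it so later subordinate commands need not repeat it.
            self.last_superior = command
            return
        if self.last_superior is None:
            # Assumed behavior: a subordinate command needs a superior context.
            raise ValueError("subordinate command received before any superior command")
        # Act as if the superior command had arrived together with this one.
        self.executed.append((self.last_superior, command))
```

Because the superior command is sent once and reused, the controller transmits fewer bytes per instruction.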

Owner:SEIKO EPSON CORP

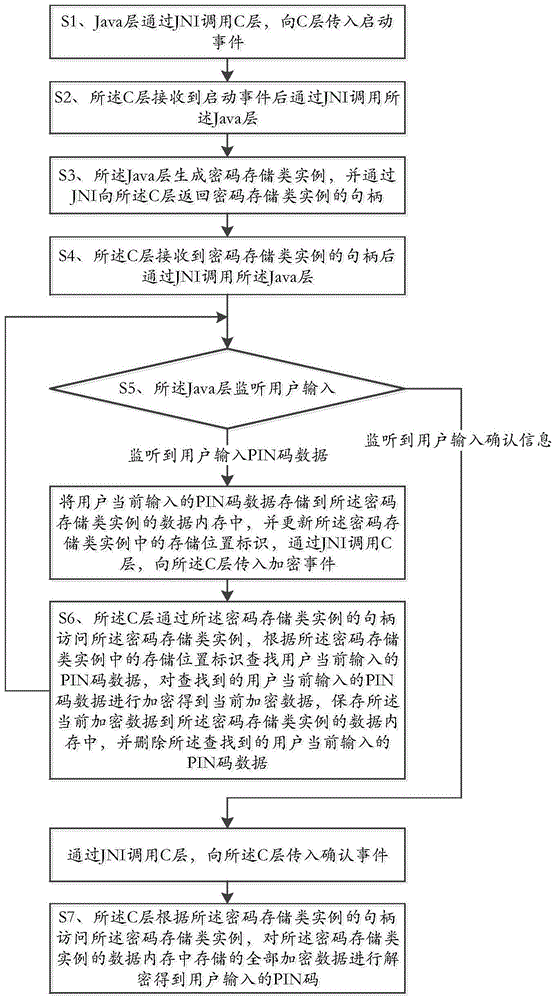

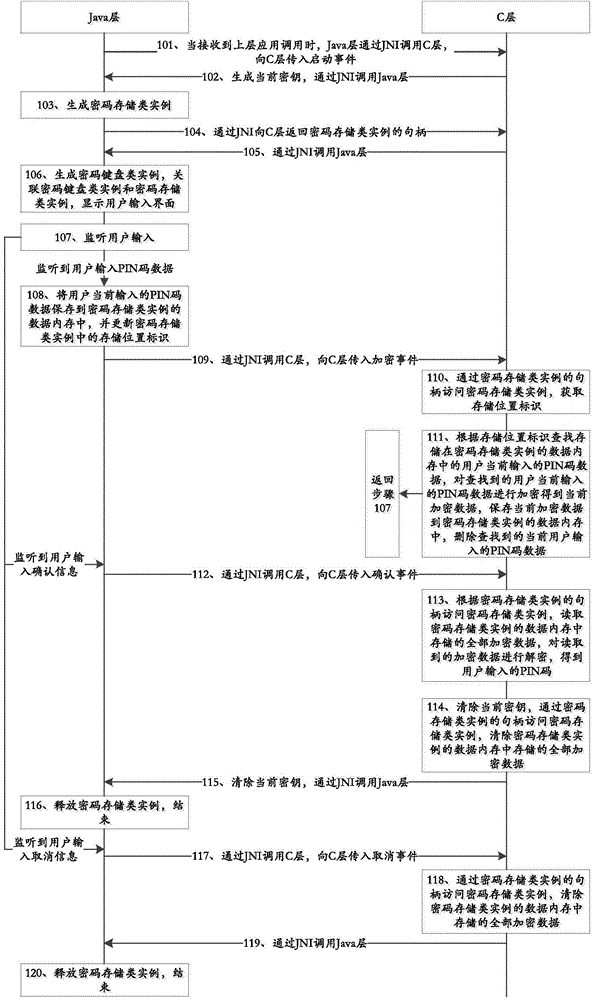

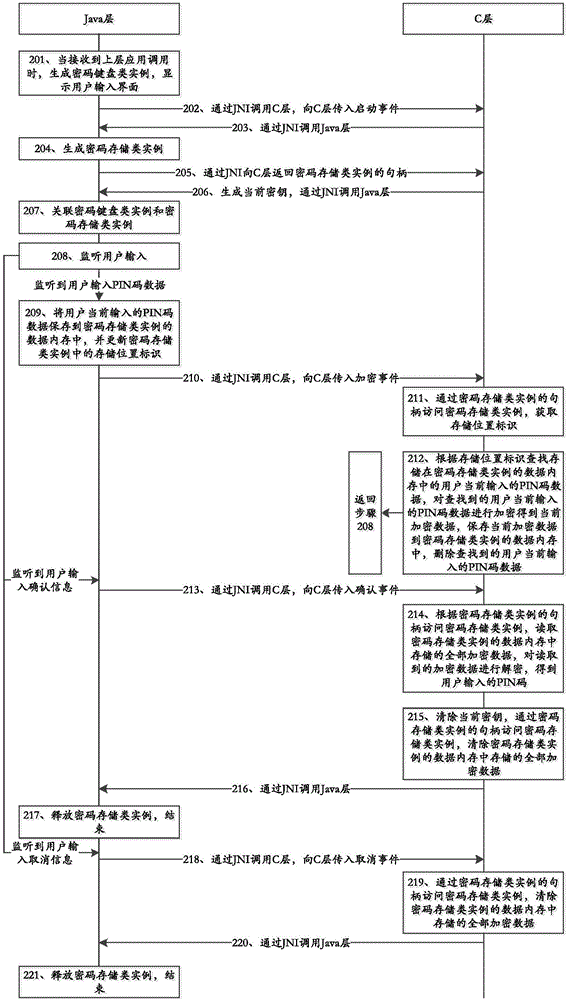

PIN code protection method under Android platform

Active | CN104915602A | Improve security | Avoiding the risk of interception | Digital data protection | Internal/peripheral component protection | Uplevel | Computer hardware

The invention discloses a PIN code protection method under an Android platform, and belongs to the field of information security. The method comprises the following steps: after receiving an upper-layer call, a Java layer transmits a start event to a C layer; after the C layer receives the start event, it calls the Java layer through JNI to generate an instance of a code storage class; after the C layer receives a handle returned by the Java layer, it calls the Java layer again to monitor user input; when the Java layer detects that a user has input PIN code data, it stores the PIN code data in the instance's memory, updates the storage position identifier, and transmits an encryption event to the C layer, and when the Java layer detects that the user has input confirmation information, it transmits a confirmation event to the C layer; when the C layer receives the encryption event, the PIN code data is encrypted by accessing the instance through the handle, and when the C layer receives the confirmation event, the encrypted data in the instance's memory is decrypted by accessing the instance through the handle to obtain the PIN code. The PIN code protection method has the advantage of improving the security of the PIN code under the Android platform.

Owner:FEITIAN TECHNOLOGIES

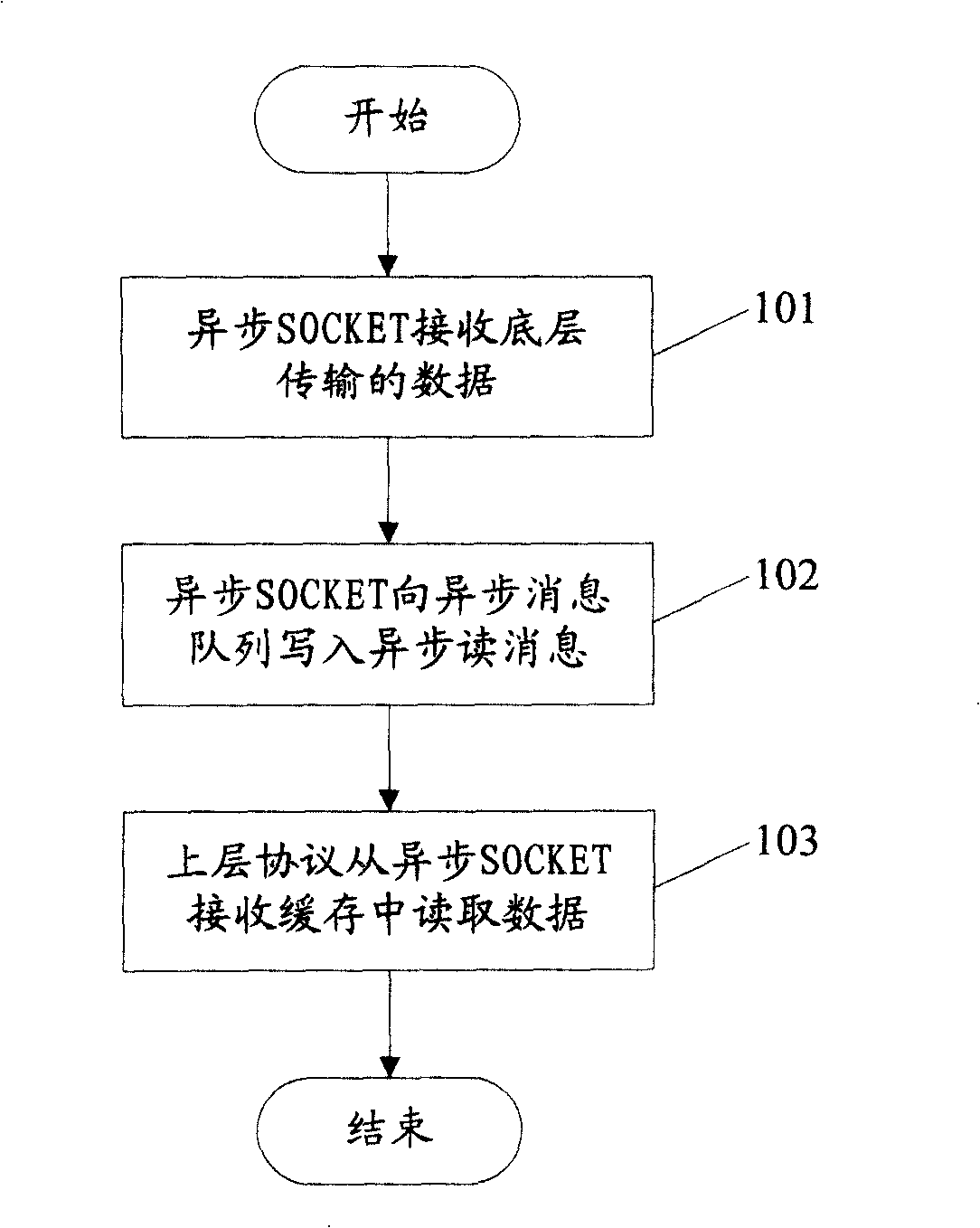

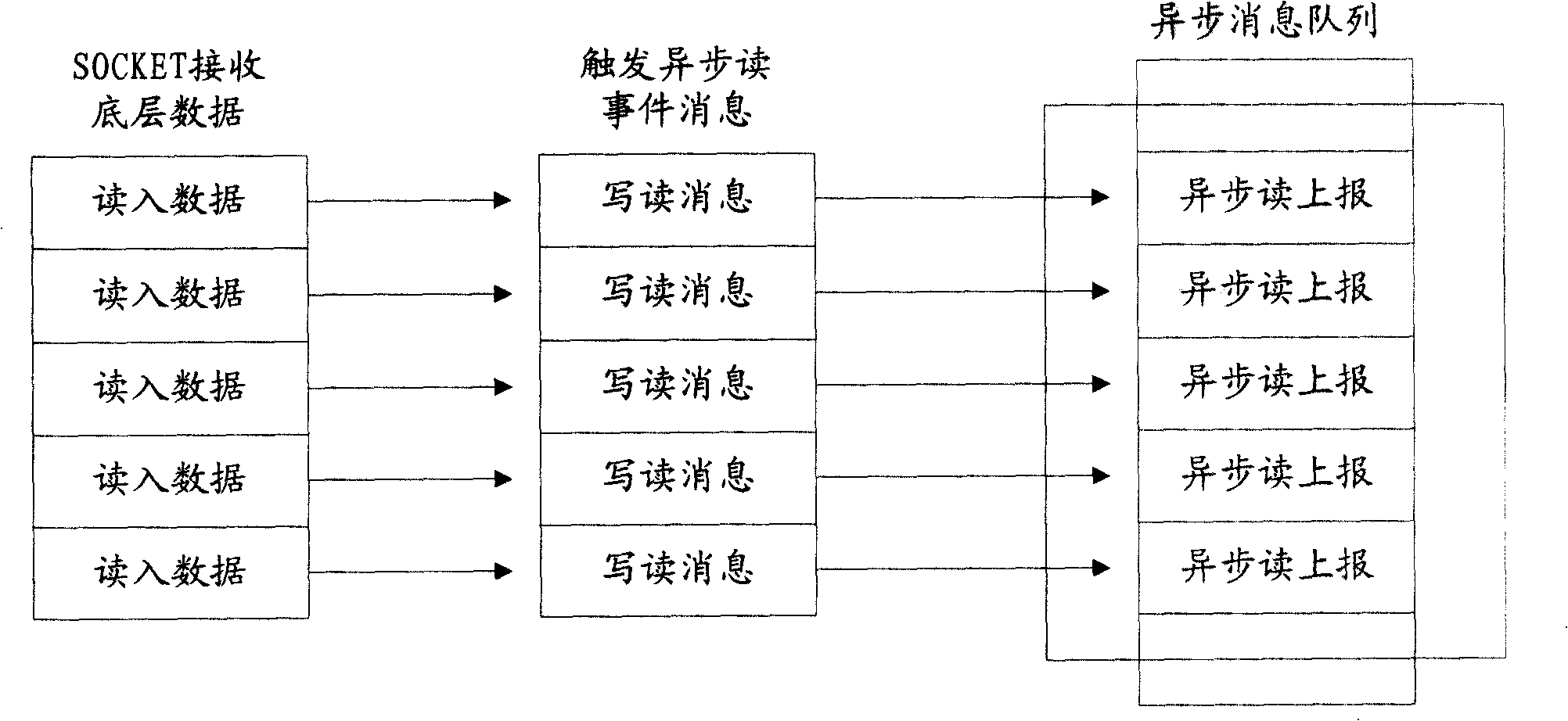

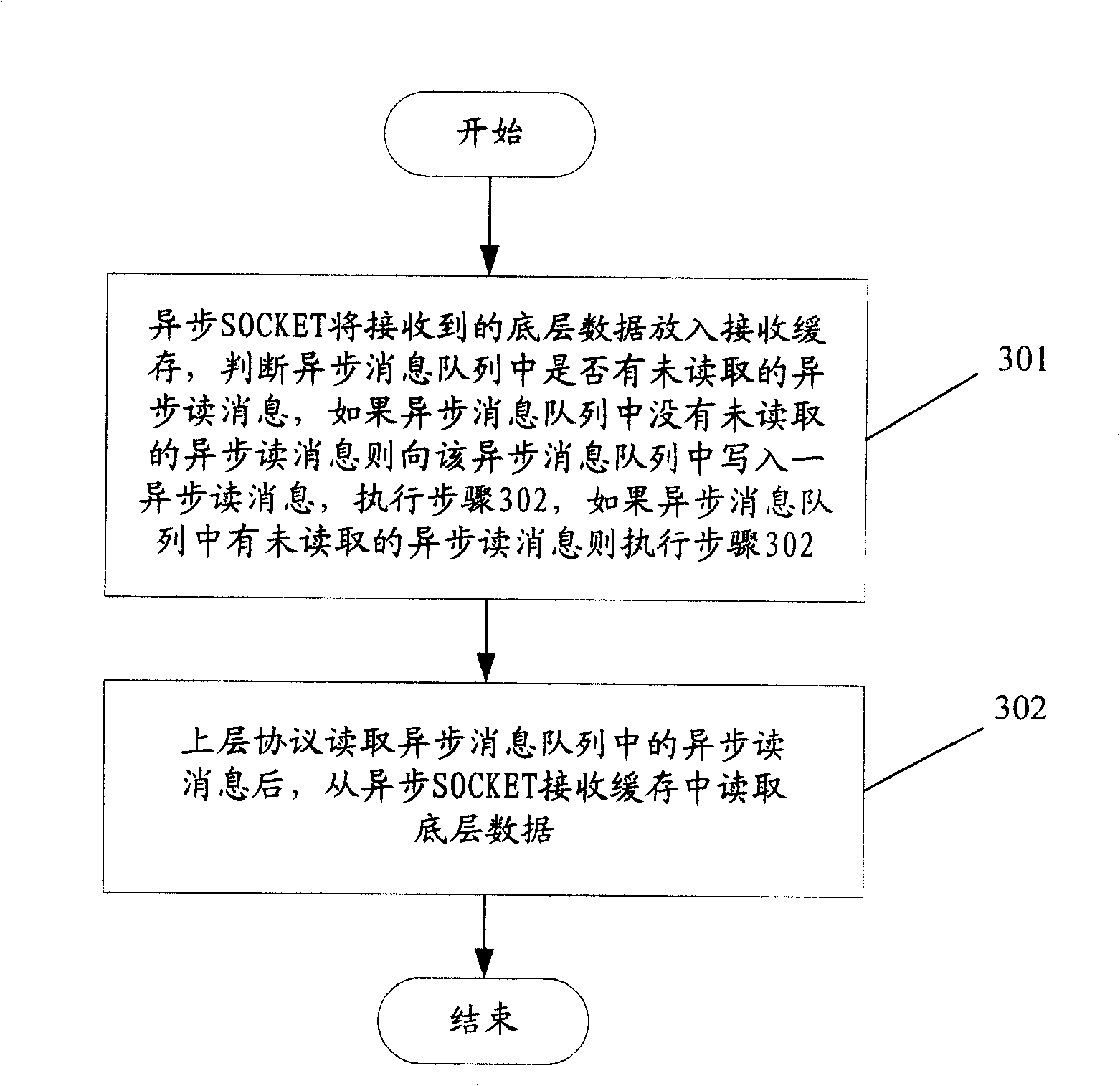

Method, system and asynchronous SOCKET for processing asynchronous message alignment

Inactive | CN101247319A | Reduce occupancy | Improve processing efficiency | Data switching networks | Message queue | Uplevel

The invention discloses a method for processing an asynchronous message queue, which includes the following steps: A. an asynchronous SOCKET places received underlying data into a receive cache, and it is determined whether an asynchronous read message is already present in the asynchronous message queue; if no asynchronous read message is in the queue, one asynchronous read message is written to the queue and step B is executed; otherwise, step B is executed directly; B. an upper-layer protocol reads the asynchronous message queue and then reads the underlying data from the asynchronous SOCKET receive cache. The invention also discloses a system for processing the asynchronous message queue and an asynchronous SOCKET. With the invention, the asynchronous message queue space occupied by asynchronous read messages is reduced, the speed at which the upper-layer protocol processes packet data is increased, and data loss caused by a full asynchronous message queue is avoided when a large number of routes are initially brought up or when routes flap.
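Steps A and B can be sketched as follows. The names are hypothetical, and one detail is swapped in for simplicity: instead of scanning the message queue for an existing read message, the socket keeps a `read_pending` flag that records whether one is already queued.

```python
from collections import deque


class AsyncSocket:
    """Hypothetical asynchronous socket that queues at most one read message."""

    def __init__(self, message_queue):
        self.receive_cache = deque()       # underlying data awaiting the upper protocol
        self.message_queue = message_queue
        self.read_pending = False          # stands in for scanning the queue

    def on_data(self, data):
        """Step A: cache the data and queue a read message only if none is pending."""
        self.receive_cache.append(data)
        if not self.read_pending:
            self.message_queue.append(("READ", self))
            self.read_pending = True

    def drain(self):
        """Hand over everything cached so far and allow a new read message."""
        self.read_pending = False
        items = list(self.receive_cache)
        self.receive_cache.clear()
        return items


def upper_protocol_step(message_queue):
    """Step B: read one message from the queue, then read the socket's cached data."""
    _kind, sock = message_queue.popleft()
    return sock.drain()
```

However many packets arrive between two reads, the queue holds a single entry per socket, which is what keeps a burst of traffic from filling the queue.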

Owner:HUAWEI TECH CO LTD


© 2025 PatSnap. All rights reserved.