Variable latency stack cache and method for providing data

A memory and data technology, applied in static memories, memory systems, digital memory information, and related areas, that can address the problems of short core clock cycle times, insufficient space, and reduced cache memory size.

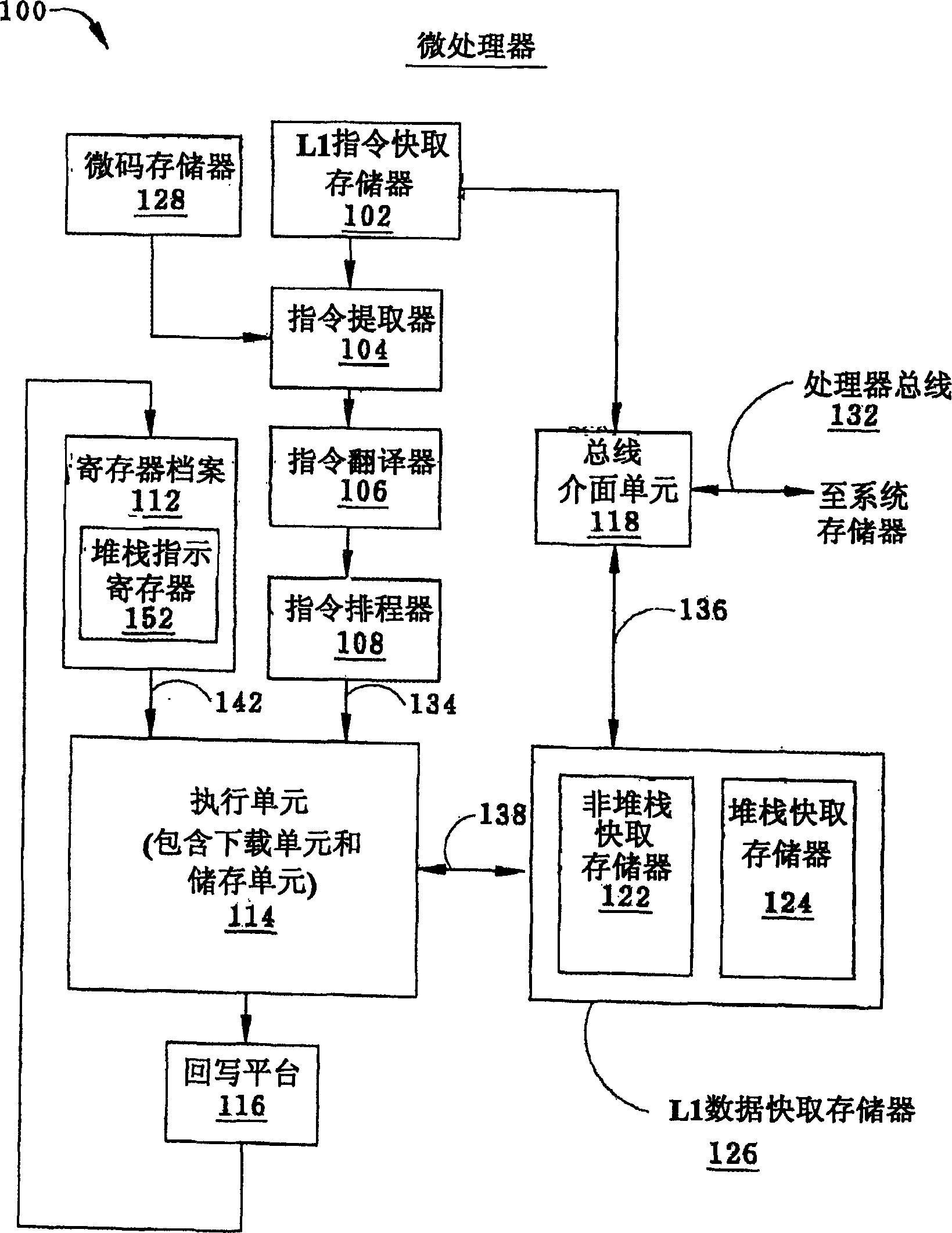

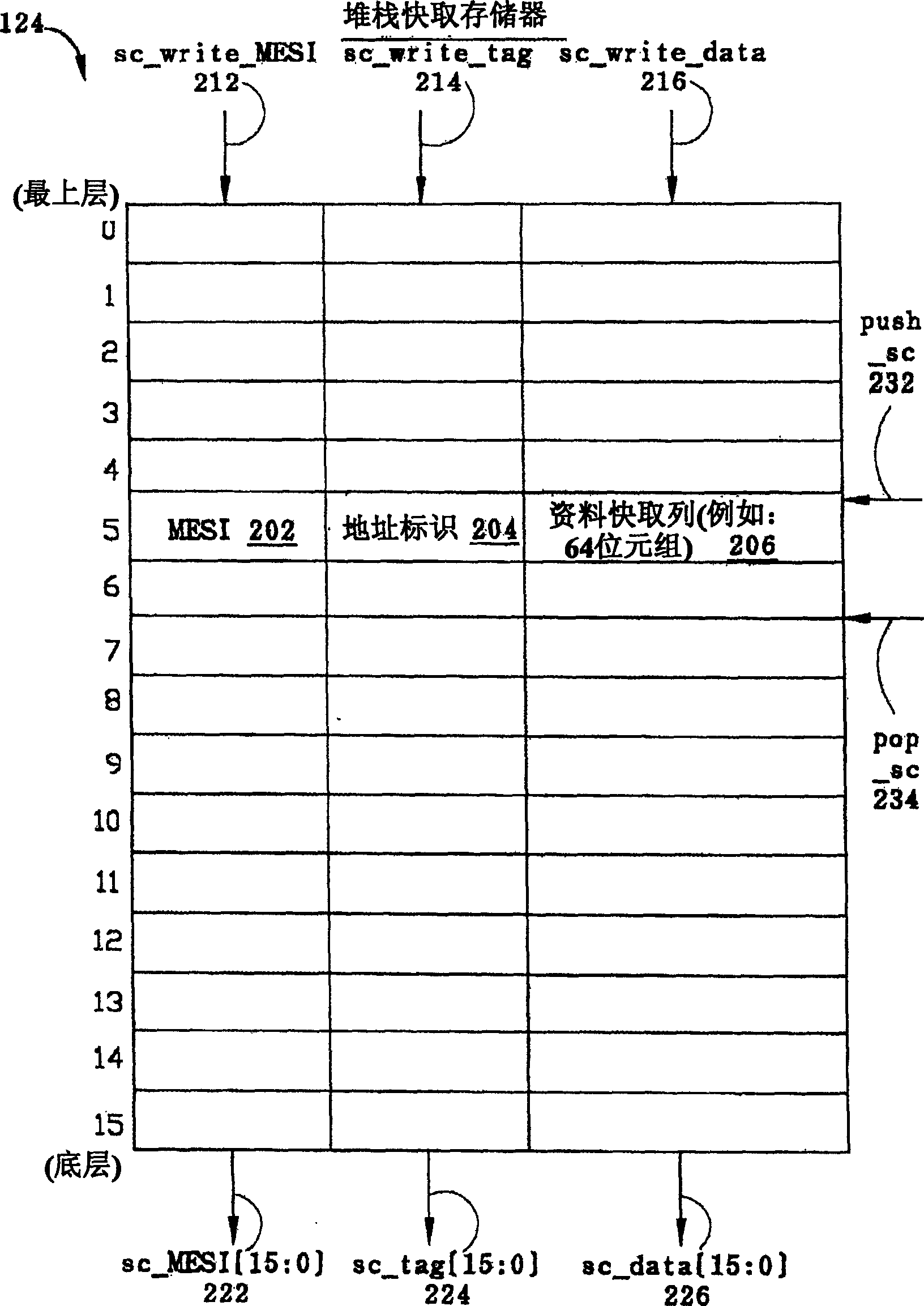

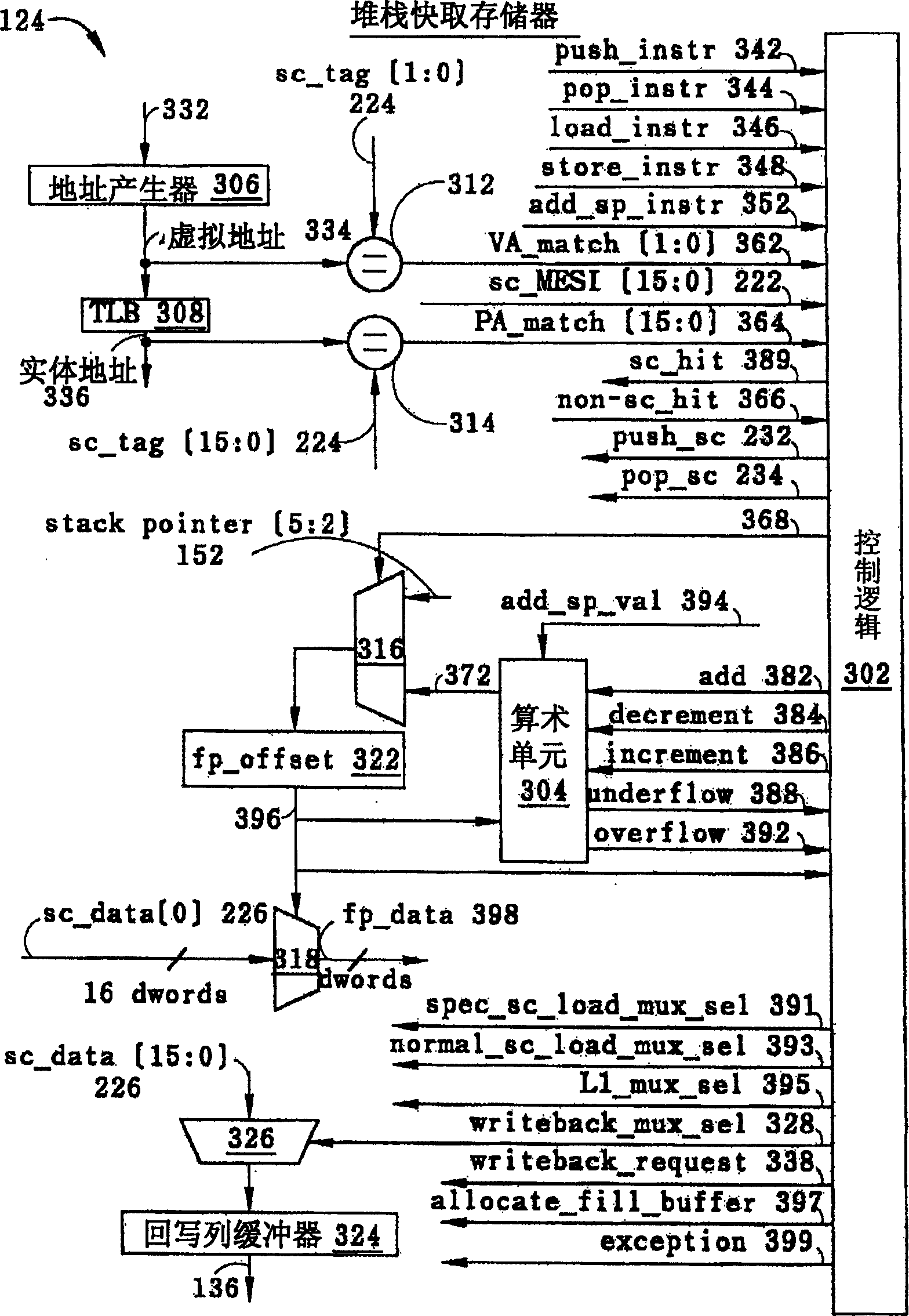

Embodiment Construction

[0066] Generally speaking, programs divide system memory into two parts: a stack area and a non-stack area, and the present invention is based on this fact. The non-stack area is also commonly referred to as the heap. The main difference between the stack and the heap is that the heap is accessed in a random-access manner, whereas the stack is generally accessed in a last-in-first-out (LIFO) manner. Another difference between the two is the manner in which instructions that read or write them specify the address being read or written. Instructions that read or write the heap generally specify the memory address explicitly. Conversely, instructions that read or write the stack generally specify the memory address implicitly, through a special register in the microprocessor commonly referred to as the stack pointer register. The push instruction updates the stack pointer register according...
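The contrast between explicit (heap) and implicit (stack) addressing can be illustrated with a toy model. This is a minimal sketch, not the patented design: the class name `ToyCpu`, the 4-byte `WORD`, the 256-byte memory, and the convention that the stack grows downward with a push adjusting the stack pointer before storing (as on x86) are all assumptions made for illustration, since the paragraph above is truncated before describing the push semantics.

```python
from dataclasses import dataclass, field

WORD = 4  # assumed word size in bytes (hypothetical)


@dataclass
class ToyCpu:
    """Hypothetical toy model: byte-addressed memory plus one stack pointer register."""

    mem: bytearray = field(default_factory=lambda: bytearray(256))
    sp: int = 256  # assumed: stack starts at the top of memory and grows downward

    # Heap-style access: the instruction supplies the memory address explicitly.
    def store(self, addr: int, value: int) -> None:
        self.mem[addr:addr + WORD] = value.to_bytes(WORD, "little")

    def load(self, addr: int) -> int:
        return int.from_bytes(self.mem[addr:addr + WORD], "little")

    # Stack-style access: the address comes implicitly from the sp register.
    def push(self, value: int) -> None:
        self.sp -= WORD             # assumed convention: adjust sp by the data size first...
        self.store(self.sp, value)  # ...then write the data at the updated sp

    def pop(self) -> int:
        value = self.load(self.sp)  # read the most recently pushed word...
        self.sp += WORD             # ...then release its slot (LIFO order)
        return value


if __name__ == "__main__":
    cpu = ToyCpu()
    cpu.store(16, 0xCAFE)          # explicit address, random access
    for v in (1, 2, 3):
        cpu.push(v)                # no address operand; sp supplies it
    print([cpu.pop() for _ in range(3)], cpu.sp, hex(cpu.load(16)))
```

Popping after pushing 1, 2, 3 returns the values in reverse order and restores `sp` to its starting value, while the heap word at address 16 is reached only by naming that address directly.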


© 2025 PatSnap. All rights reserved.