Data stream prefetching method based on access instruction

A data stream prefetching technology, applied to concurrent instruction execution, machine execution devices, and related fields. It addresses problems such as hindered execution of memory access instructions, excessive resource occupation, and degraded processor memory-access performance. Its effects include avoiding occupation of the instruction reorder buffer, improving the hit rate, and improving test performance.

Examples

Embodiment 1

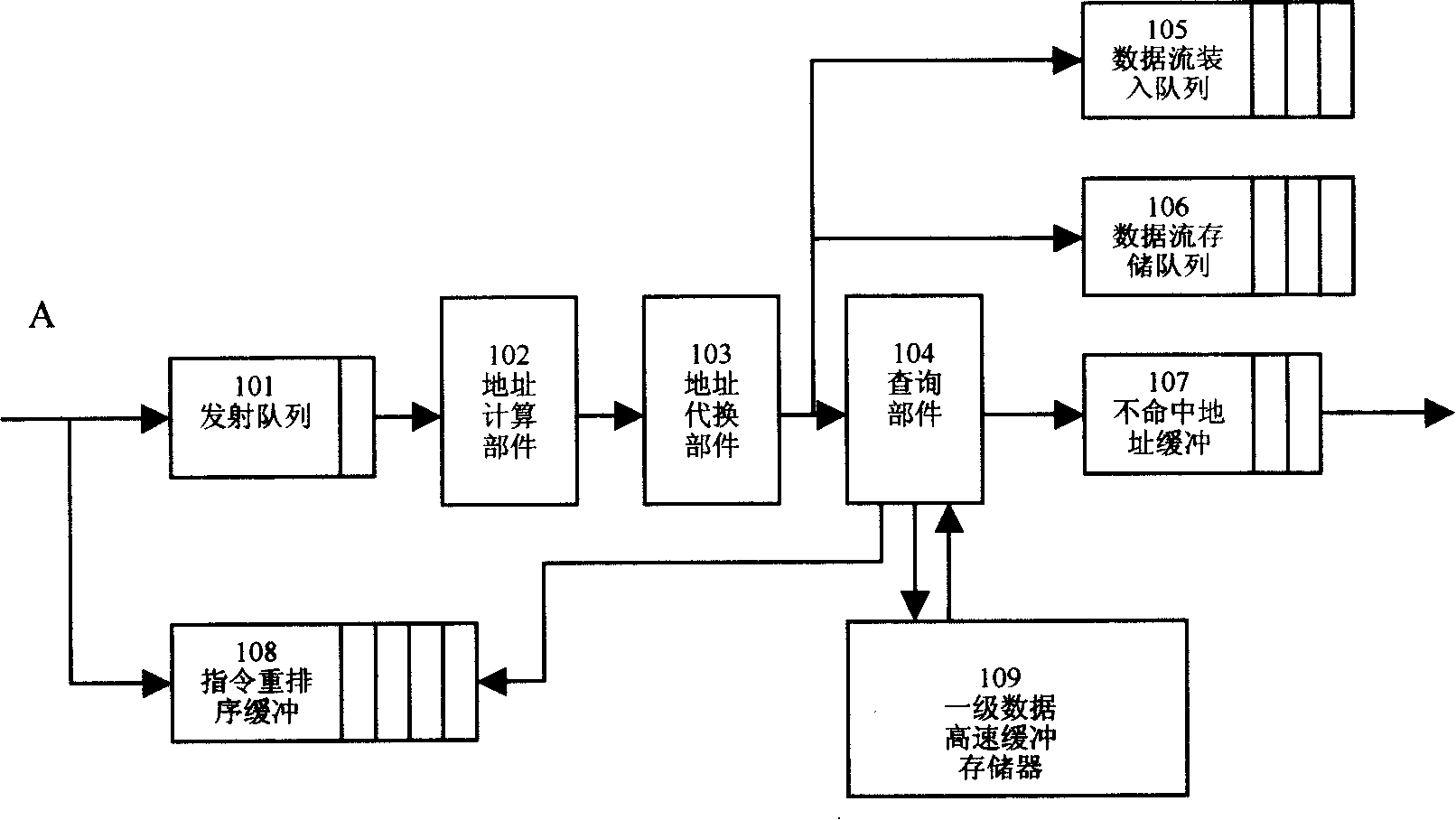

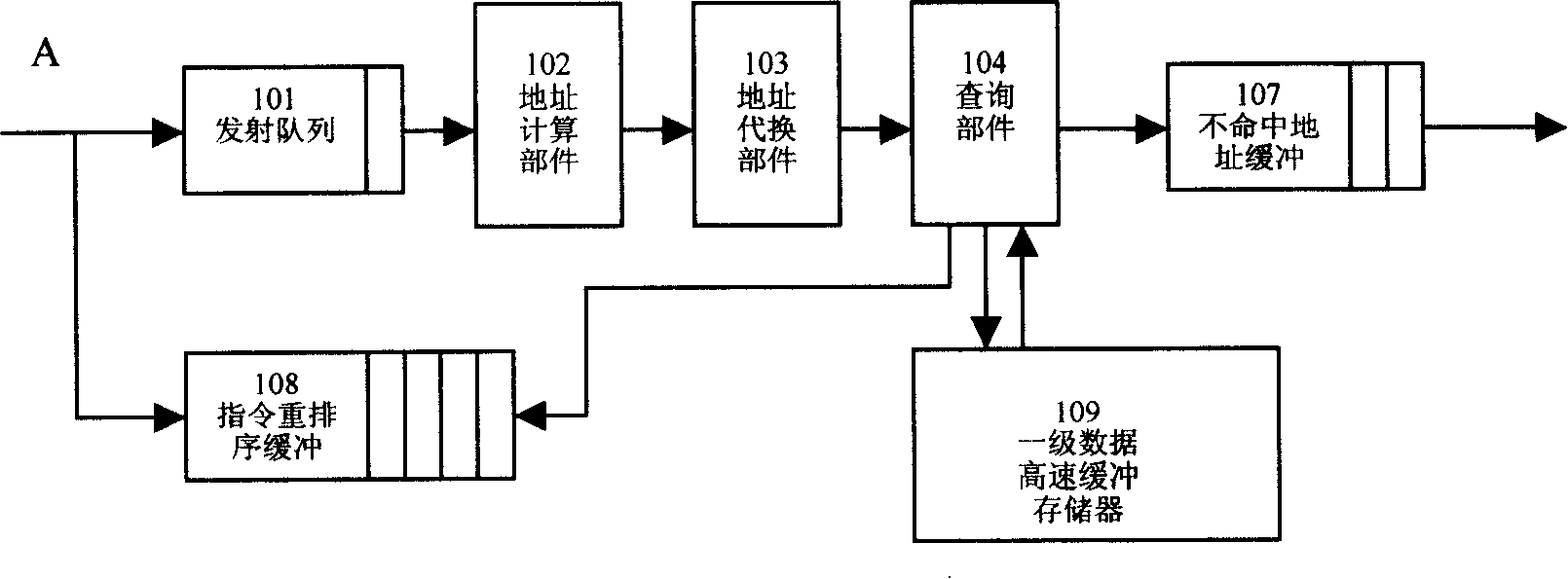

[0021] As shown in Figure 2, when data stream prefetch instruction A enters the issue queue 101, it also enters the instruction reorder buffer 108, where it waits to retire. After instruction A is issued, the address calculation unit 102 and the address substitution unit 103 generate the physical address required for the memory access. If a precise breakpoint fault or exception occurs during this process, the data stream prefetch instruction unconditionally abandons the memory access operation and immediately notifies the instruction reorder buffer 108 that the instruction can retire, but no error is reported.
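The Embodiment 1 address-generation step can be modeled in software as a minimal sketch. It assumes the address calculation unit 102 is a base-plus-offset add and the address substitution unit 103 is a page-table lookup with a 4 KiB page; `RobEntry`, `TranslationFault`, and `issue_prefetch_embodiment1` are hypothetical names, not part of the source. The point it shows is that the prefetch holds a reorder-buffer entry, and a fault makes that entry retirable at once without recording an error.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

PAGE_SIZE = 4096  # assumed page size in bytes (not given in the source)


class TranslationFault(Exception):
    """Hypothetical stand-in for a precise breakpoint fault or exception."""


@dataclass
class RobEntry:
    tag: int
    retirable: bool = False      # set once the ROB may retire this entry
    error: Optional[str] = None  # stays None: the prefetch never reports an error


def address_calculation(base: int, offset: int) -> int:
    # Models address calculation unit 102 as a simple base + offset add (assumption).
    return base + offset


def address_substitution(vaddr: int, page_table: Dict[int, int]) -> int:
    # Models address substitution unit 103 as a page-table lookup (assumption).
    vpn, page_offset = divmod(vaddr, PAGE_SIZE)
    if vpn not in page_table:
        raise TranslationFault(hex(vaddr))
    return page_table[vpn] * PAGE_SIZE + page_offset


def issue_prefetch_embodiment1(tag: int, base: int, offset: int,
                               page_table: Dict[int, int],
                               rob: List[RobEntry]) -> Optional[int]:
    """Return the physical address, or None if the prefetch was abandoned."""
    entry = RobEntry(tag)
    rob.append(entry)  # Embodiment 1: the prefetch also occupies a ROB entry
    try:
        vaddr = address_calculation(base, offset)
        return address_substitution(vaddr, page_table)
    except TranslationFault:
        # Abandon the access unconditionally, let the ROB retire the entry
        # immediately, and leave entry.error unset (no error is reported).
        entry.retirable = True
        return None
```

In this sketch a successful translation leaves the ROB entry waiting (`retirable` stays `False`), since retirement then depends on the cache lookup that follows.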

[0022] Using this physical address, the query unit 104 looks up the data in the first-level data cache 109. If the lookup hits in the first-level data cache 109 and the state of the cache line meets the requirements of the data stream prefetch request, the prefetch operation ends. Instruction reorder ...
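The hit test in [0022] has two conditions: the line must be present, and its state must satisfy the prefetch request. The source does not say which states qualify, so this sketch assumes MESI-style states and an illustrative rule: any valid line serves a read prefetch, while a write-intent prefetch needs an exclusively owned line. `LineState`, `state_satisfies`, `l1_prefetch_done`, and the 64-byte line size are all hypothetical. The rest of [0022] is truncated, so only the hit check is modeled.

```python
from enum import Enum
from typing import Dict

LINE_SIZE = 64  # assumed cache-line size in bytes


class LineState(Enum):
    # Hypothetical MESI-style states; the source does not name its states.
    INVALID = 0
    SHARED = 1
    EXCLUSIVE = 2
    MODIFIED = 3


def state_satisfies(state: LineState, for_write: bool) -> bool:
    """Assumed rule: any valid line serves a read prefetch; a write-intent
    prefetch needs the line in an exclusively owned state."""
    if state is LineState.INVALID:
        return False
    if for_write:
        return state in (LineState.EXCLUSIVE, LineState.MODIFIED)
    return True


def l1_prefetch_done(l1: Dict[int, LineState], paddr: int, for_write: bool) -> bool:
    """True if the first-level data cache lookup lets the prefetch end here."""
    line_addr = paddr // LINE_SIZE
    state = l1.get(line_addr, LineState.INVALID)  # absent line is treated as a miss
    return state_satisfies(state, for_write)
```

For example, a `SHARED` line ends a read prefetch but not a write-intent one under this assumed rule.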

Embodiment 2

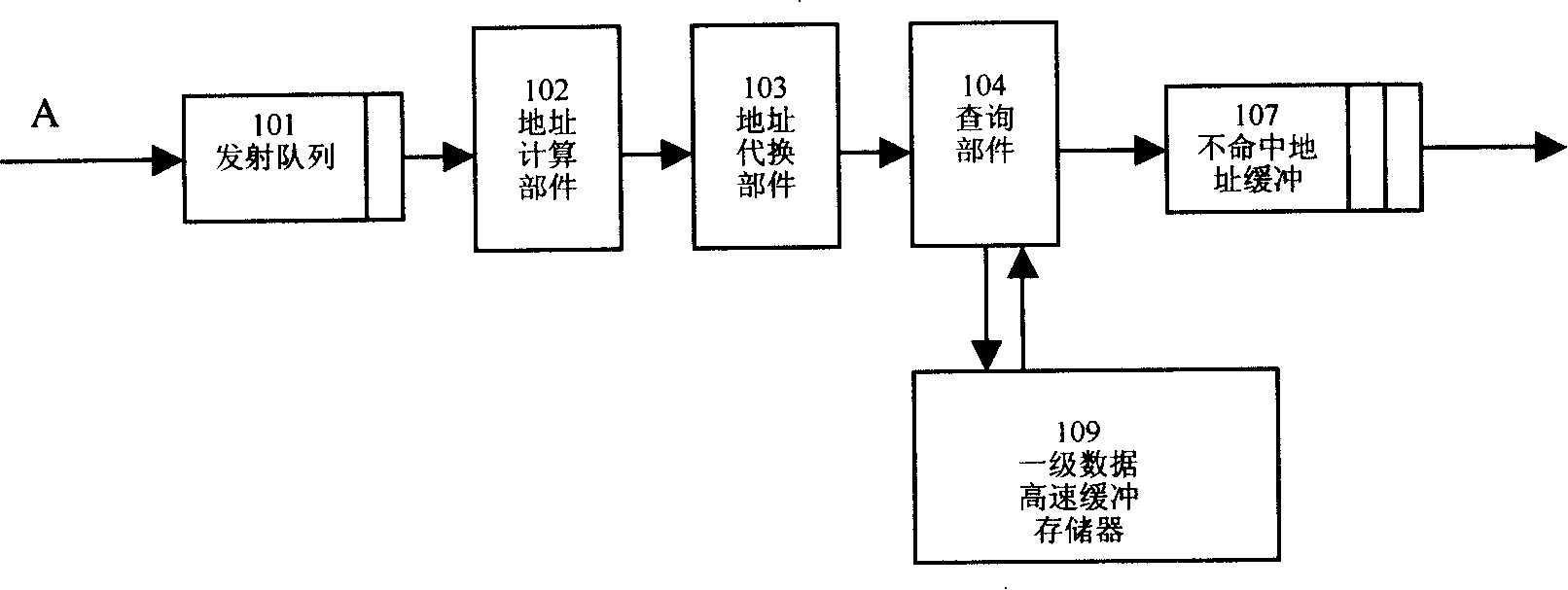

[0024] As shown in Figure 3, when data stream prefetch instruction A enters the issue queue, it retires immediately without entering the instruction reorder buffer 108, and it is assigned the same age number as the preceding instruction. After instruction A is issued, the address calculation unit 102 and the address substitution unit 103 generate the physical address required for the memory access. If a precise breakpoint fault or exception occurs during this process, the data stream prefetch instruction unconditionally abandons the memory access operation, but no error is reported.
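The Embodiment 2 dispatch rule can be illustrated with a toy model: ordinary instructions get a fresh age number and a reorder-buffer slot, while a prefetch reuses the previous age number and only enters the issue queue. `Instr`, `Dispatcher`, and the starting age of 0 are hypothetical choices for illustration; the source does not specify how ages are counted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Instr:
    name: str
    is_prefetch: bool = False
    age: Optional[int] = None


class Dispatcher:
    """Hypothetical model of dispatch into the issue queue and ROB."""

    def __init__(self) -> None:
        self.last_age = 0                   # age of the most recent ordinary instruction
        self.rob: List[Instr] = []          # models instruction reorder buffer 108
        self.issue_queue: List[Instr] = []  # models the issue queue

    def dispatch(self, instr: Instr) -> int:
        if instr.is_prefetch:
            # Embodiment 2: reuse the previous instruction's age number and
            # skip the ROB, so the prefetch retires as soon as it is queued.
            instr.age = self.last_age
        else:
            self.last_age += 1
            instr.age = self.last_age
            self.rob.append(instr)
        self.issue_queue.append(instr)  # both kinds still wait here to issue
        return instr.age
```

Because the prefetch never holds a ROB entry in this model, a fault during its address generation needs no ROB notification; the access is simply dropped.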

[0025] The query unit 104 uses the physical address to look up the first-level data cache 109. If the lookup hits and the state of the cache line meets the requirements of the data stream prefetch request, the prefetch operation ends. Otherwise, an entry is requested from the miss address buffer 107, and if th...
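The hit-or-allocate decision in [0025] can be sketched under simplifying assumptions: the "hit with a satisfying state" test is reduced to membership in a set of ready line addresses, and the miss address buffer 107 is a fixed-capacity list. `MissAddressBuffer`, `prefetch_lookup`, and the 64-byte line size are hypothetical. The source's handling of a failed allocation is truncated, so the sketch only reports `"no-entry"` and leaves that case to the caller.

```python
from typing import List, Set

LINE_SIZE = 64  # assumed cache-line size in bytes


class MissAddressBuffer:
    """Hypothetical fixed-capacity model of miss address buffer 107."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines: List[int] = []  # line addresses with an allocated entry

    def request_entry(self, line_addr: int) -> bool:
        if len(self.lines) >= self.capacity:
            return False  # no free entry; what happens next is not modeled
        self.lines.append(line_addr)
        return True


def prefetch_lookup(paddr: int, l1_ready_lines: Set[int],
                    mab: MissAddressBuffer) -> str:
    """Return "hit", "allocated", or "no-entry" for one prefetch request."""
    line = paddr // LINE_SIZE
    if line in l1_ready_lines:
        return "hit"        # present in L1 with a satisfying state: prefetch ends
    if mab.request_entry(line):
        return "allocated"  # miss: an entry was obtained in the miss address buffer
    return "no-entry"
```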
