Visual and efficient three-dimensional human body movement data retrieval method based on demonstrated performance

A human motion data retrieval technology, applied in the field of 3D human motion data retrieval, which addresses three problems: retrieval that is neither intuitive nor efficient, input queries that are difficult to describe, and low performance.


Specific Embodiment

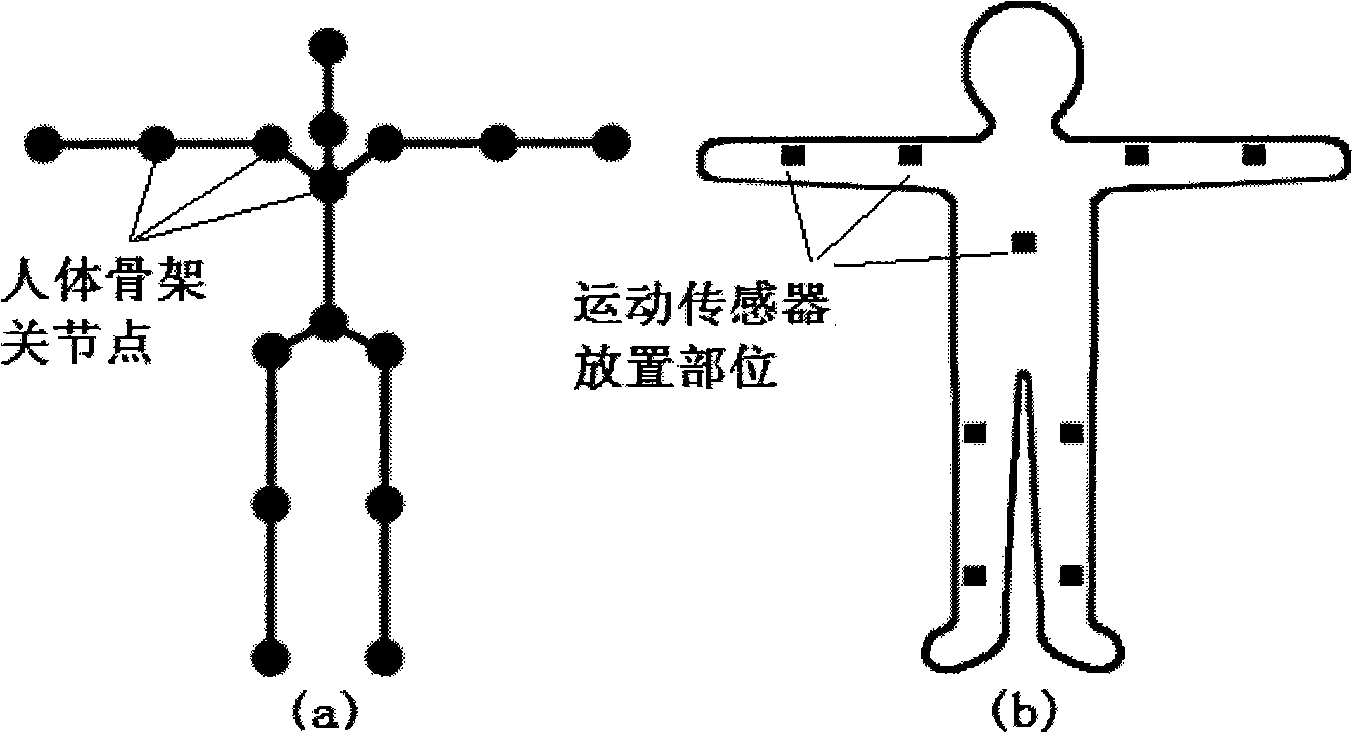

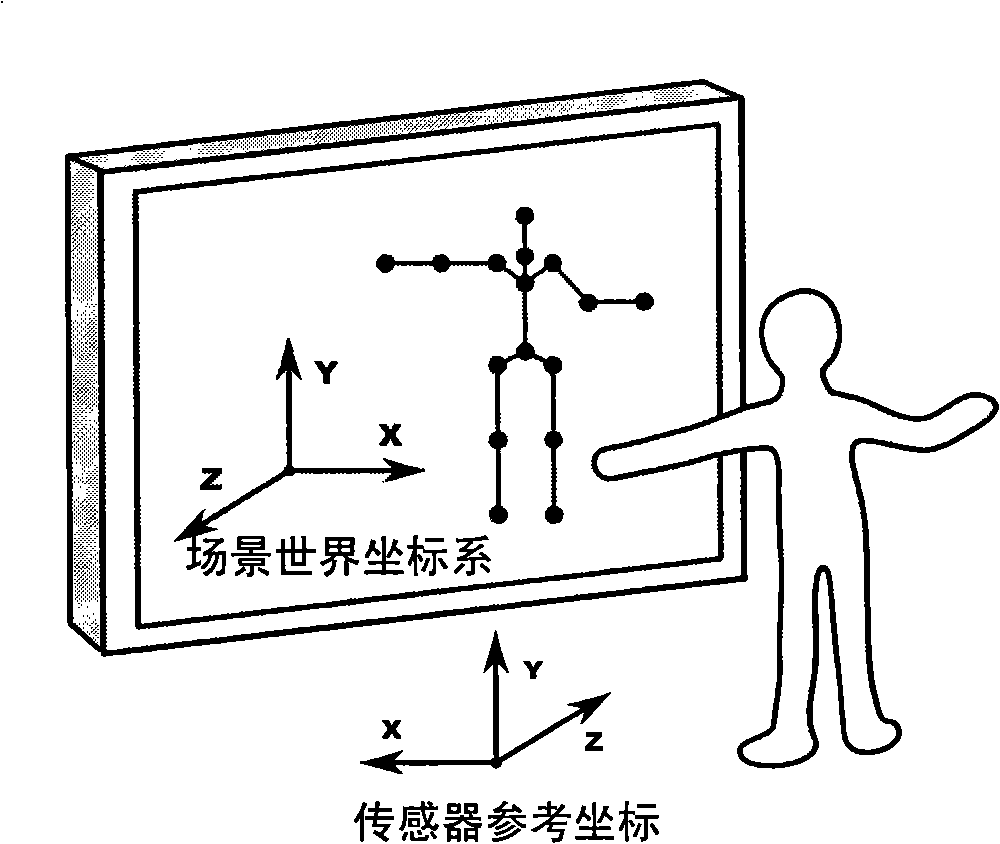

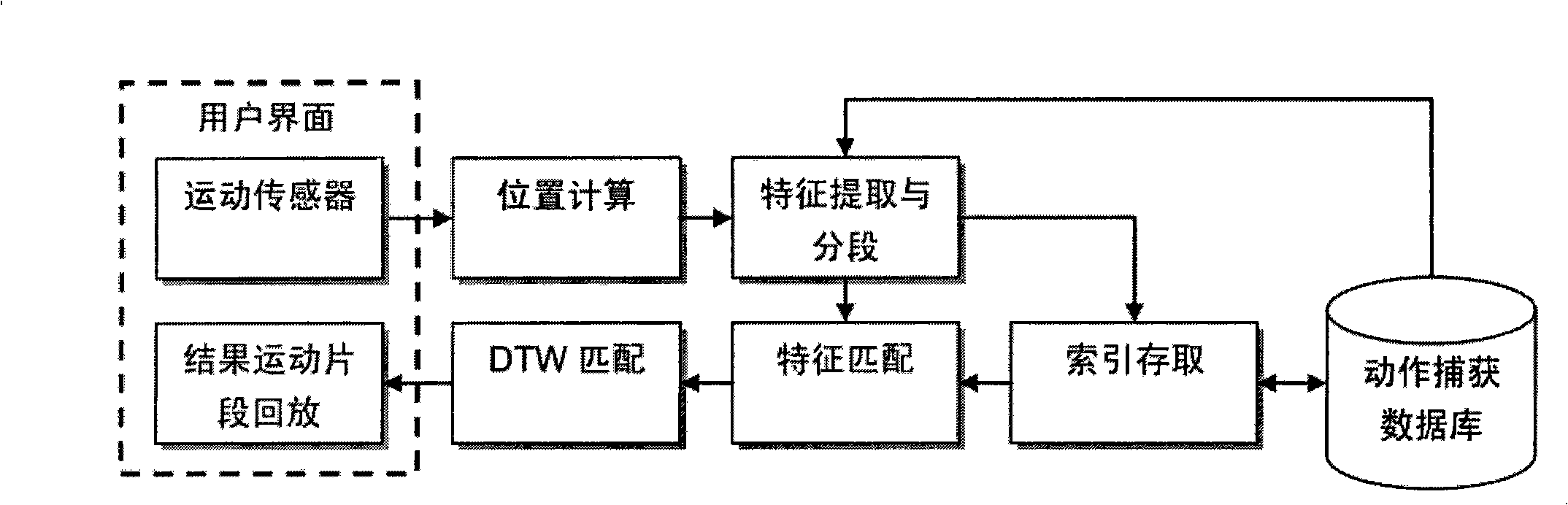

[0060] First, the large-capacity motion database is preprocessed. Features are extracted from each motion clip, each clip is segmented, and a motion index is constructed. Intuitive and efficient 3D human motion data retrieval based on demonstration performances is then carried out according to the process shown in image 3. The motion sensors are connected, their global reference coordinate system is set, and the standard 3D human skeleton is input (see figure 1 (a)). If the user is interested only in local body movements, such as those of the upper body, it suffices to select the five feature nodes of the chest, left elbow, right elbow, left wrist, and right wrist, and to capture the upper-body demonstration performance with five motion sensors. The correspondence between motion sensors and joint points is specified either interactively through the program interface or by supplying a configuration file, and the motion sensors are then placed on the corresponding parts of the body (see figure 1 (b)). After pose align...
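
The paragraph says the sensor-to-joint correspondence can come from a configuration file but does not give its format. As a minimal sketch, assuming a hypothetical plain-text format with one `<sensor_id> = <joint_name>` pair per line and hypothetical identifiers for the five upper-body feature nodes, such a file could be parsed and validated like this:

```python
# Hypothetical joint identifiers for the five upper-body feature nodes named in the text.
UPPER_BODY_NODES = ("chest", "left_elbow", "right_elbow", "left_wrist", "right_wrist")


def parse_sensor_map(text: str, allowed_joints=UPPER_BODY_NODES) -> dict[int, str]:
    """Parse lines of the (assumed) form '<sensor_id> = <joint_name>'.

    Blank lines and '#' comments are ignored. Every allowed joint must be
    assigned exactly one sensor, and every sensor exactly one joint.
    """
    mapping: dict[int, str] = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"line {lineno}: expected '<sensor_id> = <joint>'")
        sensor_id, joint = int(key.strip()), value.strip()
        if joint not in allowed_joints:
            raise ValueError(f"line {lineno}: unknown joint {joint!r}")
        if sensor_id in mapping:
            raise ValueError(f"line {lineno}: sensor {sensor_id} assigned twice")
        if joint in mapping.values():
            raise ValueError(f"line {lineno}: joint {joint!r} already has a sensor")
        mapping[sensor_id] = joint
    # Every selected feature node needs a sensor before capture can start.
    missing = set(allowed_joints) - set(mapping.values())
    if missing:
        raise ValueError(f"no sensor assigned to: {sorted(missing)}")
    return mapping
```

Called on a five-line file such as `1 = chest` through `5 = right_wrist`, `parse_sensor_map` returns a dict from sensor ID to joint name. A missing, unknown, or duplicated assignment raises `ValueError`, so a misconfiguration surfaces before the sensors are placed on the body.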

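The paragraph names the preprocessing steps (feature extraction, segmentation, motion index) and the upper-body feature nodes. It does not specify the feature definition, the segmentation criterion, the index structure, or the similarity measure. The sketch below fills each gap with a deliberately simple assumption. It uses joint positions relative to the chest as the per-frame feature, fixed-length sliding windows as segments, a linear-scan index, and Euclidean distance for matching a demonstration against the database. All names are hypothetical, and the sketch illustrates the data flow only. It is not the patented method.

```python
import math

# Hypothetical representation: a motion is a list of frames; each frame maps a
# joint name to an (x, y, z) position in the global reference coordinate system.
Frame = dict[str, tuple[float, float, float]]

FEATURE_NODES = ("chest", "left_elbow", "right_elbow", "left_wrist", "right_wrist")


def frame_feature(frame: Frame, nodes=FEATURE_NODES) -> list[float]:
    """Flattened positions of the feature nodes relative to the first node
    (the chest); subtracting it makes the feature translation-invariant."""
    rx, ry, rz = frame[nodes[0]]
    feat: list[float] = []
    for name in nodes[1:]:
        x, y, z = frame[name]
        feat.extend((x - rx, y - ry, z - rz))
    return feat


def segment(motion: list[Frame], window: int, step: int) -> list[tuple[int, list[float]]]:
    """Assumed segmentation: fixed-length sliding windows. Returns
    (start_frame, concatenated per-frame features) pairs."""
    segments = []
    for start in range(0, len(motion) - window + 1, step):
        vec: list[float] = []
        for frame in motion[start:start + window]:
            vec.extend(frame_feature(frame))
        segments.append((start, vec))
    return segments


class MotionIndex:
    """Linear-scan index over segment features (an assumed stand-in for
    whatever index structure the real system builds)."""

    def __init__(self, window: int, step: int):
        self.window, self.step = window, step
        self.entries: list[tuple[str, int, list[float]]] = []

    def add(self, motion_id: str, motion: list[Frame]) -> None:
        for start, vec in segment(motion, self.window, self.step):
            self.entries.append((motion_id, start, vec))

    def query(self, demo: list[Frame], k: int = 3) -> list[tuple[float, str, int]]:
        """Return the k nearest (distance, motion_id, start_frame) entries to
        the first `window` frames of a demonstration performance."""
        if len(demo) < self.window:
            raise ValueError("demonstration shorter than the index window")
        q: list[float] = []
        for frame in demo[:self.window]:
            q.extend(frame_feature(frame))
        scored = [(math.dist(q, vec), mid, start) for mid, start, vec in self.entries]
        scored.sort()
        return scored[:k]
```

The linear scan keeps the example short. For a large-capacity database, the same `(motion_id, start_frame, vector)` entries could instead be stored in a spatial index such as a k-d tree without changing the feature or segmentation code.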