Vision-based process for locating the spatial position of pipe mouths

A vision-based spatial positioning method, applied in the field of image processing and pattern recognition, which addresses the low degree of automation, errors, and low efficiency of robot task execution.

Examples

Embodiment 1

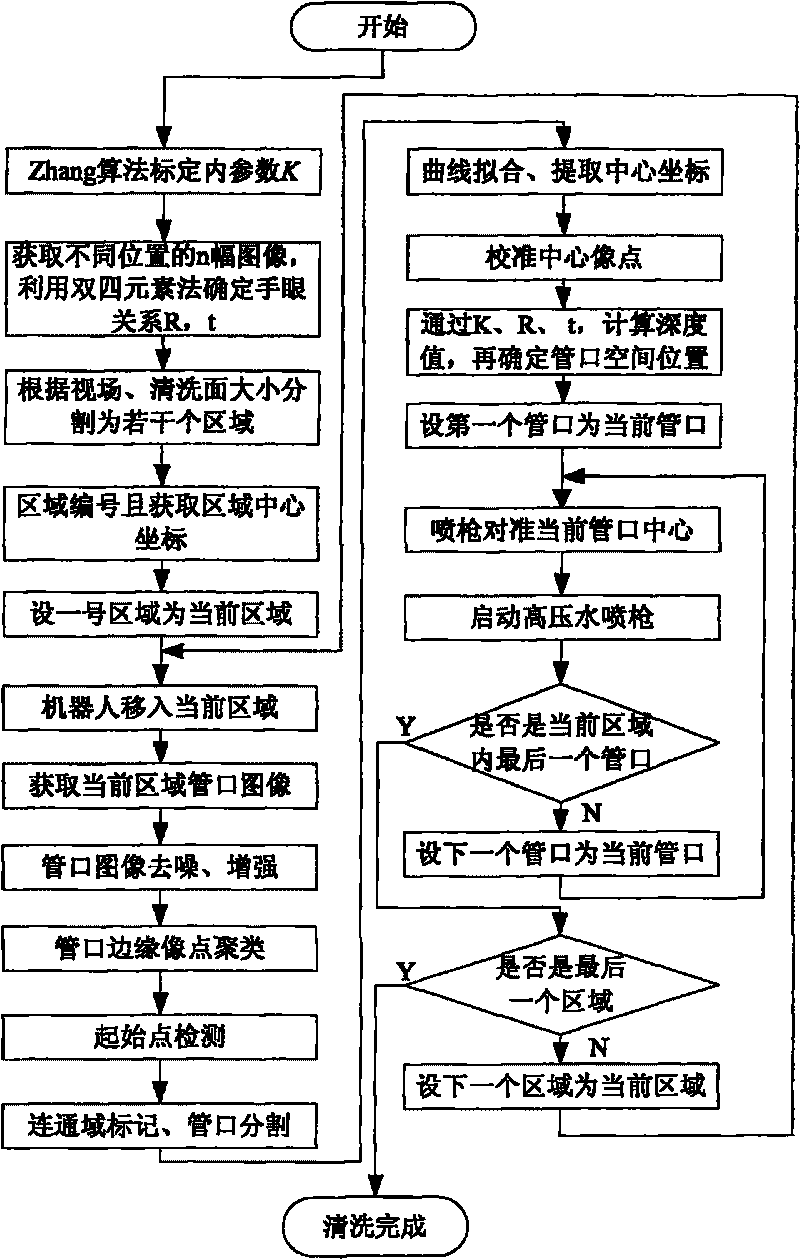

[0059] An underwater cleaning robot is deployed in harsh environments such as large power plants and steel mills to perform nozzle cleaning tasks. The robot's arm carries a vision system that senses the nozzle to be cleaned and calculates its spatial position. Based on the size of the work site and the effective field of view of the camera, the working surface is divided into blocks offline by manual calculation, and this block-wise coarse positioning drives the robot's movement. When the robot reaches a given coarse-positioning location, the camera observes the distribution of nozzles in that local area, and the vision system then precisely locates each nozzle.
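The offline block division described in [0059] can be illustrated with a small planner. This is a minimal sketch, not the patent's procedure: it assumes a rectangular working surface, a rectangular effective field of view at the working distance, and a hypothetical `overlap` fraction so that nozzles on a block boundary appear whole in at least one view. The function names `axis_centres` and `plan_coarse_blocks` are illustrative only.

```python
import math
from typing import List, Tuple


def axis_centres(length: float, fov: float, overlap: float) -> List[float]:
    """Evenly spaced camera centres along one axis so that each field-of-view
    tile stays on the surface and neighbouring tiles overlap by at least
    `overlap` (a fraction of the field of view)."""
    step = fov * (1.0 - overlap)
    # Smallest tile count n with (n - 1) * step >= length - fov (full coverage).
    n = max(1, math.ceil((length - fov) / step) + 1)
    if n == 1:
        return [length / 2.0]  # a single view covers this axis: centre it
    spacing = (length - fov) / (n - 1)  # <= step, so overlap is never reduced
    return [fov / 2.0 + i * spacing for i in range(n)]


def plan_coarse_blocks(surface_w: float, surface_h: float,
                       fov_w: float, fov_h: float,
                       overlap: float = 0.1) -> List[Tuple[float, float]]:
    """Return (x, y) block centres in serpentine order (row by row, alternating
    direction) to shorten arm travel between neighbouring blocks.
    All lengths must share one unit, e.g. millimetres (assumption)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    xs = axis_centres(surface_w, fov_w, overlap)
    ys = axis_centres(surface_h, fov_h, overlap)
    path: List[Tuple[float, float]] = []
    for row, y in enumerate(ys):
        row_xs = xs if row % 2 == 0 else xs[::-1]
        path.extend((x, y) for x in row_xs)
    return path
```

For example, a hypothetical 1000 × 600 surface viewed with a 200 × 150 field of view and 10% overlap yields 30 block centres, starting at (100.0, 75.0). The serpentine order is a design choice for shorter moves between blocks; the source does not state how the blocks are sequenced.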

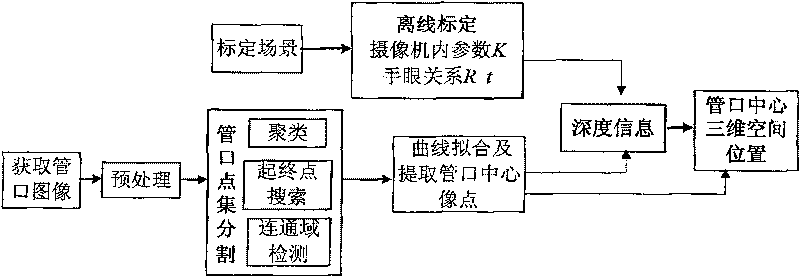

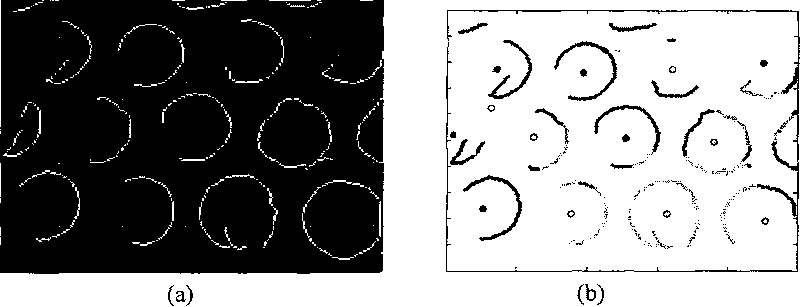

[0060] The process principle block diagram of the present invention is shown in Figure 1. First, the hand-eye relationship of the robot is calibrated offline, and the geometric mapping relationship between the 2D pixel coordinates an...
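Paragraph [0060] is truncated, so the exact target of the pixel mapping is not stated. A common way to use a hand-eye calibration result in an eye-in-hand setup (the vision system is mounted on the arm, per [0059]) is to back-project a pixel through the pinhole model and chain the camera-to-end-effector and end-effector-to-base transforms. The sketch below assumes that chain, a known depth along the optical axis (for instance the working distance to the nozzle plane), an undistorted image, and zero skew; none of these details come from the source, and all names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat4 = Sequence[Sequence[float]]  # 4x4 homogeneous transform, row-major


def back_project(u: float, v: float, depth: float,
                 fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Pinhole back-projection of pixel (u, v) into the camera frame at a
    known depth Z. Assumes an undistorted image and zero skew."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def apply_transform(T: Mat4, p: Vec3) -> Vec3:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    ph = (p[0], p[1], p[2], 1.0)
    x, y, z = (sum(T[r][c] * ph[c] for c in range(4)) for r in range(3))
    return (x, y, z)


def pixel_to_base(u: float, v: float, depth: float,
                  intrinsics: Tuple[float, float, float, float],
                  T_ee_cam: Mat4, T_base_ee: Mat4) -> Vec3:
    """Eye-in-hand chain: camera -> end effector -> robot base.
    T_ee_cam: camera pose in the end-effector frame (hand-eye result).
    T_base_ee: end-effector pose in the base frame when the image was taken."""
    fx, fy, cx, cy = intrinsics
    p_cam = back_project(u, v, depth, fx, fy, cx, cy)
    return apply_transform(T_base_ee, apply_transform(T_ee_cam, p_cam))
```

In practice T_ee_cam would come from the offline hand-eye calibration and T_base_ee from the robot's forward kinematics at capture time; lens distortion would be removed before back-projection.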