Low-overhead block synchronization method supporting multi-core helper threads

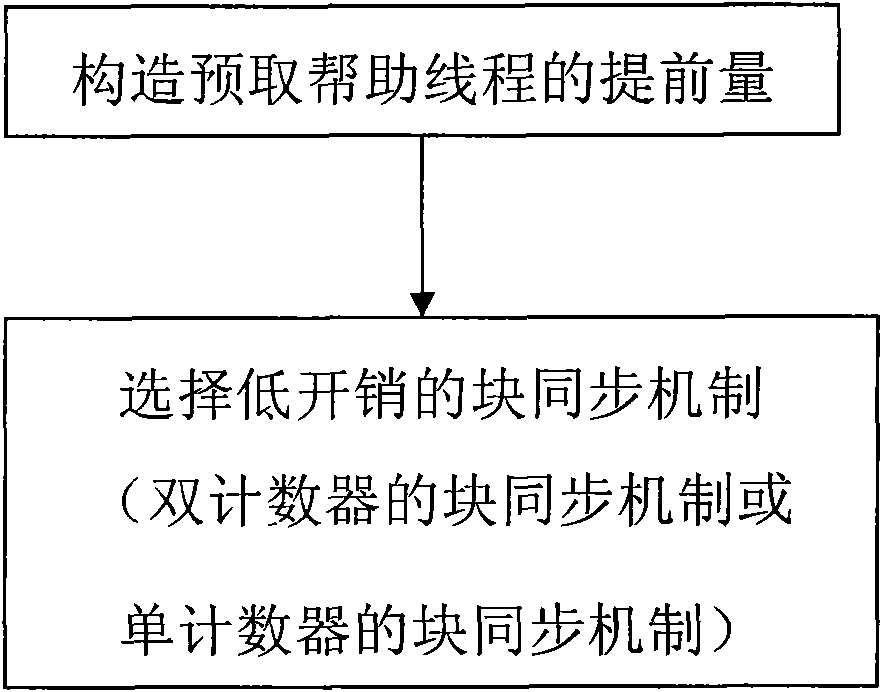

A low-overhead block synchronization technique, applied in the field of multi-core computers. It addresses the problem that the execution performance of computing threads cannot be improved, and it achieves reduced synchronization overhead, sustained operation, and improved execution performance.

Embodiment Construction

[0068] In accordance with the above technical solutions, the present invention is described in detail below with reference to examples.

[0069] Take the following simple program as an example. The ADDSCALE variable is added to the header file ldsHeader.h, and the computational workload of each node in the linked list is controlled by changing the value of this variable. The computation performed on the linked-list nodes is:

[0070] while(iterator){

[0071] temp=iterator->i_data;

[0072] while(i++<ADDSCALE){

[0073] temp+=1;

[0074] }

[0075] res+=temp;

[0076] i=0;

[0077] iterator=iterator->next;

[0078]}

[0079] The computational workload is adjusted by repeatedly changing the value of ADDSCALE: starting from 0, ADDSCALE is increased by 5 each time, giving the values 0, 5, 10, 15, 20, and so on.

[0080] With reference to the above example, the relevant terms are defined as follows:

[0081] Defi...
