A Spatial Trajectory Retrieval Method for Human Motion Data

A spatial trajectory retrieval technology for human motion data, applied in electrical digital data processing, special data processing applications, instruments, and related fields. It addresses problems such as reduced retrieval accuracy, the influence of upper limb movements, and the loss of information in low-dimensional feature data.

Embodiment

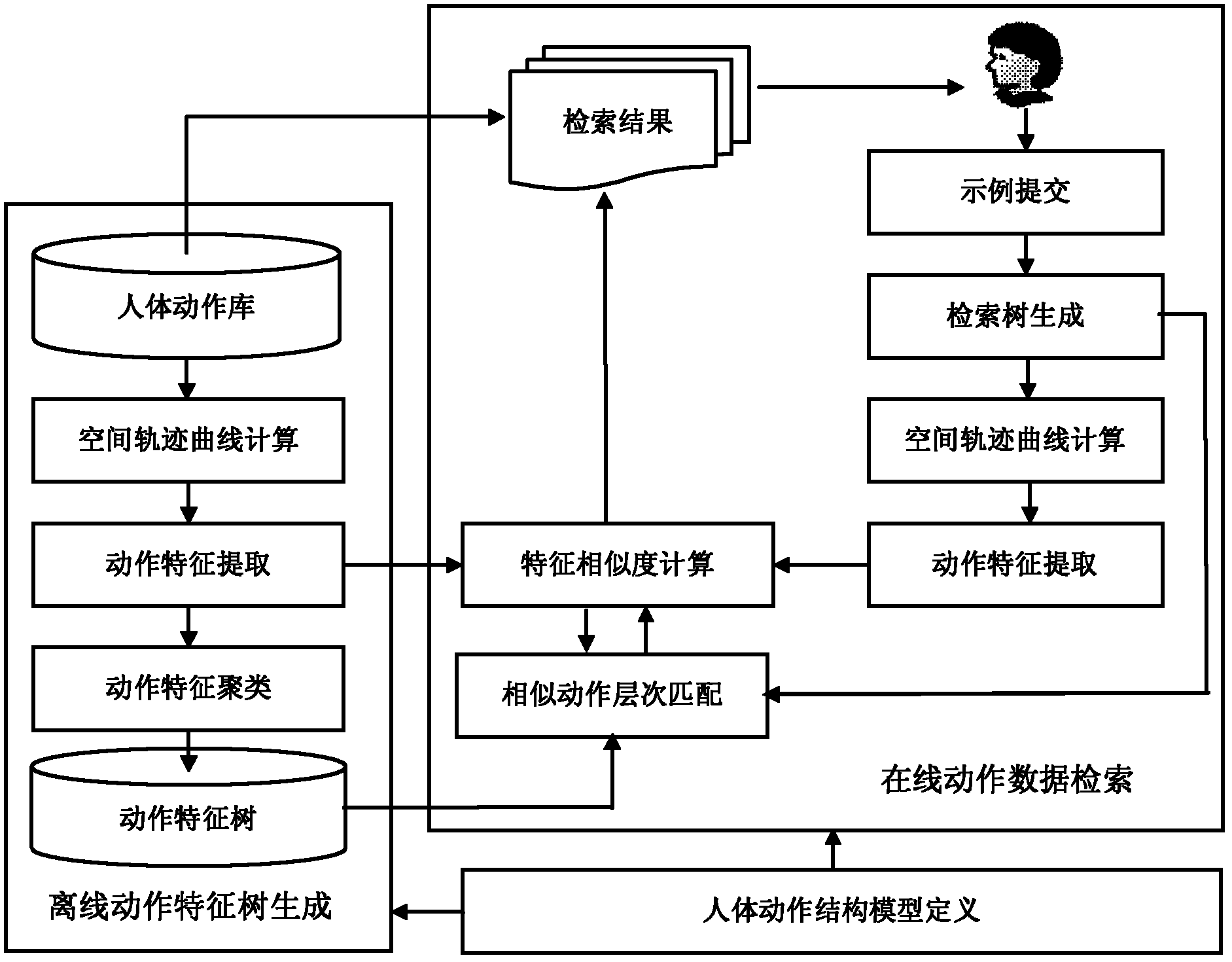

[0091] The processing flow of this embodiment is shown in Figure 1. The whole method is divided into three main steps: definition of the human motion structure model, offline generation of the motion feature tree, and online motion data retrieval. The main process of each step is described below.

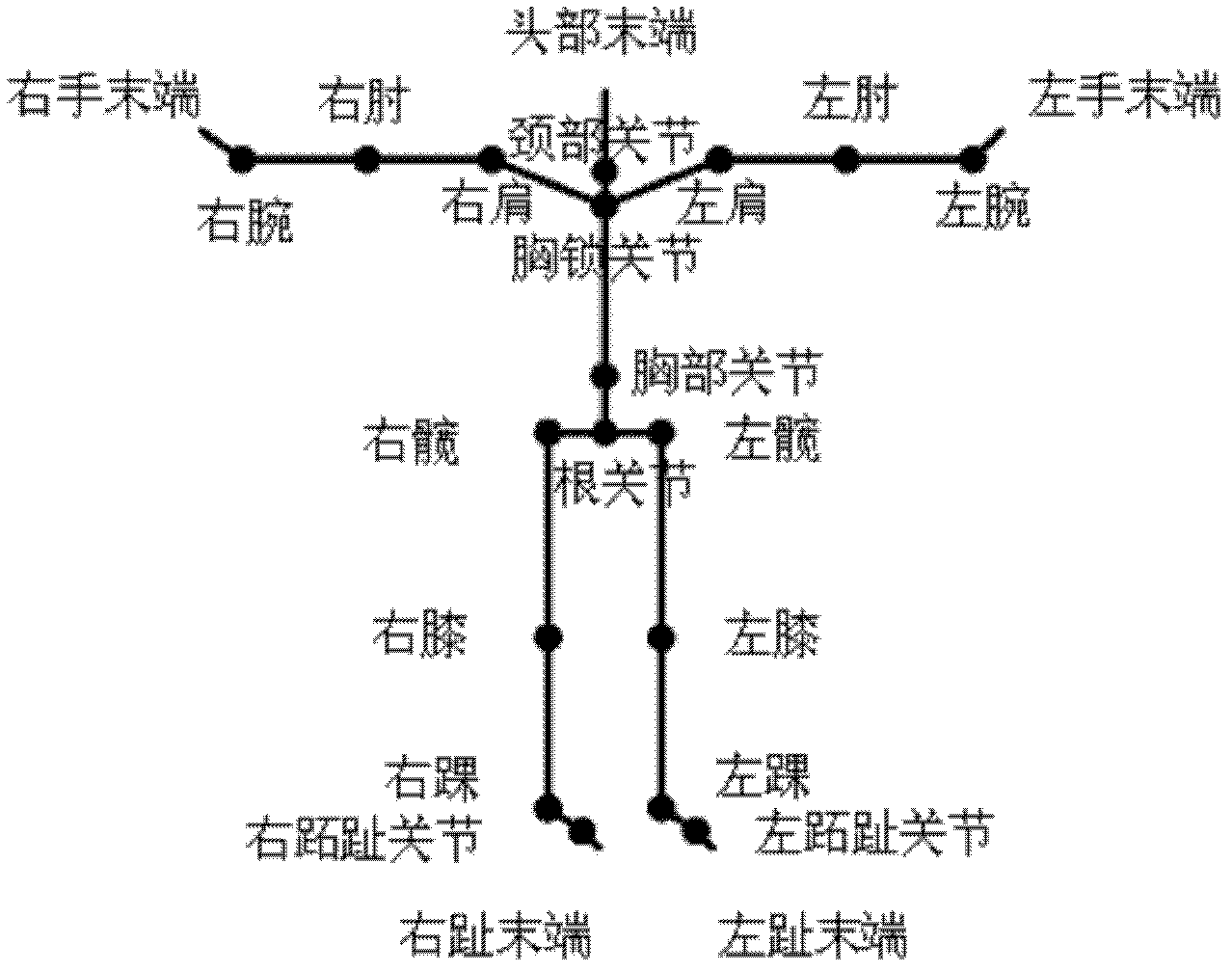

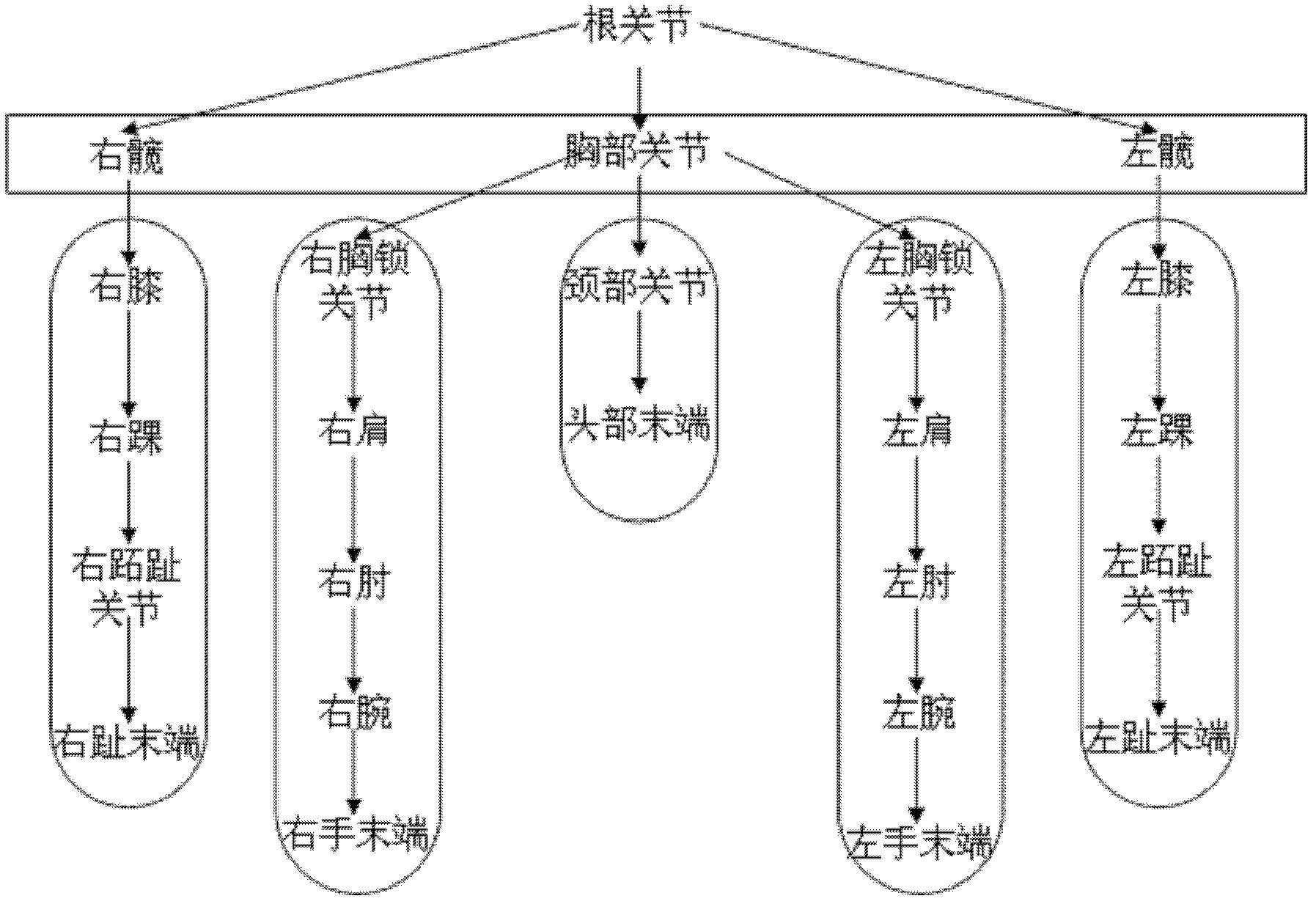

[0092] 1. Definition of the human motion structure model

[0093] Because the human joint model is hierarchical, the trajectory curve of a joint in three-dimensional space depends on the movement of that joint and of all its predecessor joints. For example, the spatial trajectory curve of the left-hand end relative to the chest joint depends on the movement of the left sternoclavicular joint, left shoulder, left elbow, and left wrist, as well as on the movement of the left-hand end joint itself. If the spatial trajectory curves of the left-hand end in two motions are similar, then, since the spatial trajectory curve of the left-hand end is the cumulative effect of its own and predecess...
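The dependence of an end joint's trajectory on its predecessor joints can be illustrated with a minimal forward-kinematics sketch. This is not the patent's formulation: the chain layout, the bone offsets in `CHAIN`, the single-axis rotations, and the helper names (`rot`, `end_position`, `end_trajectory`) are all assumptions chosen for illustration. The sketch only shows that the position of the left-hand end relative to the chest is the accumulated result of every rotation along the chain.

```python
import math

def rot(axis, deg):
    """3x3 rotation matrix about one coordinate axis ('x', 'y' or 'z')."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    if axis == "x":
        return [[1, 0, 0], [0, c, -s], [0, s, c]]
    if axis == "y":
        return [[c, 0, s], [0, 1, 0], [-s, 0, c]]
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def matmul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def apply(m, v):
    """Apply a 3x3 matrix to a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

# Hypothetical chain from the chest to the left-hand end.
# Each entry: (joint name, offset to the next joint in the joint's local frame).
# The offsets (in metres) are made-up values, not taken from the patent.
CHAIN = [
    ("left_sternoclavicular", [0.15, 0.0, 0.0]),
    ("left_shoulder",         [0.30, 0.0, 0.0]),
    ("left_elbow",            [0.25, 0.0, 0.0]),
    ("left_wrist",            [0.10, 0.0, 0.0]),
]

def end_position(local_rotations):
    """Position of the chain end relative to the chest for one frame.

    local_rotations holds one 3x3 matrix per joint in CHAIN, ordered
    from the chest outwards.
    """
    world = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    pos = [0.0, 0.0, 0.0]
    for (_, offset), local in zip(CHAIN, local_rotations):
        # A joint's orientation accumulates all predecessor rotations.
        world = matmul(world, local)
        step = apply(world, offset)
        pos = [p + s for p, s in zip(pos, step)]
    return pos

def end_trajectory(frames):
    """Spatial trajectory of the chain end: one 3D point per frame."""
    return [end_position(frame) for frame in frames]

if __name__ == "__main__":
    identity = rot("z", 0)
    straight = [identity] * 4
    bent_elbow = [identity, identity, rot("z", 90), identity]
    for point in end_trajectory([straight, bent_elbow]):
        print([round(c, 3) for c in point])
```

In this sketch, changing the rotation of any single joint in the chain, such as the elbow, moves the hand end even when every other joint stays fixed, which is the cumulative effect the paragraph describes.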
