Method, apparatus, and program for aligning images

An image alignment technology (device, method, and program), applied in image enhancement, image analysis, and image data processing, that addresses the problem of artifacts in subtraction images and achieves high-accuracy results.

Embodiment Construction

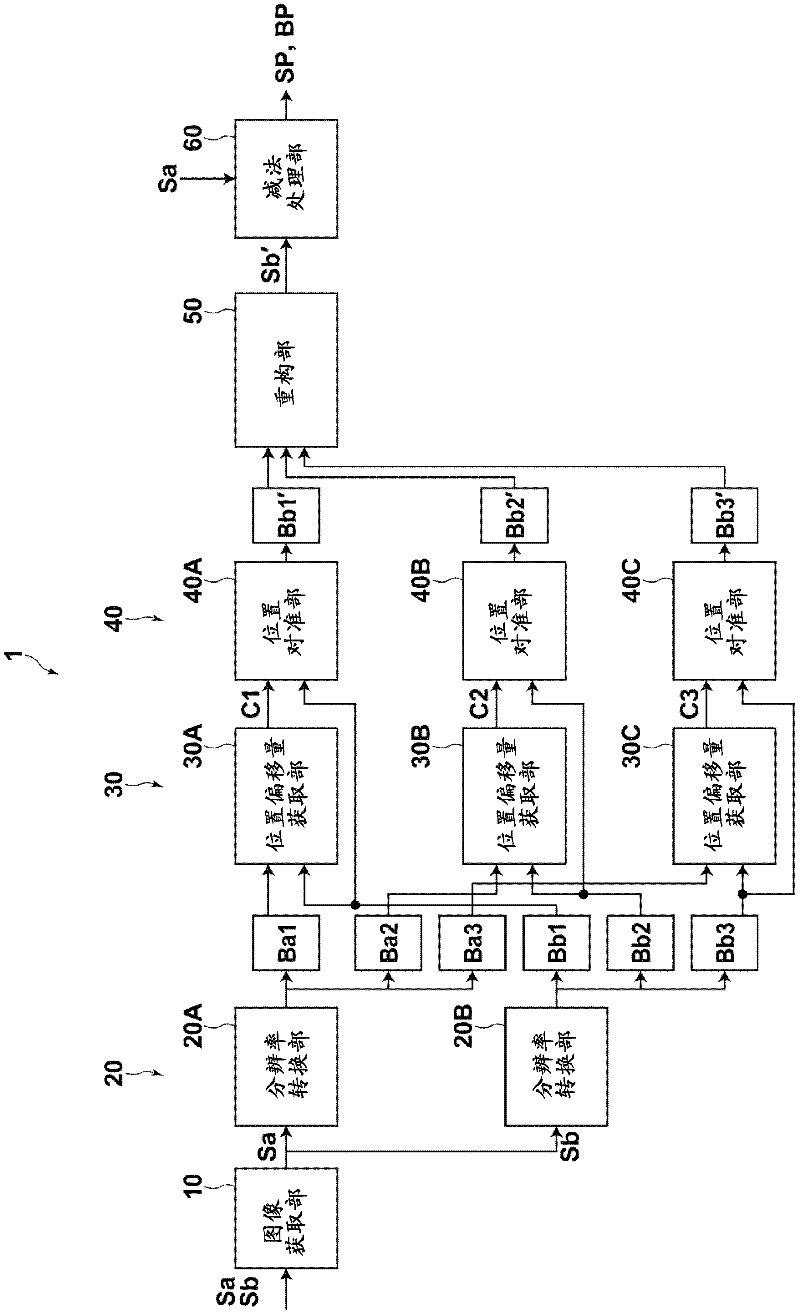

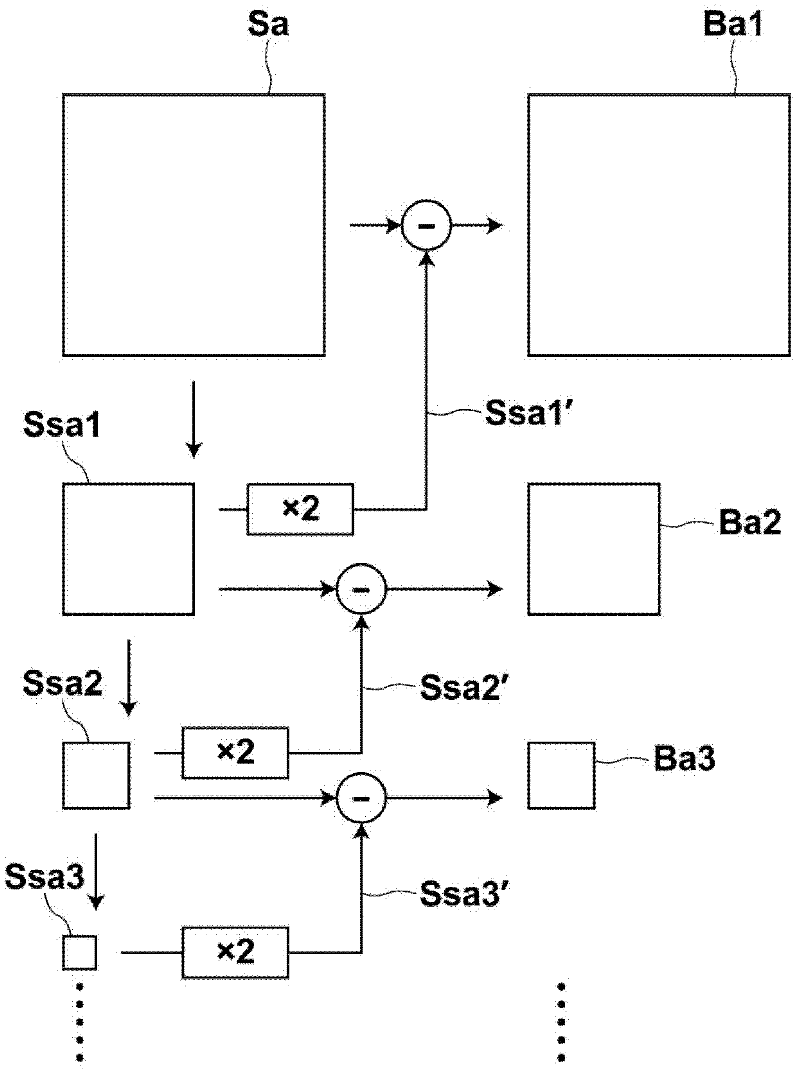

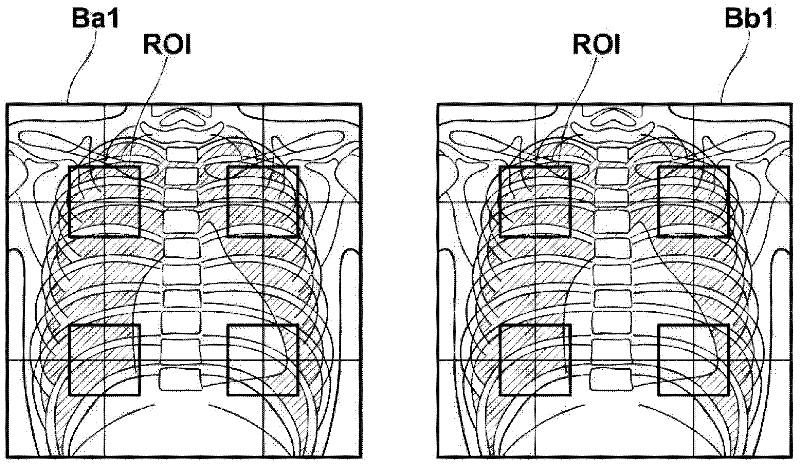

[0061] Hereinafter, embodiments of the present invention are described with reference to the drawings. Figure 1 is a block diagram showing the schematic configuration of an image position alignment device 1 according to the first embodiment of the present invention. The image position alignment device 1 shown in Figure 1 is applied to an energy subtraction device that performs subtraction processing on pairs of radiographic images. The energy subtraction device is installed, for example, in an imaging console that acquires radiographic images using a radiation detector. The configuration of the image position alignment device 1 shown in Figure 1 is realized by executing, on a computer such as a personal computer, an image alignment processing program downloaded to an auxiliary storage device. The image alignment processing program is installed on the computer after being recorded on a data recording medium such as a CD-ROM or distributed via a network such as the Inter...
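In energy subtraction, a misregistered image pair leaves artifacts in the subtraction image. The following is a minimal Python sketch of that failure mode and one generic remedy; it is not the method claimed in this patent. It assumes a toy pair in which the high-energy image is exactly `weight` times the low-energy image (so a perfectly aligned pair subtracts to zero), models misregistration as a pure integer translation, and finds that translation by exhaustive search for the smallest mean squared subtraction residual. All function names and the `weight` and `max_shift` parameters are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]


def shift(img: Image, dy: int, dx: int, fill: float = 0.0) -> Image:
    """Translate img by (dy, dx): out[y][x] = img[y - dy][x - dx]; uncovered pixels get `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def residual_cost(high: Image, low: Image, dy: int, dx: int, weight: float) -> float:
    """Mean squared value of high - weight * (low shifted by (dy, dx)), over the overlap only."""
    h, w = len(high), len(high[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                r = high[y][x] - weight * low[sy][sx]
                total += r * r
                count += 1
    return total / count if count else float("inf")


def estimate_shift(high: Image, low: Image, weight: float, max_shift: int = 3) -> Tuple[int, int]:
    """Exhaustive search for the integer translation of `low` that minimises the residual."""
    best, best_cost = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            cost = residual_cost(high, low, dy, dx, weight)
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best


def subtract(high: Image, low: Image, weight: float) -> Image:
    """Toy weighted subtraction high - weight * low, pixel by pixel (assumed model)."""
    return [[hv - weight * lv for hv, lv in zip(hr, lr)] for hr, lr in zip(high, low)]


if __name__ == "__main__":
    n, weight = 8, 0.5
    # Toy data: every pixel value is distinct, so only the true shift gives zero residual.
    base = [[float(y * n + x) for x in range(n)] for y in range(n)]
    high = [[weight * v for v in row] for row in base]  # hypothetical high-energy image
    low = shift(base, 1, 2)  # hypothetical low-energy image with simulated patient motion
    print("residual before:", residual_cost(high, low, 0, 0, weight))
    dy, dx = estimate_shift(high, low, weight)
    print("estimated shift:", (dy, dx))  # (-1, -2)
    aligned = shift(low, dy, dx)
    diff = subtract(high, aligned, weight)
    # Rows 0-6 and columns 0-5 hold real (non-fill) pixels after alignment.
    print("max |residual| in overlap:", max(abs(diff[y][x]) for y in range(n - 1) for x in range(n - 2)))
```

The search recovers the shift `(-1, -2)`, which undoes the simulated `(1, 2)` motion, after which the subtraction residual is zero wherever the two images overlap; the unaligned subtraction leaves a nonzero residual. A real system would also need sub-pixel, rotational, or non-rigid registration and a realistic attenuation model, which this sketch leaves out.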

© 2025 PatSnap. All rights reserved.