Target identification method and device

A target recognition technology applied in the field of human-computer interaction. It addresses the problem of a high probability of misrecognition, improving accuracy by reducing that probability.

Examples

Embodiment 1

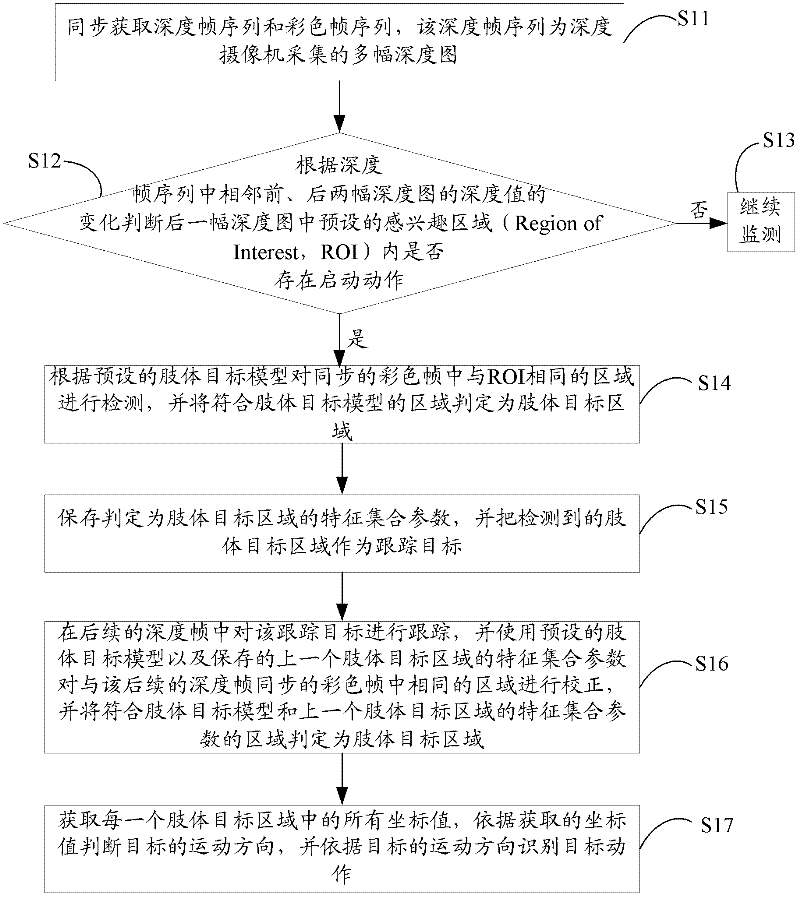

[0031] Figure 1 shows a flow chart of the target recognition method provided in the first embodiment of the present invention. The target recognition method provided in this embodiment includes:

[0032] Step S11, synchronously acquire a sequence of depth frames and a sequence of color frames, where the sequence of depth frames consists of a plurality of depth images collected by a depth camera.

[0033] In this embodiment, the depth camera collects depth maps at more than 10 frames per second, usually 25 frames per second. Each depth map is numbered sequentially, and these depth maps with incrementing numbers form the depth frame sequence.

[0034] In this embodiment, an ordinary camera or a depth camera is used to collect color images. The color images are collected at the same frequency as the depth images and capture the same scene as the depth images. Each color image is also numbered sequentially. A sequence of color fr...
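The sequential numbering described above can be illustrated with a minimal sketch. It assumes frames are already captured and held in memory as nested lists; the names `Frame`, `number_frames`, and `pair_by_number` are hypothetical and do not come from the patent, which does not specify how the two sequences are matched.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple

Image = List[List[int]]  # row-major pixel values (assumed representation)


@dataclass
class Frame:
    number: int   # sequential frame number, incremented per capture
    pixels: Image


def number_frames(images: Iterable[Image]) -> List[Frame]:
    """Assign incrementing numbers to images in capture order."""
    return [Frame(number=i, pixels=img) for i, img in enumerate(images)]


def pair_by_number(depth_seq: List[Frame],
                   color_seq: List[Frame]) -> Iterator[Tuple[Frame, Frame]]:
    """Yield (depth, color) pairs that share the same frame number.

    Matching by number is an assumption made for this sketch.
    """
    color_by_number = {f.number: f for f in color_seq}
    for depth in depth_seq:
        color = color_by_number.get(depth.number)
        if color is not None:
            yield depth, color


# Three depth maps and two color images captured at the same rate.
depth_frames = number_frames([[[1000]], [[990]], [[980]]])
color_frames = number_frames([[[10]], [[20]]])
pairs = list(pair_by_number(depth_frames, color_frames))
```

Because both sequences are numbered at the same capture frequency, a depth map and the color image with the same number are assumed here to show the same instant of the same scene.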

Embodiment 2

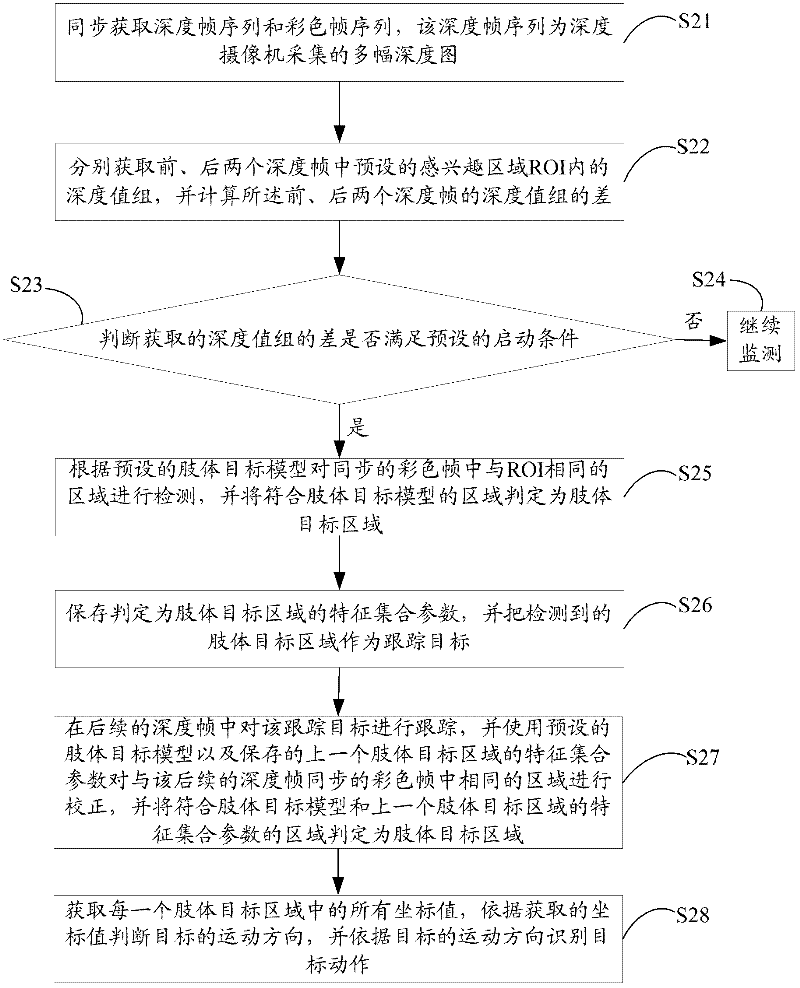

[0052] Figure 2 shows the flow of the target recognition method provided by the second embodiment of the present invention. This embodiment mainly describes step S12 of the first embodiment in more detail. The target recognition method provided by the second embodiment mainly includes:

[0053] Step S21, synchronously acquire a sequence of depth frames and a sequence of color frames, where the sequence of depth frames consists of a plurality of depth images collected by a depth camera.

[0054] In this embodiment, the execution process of step S21 is the same as the execution process of step S11 in the first embodiment above, and the description will not be repeated here.

[0055] Step S22, acquire the depth value groups in the preset region of interest (ROI) of the previous and subsequent depth frames respectively, and calculate the difference between the two depth value groups.

[0056] In this embodiment, the depth value group in th...
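As a rough illustration of step S22, the sketch below extracts the depth values inside a rectangular ROI from two consecutive depth maps and subtracts them element by element. The rectangular `(top, left, height, width)` ROI layout, the millimetre units, and the function names are assumptions for this example; the patent text available here does not define them.

```python
from typing import List, Tuple

DepthMap = List[List[int]]        # row-major depth values, assumed to be in mm
Roi = Tuple[int, int, int, int]   # (top, left, height, width), hypothetical layout


def roi_depth_values(depth_map: DepthMap, roi: Roi) -> List[int]:
    """Collect the depth values inside the ROI as a flat group."""
    top, left, height, width = roi
    return [depth_map[r][c]
            for r in range(top, top + height)
            for c in range(left, left + width)]


def roi_depth_difference(prev_map: DepthMap, next_map: DepthMap,
                         roi: Roi) -> List[int]:
    """Per-pixel difference (next minus previous) of the ROI depth groups."""
    prev_vals = roi_depth_values(prev_map, roi)
    next_vals = roi_depth_values(next_map, roi)
    return [n - p for p, n in zip(prev_vals, next_vals)]


previous_frame = [[1000, 1000, 1000],
                  [1000, 1000, 1000]]
next_frame = [[1000, 800, 1000],
              [1000, 700, 1000]]
roi = (0, 1, 2, 2)  # rows 0-1, columns 1-2

difference = roi_depth_difference(previous_frame, next_frame, roi)
# difference == [-200, 0, -300, 0]
```

A negative difference means the surface at that pixel moved closer to the camera between the two frames, for example when a hand enters the ROI.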

Embodiment 3

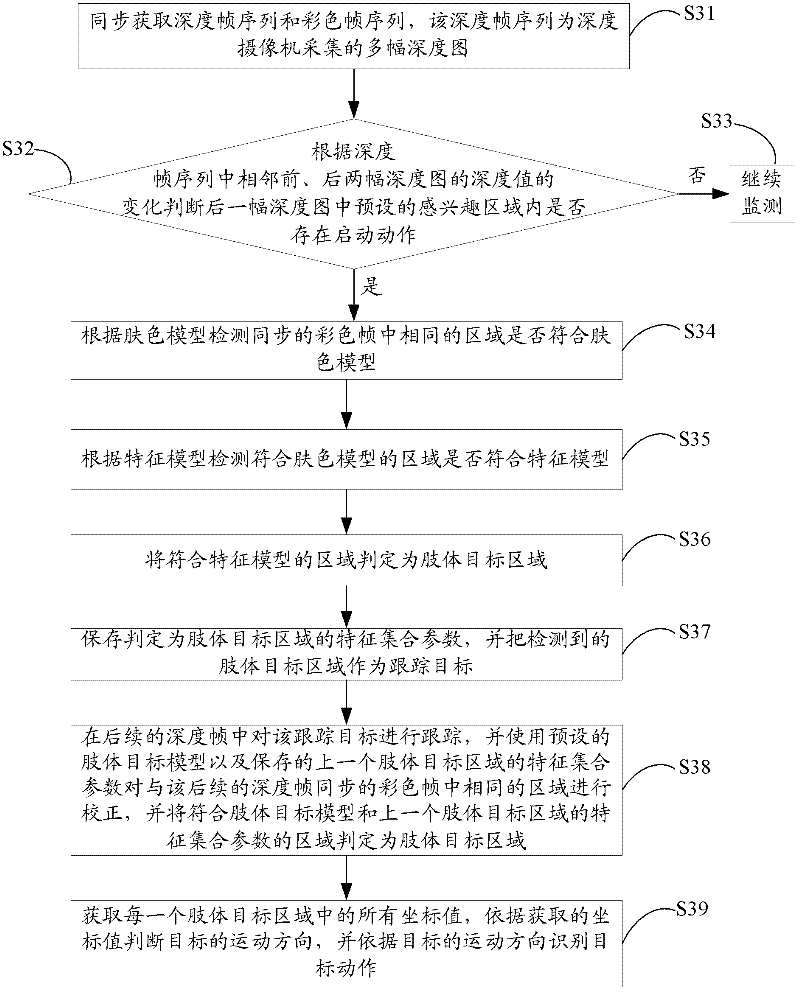

[0072] Figure 3 shows the flow of the target recognition method provided by the third embodiment of the present invention. In this embodiment, the selected limb target is a human hand. This embodiment mainly describes in more detail step S15 of the first embodiment (step S26 of the second embodiment) and step S16 of the first embodiment (step S27 of the second embodiment):

[0073] Step S31, synchronously acquire a sequence of depth frames and a sequence of color frames, where the sequence of depth frames consists of a plurality of depth images collected by a depth camera.

[0074] Step S32, according to the change in depth values between adjacent earlier and later depth maps in the depth frame sequence, judge whether there is an activation action in the preset region of interest of the later depth map. If not, go to step S33; if yes, execute step S34.

[0075] Step S33, continue monitoring.

[0076] In this embodiment, the execution process o...
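The branch between steps S32 and S33 can be sketched as a monitoring loop over consecutive ROI depth value groups. The decision rule used here, a mean absolute depth change above a fixed threshold, is an assumption made only for illustration; the text available here does not state the actual criterion. The names `mean_abs_change`, `find_activation`, and `threshold_mm` are likewise hypothetical.

```python
from typing import Iterable, List, Optional


def mean_abs_change(prev_vals: List[int], next_vals: List[int]) -> float:
    """Average absolute depth change between two equally sized ROI groups."""
    if not prev_vals:
        return 0.0
    total = sum(abs(n - p) for p, n in zip(prev_vals, next_vals))
    return total / len(prev_vals)


def find_activation(roi_groups: Iterable[List[int]],
                    threshold_mm: float) -> Optional[int]:
    """Return the index of the first later frame judged to contain an
    activation action, or None if the sequence ends without one.

    The threshold test is an assumed stand-in for the judgment in S32.
    """
    previous = None
    for index, current in enumerate(roi_groups):
        if previous is not None and mean_abs_change(previous, current) > threshold_mm:
            return index      # activation found: step S34 would run next
        previous = current    # no activation: continue monitoring (step S33)
    return None


roi_groups = [
    [1000, 1000],  # frame 0: empty scene
    [1000, 1002],  # frame 1: sensor noise only
    [700, 720],    # frame 2: something enters the ROI
    [700, 720],    # frame 3
]
activated_at = find_activation(roi_groups, threshold_mm=50.0)
# activated_at == 2
```

Comparing only adjacent frames keeps the loop stateless apart from the previous group, which matches the "adjacent front and rear depth maps" wording of step S32.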
