Method and circuit for dynamically partitioning a shared cache

A dynamic cache-partitioning technique, applied in the computer field, that addresses problems such as system performance degradation, reducing that degradation and improving performance.

Embodiment Construction

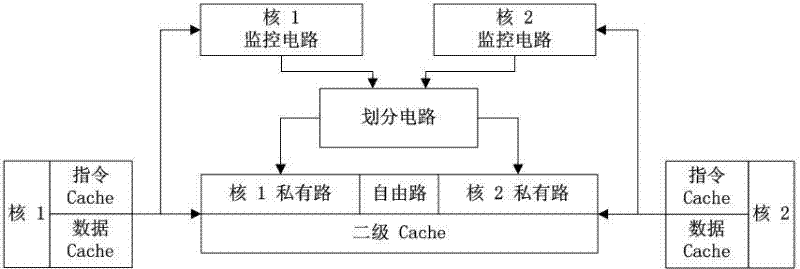

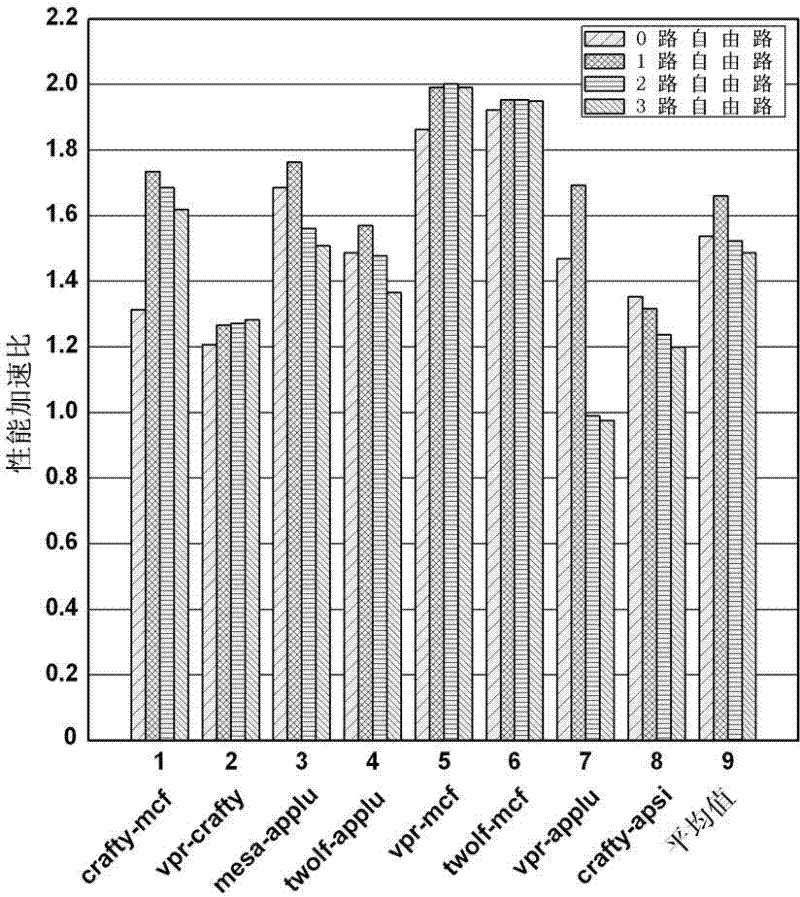

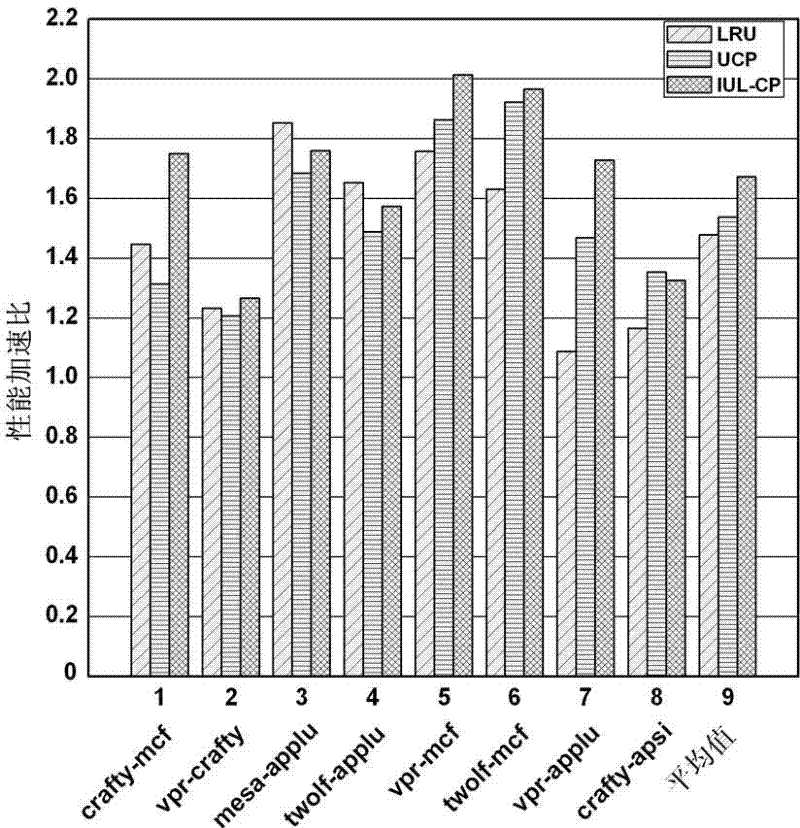

[0016] The present invention uses the multi-core simulator M-Sim to simulate a dual-core system based on the Alpha instruction set; the specific configuration is shown in Table 1. Figure 1 shows the overall framework for applying the proposed shared-cache dynamic partitioning method in a dual-core system with a shared level-2 (L2) cache. We select multiple test cases from the SPEC CPU2000 benchmark suite and run them in pairs on the dual-core system built with M-Sim, as shown in Table 2.

[0017] Table 1 Simulation environment configuration

[0018]

| Component | Configuration |
| --- | --- |
| CPU | 2 cores, 8-wide, out-of-order, 48 LSQ, 128 ROB |
| Level 1 Instruction Cache | private, 2KB, 32B line size, 2-way, LRU |
| Level 1 Data Cache | private, 2KB, 32B line size, 2-way, LRU |
| Level 2 Cache | shared, 64KB, 32B line size, 16-way |
| Memory | 300-cycle access latency |
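The cache parameters in Table 1 imply a specific geometry (number of sets, offset bits, index bits) for each cache. The following is a minimal sketch of that arithmetic, not part of the patent or of M-Sim. The `CacheConfig` class and its property names are hypothetical, and it assumes 1KB = 1024 bytes and power-of-two sizes.

```python
from dataclasses import dataclass

KB = 1024  # assumption: "KB" in Table 1 means 1024 bytes


@dataclass(frozen=True)
class CacheConfig:
    """Hypothetical helper describing one set-associative cache."""
    name: str
    size_bytes: int
    line_bytes: int
    ways: int

    @property
    def num_lines(self) -> int:
        # Total number of cache lines the cache can hold.
        return self.size_bytes // self.line_bytes

    @property
    def num_sets(self) -> int:
        # Each set holds `ways` lines.
        return self.num_lines // self.ways

    @property
    def offset_bits(self) -> int:
        # Address bits selecting a byte within a line (power-of-two sizes assumed).
        return (self.line_bytes - 1).bit_length()

    @property
    def index_bits(self) -> int:
        # Address bits selecting a set (power-of-two set count assumed).
        return (self.num_sets - 1).bit_length()

    @property
    def way_bytes(self) -> int:
        # Capacity contributed by a single way across all sets.
        return self.size_bytes // self.ways


# Values taken from Table 1.
L1I = CacheConfig("L1 instruction (private)", 2 * KB, 32, 2)
L1D = CacheConfig("L1 data (private)", 2 * KB, 32, 2)
L2 = CacheConfig("L2 (shared)", 64 * KB, 32, 16)

if __name__ == "__main__":
    for cfg in (L1I, L1D, L2):
        print(f"{cfg.name}: {cfg.num_sets} sets, "
              f"{cfg.offset_bits} offset bits, {cfg.index_bits} index bits, "
              f"{cfg.way_bytes} bytes per way")
```

Under these assumptions the shared L2 cache has 128 sets of 16 ways, and each way accounts for 4KB of capacity. If the cache were divided between the two cores at way granularity, 4KB would be the smallest unit of reallocation; this excerpt does not state the granularity the invention uses, so that reading is an assumption.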

[0019] Table 2 Test Cases

[0020] serial numbe...
